Intersite L3Out with PBR

Cisco Application Centric Infrastructure (ACI) policy-based redirect (PBR) enables traffic redirection for service appliances, such as firewalls, load balancers, and intrusion prevention systems (IPS). Typical use cases include provisioning service appliances that can be pooled, tailored to application profiles, scaled easily, and have reduced exposure to service outages. PBR simplifies the insertion of service appliances by using a contract between the consumer and provider endpoint groups (EPGs), even if they are all in the same virtual routing and forwarding (VRF) instance.

PBR deployment consists of configuring a route redirect policy and a cluster redirect policy, and creating a service graph template that uses these policies. After the service graph template is deployed, you can attach it to a contract between EPGs so that all traffic following that contract is redirected to the service graph devices based on the PBR policies you have created. Effectively, this allows you to choose which type of traffic between the same two EPGs is redirected to the L4-L7 device, and which is subject to a security policy applied at the fabric level.
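The selective redirection described above can be sketched with a small model. This is an illustrative sketch only, not the APIC or Nexus Dashboard Orchestrator object model: the `Subject` and `Contract` classes, the `handling_for` function, and the names `app-to-external` and `fw-graph` are all hypothetical. It assumes a contract holds several traffic matches and that only the matches with a service graph attached are redirected.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Subject:
    """Hypothetical contract subject: a traffic match plus an optional service graph."""
    name: str
    protocol: str                        # for example "tcp" or "udp"
    dst_port: int
    service_graph: Optional[str] = None  # set only when PBR should apply


@dataclass(frozen=True)
class Contract:
    """Hypothetical contract between two EPGs."""
    name: str
    subjects: Tuple[Subject, ...]


def handling_for(contract: Contract, protocol: str, dst_port: int) -> str:
    """Return how matching traffic is handled in this simplified model."""
    for subject in contract.subjects:
        if subject.protocol == protocol and subject.dst_port == dst_port:
            if subject.service_graph is not None:
                # Traffic is redirected to the L4-L7 device.
                return f"redirect:{subject.service_graph}"
            # Traffic is only subject to the policy enforced by the fabric.
            return "fabric-policy"
    return "no-match"


# Same two EPGs, one contract: HTTPS is redirected, DNS is not.
example_contract = Contract(
    name="app-to-external",
    subjects=(
        Subject("https", "tcp", 443, service_graph="fw-graph"),
        Subject("dns", "udp", 53),
    ),
)
```

In this model, `handling_for(example_contract, "tcp", 443)` is redirected through the hypothetical `fw-graph`, while `handling_for(example_contract, "udp", 53)` is handled by the fabric-level policy alone.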

More in-depth information specific to service graphs and PBR is available in the Cisco APIC Layer 4 to Layer 7 Services Deployment Guide.

Configuration Workflow

The use cases described in the following sections extend the basic intersite L3Out (without PBR) use case, which in turn extends the basic external connectivity (L3Out) configuration in each site. The workflow to configure the supported use cases is the same; the only differences are whether you create the objects in the same or different VRFs (intra-VRF vs inter-VRF) and where you deploy the objects (stretched vs non-stretched).

- Configure basic external connectivity (L3Out) for each site.

  The intersite L3Out with PBR configuration described in the following sections is built on top of existing external connectivity (L3Out) in each site. If you have not configured an L3Out, create and deploy one as described in the External Connectivity (L3Out) chapter before proceeding with the following sections.

- Configure an intersite L3Out use case without PBR.

  We recommend configuring a simple intersite L3Out use case without any policy-based redirection before adding service chaining to it. This is described in detail in the Intersite L3Out chapter.

- Add service chaining to the L3Out contract as described in the following sections, which includes:

  - Adding an external TEP pool for each Pod in each site where intersite L3Out is deployed.

  - Creating a Service Device template and assigning it to sites.

    The service device template must be assigned to the same sites as the L3Out and application templates that contain other configuration objects.

  - Providing site-level configurations for the Service Device template.

    Each site can have its own service device configuration including different high-availability models (such as active/active, active/standby, or independent service nodes).

  - Associating the service device you defined to the contract used for the basic intersite L3Out use case you deployed in the previous step.
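The site-assignment requirement for the Service Device template can be checked before deployment. The following is a minimal sketch under stated assumptions, not a Nexus Dashboard Orchestrator API: the function `uncovered_sites` and the template and site names are hypothetical, and it reads the requirement as "the Service Device template must cover every site that the L3Out and application templates are assigned to".

```python
def uncovered_sites(service_device_sites, other_template_sites):
    """Return the sites used by other templates that the Service Device
    template is not assigned to (sorted, empty when everything is covered).

    service_device_sites: iterable of site names for the Service Device template
    other_template_sites: dict mapping a template name to its site names
    """
    required = set()
    for sites in other_template_sites.values():
        required.update(sites)
    return sorted(required - set(service_device_sites))


# Hypothetical assignment: the application template is stretched across two
# sites, but the Service Device template was assigned to only one of them.
gaps = uncovered_sites(
    ["site1"],
    {"l3out-template": ["site1"], "app-template": ["site1", "site2"]},
)
```

A non-empty result, such as `site2` in the example, indicates a site where the contract would reference a service device that has not been assigned there.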

Supported Use Cases

The following diagrams illustrate the traffic flows between an ACI internal endpoint in an application EPG and an external endpoint reached through the L3Out in another site, for each of the supported intersite L3Out with PBR use cases.

Intra-VRF vs Inter-VRF

When creating and configuring the application EPG and the external EPG, you will need to provide a VRF for the application EPG's bridge domain and for the L3Out. You can choose to use the same VRF (intra-VRF) or different VRFs (inter-VRF).

When establishing a contract between the EPGs, you will need to designate one EPG as the provider and the other one as the consumer:

- When both EPGs are in the same VRF, either one can be the consumer or the provider.

- If the EPGs are in different VRFs, the external EPG must be the provider and the application EPG must be the consumer.

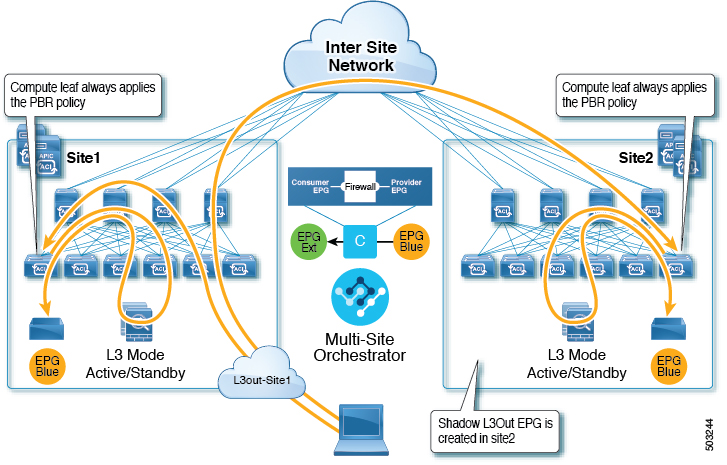

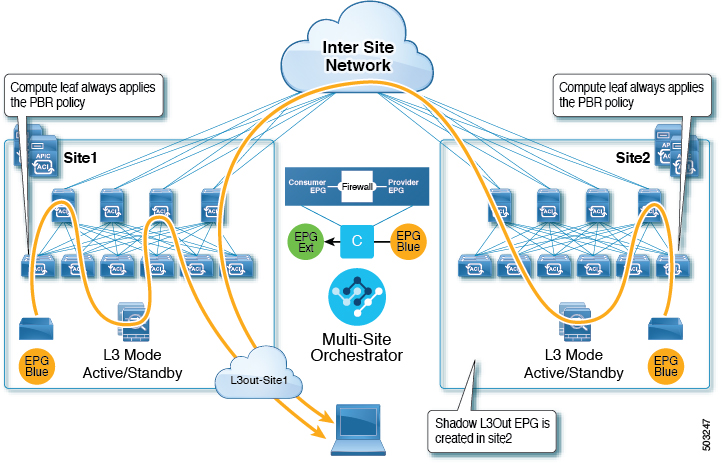
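The provider and consumer rule for intra-VRF and inter-VRF contracts can be expressed as a short check. This is an illustrative sketch only: the function name `contract_roles_allowed` and the labels `"application"` and `"external"` are hypothetical and are not part of any Cisco API.

```python
def contract_roles_allowed(app_vrf: str, l3out_vrf: str, provider: str) -> bool:
    """Check the provider choice against the intra-VRF and inter-VRF rule.

    provider is "application" (the application EPG provides the contract)
    or "external" (the external EPG provides the contract).
    """
    if provider not in ("application", "external"):
        raise ValueError(f"unknown provider role: {provider!r}")
    if app_vrf == l3out_vrf:
        # Intra-VRF: either EPG can be the provider or the consumer.
        return True
    # Inter-VRF: the external EPG must be the provider.
    return provider == "external"
```

For example, `contract_roles_allowed("vrf-a", "vrf-b", "application")` returns `False`, because in the inter-VRF case the application EPG must be the consumer.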

L3Out to Stretched EPG

This use case illustrates a single application EPG that is stretched between two sites and a single L3Out created in only one of the sites. Regardless of whether the application EPG's endpoint is in the same site as the L3Out or the other site, traffic will go through the same L3Out. However, the traffic will always go through the service node that is local to the endpoint's site because for North-South traffic the PBR policy is always applied only on the compute leaf nodes (and not on the border leaf nodes).

Note: The same flow applies when the external EPG is stretched and each site has its own L3Out, but the L3Out in the site where the traffic originates or is destined is down.

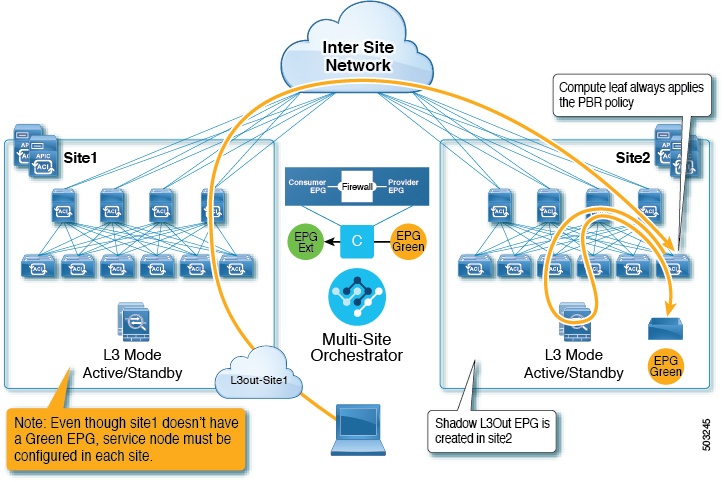

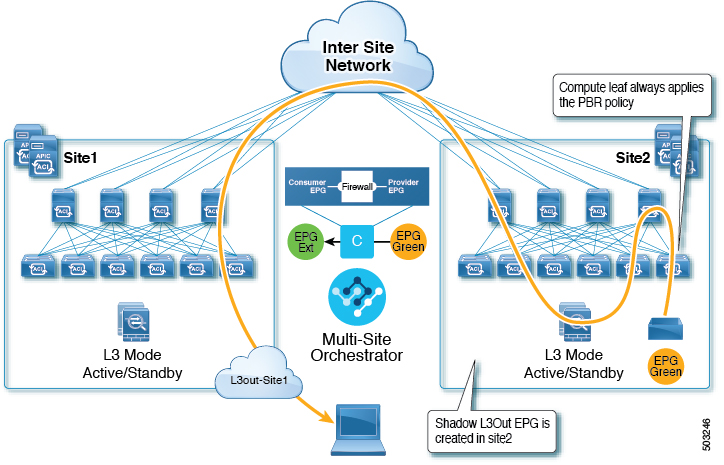
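The path selection in this use case can be summarized in a few lines. This is a simplified sketch, not the fabric's forwarding logic: the function `north_south_path` and the site names are hypothetical. It assumes that a working L3Out in the endpoint's own site is preferred when one exists, and the choice among several remote L3Outs (first in sorted order) is arbitrary.

```python
def north_south_path(endpoint_site, l3out_sites_up):
    """Return (service_node_site, l3out_site) for North-South traffic.

    endpoint_site: site where the application EPG's endpoint resides
    l3out_sites_up: set of sites that currently have a working L3Out
    """
    if not l3out_sites_up:
        raise ValueError("no L3Out is available in any site")

    # PBR is applied on the compute leaf nodes, so the service node is
    # always the one local to the endpoint's site.
    service_node_site = endpoint_site

    if endpoint_site in l3out_sites_up:
        # Assumption: a working local L3Out is preferred.
        l3out_site = endpoint_site
    else:
        # Arbitrary tie-break among remote L3Outs, for illustration only.
        l3out_site = sorted(l3out_sites_up)[0]
    return service_node_site, l3out_site
```

With a single L3Out in `site1`, an endpoint in `site2` yields `("site2", "site1")`: the traffic is redirected to the service node in `site2` and then crosses to the L3Out in `site1`.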

L3Out to Site-Local EPG

This use case illustrates a site-local application EPG that uses the L3Out in the other site for North-South traffic. As in the previous example, all traffic uses the service graph device that is local to the EPG's site.

Note: The same flow applies when the external EPG is stretched and each site has its own L3Out, but the EPG's local L3Out is down.
