Infra Configuration Dashboard

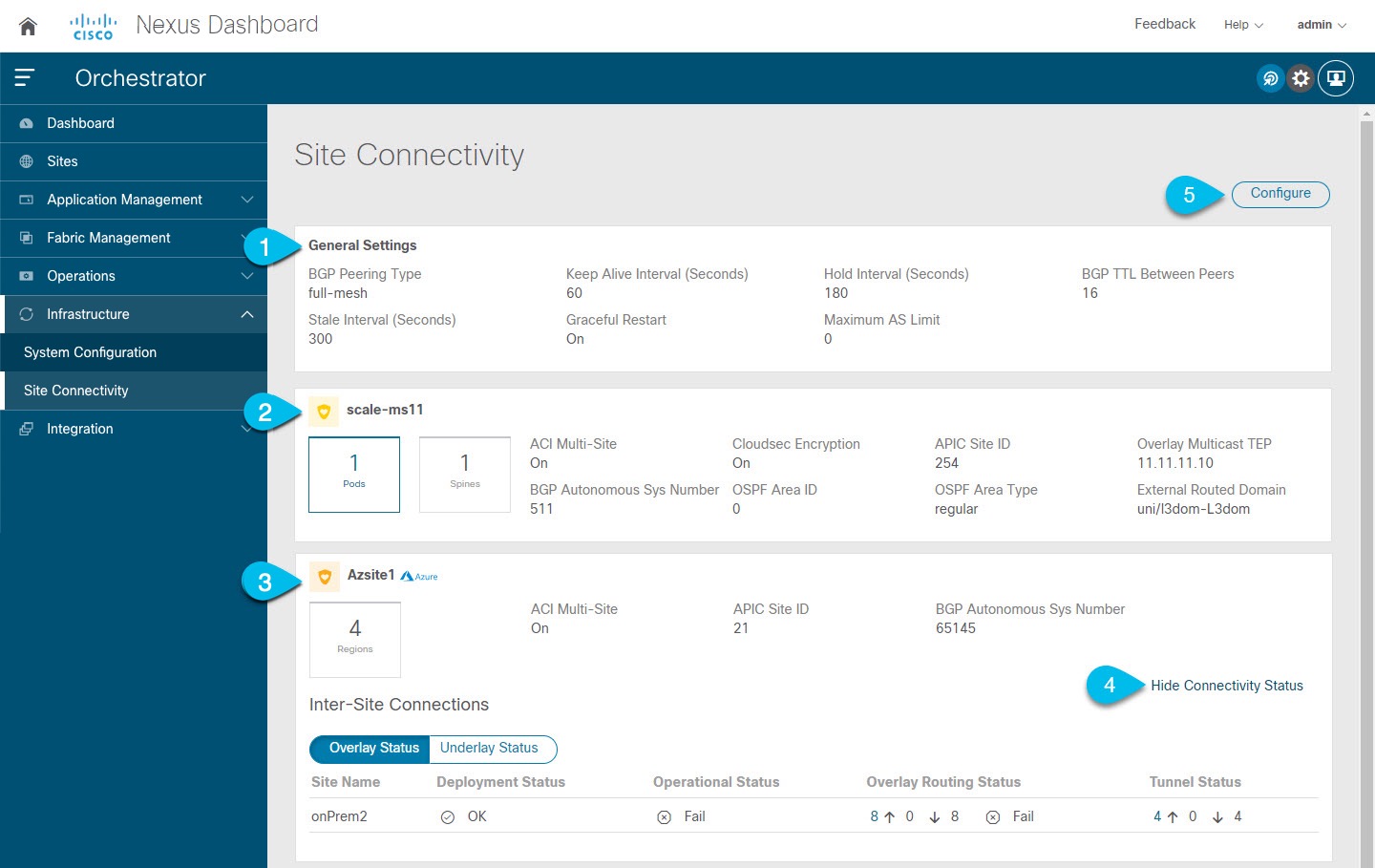

The Infra Configuration page displays an overview of all sites and inter-site connectivity in your Nexus Dashboard Orchestrator deployment and contains the following information:

- The General Settings tile displays information about BGP peering type and its configuration.

  This is described in detail in the next section.

- The On-Premises tiles display information about every on-premises site that is part of your Multi-Site domain along with their number of Pods and spine switches, OSPF settings, and overlay IPs.

  You can click on the Pods tile that displays the number of Pods in the site to show information about the Overlay Unicast TEP addresses of each Pod.

  This is described in detail in Configuring Infra for Cisco APIC Sites.

- The Cloud tiles display information about every cloud site that is part of your Multi-Site domain along with their number of regions and basic site information.

  This is described in detail in Configuring Infra for Cisco Cloud Network Controller Sites.

- You can click Show Connectivity Status to display intersite connectivity details for a specific site.

- You can use the Configure button to navigate to the intersite connectivity configuration, which is described in detail in the following sections.

The following sections describe the steps necessary to configure the general fabric Infra settings. Fabric-specific requirements and procedures are described in the following chapters based on the specific type of fabric you are managing.

Before you proceed with Infra configuration, you must have configured and added the sites as described in previous sections.

In addition, any infrastructure change, such as adding or removing spine switches or changing spine node IDs, requires you to refresh the fabric connectivity information in Nexus Dashboard Orchestrator. The refresh is described in Refreshing Site Connectivity Information as part of the general Infra configuration procedures.
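As a rough illustration of the refresh rule above, the following sketch compares two snapshots of a site's spine inventory and reports whether a connectivity refresh would be needed. It is not part of Nexus Dashboard Orchestrator or any Cisco API: the `Spine` type, its `serial` and `node_id` fields, and the `needs_connectivity_refresh` function are hypothetical names introduced only for this example, and the check covers only the cases named above (spines added, spines removed, node IDs changed).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spine:
    """Hypothetical record for one spine switch in a site."""
    serial: str   # assumed stable identifier for the physical switch
    node_id: int  # node ID assigned to the spine in the fabric


def needs_connectivity_refresh(before, after):
    """Return True if spines were added, removed, or had a node ID change."""
    before_ids = {s.serial: s.node_id for s in before}
    after_ids = {s.serial: s.node_id for s in after}
    # The mappings differ if any serial appears or disappears,
    # or if an existing serial maps to a different node ID.
    return before_ids != after_ids


old = [Spine("SPINE-A", 201), Spine("SPINE-B", 202)]
renumbered = [Spine("SPINE-A", 201), Spine("SPINE-B", 212)]

print(needs_connectivity_refresh(old, old))         # no change
print(needs_connectivity_refresh(old, renumbered))  # node ID changed
```

Other infrastructure changes may also call for a refresh, so a check like this is at most a reminder and not a substitute for the documented procedure.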
