LAN Fabrics

The following terms are referred to in this document:

- Greenfield Deployments: Applicable for provisioning new VXLAN EVPN fabrics and eBGP-based routed fabrics.

- Brownfield Deployments: Applicable for existing VXLAN EVPN fabrics:

  - Migrate CLI-configured VXLAN EVPN fabrics to Nexus Dashboard Fabric Controller using the Data Center VXLAN EVPN fabric template.

  - NFM migration to Cisco Nexus Dashboard Fabric Controller using the Data Center VXLAN EVPN fabric template.

Note that in this document the terms switch and device are used interchangeably.

For information about upgrades, refer to the Cisco Nexus Dashboard Fabric Controller Installation and Upgrade Guide for LAN Controller Deployment.

The following table describes the fields that appear on the LAN Fabrics page.

| Field | Description |
|---|---|
| Fabric Name | Displays the name of the fabric. |
| Fabric Technology | Displays the fabric technology based on the fabric template. |
| Fabric Type | Displays the type of the fabric: Switch Fabric, LAN Monitor, or External. |
| ASN | Displays the ASN for the fabric. |
| Fabric Health | Displays the health of the fabric. |

The following table describes the action items in the Actions drop-down list on the LAN Fabrics page.

| Action Item | Description |
|---|---|
| Create Fabric | From the Actions drop-down list, select Create Fabric. For more instructions, see Create a Fabric. |
| Edit Fabric | Select a fabric to edit. From the Actions drop-down list, select Edit Fabric. Make the necessary changes and click Save. Click Close to discard the changes. |
| Delete Fabric | Select a fabric to delete. From the drop-down list, select Delete Fabric. Click Confirm to delete the fabric. |

Fabric Summary

Click a fabric to open the slide-in panel. The following sections display the summary of the fabric:

- Health - Shows the health of the fabric.

- Alarms - Displays the alarms based on the categories.

- Fabric Info - Provides basic information about the fabric.

- Inventory - Provides information about Switch Configuration and Switch Health.

Click the Launch icon in the top-right corner to view the Fabric Overview.

Understanding Fabric Templates

Fabric Templates

The following table provides information about the available fabric templates:

Note

Enhanced Classic LAN is a preview feature in Nexus Dashboard Fabric Controller, Release 12.1.2e. We recommend that you use this feature, which is marked as BETA, in your lab setup only. Do not use this feature in your production deployment. To view Enhanced Classic LAN fabrics, you must enable this feature by checking the Enable Preview Features check box on the Web UI.

| Type of Fabric | Description | REST API Template Name | Detailed Procedures |

|---|---|---|---|

| Data Center VXLAN EVPN | Fabric for a VXLAN EVPN deployment with Nexus 9000 and 3000 switches. | Easy_Fabric | Creating a VXLAN EVPN Fabric Using the Data Center VXLAN EVPN Template |

| Enhanced Classic LAN | Fabric for a fully automated 3-tier Classic LAN deployment with Nexus 9000 and 7000 switches. | Easy_Fabric_Classic | LAN Fabrics |

| Campus VXLAN EVPN | Fabric for a VXLAN EVPN Campus deployment with Catalyst 9000 switches. | Easy_Fabric_IOS_XE | Create Data Center VXLAN EVPN for Cisco Catalyst 9000 Series Switches |

| BGP Fabric | Fabric for an eBGP based deployment with Nexus 9000 and 3000 switches. Optionally VXLAN EVPN can be enabled on top of the eBGP underlay. | Easy_Fabric_eBGP | Creating VXLAN EVPN Fabric with eBGP-based Underlay |

| Flexible Network | Fabric for flexible deployments with a mix of Nexus and Non-Nexus devices. | External_Fabric | Creating an External Fabric |

| Fabric Group | Domain that can contain Enhanced Classic LAN, Classic LAN, and External Connectivity Network fabrics. | Fabric_Group | LAN Fabrics |

| Classic LAN | Fabric to manage a legacy Classic LAN deployment with Nexus switches. | LAN_Classic | LAN Fabrics |

| LAN Monitor | Fabric for monitoring Nexus switches for basic discovery and inventory management. | LAN_Monitor | LAN Fabrics |

| VXLAN EVPN Multi-Site | Domain that can contain multiple VXLAN EVPN Fabrics (with Layer-2/Layer-3 Overlay Extensions) and other Fabric Types. | MSD_Fabric | Creating the VXLAN EVPN Multi-Site and Associating Member Fabrics |

| Classic IPFM | Fabric to manage or monitor existing Nexus 9000 switches in an IP Fabric for Media Deployment. | IPFM_Classic | Creating a Classic IPFM Fabric |

| IPFM | Fabric for a fully automated deployment of IP Fabric for Media Network with Nexus 9000 switches. | Easy_Fabric_IPFM | Creating an IPFM Fabric |

| Multi-Site Interconnect Network | Fabric to interconnect VXLAN EVPN for Multi-Site deployments with a mix of Nexus and Non-Nexus devices. | External_Fabric | Creating an External Fabric |

| External Connectivity Network | Fabric for core and edge router deployments with a mix of Nexus and Non-Nexus devices. | External_Fabric | Creating an External Fabric |

Note

Any reference to External_Fabric in this document refers to one of the following three fabric templates: Flexible Network, Multi-Site Interconnect Network, or External Connectivity Network. Choose the required fabric based on the description in the table above.

The type of fabric is displayed as External_Fabric, which is the fabric template name, in the following cases:

- Upgrade and restore from DCNM 11.5(4).

- Upgrade from NDFC 12.0.2f or 12.1.1e.

All existing functionality continues to work as it did in the previous release. You can optionally edit the fabric and choose one of the three options: Flexible Network, External Connectivity Network, or Multi-Site Interconnect Network. If you edit the fabric settings without choosing one of these options, the default option, Flexible Network, is picked. You can toggle between these three options as desired without any loss of functionality. The type of fabric is stored in nvPairs in a variable called EXT_FABRIC_TYPE. You can optionally provide this variable in the payload during fabric creation. If it is not provided, the default option, Flexible Network, is picked.
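As a rough illustration of the optional EXT_FABRIC_TYPE setting, the following Python sketch assembles a fabric-creation payload. Only nvPairs, EXT_FABRIC_TYPE, and the External_Fabric template name come from this document. The other key names (fabricName, templateName), the helper name, and the use of the UI option names as stored values are assumptions made for illustration, so consult the REST API reference for the exact schema and accepted values.

```python
import json

# Option names as shown in the UI. Whether nvPairs stores these exact
# strings is an assumption made for this sketch.
EXT_FABRIC_OPTIONS = (
    "Flexible Network",
    "External Connectivity Network",
    "Multi-Site Interconnect Network",
)


def build_external_fabric_payload(fabric_name, ext_fabric_type=None):
    """Build a hypothetical creation payload for an External_Fabric template."""
    nv_pairs = {}
    if ext_fabric_type is not None:
        if ext_fabric_type not in EXT_FABRIC_OPTIONS:
            raise ValueError(f"unknown external fabric type: {ext_fabric_type!r}")
        nv_pairs["EXT_FABRIC_TYPE"] = ext_fabric_type
    # When EXT_FABRIC_TYPE is omitted, the controller is expected to fall
    # back to the default option (Flexible Network).
    return {
        "fabricName": fabric_name,          # hypothetical key name
        "templateName": "External_Fabric",  # template name from the table
        "nvPairs": nv_pairs,
    }


if __name__ == "__main__":
    payload = build_external_fabric_payload(
        "edge-core", "External Connectivity Network"
    )
    print(json.dumps(payload, indent=2))
```

Leaving the second argument unset produces a payload without EXT_FABRIC_TYPE, which corresponds to the case where the default option is picked.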

Prerequisites to Creating a Fabric

- From Cisco NDFC Release 12.1.2e, the ESXi host default setting on the vSphere Client for promiscuous mode is supported. For more information, see the ESXi Networking for Promiscuous Mode section. From Nexus Dashboard Release 2.3.1c, the vNIC of the pod that has the persistent IP address shares the MAC address of the Nexus Dashboard bond0 or bond1 interface. Therefore, the pod sources packets using the same MAC address as the Nexus Dashboard bond0 or bond1 interfaces, which are known to the VMware ESXi system.

- Configure the persistent IP addresses in Cisco Nexus Dashboard. For more information, see the Cluster Configuration section in the Cisco Nexus Dashboard User Guide.

Changing Persistent IP Address

From Cisco NDFC Release 12.1.2e, you can change persistent IP addresses which are assigned for mandatory pods such as POAP-SCP and SNMP trap. To change the persistent IP address, perform the following steps:

Procedure

|

Step 1 |

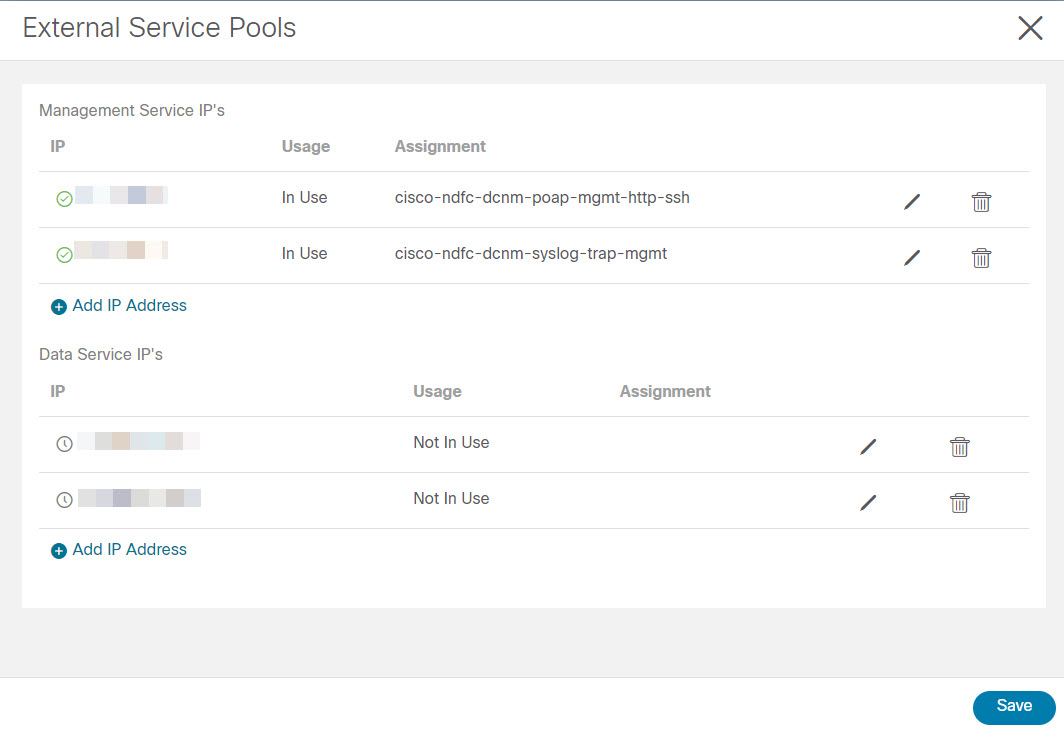

On Cisco NDFC Web UI, navigate to under LAN Device Management Connectivity drop-down list change Management to Data or conversely. Changing option results in migration of SNMP and POAP-SCP pods to the persistent IP addresses associated with External Service Pool on Nexus Dashboard connected with the new LAN Device Management Connectivity option. After the completion of this process, the following message is displayed: Some features have been updated. Reload the page to see latest changes. Click Reload the page. |

|

Step 2 |

On Cisco Nexus Dashboard Web UI, navigate to , in External Service Pools card, change the required IP addresses for Management Service IP Usage or Data Service IP Usage. |

|

Step 3 |

Navigate to NDFC Web UI Server Settings page, change the option in LAN Device Management Connectivity drop-down list to its initial selection. Restoring this option to initial settings, results in migration of the SNMP and POAP-SCP pods to use the updated persistent IP address from the appropriate External Service IP pool. |

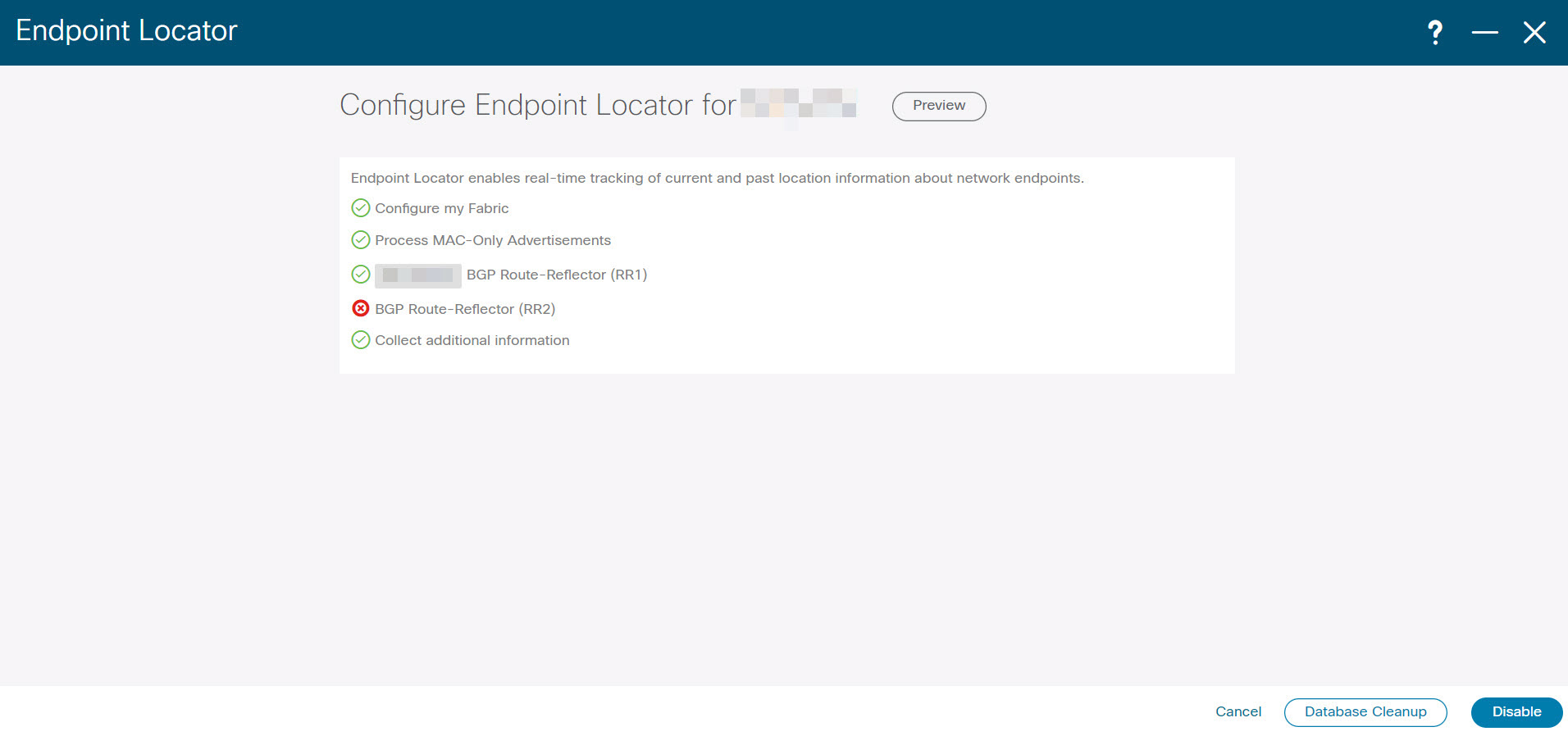

ESXi Networking for Promiscuous Mode

From Cisco NDFC Release 12.1.2e, you can run NDFC on top of a virtual Nexus Dashboard (vND) instance with promiscuous mode disabled on the port groups that are associated with the Nexus Dashboard interfaces where External Service IP addresses are specified. A vND comprises a Nexus Dashboard management interface and a data interface. By default, for the fabric controller persona, two external service IP addresses are required for the Nexus Dashboard management interface subnet.

Before NDFC Release 12.1.2e, if Inband management, Endpoint Locator (EPL), or POAP was enabled on NDFC, you also had to enable promiscuous mode for the Nexus Dashboard data or fabric interface port group. This setting was mandatory for the traffic flows associated with these features.

Enabling promiscuous mode raises the risk of security issues in NDFC. We recommend that you keep the default setting for promiscuous mode.

Note

If Inband management or EPL is enabled, you must specify External Service IP addresses in the Nexus Dashboard data interface subnet. You can disable promiscuous mode for the Nexus Dashboard data or fabric interface port group. For more information, refer to the Cisco Nexus Dashboard Deployment Guide.

Note

The default option for promiscuous mode is Reject.

Procedure

Step 1: Log in to your vSphere Client.

Step 2: Navigate to the ESXi host.

Step 3: Right-click the host and choose Settings. A sub-menu appears.

Step 4: Choose Networking > Virtual Switches. All the virtual switches appear as blocks.

Step 5: Click Edit Settings for the VM Network.

Step 6: Navigate to the Security tab.

Step 7: Update the Promiscuous mode setting as required. The default option is Reject.

Step 8: Click OK.

Create a Fabric

Procedure

Step 1: Navigate to the LAN Fabrics page.

Step 2: From the Actions drop-down list, select Create Fabric.

Step 3: Enter the fabric name and click Choose Template.

Step 4: Specify the values for the fabric settings and click Save.

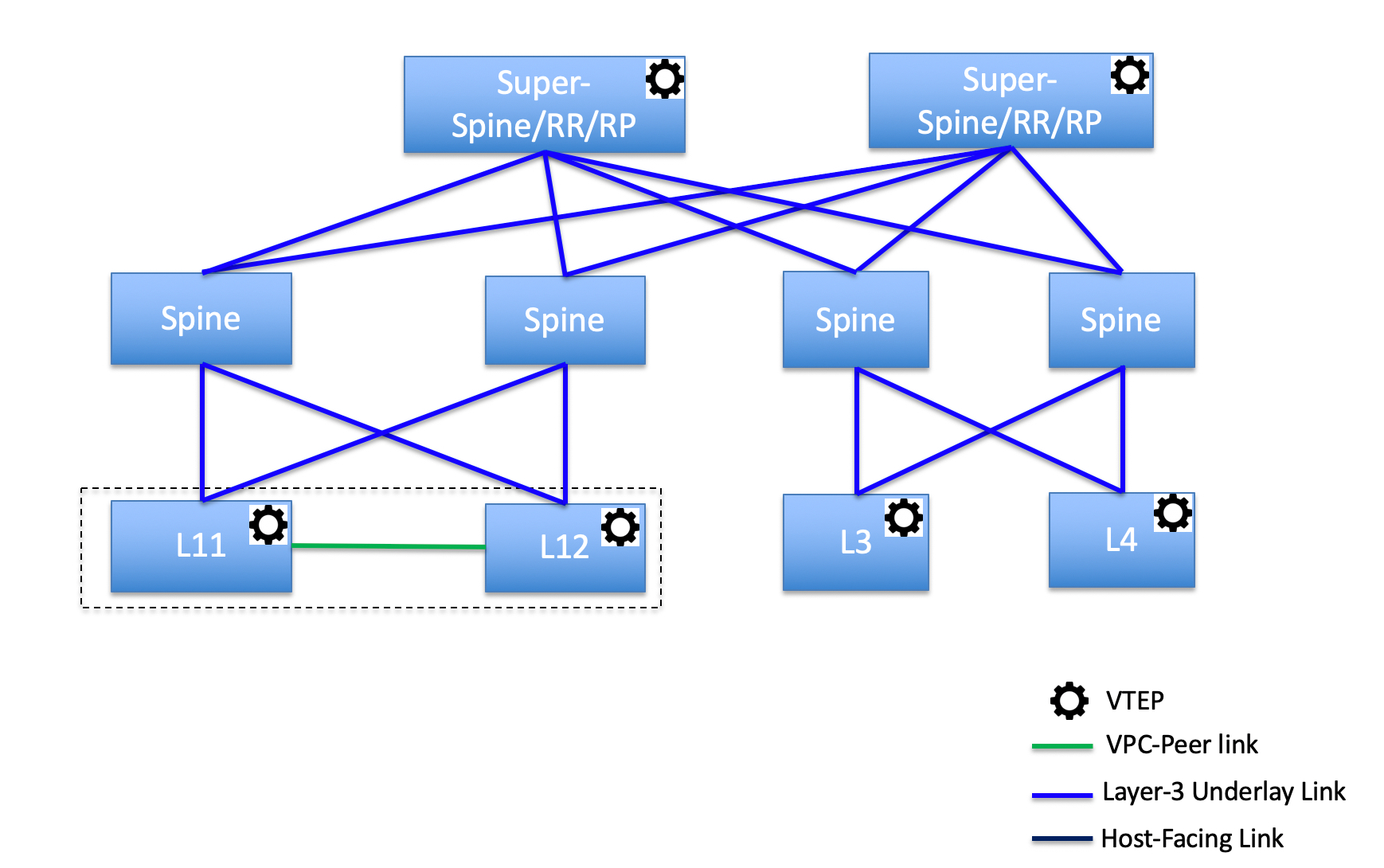

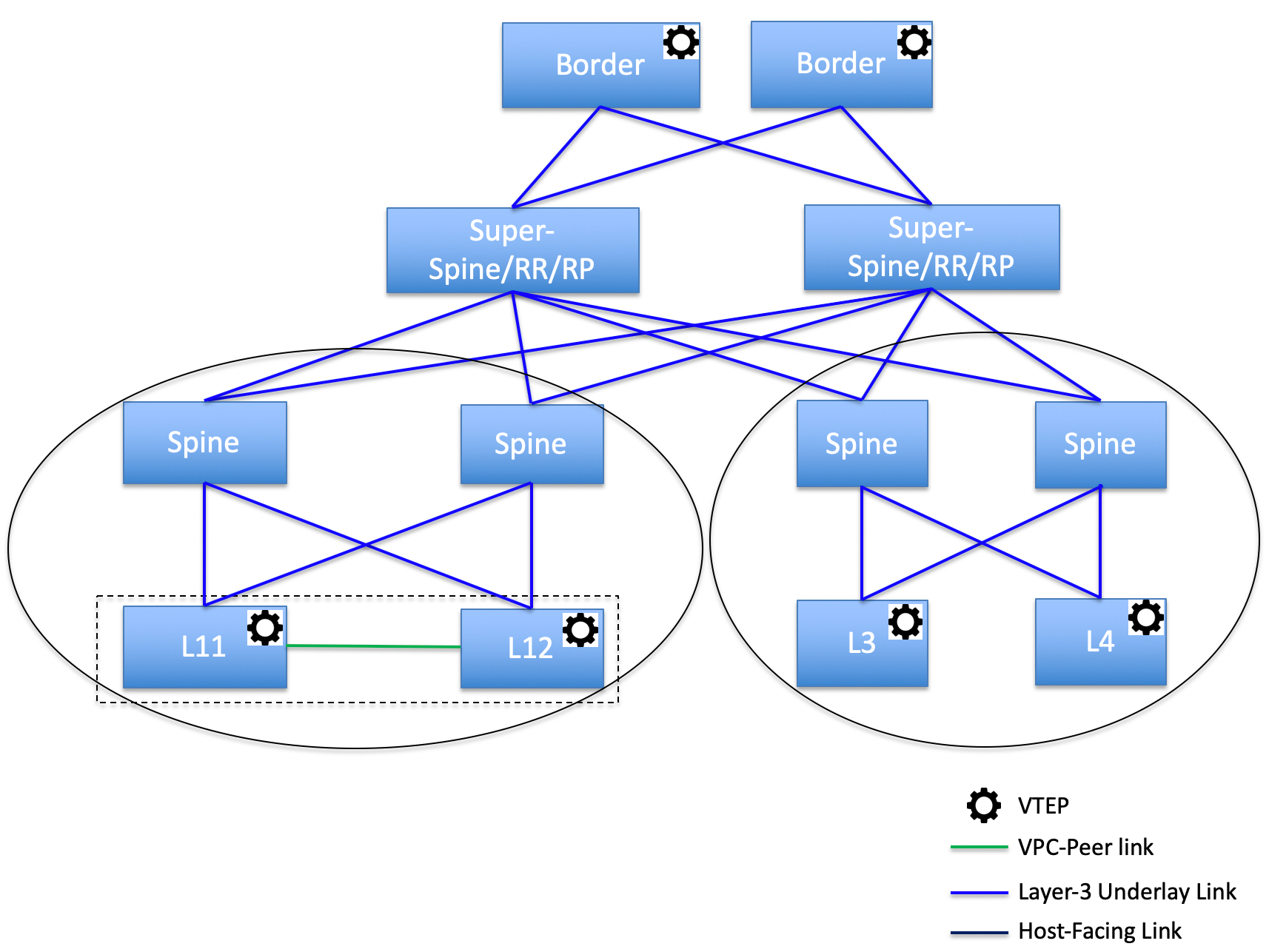

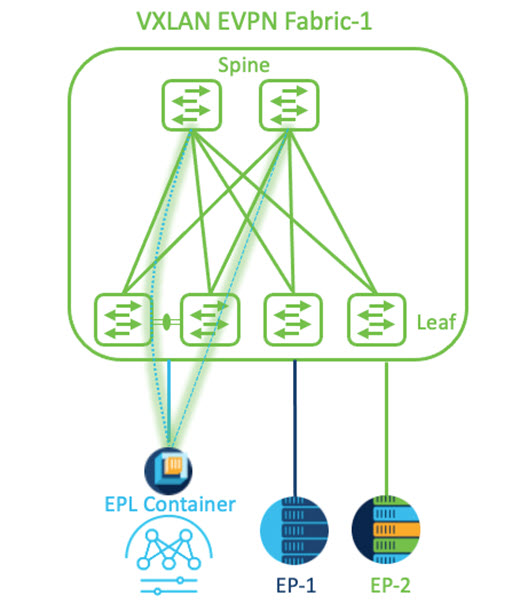

VXLAN EVPN Fabrics Provisioning

Cisco Nexus Dashboard Fabric Controller provides an enhanced “Easy” fabric workflow for unified underlay and overlay provisioning of the VXLAN BGP EVPN configuration on Nexus 9000 and 3000 Series switches. The fabric is configured through a flexible, customizable, template-based framework. With minimal user input, an entire fabric can be brought up with Cisco-recommended best practice configurations in a short period of time. The set of parameters exposed in the Fabric Settings allows you to tailor the fabric to your preferred underlay provisioning options.

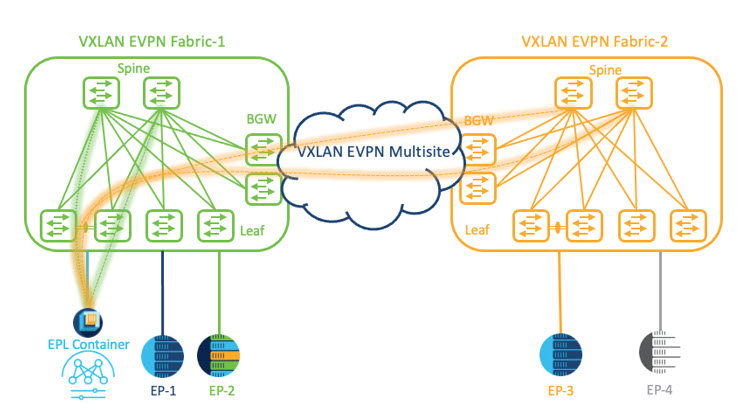

Border devices in a fabric typically provide external connectivity via peering with appropriate edge/core/WAN routers. These edge/core routers may either be managed or monitored by Nexus Dashboard Fabric Controller. These devices are placed in a special fabric called the External Fabric. The same Nexus Dashboard Fabric Controller can manage multiple VXLAN BGP EVPN fabrics while also offering easy provisioning and management of Layer-2 and Layer-3 DCI underlay and overlay configuration among these fabrics using a special construct called a Multi-Site Domain (MSD) fabric.

The Nexus Dashboard Fabric Controller GUI functions for creating and deploying VXLAN BGP EVPN fabrics are as follows:

LAN > Fabrics > LAN Fabrics: Create Fabric under the Actions drop-down list.

Create, edit, and delete a fabric:

- Create new VXLAN, MSD, and external VXLAN fabrics.

- View the VXLAN and MSD fabric topologies, including connections between fabrics.

- Update fabric settings.

- Save and deploy updated changes.

- Delete a fabric (if devices are removed).

Device discovery and provisioning start-up configurations on new switches:

- Add switch instances to the fabric.

- Provision start-up configurations and an IP address to a new switch through POAP configuration.

- Update switch policies, save, and deploy updated changes.

- Create intra-fabric and inter-fabric links (also called Inter-Fabric Connections [IFCs]).

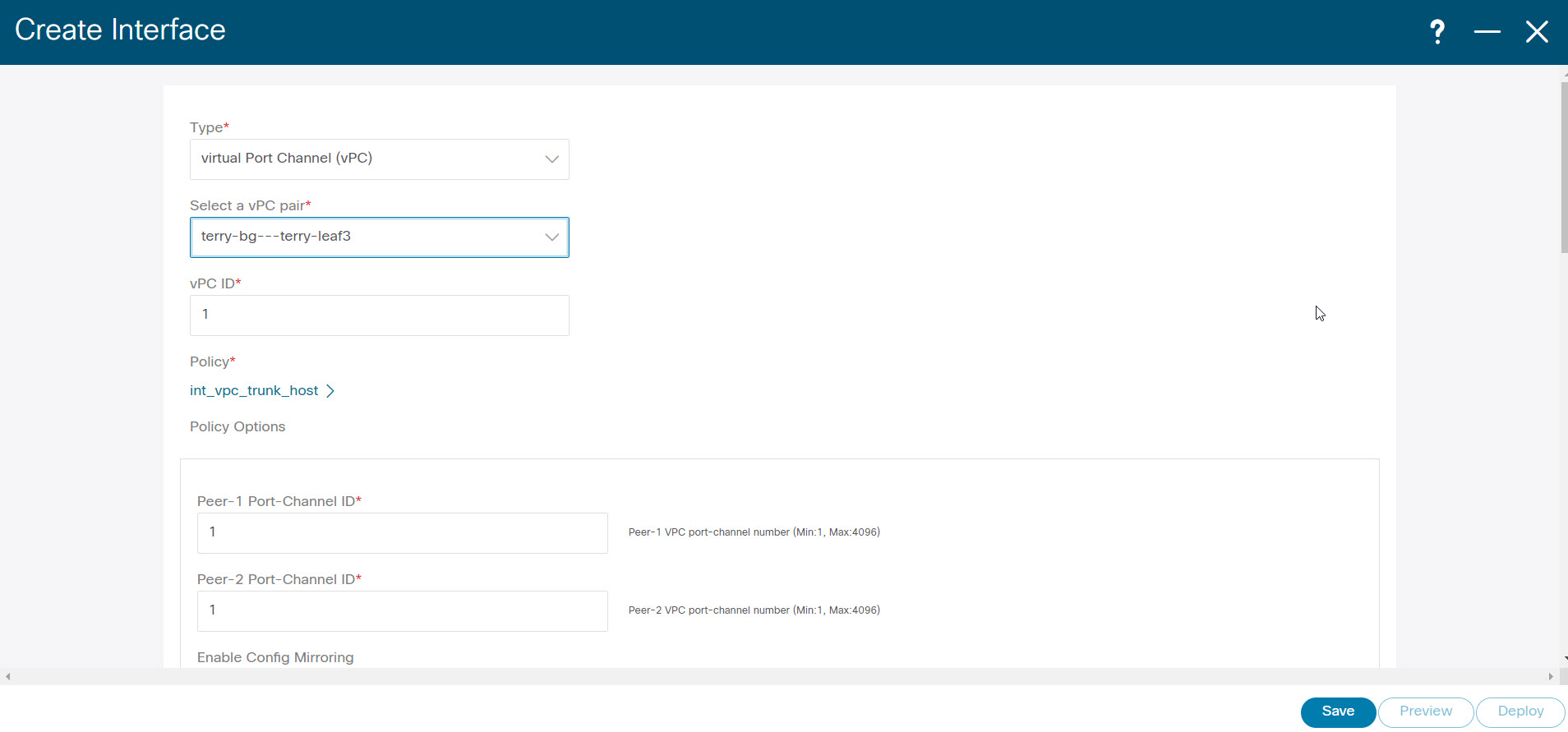

LAN > Interfaces > LAN Fabrics: Create New Interface under the Actions drop-down list.

Underlay provisioning:

- Create, deploy, view, edit, and delete a port-channel, vPC switch pair, Straight Through FEX (ST-FEX), Active-Active FEX (AA-FEX), loopback, subinterface, and so on.

- Create breakout and unbreakout ports.

- Shut down and bring up interfaces.

- Rediscover ports and view interface configuration history.

LAN > Switches > LAN Fabrics: Add under the Actions drop-down list.

Overlay network provisioning:

- Create new overlay networks and VRFs (from the range specified in fabric creation).

- Provision the overlay networks and VRFs on the switches of the fabric.

- Undeploy the networks and VRFs from the switches.

- Remove the provisioning from the fabric in Nexus Dashboard Fabric Controller.

LAN > Services menu option.

Provisioning of configuration on service leafs to which L4-7 service appliances may be attached. For more information, see L4-L7 Service Basic Workflow.

This chapter mostly covers configuration provisioning for a single VXLAN BGP EVPN fabric. EVPN Multi-Site provisioning for Layer-2/Layer-3 DCI across multiple fabrics using the MSD fabric is documented in a separate chapter. The details of how overlay networks and VRFs can be provisioned from the Fabric Controller are covered in the Creating Networks and Creating VRFs topics in the Networks and VRFs sections.

Guidelines for VXLAN BGP EVPN Fabrics Provisioning

- For any switch to be successfully imported into Nexus Dashboard Fabric Controller, the user specified for discovery/import must have the following permissions:

  - SSH access to the switch

  - Ability to perform SNMPv3 queries

  - Ability to run show commands, including show run, show interfaces, and so on

  - Ability to execute the guestshell commands, which are prefixed by run guestshell, for the Nexus Dashboard Fabric Controller tracker

- The switch discovery user need not have the ability to make any configuration changes on the switches. It is primarily used for read access.

- When an invalid command is deployed by Nexus Dashboard Fabric Controller to a device, for example, a command with an invalid key chain due to an invalid entry in the fabric settings, an error is generated displaying this issue. This error is not cleared after you correct the invalid fabric entry. You need to manually clean up or delete the invalid commands to clear the error.

  Note that the fabric errors related to the command execution are automatically cleared only when the same failed command succeeds in the subsequent deployment.

- LAN credentials must be set for any user who needs write access to the device. LAN credentials must be set on Nexus Dashboard Fabric Controller on a per-user, per-device basis. When a user imports a device into the Easy Fabric and LAN credentials are not set for that device, Nexus Dashboard Fabric Controller moves the device to migration mode. After the user sets the appropriate LAN credentials for that device, a subsequent Save & Deploy retriggers the device import process.

- The Save & Deploy button triggers intent regeneration for the entire fabric as well as a configuration compliance check for all the switches within the fabric. This button is required in cases that include, but are not limited to, the following:

  - A switch or a link is added, or the topology changes in any other way

  - A change in the fabric settings that must be shared across the fabric

  - A switch is removed or deleted

  - A new vPC pairing or unpairing is done

  - A change in the role for a device

  When you click Recalculate Config, the changes in the fabric are evaluated, and the configuration for the entire fabric is generated. Click Preview Config to preview the generated configuration, and then deploy it at the fabric level. Because the operation covers the entire fabric, Deploy Config can take more time depending on the size of the fabric.

  When you right-click a switch icon, you can use the Deploy config to switches option to deploy per-switch configurations. This option is a local operation for a switch: the expected configuration or intent for the switch is evaluated against its current running configuration, and a config compliance check is performed for the switch to get the In-Sync or Out-of-Sync status. If the switch is out of sync, you are shown a preview of all the configurations running on that switch that vary from the intent defined for it.

-

-

Persistent configuration diff is seen for the command line: system nve infra-vlan int force . The persistent diff occurs if you have deployed this command via the freeform configuration to the switch. Although the switch requires the force keyword during deployment, the running configuration that is obtained from the switch in Nexus Dashboard Fabric Controller doesn’t display the force keyword. Therefore, the system nve infra-vlan int force command always shows up as a diff.

The intent in Nexus Dashboard Fabric Controller contains the line:

system nve infra-vlan int forceThe running config contains the line:

system nve infra-vlan intAs a workaround to fix the persistent diff, edit the freeform config to remove the force keyword after the first deployment such that it is system nve infra-vlan int .

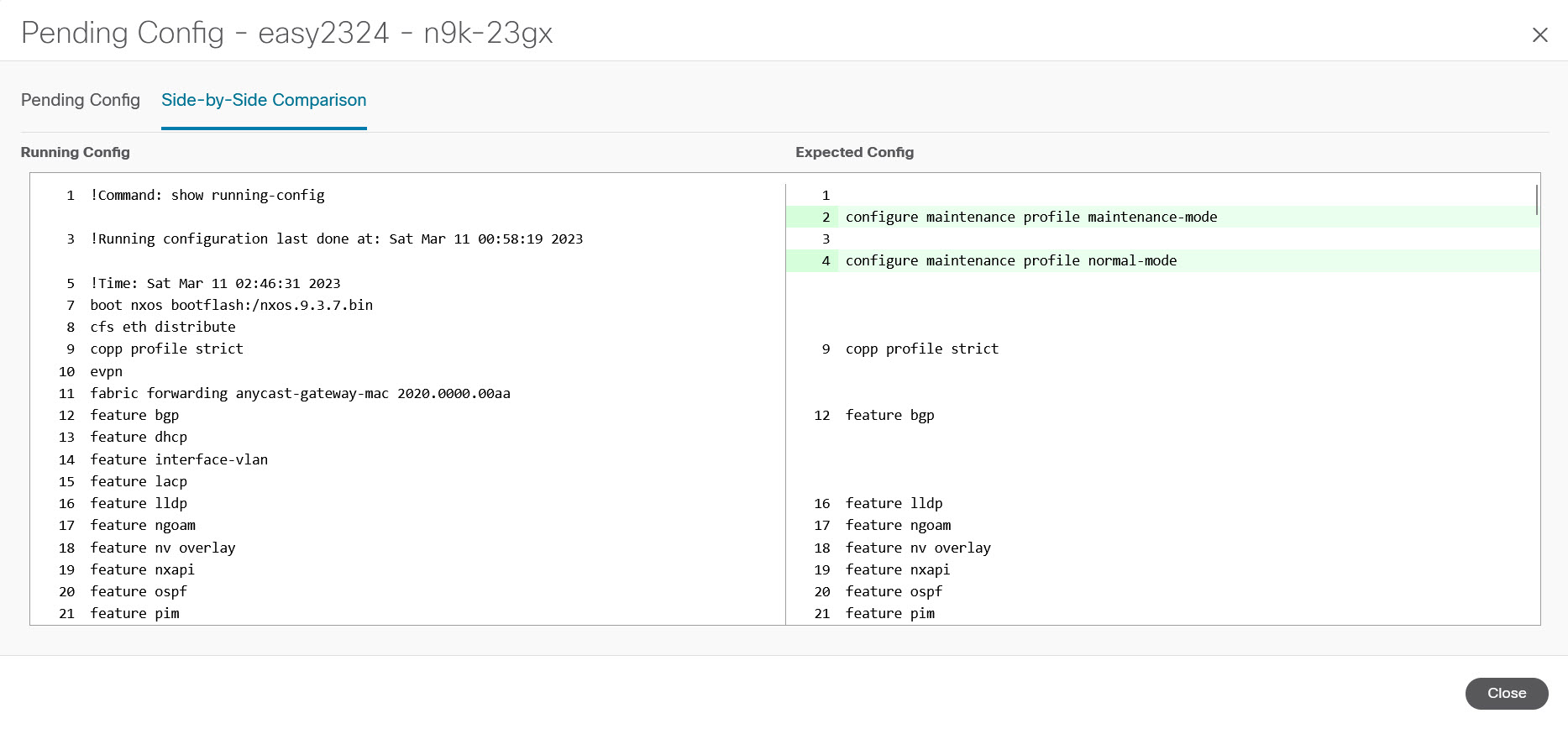

The force keyword is required for the initial deploy and must be removed after a successful deploy. You can confirm the diff by using the Side-by-side Comparison tab in the Config Preview window.

The persistent diff is also seen after a write erase and reload of a switch. Update the intent on Nexus Dashboard Fabric Controller to include the force keyword, and then you need to remove the force keyword after the first deployment.

- When the switch contains the hardware access-list tcam region arp-ether 256 command, which is deprecated without the double-wide keyword, the following warning is displayed:

  WARNING: Configuring the arp-ether region without "double-wide" is deprecated and can result in silent non-vxlan packet drops. Use the "double-wide" keyword when carving TCAM space for the arp-ether region.

  Since the original hardware access-list tcam region arp-ether 256 command doesn’t match the policies in Nexus Dashboard Fabric Controller, this config is captured in the switch_freeform policy. After the hardware access-list tcam region arp-ether 256 double-wide command is pushed to the switch, the original tcam command that does not contain the double-wide keyword is removed.

  You must manually remove the hardware access-list tcam region arp-ether 256 command from the switch_freeform policy. Otherwise, config compliance shows a persistent diff.

  Here is an example of the hardware access-list command on the switch:

      switch(config)# show run | inc arp-ether
      switch(config)# hardware access-list tcam region arp-ether 256
      Warning: Please save config and reload the system for the configuration to take effect
      switch(config)# show run | inc arp-ether
      hardware access-list tcam region arp-ether 256
      switch(config)#
      switch(config)# hardware access-list tcam region arp-ether 256 double-wide
      Warning: Please save config and reload the system for the configuration to take effect
      switch(config)# show run | inc arp-ether
      hardware access-list tcam region arp-ether 256 double-wide

  You can see that the original tcam command is overwritten.
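Both persistent-diff workarounds in the guidelines above come down to editing freeform text after the first successful deployment. The following Python sketch shows that cleanup on plain text. It is not an NDFC API: the function names, the treatment of a freeform policy as a multi-line string, and the sample VLAN ID 3967 (standing in for the int placeholder) are assumptions made for illustration.

```python
def drop_force_keyword(freeform: str) -> str:
    """Remove a trailing 'force' from 'system nve infra-vlan ...' lines."""
    cleaned = []
    for line in freeform.splitlines():
        words = line.split()
        if words[:3] == ["system", "nve", "infra-vlan"] and words[-1] == "force":
            # Keep the original indentation and drop only the last word.
            indent = line[: len(line) - len(line.lstrip())]
            line = indent + " ".join(words[:-1])
        cleaned.append(line)
    return "\n".join(cleaned)


def drop_deprecated_arp_ether(freeform: str) -> str:
    """Remove arp-ether TCAM carving lines that lack the double-wide keyword."""
    prefix = ["hardware", "access-list", "tcam", "region", "arp-ether"]
    kept = []
    for line in freeform.splitlines():
        words = line.split()
        if words[:5] == prefix and "double-wide" not in words:
            continue  # deprecated form: leave it out of the freeform text
        kept.append(line)
    return "\n".join(kept)


def persistent_diff(intent: str, running: str) -> list:
    """Return intent lines that do not appear verbatim in the running config."""
    running_lines = {line.strip() for line in running.splitlines()}
    return [
        line.strip()
        for line in intent.splitlines()
        if line.strip() and line.strip() not in running_lines
    ]


if __name__ == "__main__":
    intent = "system nve infra-vlan 3967 force"   # hypothetical VLAN ID
    running = "system nve infra-vlan 3967"        # switch omits 'force'
    print(persistent_diff(intent, running))
    print(persistent_diff(drop_force_keyword(intent), running))
```

The first comparison reports the line with the force keyword as a diff. After the keyword is removed from the intent, the comparison returns an empty list.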

Creating a VXLAN EVPN Fabric Using the Data Center VXLAN EVPN Template

This topic describes how to create a new VXLAN EVPN fabric using the Data Center VXLAN EVPN template and contains descriptions for the IPv4 underlay. For information about the IPv6 underlay, see IPv6 Underlay Support for Easy Fabric.

- Navigate to the LAN Fabrics page.

- From the Actions drop-down list, select Create Fabric.

  The Create Fabric window appears.

- Enter a unique name for the fabric in the Fabric Name field, then click Choose Fabric.

  The available fabric templates are listed.

- From the available list of fabric templates, choose the Data Center VXLAN EVPN template, then click Select.

- Enter the necessary field values to create a fabric.

  The tabs and their fields in the screen are explained in the following sections. The overlay and underlay network parameters are included in these tabs.

  Note

  If you’re creating a standalone fabric as a potential member fabric of an MSD fabric (used for provisioning overlay networks for fabrics that are connected through EVPN Multi-Site technology), see Multi-Site Domain for VXLAN BGP EVPN Fabrics before creating the member fabric.

- When you have completed the necessary configurations, click Save.

- Click the fabric to display a summary in the slide-in pane.

- Click the Launch icon to display the Fabric Overview.

General Parameters

The General Parameters tab is displayed by default. The fields in this tab are described in the following table.

| Field | Description |
|---|---|
| BGP ASN | Enter the BGP AS number that the fabric is associated with. This must be the same as the existing fabric. |
| Enable IPv6 Underlay | Enables the IPv6 underlay feature. For information, see IPv6 Underlay Support for Easy Fabric. |
| Enable IPv6 Link-Local Address | Enables the IPv6 link-local address. |
| Fabric Interface Numbering | Specifies whether you want to use point-to-point (p2p) or unnumbered networks. |
| Underlay Subnet IP Mask | Specifies the subnet mask for the fabric interface IP addresses. |
| Underlay Subnet IPv6 Mask | Specifies the subnet mask for the fabric interface IPv6 addresses. |
| Underlay Routing Protocol | The IGP used in the fabric: OSPF or IS-IS. |
| Route-Reflectors (RRs) | The number of spine switches that are used as route reflectors for transporting BGP traffic. Choose 2 or 4 from the drop-down box. The default value is 2. To deploy spine devices as RRs, Nexus Dashboard Fabric Controller sorts the spine devices based on their serial numbers, and designates two or four spine devices as RRs. If you add more spine devices, the existing RR configuration won’t change. Increasing the count: You can increase the route reflectors from two to four at any point in time. Configurations are automatically generated on the other two spine devices designated as RRs. Decreasing the count: When you reduce four route reflectors to two, remove the unneeded route reflector devices from the fabric. You can preselect RRs and RPs before performing the first Save & Deploy operation. For more information, see Preselecting Switches as Route-Reflectors and Rendezvous-Points. |
| Anycast Gateway MAC | Specifies the anycast gateway MAC address. |
| Enable Performance Monitoring | Check the check box to enable performance monitoring. Ensure that you do not clear interface counters from the command line interface of the switches. Clearing interface counters can cause the Performance Monitor to display incorrect data for traffic utilization. If you must clear the counters and the switch has both |

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.
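The route-reflector behavior described for the Route-Reflectors (RRs) field (sort the spines by serial number, designate two or four of them, and leave existing RRs alone when spines are added) can be sketched as follows. This is an illustration only: the ascending sort order, the function name, and the sample serial numbers are assumptions, and the actual selection logic is internal to Nexus Dashboard Fabric Controller.

```python
def select_route_reflectors(spine_serials, rr_count=2, existing_rrs=()):
    """Pick RR spines by serial number while preserving existing RRs."""
    if rr_count not in (2, 4):
        raise ValueError("rr_count must be 2 or 4")
    # Spines that are already RRs (and still in the fabric) keep the role.
    selected = [s for s in existing_rrs if s in spine_serials][:rr_count]
    # Fill any remaining slots from the other spines in serial-number order.
    for serial in sorted(spine_serials):
        if len(selected) >= rr_count:
            break
        if serial not in selected:
            selected.append(serial)
    return selected


if __name__ == "__main__":
    spines = ["FDO0003", "FDO0001", "FDO0002"]  # hypothetical serial numbers
    rrs = select_route_reflectors(spines)
    print(rrs)
    # Adding a spine with a lower serial number does not move the RR role.
    print(select_route_reflectors(spines + ["FDO0000"], existing_rrs=rrs))
    # Raising the count from two to four adds two more spines.
    print(select_route_reflectors(spines + ["FDO0000"], rr_count=4, existing_rrs=rrs))
```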

Replication

The fields in the Replication tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |
|---|---|
| Replication Mode | The mode of replication that is used in the fabric for BUM (Broadcast, Unknown Unicast, Multicast) traffic. The choices are Ingress Replication or Multicast. When you choose Ingress Replication, the multicast-related fields are disabled. You can change the fabric setting from one mode to the other if no overlay profile exists for the fabric. |
| Multicast Group Subnet | IP address prefix used for multicast communication. A unique IP address is allocated from this group for each overlay network. The replication mode change isn’t allowed if a policy template instance is created for the current mode. For example, if a multicast-related policy is created and deployed, you can’t change the mode to Ingress. |
| Enable Tenant Routed Multicast (TRM) | Check the check box to enable Tenant Routed Multicast (TRM) that allows overlay multicast traffic to be supported over EVPN/MVPN in the VXLAN BGP EVPN fabric. |
| Default MDT Address for TRM VRFs | The multicast address for Tenant Routed Multicast traffic is populated. By default, this address is from the IP prefix specified in the Multicast Group Subnet field. When you update either field, ensure that the TRM address is chosen from the IP prefix specified in Multicast Group Subnet. For more information, see Overview of Tenant Routed Multicast. |
| Rendezvous-Points | Enter the number of spine switches acting as rendezvous points. |
| RP mode | Choose from the two supported multicast modes of replication: ASM (Any-Source Multicast) or BiDir (Bidirectional PIM [BIDIR-PIM]). When you choose ASM, the BiDir-related fields aren’t enabled. When you choose BiDir, the BiDir-related fields are enabled. When you create a new VRF for the fabric overlay, this address is populated in the Underlay Multicast Address field, in the Advanced tab. |
| Underlay RP Loopback ID | The loopback ID used for the rendezvous point (RP), for multicast protocol peering purposes in the fabric underlay. |
| Underlay Primary RP Loopback ID | Enabled if you choose BIDIR-PIM as the multicast mode of replication. The primary loopback ID used for the phantom RP, for multicast protocol peering purposes in the fabric underlay. |
| Underlay Backup RP Loopback ID | Enabled if you choose BIDIR-PIM as the multicast mode of replication. The secondary loopback ID used for the phantom RP, for multicast protocol peering purposes in the fabric underlay. |
| Underlay Second Backup RP Loopback Id | Used for the second fallback Bidir-PIM Phantom RP. |
| Underlay Third Backup RP Loopback Id | Used for the third fallback Bidir-PIM Phantom RP. |

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.
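The Replication fields impose two address constraints: each overlay network gets a unique address from the Multicast Group Subnet, and the default MDT address for TRM VRFs must fall inside that same prefix. A minimal Python sketch of both checks, using the standard ipaddress module, follows. The function names, the sample prefix and addresses, and the sequential allocation order are assumptions for illustration and do not describe how Nexus Dashboard Fabric Controller allocates addresses internally.

```python
import ipaddress


def mdt_address_in_subnet(mdt_address: str, multicast_subnet: str) -> bool:
    """Check that the TRM default MDT address lies in the multicast group subnet."""
    subnet = ipaddress.ip_network(multicast_subnet, strict=True)
    address = ipaddress.ip_address(mdt_address)
    return address.is_multicast and address in subnet


def allocate_group(multicast_subnet: str, in_use: set) -> str:
    """Return the first address in the subnet that no overlay network uses yet."""
    subnet = ipaddress.ip_network(multicast_subnet, strict=True)
    for address in subnet:
        candidate = str(address)
        if candidate not in in_use:
            in_use.add(candidate)  # record it so the next call skips it
            return candidate
    raise RuntimeError("multicast group subnet exhausted")


if __name__ == "__main__":
    subnet = "239.1.1.0/25"  # hypothetical Multicast Group Subnet value
    used = set()
    print(allocate_group(subnet, used))
    print(allocate_group(subnet, used))
    print(mdt_address_in_subnet("239.1.1.0", subnet))
    print(mdt_address_in_subnet("239.1.1.200", subnet))
```

An address such as 239.1.1.200 fails the check for a /25 prefix that ends at 239.1.1.127, which is the kind of mismatch to avoid when you update either field.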

VPC

The fields in the VPC tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |
|---|---|
| vPC Peer Link VLAN | VLAN used for the vPC peer link SVI. |
| Make vPC Peer Link VLAN as Native VLAN | Enables vPC peer link VLAN as Native VLAN. |
| vPC Peer Keep Alive option | Choose the management or loopback option. If you want to use IP addresses assigned to the management port and the management VRF, choose management. If you use IP addresses assigned to loopback interfaces (and a non-management VRF), choose loopback. If you use IPv6 addresses, you must use loopback IDs. |
| vPC Auto Recovery Time | Specifies the vPC auto recovery time-out period in seconds. |
| vPC Delay Restore Time | Specifies the vPC delay restore period in seconds. |
| vPC Peer Link Port Channel ID | Specifies the Port Channel ID for a vPC Peer Link. By default, the value in this field is 500. |
| vPC IPv6 ND Synchronize | Enables IPv6 Neighbor Discovery synchronization between vPC switches. The check box is enabled by default. Uncheck the check box to disable the function. |
| vPC advertise-pip | Select the check box to enable the Advertise PIP feature. You can enable the advertise PIP feature on a specific vPC as well. |
| Enable the same vPC Domain Id for all vPC Pairs | Enable the same vPC Domain ID for all vPC pairs. When you select this field, the vPC Domain Id field is editable. |
| vPC Domain Id | Specifies the vPC domain ID to be used on all vPC pairs. |
| vPC Domain Id Range | Specifies the vPC Domain Id range to use for new pairings. |
| Enable QoS for Fabric vPC-Peering | Enable QoS on spines for guaranteed delivery of vPC Fabric Peering communication. |
| QoS Policy Name | Specifies QoS policy name that should be same on all fabric vPC peering spines. The default name is spine_qos_for_fabric_vpc_peering. |

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Protocols

The fields in the Protocols tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

|

Field |

Description |

||

|---|---|---|---|

|

Underlay Routing Loopback Id |

The loopback interface ID is populated as 0 since loopback0 is usually used for fabric underlay IGP peering purposes. |

||

|

Underlay VTEP Loopback Id |

The loopback interface ID is populated as 1 since loopback1 is used for the VTEP peering purposes. |

||

|

Underlay Anycast Loopback Id |

The loopback interface ID is greyed out and used for vPC Peering in VXLANv6 Fabrics only. |

||

|

Underlay Routing Protocol Tag |

The tag defining the type of network. |

||

|

OSPF Area ID |

The OSPF area ID, if OSPF is used as the IGP within the fabric.

|

||

|

Enable OSPF Authentication |

Select the check box to enable OSPF authentication. Deselect the check box to disable it. If you enable this field, the OSPF Authentication Key ID and OSPF Authentication Key fields get enabled. |

||

|

OSPF Authentication Key ID |

The Key ID is populated. |

||

|

OSPF Authentication Key |

The OSPF authentication key must be the 3DES key from the switch.

|

||

|

IS-IS Level |

Select the IS-IS level from this drop-down list. |

||

|

Enable IS-IS Network Point-to-Point |

Enables network point-to-point on fabric interfaces which are numbered. |

||

|

Enable IS-IS Authentication |

Select the check box to enable IS-IS authentication. Deselect the check box to disable it. If you enable this field, the IS-IS authentication fields are enabled. |

||

|

IS-IS Authentication Keychain Name |

Enter the Keychain name, such as CiscoisisAuth. |

||

|

IS-IS Authentication Key ID |

The Key ID is populated. |

||

|

IS-IS Authentication Key |

Enter the Cisco Type 7 encrypted key.

|

||

|

Set IS-IS Overload Bit |

When enabled, set the overload bit for an elapsed time after a reload. |

||

|

IS-IS Overload Bit Elapsed Time |

Allows you to clear the overload bit after an elapsed time in seconds. |

||

|

Enable BGP Authentication |

Select the check box to enable BGP authentication. Deselect the check box to disable it. If you enable this field, the BGP Authentication Key Encryption Type and BGP Authentication Key fields are enabled.

|

||

|

BGP Authentication Key Encryption Type |

Choose the 3 for 3DES encryption type, or 7 for Cisco encryption type. |

||

|

BGP Authentication Key |

Enter the encrypted key based on the encryption type.

|

||

|

Enable PIM Hello Authentication |

Select this check box to enable PIM hello authentication on all the intra-fabric interfaces of the switches in a fabric. This check box is editable only for the Multicast replication mode. Note this check box is valid only for the IPv4 underlay. |

||

|

PIM Hello Authentication Key |

Specifies the PIM hello authentication key. For more information, see Retrieving PIM Hello Authentication Key. To retrieve the PIM Hello Authentication Key, perform the following steps:

|

||

|

Enable BFD |

Check the check box to enable feature bfd on all switches in the fabric. This feature is valid only on IPv4 underlay and the scope is within a fabric. BFD within a fabric is supported natively. The BFD feature is disabled by default in the Fabric Settings. If enabled, BFD is enabled for the underlay protocols with the default settings. Any custom BFD configurations that are required must be deployed via the per switch freeform or per interface freeform policies. The following config is pushed after you select the Enable BFD check box:

For information about BFD feature compatibility, refer to your respective platform documentation. For information about the supported software images, see Compatibility Matrix for Cisco Nexus Dashboard Fabric Controller. |

||

|

Enable BFD for iBGP |

Check the check box to enable BFD for the iBGP neighbor. This option is disabled by default. |

||

|

Enable BFD for OSPF |

Check the check box to enable BFD for the OSPF underlay instance. This option is disabled by default, and it is grayed out if the link state protocol is ISIS. |

||

|

Enable BFD for ISIS |

Check the check box to enable BFD for the ISIS underlay instance. This option is disabled by default, and it is grayed out if the link state protocol is OSPF. |

||

|

Enable BFD for PIM |

Check the check box to enable BFD for PIM. This option is disabled by default, and it is grayed out if the replication mode is Ingress. Following are examples of the BFD global policies: |

||

|

Enable BFD Authentication |

Check the check box to enable BFD authentication. If you enable this field, the BFD Authentication Key ID and BFD Authentication Key fields are editable.

|

||

|

BFD Authentication Key ID |

Specifies the BFD authentication key ID for the interface authentication. The default value is 100. |

||

|

BFD Authentication Key |

Specifies the BFD authentication key. For information about how to retrieve the BFD authentication parameters. . |

||

|

iBGP Peer-Template Config |

Add iBGP peer template configurations on the leaf switches to establish an iBGP session between the leaf switch and route reflector. If you use BGP templates, add the authentication configuration within the template and uncheck the Enable BGP Authentication check box to avoid duplicate configuration. In the sample configuration, the 3DES password is displayed after password 3. The following fields can be used to specify different configurations:

In a brownfield migration, if the spine and leaf use different peer template names, both the iBGP Peer-Template Config and Leaf/Border/Border Gateway iBGP Peer-Template Config fields need to be set according to the switch config. If the spine and leaf use the same peer template name and content (except for the “route-reflector-client” CLI), only the iBGP Peer-Template Config field in the fabric settings needs to be set. If the fabric settings on iBGP peer templates do not match the existing switch configuration, an error message is generated and the migration does not proceed. |

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.
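The BFD rows above describe which check boxes are grayed out for a given underlay. As a rough illustration only, the following Python sketch encodes those dependencies. The function name, the option labels, and the assumption that the sub-options are editable only when Enable BFD is checked are hypothetical and are not part of any NDFC API.

```python
def editable_bfd_options(enable_bfd, link_state_protocol, replication_mode, underlay="ipv4"):
    """Return the BFD sub-options that would be editable (illustrative only).

    Rules taken from the field descriptions:
      - BFD is valid only on the IPv4 underlay.
      - BFD for OSPF is grayed out when the link state protocol is IS-IS.
      - BFD for IS-IS is grayed out when the link state protocol is OSPF.
      - BFD for PIM is grayed out when the replication mode is Ingress.
    Assumption: nothing is editable unless Enable BFD is checked.
    """
    if not enable_bfd or underlay.lower() != "ipv4":
        return set()

    options = {"ibgp"}
    protocol = link_state_protocol.lower()
    if protocol == "ospf":
        options.add("ospf")
    elif protocol in ("isis", "is-is"):
        options.add("isis")
    if replication_mode.lower() != "ingress":
        options.add("pim")
    return options


print(sorted(editable_bfd_options(True, "ospf", "Multicast")))
print(sorted(editable_bfd_options(True, "is-is", "Ingress")))
```

A check like this can be useful when fabric settings are prepared in a script before they are entered in the Web UI, because it flags combinations that the UI would not allow.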

Advanced

The fields in the Advanced tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

|

Field |

Description |

||

|---|---|---|---|

|

VRF Template |

Specifies the VRF template for creating VRFs. |

||

|

Network Template |

Specifies the network template for creating networks. |

||

|

VRF Extension Template |

Specifies the VRF extension template for enabling VRF extension to other fabrics. |

||

|

Network Extension Template |

Specifies the network extension template for extending networks to other fabrics. |

||

|

Overlay Mode |

Specifies whether the VRF/Network configuration uses config-profile or CLI. The default is config-profile. For more information, see Overlay Mode. |

||

|

Site ID |

The ID for this fabric if you are moving this fabric within an MSD. The site ID is mandatory for a member fabric to be a part of an MSD. Each member fabric of an MSD has a unique site ID for identification. |

||

|

Intra Fabric Interface MTU |

Specifies the MTU for the intra fabric interface. This value should be an even number. |

||

|

Layer 2 Host Interface MTU |

Specifies the MTU for the layer 2 host interface. This value should be an even number. |

||

|

Unshut Host Interfaces by Default |

Check this check box to unshut the host interfaces by default. |

||

|

Power Supply Mode |

Choose the appropriate power supply mode. |

||

|

CoPP Profile |

Choose the appropriate Control Plane Policing (CoPP) profile policy for the fabric. By default, the strict option is populated. |

||

|

VTEP HoldDown Time |

Specifies the NVE source interface hold down time. |

||

|

Brownfield Overlay Network Name Format |

Enter the format to be used to build the overlay network name during a brownfield import or migration. The network name should not contain any white spaces or special characters except underscore (_) and hyphen (-). The network name must not be changed once the brownfield migration has been initiated. See the Creating Networks for the Standalone Fabric section for the naming convention of the network name. The syntax is [<string> | $$VLAN_ID$$] $$VNI$$ [<string>| $$VLAN_ID$$] and the default value is Auto_Net_VNI$$VNI$$_VLAN$$VLAN_ID$$. When you create networks, the name is generated according to the syntax you specify. The following list describes the variables in the syntax:

An example overlay network name: Site_VNI12345_VLAN1234

|

||

|

Enable CDP for Bootstrapped Switch |

Enables CDP on management (mgmt0) interface for bootstrapped switch. By default, for bootstrapped switches, CDP is disabled on the mgmt0 interface. |

||

|

Enable VXLAN OAM |

Enables the VXLAN OAM functionality for devices in the fabric. This is enabled by default. Uncheck the check box to disable the VXLAN OAM function. If you want to enable the VXLAN OAM function on specific switches and disable it on other switches in the fabric, disable OAM in the fabric settings and use freeform configurations to enable OAM on the specific switches.

|

||

|

Enable Tenant DHCP |

Check the check box to enable feature dhcp and associated configurations globally on all switches in the fabric. This is a pre-requisite for support of DHCP for overlay networks that are part of the tenant VRFs.

|

||

|

Enable NX-API |

Specifies enabling of NX-API on HTTPS. This check box is checked by default. |

||

|

Enable NX-API on HTTP Port |

Specifies enabling of NX-API on HTTP. Enable this check box and the Enable NX-API check box to use HTTP. This check box is checked by default. If you uncheck this check box, the applications that use NX-API and are supported by Cisco Nexus Dashboard Fabric Controller, such as Endpoint Locator (EPL), Layer 4-Layer 7 services (L4-L7 services), VXLAN OAM, and so on, start using HTTPS instead of HTTP.

|

||

|

Enable Policy-Based Routing (PBR) |

Check this check box to enable routing of packets based on the specified policy. Starting with Cisco NX-OS Release 7.0(3)I7(1), this feature works on Cisco Nexus 9000 Series switches with Nexus 9000 Cloud Scale (Tahoe) ASICs. This feature is used along with the Layer 4-Layer 7 service workflow. For information on Layer 4-Layer 7 service, refer to the Layer 4-Layer 7 Service chapter. |

||

|

Enable Strict Config Compliance |

Enable the Strict Config Compliance feature by selecting this check box. It enables bi-directional compliance checks to flag additional configs in the running config that are not in the intent/expected config. By default, this feature is disabled. |

||

|

Enable AAA IP Authorization |

Enables AAA IP authorization, when IP Authorization is enabled in the remote authentication server. This is required to support Nexus Dashboard Fabric Controller in scenarios where customers have strict control of which IP addresses can have access to the switches. |

||

|

Enable NDFC as Trap Host |

Select this check box to enable Nexus Dashboard Fabric Controller as an SNMP trap destination. Typically, for a native HA Nexus Dashboard Fabric Controller deployment, the eth1 VIP IP address will be configured as SNMP trap destination on the switches. By default, this check box is enabled. |

||

|

Anycast Border Gateway advertise-pip |

Enables advertising the Anycast Border Gateway PIP as a VTEP. This setting takes effect on MSD fabric 'Recalculate Config'. |

||

|

Greenfield Cleanup Option |

Enable the switch cleanup option for switches imported into Nexus Dashboard Fabric Controller with Preserve-Config=No, without a switch reload. This option is typically recommended only for the fabric environments with Cisco Nexus 9000v Switches to improve on the switch clean up time. The recommended option for Greenfield deployment is to employ Bootstrap or switch cleanup with a reboot. In other words, this option should be unchecked. |

||

|

Enable Precision Time Protocol (PTP) |

Enables PTP across a fabric. When you check this check box, PTP is enabled globally and on core-facing interfaces. Additionally, the PTP Source Loopback Id and PTP Domain Id fields are editable. For more information, see Precision Time Protocol for Easy Fabric. |

||

|

PTP Source Loopback Id |

Specifies the loopback interface ID that is used as the source IP address for all PTP packets. The valid values range from 0 to 1023. The PTP loopback ID cannot be the same as the RP, Phantom RP, NVE, or MPLS loopback ID. Otherwise, an error is generated. The PTP loopback ID can be the same as the BGP loopback or a user-defined loopback that is created from Nexus Dashboard Fabric Controller. If the PTP loopback ID is not found during Deploy Config, the following error is generated: Loopback interface to use for PTP source IP is not found. Create PTP loopback interface on all the devices to enable PTP feature. |

||

|

PTP Domain Id |

Specifies the PTP domain ID on a single network. The valid values range from 0 to 127. |

||

|

Enable MPLS Handoff |

Check the check box to enable the MPLS Handoff feature. For more information, see the MPLS SR and LDP Handoff chapter in External/WAN Layer 3 Connectivity for VXLAN BGP EVPN Fabrics. |

||

|

Underlay MPLS Loopback Id |

Specifies the underlay MPLS loopback ID. The default value is 101. |

||

|

Enable TCAM Allocation |

TCAM commands are automatically generated for VXLAN and vPC Fabric Peering when enabled. |

||

|

Enable Default Queuing Policies |

Check this check box to apply QoS policies on all the switches in this fabric. To remove the QoS policies that you applied on all the switches, uncheck this check box, update all the configurations to remove the references to the policies, and save and deploy. Pre-defined QoS configurations are included that can be used for various Cisco Nexus 9000 Series Switches. When you check this check box, the appropriate QoS configurations are pushed to the switches in the fabric. The system queuing is updated when configurations are deployed to the switches. You can perform the interface marking with defined queuing policies, if required, by adding the required configuration to the per interface freeform block. Review the actual queuing policies by opening the policy file in the template editor. From Cisco Nexus Dashboard Fabric Controller Web UI, choose Operations > Templates. Search for the queuing policies by the policy file name, for example, queuing_policy_default_8q_cloudscale. Choose the file. From the Actions drop-down list, select Edit template content to edit the policy. See the Cisco Nexus 9000 Series NX-OS Quality of Service Configuration Guide for platform specific details. |

||

|

N9K Cloud Scale Platform Queuing Policy |

Choose the queuing policy from the drop-down list to be applied to all Cisco Nexus 9200 Series Switches and the Cisco Nexus 9000 Series Switches that end with EX, FX, and FX2 in the fabric. The valid values are queuing_policy_default_4q_cloudscale and queuing_policy_default_8q_cloudscale. Use the queuing_policy_default_4q_cloudscale policy for FEXes. You can change from the queuing_policy_default_4q_cloudscale policy to the queuing_policy_default_8q_cloudscale policy only when FEXes are offline. |

||

|

N9K R-Series Platform Queuing Policy |

Choose the queuing policy from the drop-down list to be applied to all Cisco Nexus switches that end with R in the fabric. The valid value is queuing_policy_default_r_series. |

||

|

Other N9K Platform Queuing Policy |

Choose the queuing policy from the drop-down list to be applied to all other switches in the fabric other than the switches mentioned in the above two options. The valid value is queuing_policy_default_other. |

||

|

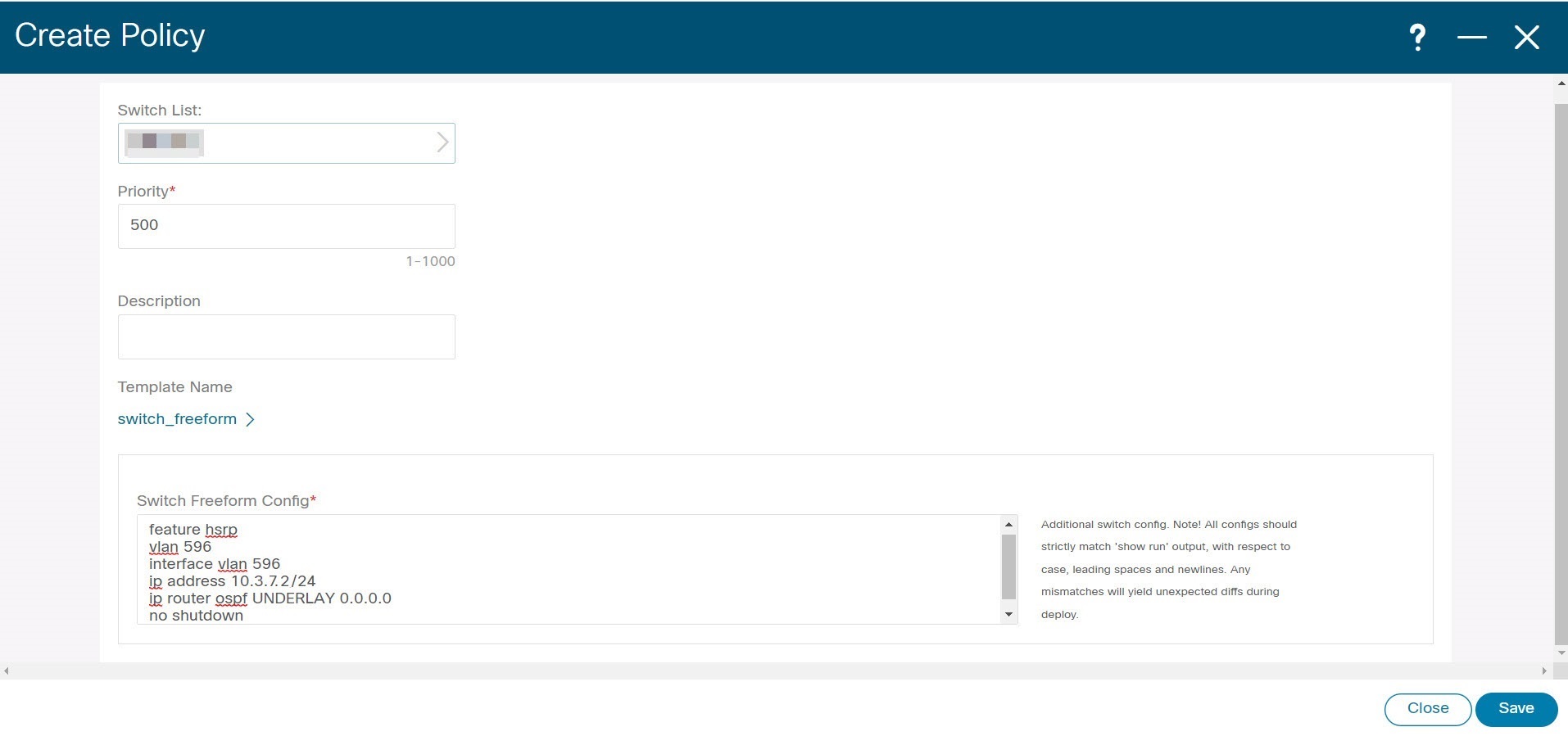

Enable MACsec |

Enables MACsec for the fabric. For more information, see Enabling MACsec. Freeform CLIs - Fabric level freeform CLIs can be added while creating or editing a fabric. They are applicable to switches across the fabric. You must add the configurations as displayed in the running configuration, without indentation. Switch level freeform configurations should be added via the switch freeform on NDFC. For more information, see Enabling Freeform Configurations on Fabric Switches. |

||

|

Leaf Freeform Config |

Add CLIs that should be added to switches that have the Leaf, Border, and Border Gateway roles. |

||

|

Spine Freeform Config |

Add CLIs that should be added to switches that have the Spine, Border Spine, Border Gateway Spine, and Super Spine roles. |

||

|

Intra-fabric Links Additional Config |

Add CLIs that should be added to the intra-fabric links. |

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.
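To make the Brownfield Overlay Network Name Format syntax more concrete, the following Python sketch expands a format string into a network name and checks it against the character rule stated in the table. This is only an illustration of the documented syntax under the assumption of simple placeholder substitution; the function name is hypothetical and the code does not reflect how Nexus Dashboard Fabric Controller implements the feature.

```python
import re

# Default value quoted in the field description.
DEFAULT_FORMAT = "Auto_Net_VNI$$VNI$$_VLAN$$VLAN_ID$$"

# Letters, digits, underscore, and hyphen only (no white spaces or other special characters).
_ALLOWED_NAME = re.compile(r"[A-Za-z0-9_-]+")


def render_network_name(name_format, vni, vlan_id):
    """Expand $$VNI$$ and $$VLAN_ID$$ in a name format (hypothetical helper)."""
    # $$VNI$$ is the only element of the syntax that is not optional.
    if "$$VNI$$" not in name_format:
        raise ValueError("the format must contain $$VNI$$")

    name = name_format.replace("$$VNI$$", str(vni))
    name = name.replace("$$VLAN_ID$$", str(vlan_id))

    if not _ALLOWED_NAME.fullmatch(name):
        raise ValueError(f"invalid characters in network name: {name!r}")
    return name


print(render_network_name(DEFAULT_FORMAT, 12345, 1234))
print(render_network_name("Site_VNI$$VNI$$_VLAN$$VLAN_ID$$", 12345, 1234))
```

The second call reproduces the example name from the table, Site_VNI12345_VLAN1234.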

Resources

The fields in the Resources tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

|

Field |

Description |

||

|---|---|---|---|

|

Manual Underlay IP Address Allocation |

Do not check this check box if you are transitioning your VXLAN fabric management to Nexus Dashboard Fabric Controller.

|

||

|

Underlay Routing Loopback IP Range |

Specifies loopback IP addresses for the protocol peering. |

||

|

Underlay VTEP Loopback IP Range |

Specifies loopback IP addresses for VTEPs. |

||

|

Underlay RP Loopback IP Range |

Specifies the anycast or phantom RP IP address range. |

||

|

Underlay Subnet IP Range |

IP addresses for underlay P2P routing traffic between interfaces. |

||

|

Underlay MPLS Loopback IP Range |

Specifies the underlay MPLS loopback IP address range. For eBGP between Border of Easy A and Easy B, Underlay routing loopback and Underlay MPLS loopback IP range must be a unique range. It should not overlap with IP ranges of the other fabrics, else VPNv4 peering will not come up. |

||

|

Underlay Routing Loopback IPv6 Range |

Specifies the Loopback0 IPv6 address range. |

||

|

Underlay VTEP Loopback IPv6 Range |

Specifies Loopback1 and Anycast Loopback IPv6 Address Range. |

||

|

Underlay Subnet IPv6 Range |

Specifies IPv6 Address range to assign Numbered and Peer Link SVI IPs. |

||

|

BGP Router ID Range for IPv6 Underlay |

Specifies BGP router ID range for IPv6 underlay. |

||

|

Layer 2 VXLAN VNI Range |

Specifies the overlay VXLAN VNI range for the fabric (min:1, max:16777214). |

||

|

Layer 3 VXLAN VNI Range |

Specifies the overlay VRF VNI range for the fabric (min:1, max:16777214). |

||

|

Network VLAN Range |

VLAN range for the per switch overlay network (min:2, max:4094). |

||

|

VRF VLAN Range |

VLAN range for the per switch overlay Layer 3 VRF (min:2, max:4094). |

||

|

Subinterface Dot1q Range |

Specifies the subinterface range when L3 sub interfaces are used. |

||

|

VRF Lite Deployment |

Specify the VRF Lite method for extending inter fabric connections. The VRF Lite Subnet IP Range field specifies resources reserved for IP address used for VRF Lite when VRF Lite IFCs are auto-created. If you select Back2Back&ToExternal, then VRF Lite IFCs are auto-created. |

||

|

Auto Deploy for Peer |

This check box is applicable for VRF Lite deployment. When you select this checkbox, auto-created VRF Lite IFCs will have the Auto Generate Configuration for Peer field in the VRF Lite tab set. To access VRF Lite IFC configuration, navigate to the Links tab, select the particular link, and then choose . You can check or uncheck the check box when the VRF Lite Deployment field is not set to Manual. This configuration only affects the new auto-created IFCs and does not affect the existing IFCs. You can edit an auto-created IFC and check or uncheck the Auto Generate Configuration for Peer field. This setting takes priority always. |

||

|

Auto Deploy Default VRF |

When you select this check box, the Auto Generate Configuration on default VRF field is automatically enabled for auto-created VRF Lite IFCs. You can check or uncheck this check box when the VRF Lite Deployment field is not set to Manual. The Auto Generate Configuration on default VRF field when set, automatically configures the physical interface for the border device, and establishes an EBGP connection between the border device and the edge device or another border device in a different VXLAN EVPN fabric. |

||

|

Auto Deploy Default VRF for Peer |

When you select this check box, the Auto Generate Configuration for NX-OS Peer on default VRF field is automatically enabled for auto-created VRF Lite IFCs. You can check or uncheck this check box when the VRF Lite Deployment field is not set to Manual. The Auto Generate Configuration for NX-OS Peer on default VRF field when set, automatically configures the physical interface and the EBGP commands for the peer NX-OS switch.

|

||

|

Redistribute BGP Route-map Name |

Defines the route map for redistributing the BGP routes in default VRF. |

||

|

VRF Lite Subnet IP Range and VRF Lite Subnet Mask |

These fields are populated with the DCI subnet details. Update the fields as needed. The values shown in your screen are automatically generated. If you want to update the IP address ranges, VXLAN Layer 2/Layer 3 network ID ranges or the VRF/Network VLAN ranges, ensure the following:

|

||

|

Service Network VLAN Range |

Specifies a VLAN range in the Service Network VLAN Range field. This is a per switch overlay service network VLAN range. The minimum allowed value is 2 and the maximum allowed value is 3967. |

||

|

Route Map Sequence Number Range |

Specifies the route map sequence number range. The minimum allowed value is 1 and the maximum allowed value is 65534. |

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.
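The Resources table lists minimum and maximum values for several ranges. The following Python sketch shows one way to check a planned range against those limits before entering it. The dictionary keys, the function names, and the "low-high" text format are assumptions made for this example; only the numeric limits come from the table.

```python
# Limits quoted in the Resources table; the keys are hypothetical labels.
LIMITS = {
    "l2_vni": (1, 16777214),
    "l3_vni": (1, 16777214),
    "network_vlan": (2, 4094),
    "vrf_vlan": (2, 4094),
    "service_network_vlan": (2, 3967),
    "route_map_sequence": (1, 65534),
}


def parse_range(text):
    """Parse 'low-high' into a pair of integers (assumed input format)."""
    low, separator, high = text.partition("-")
    if not separator:
        raise ValueError(f"expected 'low-high', got {text!r}")
    return int(low), int(high)


def range_is_valid(kind, text):
    """Return True if the range is ordered and inside the documented limits."""
    low, high = parse_range(text)
    minimum, maximum = LIMITS[kind]
    return minimum <= low <= high <= maximum


print(range_is_valid("l2_vni", "30000-49000"))
print(range_is_valid("service_network_vlan", "3000-4000"))
```

The second call reports an invalid range because 4000 exceeds the documented maximum of 3967 for the service network VLAN range.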

Manageability

The fields in the Manageability tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

|

Field |

Description |

|---|---|

|

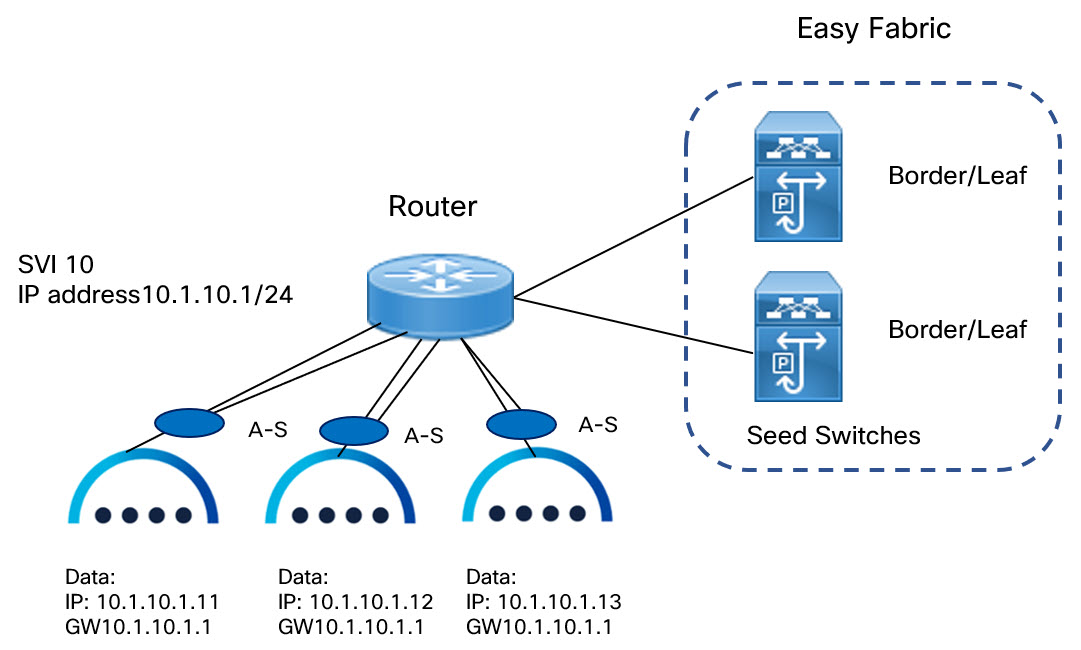

Inband Management |

Enabling this allows the management of the switches over their front panel interfaces. The Underlay Routing Loopback interface is used for discovery. If enabled, switches cannot be added to the fabric over their out-of-band (OOB) mgmt0 interface. To manage easy fabrics through Inband management, ensure that you have chosen Data in NDFC Web UI, Settings > Server Settings > Admin. Both inband management and out-of-band connectivity (mgmt0) are supported for this setting. For more information, see Inband Management and Inband POAP in Easy Fabrics. |

|

DNS Server IPs |

Specifies the comma separated list of IP addresses (v4/v6) of the DNS servers. |

|

DNS Server VRFs |

Specifies one VRF for all DNS servers or a comma separated list of VRFs, one per DNS server. |

|

NTP Server IPs |

Specifies the comma separated list of IP addresses (v4/v6) of the NTP servers. |

|

NTP Server VRFs |

Specifies one VRF for all NTP servers or a comma separated list of VRFs, one per NTP server. |

|

Syslog Server IPs |

Specifies the comma separated list of IP addresses (v4/v6) of the syslog servers, if used. |

|

Syslog Server Severity |

Specifies the comma separated list of syslog severity values, one per syslog server. The minimum value is 0 and the maximum value is 7. To specify a higher severity, enter a higher number. |

|

Syslog Server VRFs |

Specifies one VRF for all syslog servers or a comma separated list of VRFs, one per syslog server. |

|

AAA Freeform Config |

Specifies the AAA freeform configurations. If AAA configurations are specified in the fabric settings, switch_freeform PTI with source as UNDERLAY_AAA and description as AAA Configurations will be created. |

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.
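The server fields in the Manageability table follow a common pattern: a comma separated list of addresses, plus either one VRF for all servers or one VRF per server. The following Python sketch illustrates that pairing for the syslog fields, including the severity range of 0 to 7. All function names are hypothetical, and the code only mirrors the rules stated in the table.

```python
import ipaddress


def split_csv(value):
    """Split a comma separated field into trimmed, non-empty items."""
    return [item.strip() for item in value.split(",") if item.strip()]


def per_server(servers, values, label):
    """Accept either one value for all servers or one value per server."""
    if len(values) == 1:
        return values * len(servers)
    if len(values) == len(servers):
        return values
    raise ValueError(f"{label}: expected 1 or {len(servers)} values, got {len(values)}")


def build_syslog_servers(ips, severities, vrfs):
    """Return (ip, severity, vrf) tuples, one per syslog server."""
    servers = split_csv(ips)
    for address in servers:
        ipaddress.ip_address(address)  # raises ValueError for a malformed IPv4/IPv6 address

    levels = [int(item) for item in split_csv(severities)]
    if len(levels) != len(servers):
        raise ValueError("one severity value is required per syslog server")
    if any(not 0 <= level <= 7 for level in levels):
        raise ValueError("severity values must be between 0 and 7")

    vrf_names = per_server(servers, split_csv(vrfs), "VRF")
    return list(zip(servers, levels, vrf_names))


for entry in build_syslog_servers("10.1.1.1, 2001:db8::1", "5, 7", "management"):
    print(entry)
```

The same `per_server` helper applies to the DNS and NTP fields, which use the identical "one VRF for all or one per server" rule.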

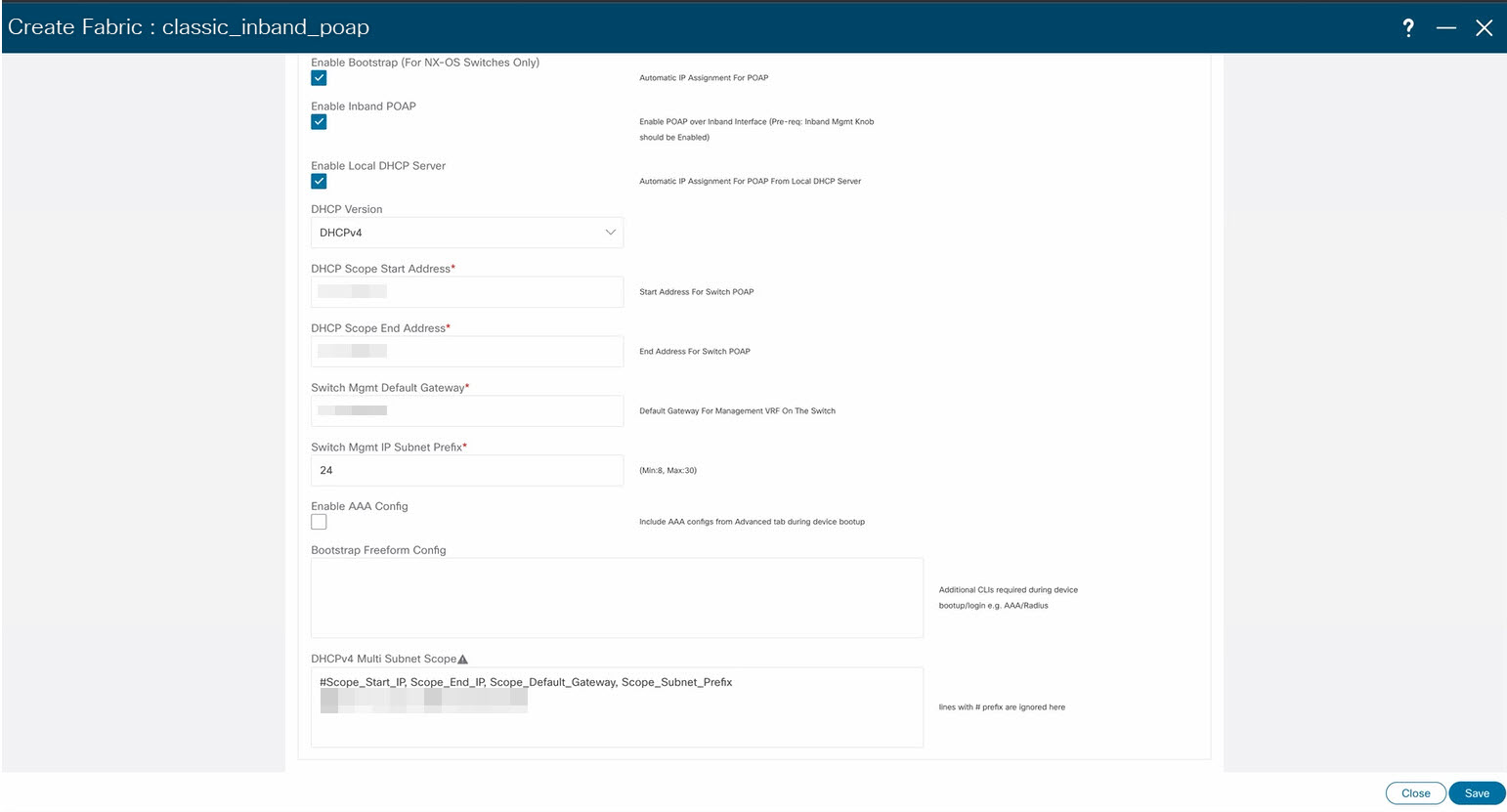

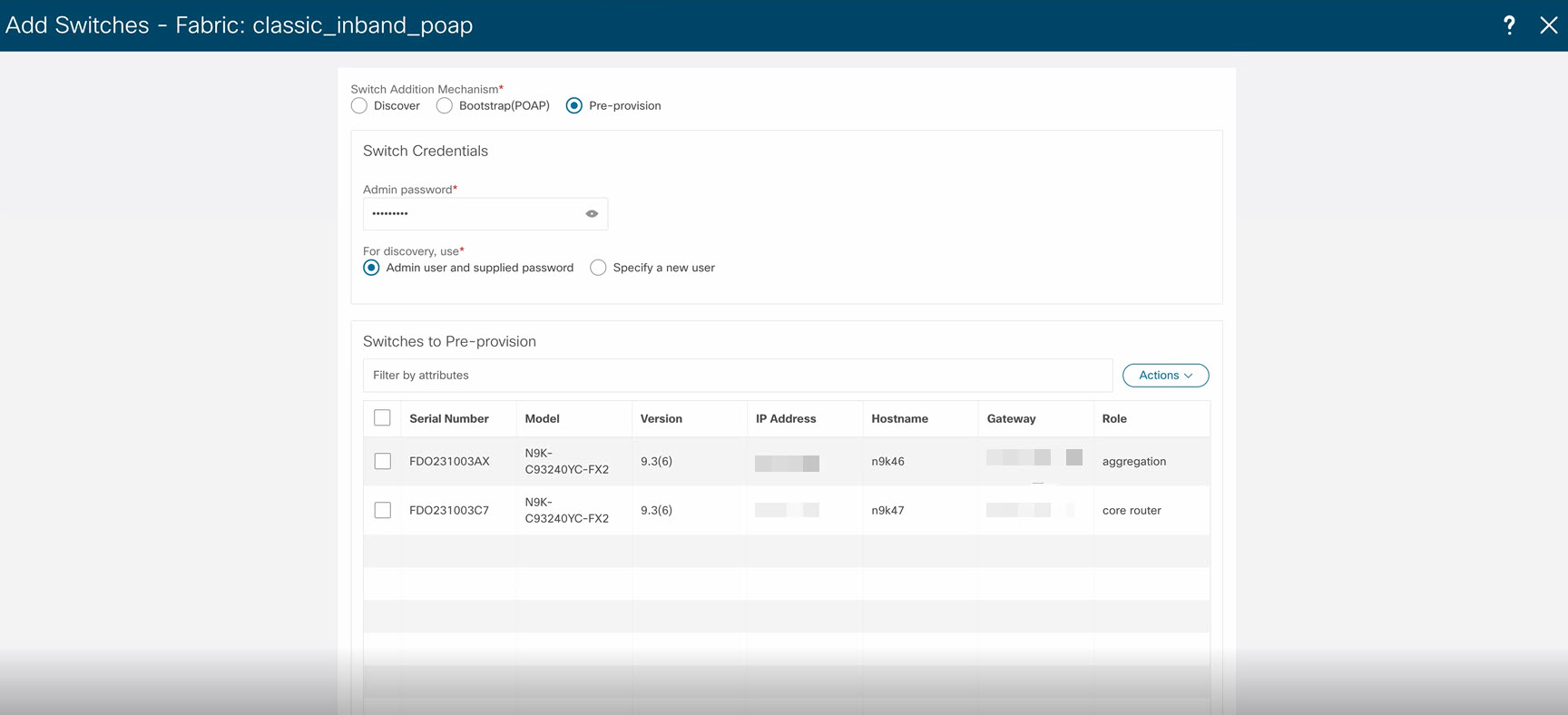

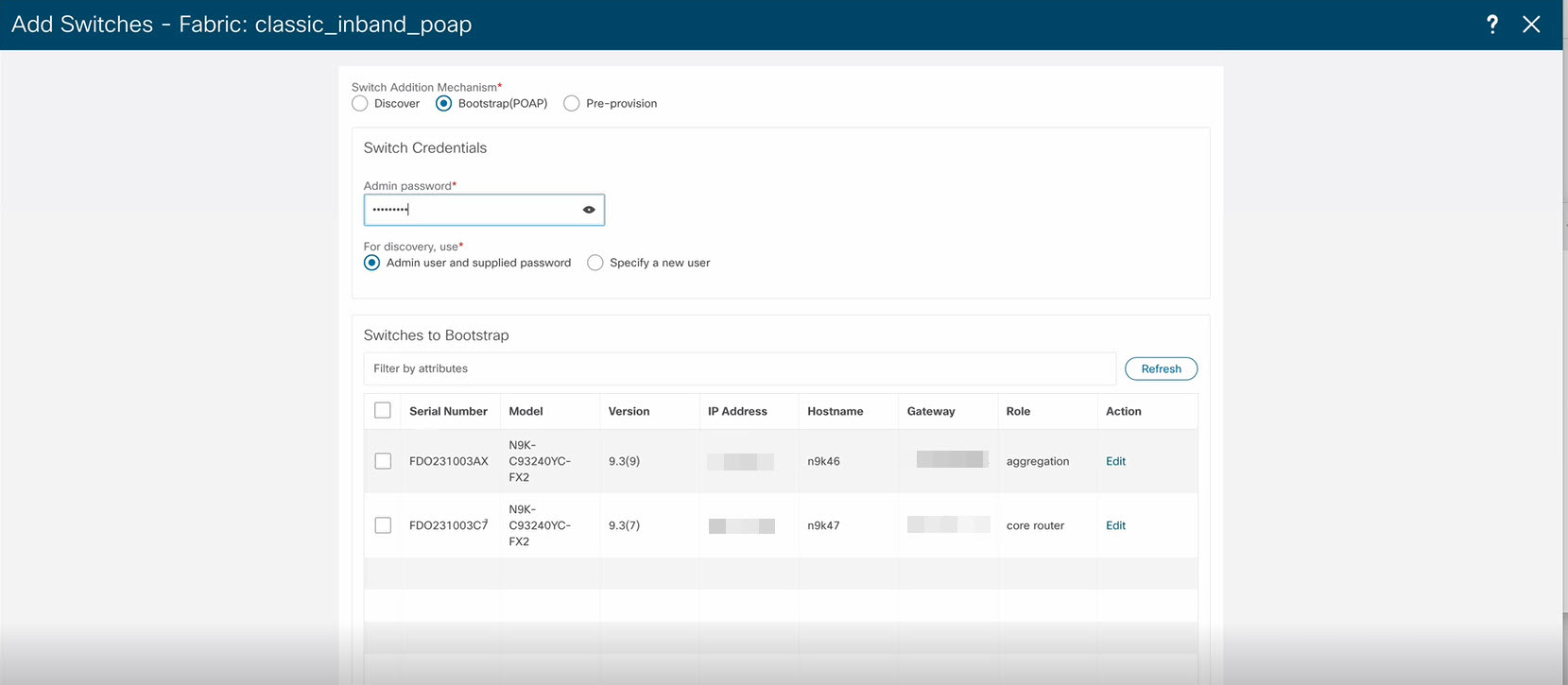

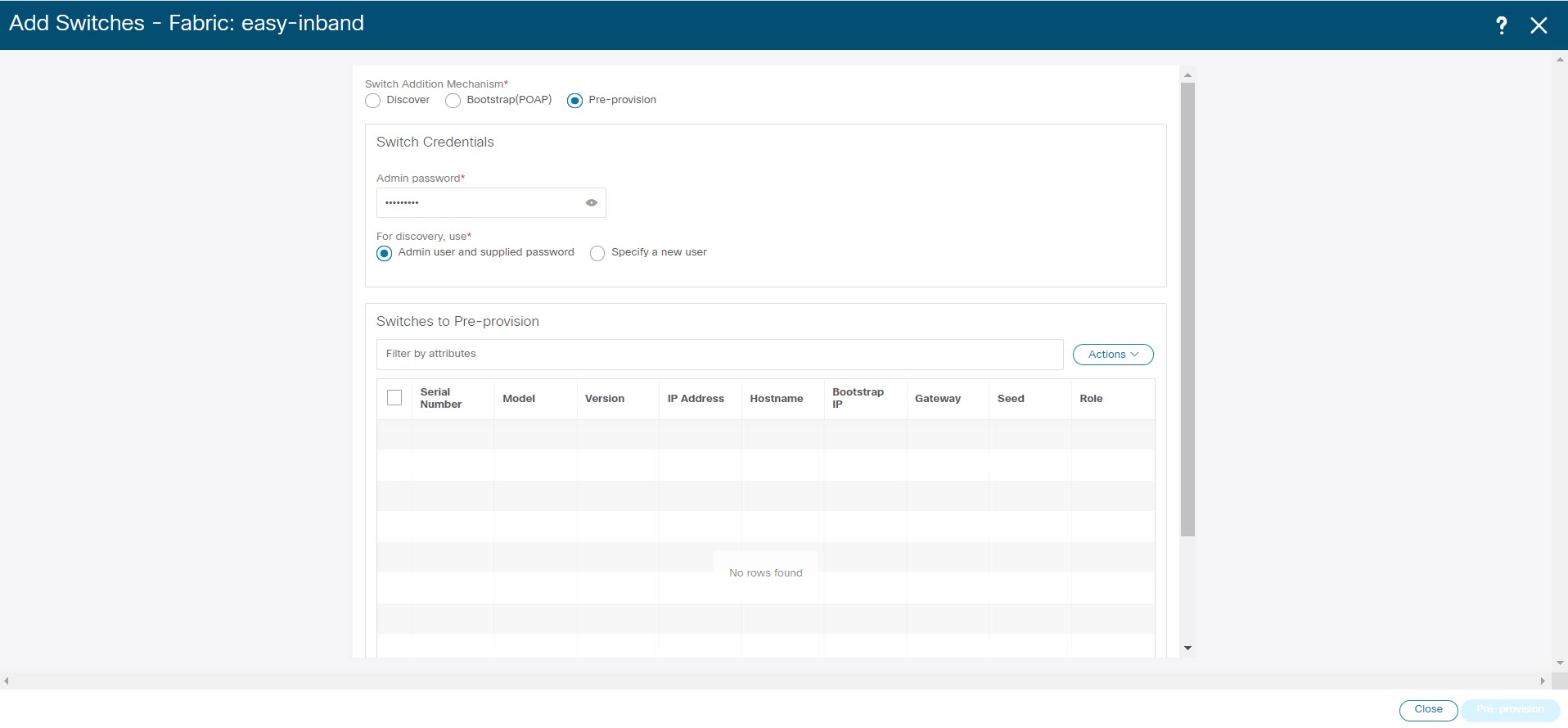

Bootstrap

The fields in the Bootstrap tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

|

Field |

Description |

||

|---|---|---|---|

|

Enable Bootstrap |

Select this check box to enable the bootstrap feature. Bootstrap allows easy day-0 import and bring-up of new devices into an existing fabric. Bootstrap leverages the NX-OS POAP functionality. Starting from Cisco NDFC Release 12.1.1e, to add more switches and for POAP capability, check the Enable Bootstrap and Enable Local DHCP Server check boxes. For more information, see Inband Management and Inband POAP in Easy Fabrics. After you enable bootstrap, you can enable the DHCP server for automatic IP address assignment using one of the following methods:

|

||

|

Enable Local DHCP Server |

Select this check box to initiate enabling of automatic IP address assignment through the local DHCP server. When you select this check box, the DHCP Scope Start Address and DHCP Scope End Address fields become editable. If you do not select this check box, Nexus Dashboard Fabric Controller uses the remote or external DHCP server for automatic IP address assignment. |

||

|

DHCP Version |

Select DHCPv4 or DHCPv6 from this drop-down list. When you select DHCPv4, the Switch Mgmt IPv6 Subnet Prefix field is disabled. If you select DHCPv6, the Switch Mgmt IP Subnet Prefix is disabled.

|

||

|

DHCP Scope Start Address and DHCP Scope End Address |

Specifies the first and last IP addresses of the IP address range to be used for the switch out of band POAP. |

||

|

Switch Mgmt Default Gateway |

Specifies the default gateway for the management VRF on the switch. |

||

|

Switch Mgmt IP Subnet Prefix |

Specifies the prefix for the Mgmt0 interface on the switch. The prefix should be between 8 and 30. DHCP scope and management default gateway IP address specification - If you specify the management default gateway IP address 10.0.1.1 and subnet mask 24, ensure that the DHCP scope is within the specified subnet, between 10.0.1.2 and 10.0.1.254. |

||

|

Switch Mgmt IPv6 Subnet Prefix |

Specifies the IPv6 prefix for the Mgmt0 interface on the switch. The prefix should be between 112 and 126. This field is editable if you enable IPv6 for DHCP. |

||

|

Enable AAA Config |

Select this check box to include AAA configurations from the Manageability tab as part of the device start-up config post bootstrap. |

||

|

DHCPv4/DHCPv6 Multi Subnet Scope |

Specifies the subnet scopes, one per line. This field is editable after you check the Enable Local DHCP Server check box. Define each scope in the following format: DHCP Scope Start Address, DHCP Scope End Address, Switch Management Default Gateway, Switch Management Subnet Prefix. For example: 10.6.0.2, 10.6.0.9, 10.6.0.1, 24 |

||

|

Bootstrap Freeform Config |

(Optional) Enter additional commands as needed. For example, if you require some additional configurations to be pushed to the device and be available post device bootstrap, they can be captured in this field, to save the desired intent. After the devices boot up, they will contain the configuration defined in the Bootstrap Freeform Config field. Copy-paste the running-config to a freeform config field with correct indentation, as seen in the running configuration on the NX-OS switches. The freeform config must match the running config. For more information, see Enabling Freeform Configurations on Fabric Switches. |

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.
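The scope format described for the DHCPv4/DHCPv6 Multi Subnet Scope field, and the requirement that the DHCP scope fall within the subnet of the management default gateway, can be checked with the Python standard library. The following is a minimal sketch under those assumptions; the function name and the returned dictionary layout are hypothetical.

```python
import ipaddress


def parse_scope_line(line):
    """Parse 'start, end, gateway, prefix' and check the scope against the subnet."""
    # Unpacking raises ValueError if the line does not have exactly four fields.
    start, end, gateway, prefix = [part.strip() for part in line.split(",")]

    start_ip = ipaddress.ip_address(start)
    end_ip = ipaddress.ip_address(end)
    # strict=False derives the subnet from the gateway address and prefix length.
    network = ipaddress.ip_network(f"{gateway}/{prefix}", strict=False)

    if start_ip not in network or end_ip not in network:
        raise ValueError(f"scope {start}-{end} is outside {network}")
    if start_ip > end_ip:
        raise ValueError("scope start address is higher than the end address")

    return {
        "start": start_ip,
        "end": end_ip,
        "gateway": ipaddress.ip_address(gateway),
        "network": network,
    }


scope = parse_scope_line("10.6.0.2, 10.6.0.9, 10.6.0.1, 24")
print(scope["network"])
```

With the example line from the table, the derived subnet is 10.6.0.0/24, and both scope addresses fall inside it.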

Configuration Backup

The fields in the Configuration Backup tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

|

Field |

Description |

||

|---|---|---|---|

|

Hourly Fabric Backup |

Select the check box to enable an hourly backup of fabric configurations and the intent. The hourly backups are triggered during the first 10 minutes of the hour. |

||

|

Scheduled Fabric Backup |

Check the check box to enable a daily backup. This backup tracks changes in running configurations on the fabric devices that are not tracked by configuration compliance. |

||

|

Scheduled Time |

Specify the scheduled backup time in a 24-hour format. This field is enabled if you check the Scheduled Fabric Backup check box. Select both the check boxes to enable both backup processes. The backup process is initiated after you click Save. The scheduled backups are triggered at the time you specify, with a delay of up to two minutes. The scheduled backups are triggered regardless of the configuration deployment status. The number of fabric backups that will be retained on NDFC is decided by the . The number of archived files that can be retained is set in the # Number of archived files per device to be retained: field in the Server Properties window.

You can also initiate the fabric backup in the fabric topology window. Click Backup Now in the Actions pane. |

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Flow Monitor

The fields in the Flow Monitor tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

|

Field |

Description |

||

|---|---|---|---|

|

Enable Netflow |

Check this check box to enable Netflow on VTEPs for this fabric. By default, Netflow is disabled. When enabled, the Netflow configuration is applied to all VTEPs that support Netflow.

If netflow is not enabled at the fabric level, an error message is generated when you enable netflow at the interface, network, or vrf level. For information about Netflow support for Cisco NDFC, refer to Netflow Support. |

In the Netflow Exporter area, click Actions > Add to add one or more Netflow exporters. This exporter is the receiver of the netflow data. The fields on this screen are:

-

Exporter Name – Specifies the name of the exporter.

-

IP – Specifies the IP address of the exporter.

-

VRF – Specifies the VRF over which the exporter is routed.

-

Source Interface – Enter the source interface name.

-

UDP Port – Specifies the UDP port over which the netflow data is exported.

Click Save to configure the exporter. Click Cancel to discard. You can also choose an existing exporter and select Actions > Edit or Actions > Delete to perform relevant actions.

In the Netflow Record area, click Actions > Add to add one or more Netflow records. The fields on this screen are:

-

Record Name – Specifies the name of the record.

-

Record Template – Specifies the template for the record. Enter one of the record templates names. In Release 12.0.2, the following two record templates are available for use. You can create custom netflow record templates. Custom record templates saved in the template library are available for use here.

-

netflow_ipv4_record – to use the IPv4 record template.

-

netflow_l2_record – to use the Layer 2 record template.

-

Is Layer2 Record – Check this check box if the record is for Layer2 netflow.

Click Save to configure the record. Click Cancel to discard. You can also choose an existing record and select Actions > Edit or Actions > Delete to perform relevant actions.

In the Netflow Monitor area, click Actions > Add to add one or more Netflow monitors. The fields on this screen are:

-

Monitor Name – Specifies the name of the monitor.

-

Record Name – Specifies the name of the record for the monitor.

-

Exporter1 Name – Specifies the name of the exporter for the netflow monitor.

-

Exporter2 Name – (optional) Specifies the name of the secondary exporter for the netflow monitor.

The record name and exporters referred to in each netflow monitor must be defined in "Netflow Record" and "Netflow Exporter".

Click Save to configure the monitor. Click Cancel to discard. You can also choose an existing monitor and select Actions > Edit or Actions > Delete to perform relevant actions.

What's next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.
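The Flow Monitor tab requires that every record and exporter named in a Netflow monitor is also defined in the Netflow Record and Netflow Exporter areas. The following Python sketch shows how such a cross-reference check could look when the settings are kept as plain dictionaries. The dictionary keys and the function name are assumptions made for this example and do not correspond to an NDFC data model.

```python
def find_undefined_references(exporters, records, monitors):
    """List monitor references that point to undefined records or exporters."""
    exporter_names = {exporter["name"] for exporter in exporters}
    record_names = {record["name"] for record in records}

    problems = []
    for monitor in monitors:
        if monitor["record"] not in record_names:
            problems.append((monitor["name"], "record", monitor["record"]))
        # Exporter2 is optional, so a missing or empty value is skipped.
        for key in ("exporter1", "exporter2"):
            name = monitor.get(key)
            if name and name not in exporter_names:
                problems.append((monitor["name"], key, name))
    return problems


exporters = [{"name": "exp1", "ip": "192.0.2.10", "vrf": "management", "udp_port": 9995}]
records = [{"name": "rec_v4", "template": "netflow_ipv4_record", "is_layer2": False}]
monitors = [
    {"name": "mon1", "record": "rec_v4", "exporter1": "exp1"},
    {"name": "mon2", "record": "rec_l2", "exporter1": "exp1", "exporter2": "exp2"},
]

for problem in find_undefined_references(exporters, records, monitors):
    print(problem)
```

In this sample data, mon2 is reported twice: once for the undefined record rec_l2 and once for the undefined secondary exporter exp2. The addresses and port are placeholder values.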

Configuring Fabrics with eBGP Underlay

You can use the BGP Fabric fabric template to create a fabric with eBGP underlay. For more information, see Configuring a Fabric with eBGP Underlay.

IPv6 Underlay Support for Easy Fabric

You can create an Easy fabric with IPv6 only underlay. The IPv6 underlay is supported only for the Data Center VXLAN EVPN template. For more information, see Configuring a VXLANv6 Fabric.

Overview of Tenant Routed Multicast

Tenant Routed Multicast (TRM) enables multicast forwarding on the VXLAN fabric that uses a BGP-based EVPN control plane. TRM provides multi-tenancy aware multicast forwarding between senders and receivers within the same or different subnets, local to a VTEP or across VTEPs.

With TRM enabled, multicast forwarding in the underlay is leveraged to replicate VXLAN encapsulated routed multicast traffic. A Default Multicast Distribution Tree (Default-MDT) is built per-VRF. This is in addition to the existing multicast groups for Layer-2 VNI Broadcast, Unknown Unicast, and Layer-2 multicast replication group. The individual multicast group addresses in the overlay are mapped to the respective underlay multicast address for replication and transport. The BGP-based approach allows the VXLAN BGP EVPN fabric with TRM to operate as a fully distributed Overlay Rendezvous-Point (RP), with the RP presence on every edge-device (VTEP).

A multicast-enabled data center fabric is typically part of an overall multicast network. Multicast sources, receivers, and multicast rendezvous points might reside inside the data center but also might be inside the campus or externally reachable via the WAN. TRM allows a seamless integration with existing multicast networks. It can leverage multicast rendezvous points external to the fabric. Furthermore, TRM allows for tenant-aware external connectivity using Layer-3 physical interfaces or subinterfaces.

For more information, see the following:

Overview of Tenant Routed Multicast with VXLAN EVPN Multi-Site

Tenant Routed Multicast with Multi-Site enables multicast forwarding across multiple VXLAN EVPN fabrics connected via Multi-Site.

The following two use cases are supported:

-

Use Case 1: TRM provides Layer 2 and Layer 3 multicast services for sources and receivers that are located in different sites.

-

Use Case 2: Extending TRM functionality from the VXLAN fabric to sources and receivers external to the fabric.

TRM Multi-Site is an extension of the BGP-based TRM solution that enables multiple TRM sites with multiple VTEPs to connect to each other and provide multicast services across sites in the most efficient way possible. Each TRM site operates independently, and the border gateway (BGW) on each site stitches the sites together. Each site can have multiple BGWs. In a given site, the BGW peers with a Route Server or with the BGWs of other sites to exchange EVPN and MVPN routes. On the BGW, BGP imports routes into the local VRF/L3VNI/L2VNI and then advertises those imported routes into the fabric or the WAN, depending on where the routes were learned.

Tenant Routed Multicast with VXLAN EVPN Multi-Site Operations

The operations for TRM with VXLAN EVPN Multi-Site are as follows:

-

Each site is represented by Anycast VTEP BGWs. Designated forwarder (DF) election across the BGWs ensures that packets are not duplicated.

-

Traffic between Border Gateways uses the ingress replication mechanism. Traffic is encapsulated with a VXLAN header followed by an IP header.

-

Each site receives only one copy of the packet.

-

Multicast source and receiver information is propagated across sites by BGP on the Border Gateways that are configured with TRM.

-

The BGW on each site receives the multicast packet and re-encapsulates it before sending it to the local site.

For information about guidelines and limitations for TRM with VXLAN EVPN Multi-Site, see Configuring Tenant Routed Multicast.
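The ingress replication behavior listed above can be pictured with a small model. The following Python sketch is illustrative only: the function name, site names, and Anycast VTEP addresses are hypothetical, and DF election inside a site is not modeled. It shows only that the source-site BGW sends one unicast copy per remote site that has receivers.

```python
from typing import Dict, List, Tuple


def replicate_between_sites(
    source_site: str,
    sites_with_receivers: List[str],
    anycast_vtep: Dict[str, str],
) -> List[Tuple[str, str]]:
    """Return one (site, Anycast BGW VTEP IP) pair per remote site with receivers.

    Toy model of ingress replication between BGWs: the source-site BGW
    creates a separate unicast VXLAN copy for each remote site, addressed
    to that site's Anycast VTEP. Duplicate entries collapse to one copy.
    """
    copies = []
    for site in sorted(set(sites_with_receivers)):
        if site == source_site:
            # Receivers in the source site are served inside the local fabric.
            continue
        copies.append((site, anycast_vtep[site]))
    return copies


# Hypothetical sites and addresses, for illustration only.
vteps = {"site1": "10.1.1.1", "site2": "10.2.2.2", "site3": "10.3.3.3"}
copies = replicate_between_sites("site1", ["site2", "site3", "site2", "site1"], vteps)
for site, vtep in copies:
    print(f"one copy to {site} via Anycast VTEP {vtep}")
```

Each remote site appears once in the result, which mirrors the statement that each site receives only one copy of the packet.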

Configuring TRM for Single Site Using Cisco Nexus Dashboard Fabric Controller

This section assumes that a VXLAN EVPN fabric has already been provisioned using Cisco Nexus Dashboard Fabric Controller.

Procedure

|

Step 1 |

Enable TRM for the selected Easy Fabric. If the fabric template is Data Center VXLAN EVPN, choose Edit Fabric from the Fabric Overview Actions drop-down list, and then click the Replication tab. The fields on this tab are: Enable Tenant Routed Multicast (TRM): Select the check box to enable TRM, which allows overlay multicast traffic to be supported over EVPN/MVPN in the VXLAN BGP EVPN fabric. Default MDT Address for TRM VRFs: When you select the Enable Tenant Routed Multicast (TRM) check box, the multicast address for TRM traffic is autopopulated. By default, this address is taken from the IP prefix specified in the Multicast Group Subnet field. When you update either field, ensure that the TRM address is chosen from the IP prefix specified in Multicast Group Subnet. Click Save to save the fabric settings. At this point, all the switches turn blue because they are in the pending state. From the Fabric Overview Actions drop-down list, choose Recalculate Config and then choose Deploy Config to enable the following:

For a VXLAN EVPN fabric created using the BGP Fabric fabric template, the Enable Tenant Routed Multicast (TRM) field and the Default MDT Address for TRM VRFs field are on the EVPN tab. |

||

|

Step 2 |

Enable TRM for the VRF. Navigate to Fabric Overview > VRFs > VRFs and edit the selected VRF. Navigate to the Advanced tab and edit the following TRM settings: TRM Enable – Select the check box to enable TRM. If you enable TRM, you must enter the RP address and the underlay multicast address. Is RP External – Select this check box if the RP is external to the fabric. If this check box is cleared, the RP is distributed on every VTEP.

RP Address – Specifies the IP address of the RP. RP Loopback ID – Specifies the loopback ID of the RP, if Is RP External is not enabled. Underlay Mcast Address – Specifies the multicast address associated with the VRF. The multicast address is used for transporting multicast traffic in the fabric underlay. Overlay Mcast Groups – Specifies the multicast group subnet for the specified RP. The value is the group range in the ip pim rp-address command. If the field is empty, 224.0.0.0/24 is used as the default. Click Save to save the settings. The switches go into the pending state and are shown in blue. These settings enable the following:

|

||

|

Step 3 |

Enable TRM for the network. Navigate to Fabric Overview > Networks > Networks. Edit the selected network and navigate to the Advanced tab. Edit the following TRM setting: TRM Enable – Select the check box to enable TRM. Click Save to save the settings. The switches go into the pending state and are shown in blue. The TRM settings enable the following:

|
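The field rules in Steps 1 and 2 can be checked before you save the settings. The following Python sketch is a minimal illustration and is not part of Nexus Dashboard Fabric Controller. The function names, parameter names, and sample addresses are hypothetical. The rules come from the procedure above: the Default MDT address must fall inside the Multicast Group Subnet, and the RP address and underlay multicast address are required when TRM is enabled. Requiring the RP Loopback ID when the RP is not external is an assumption drawn from the field description.

```python
import ipaddress
from typing import List, Optional

# Default quoted in the Overlay Mcast Groups field description above.
DEFAULT_OVERLAY_GROUPS = "224.0.0.0/24"


def check_default_mdt(mdt_address: str, multicast_group_subnet: str) -> List[str]:
    """Check that the Default MDT address lies inside the Multicast Group Subnet."""
    problems = []
    addr = ipaddress.ip_address(mdt_address)
    subnet = ipaddress.ip_network(multicast_group_subnet)
    if not addr.is_multicast:
        problems.append(f"{addr} is not a multicast address")
    if addr not in subnet:
        problems.append(f"{addr} is outside Multicast Group Subnet {subnet}")
    return problems


def check_vrf_trm(
    trm_enable: bool,
    is_rp_external: bool,
    rp_address: str,
    rp_loopback_id: Optional[int],
    underlay_mcast_address: str,
) -> List[str]:
    """Check the VRF-level TRM fields on the Advanced tab."""
    if not trm_enable:
        return []
    problems = []
    if not rp_address:
        problems.append("RP Address is required when TRM is enabled")
    if not underlay_mcast_address:
        problems.append("Underlay Mcast Address is required when TRM is enabled")
    elif not ipaddress.ip_address(underlay_mcast_address).is_multicast:
        problems.append("Underlay Mcast Address must be a multicast address")
    # Assumption: a loopback ID is needed only for an RP inside the fabric.
    if not is_rp_external and rp_loopback_id is None:
        problems.append("RP Loopback ID is required when the RP is not external")
    return problems


def effective_overlay_groups(value: str) -> str:
    """Return the group range that applies when the field is left empty."""
    return value or DEFAULT_OVERLAY_GROUPS


# Illustrative values only.
print(check_default_mdt("239.1.1.0", "239.1.1.0/25"))
print(check_default_mdt("239.2.0.1", "239.1.1.0/25"))
print(check_vrf_trm(True, False, "", None, ""))
```

An empty list means that no problem was found. Each string in a non-empty list names one field to correct.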

Configuring TRM for Multi-Site Using Cisco Nexus Dashboard Fabric Controller

This section assumes that a Multi-Site Domain (MSD) has already been deployed by Cisco Nexus Dashboard Fabric Controller and TRM needs to be enabled.

Procedure

|

Step 1 |

Enable TRM on the BGWs. Navigate to Fabric Overview > VRFs > VRFs. Make sure that the correct DC fabric is selected under Scope, and edit the VRF. Navigate to the Advanced tab and edit the TRM settings. Repeat this process for every DC fabric and its VRFs. TRM Enable – Select the check box to enable TRM. If you enable TRM, you must enter the RP address and the underlay multicast address. Is RP External – Select this check box if the RP is external to the fabric. If this check box is cleared, the RP is distributed on every VTEP.

RP Address – Specifies the IP address of the RP. RP Loopback ID – Specifies the loopback ID of the RP, if Is RP External is not enabled. Underlay Mcast Address – Specifies the multicast address associated with the VRF. The multicast address is used for transporting multicast traffic in the fabric underlay. Overlay Mcast Groups – Specifies the multicast group subnet for the specified RP. The value is the group range in the ip pim rp-address command. If the field is empty, 224.0.0.0/24 is used as the default. Enable TRM BGW MSite – Select the check box to enable TRM on Border Gateway Multi-Site. Click Save to save the settings. The switches go into the pending state and are shown in blue. These settings enable the following:

|

||

|

Step 2 |

Establish MVPN AFI between the BGWs. Double-click the MSD fabric to open the Fabric Overview window. Choose Links and filter the list by the Overlays policy. Select and edit each overlay peering, and check the Enable TRM check box to enable TRM. Click Save to save the settings. The switches go into the pending state and are shown in blue. The TRM settings enable the MVPN peerings between the BGWs, or between the BGWs and the Route Server. |
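Because Step 1 is repeated for every DC fabric and Step 2 for every overlay peering, it is easy to miss one. The following Python sketch models the two steps as a checklist. The data classes, field names, and sample records are hypothetical and do not correspond to any Nexus Dashboard Fabric Controller API. They only mirror the check boxes named in the procedure.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VrfTrm:
    fabric: str          # member DC fabric selected under Scope
    vrf: str
    trm_enable: bool     # "TRM Enable" check box
    trm_bgw_msite: bool  # "Enable TRM BGW MSite" check box


@dataclass
class OverlayLink:
    name: str
    enable_trm: bool     # "Enable TRM" check box on the overlay peering


def multisite_trm_gaps(vrfs: List[VrfTrm], links: List[OverlayLink]) -> List[str]:
    """List every VRF or overlay peering that still lacks a TRM setting."""
    gaps = []
    for v in vrfs:
        if not v.trm_enable:
            gaps.append(f"{v.fabric}/{v.vrf}: TRM Enable is not selected")
        elif not v.trm_bgw_msite:
            gaps.append(f"{v.fabric}/{v.vrf}: Enable TRM BGW MSite is not selected")
    for link in links:
        if not link.enable_trm:
            gaps.append(f"{link.name}: Enable TRM is not selected")
    return gaps


# Hypothetical inventory, for illustration only.
vrfs = [
    VrfTrm("dc1", "tenant-a", True, True),
    VrfTrm("dc2", "tenant-a", True, False),
]
links = [OverlayLink("dc1-bgw1~dc2-bgw1", False)]
gaps = multisite_trm_gaps(vrfs, links)
for gap in gaps:
    print(gap)
```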

vPC Fabric Peering

vPC Fabric Peering provides an enhanced dual-homing access solution without the overhead of dedicating physical ports to the vPC Peer Link. This feature preserves all the characteristics of a traditional vPC. For more information, see the Information about vPC Fabric Peering section in the Cisco Nexus 9000 Series NX-OS VXLAN Configuration Guide.

You can create a virtual peer link for two switches or change the existing physical peer link to a virtual peer link. Cisco NDFC supports vPC fabric peering in both greenfield and brownfield deployments. This feature is applicable for the Data Center VXLAN EVPN and BGP Fabric fabric templates.

Note |

The BGP Fabric fabric does not support brownfield import. |

Guidelines and Limitations

The following are the guidelines and limitations for vPC fabric peering.

-

vPC fabric peering is supported from Cisco NX-OS Release 9.2(3).

-

Only the Cisco Nexus N9K-C9332C, N9K-C9364C, and N9K-C9348GC-FXP switches, and the Cisco Nexus 9000 Series switches whose model names end with FX or FX2, support vPC fabric peering.

-

Cisco Nexus N9K-C93180YC-FX3S and N9K-C93108TC-FX3P platform switches support vPC fabric peering.

-

Cisco Nexus 9300-EX and 9300-FX/FXP/FX2/FX3/GX/GX2 platform switches support vPC Fabric Peering. Cisco Nexus 9200 and 9500 platform switches do not support vPC Fabric Peering. For more information, see the Guidelines and Limitations for vPC Fabric Peering section in the Cisco Nexus 9000 Series NX-OS VXLAN Configuration Guide.

-

If you use other Cisco Nexus 9000 Series switches, a warning appears during Recalculate & Deploy because these switches will be supported in future releases.

-

If you try to pair switches that do not support vPC fabric peering by using the Use Virtual Peerlink option, a warning appears when you deploy the fabric.

-

You can convert a physical peer link to a virtual peer link, and vice versa, with or without overlays.

-

Switches with border gateway leaf roles do not support vPC fabric peering.

-

vPC fabric peering is not supported for Cisco Nexus 9000 Series Modular Chassis and FEXs. An error appears during Recalculate & Deploy if you try to pair any of these.

-

Brownfield deployments and greenfield deployments support vPC fabric peering in Cisco NDFC.

-

However, you can import switches that are connected using physical peer links and convert the physical peer links to virtual peer links after Recalculate & Deploy. To update a TCAM region during the feature configuration, use the hardware access-list tcam ingress-flow redirect 512 command in the configuration terminal.
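The platform guidelines above can be condensed into a rough pre-check. The following Python sketch is an approximation under stated assumptions and is not the validation that NDFC performs. The function name, the return labels, and the rule that maps model strings beginning with N9K-C95 to modular chassis are all hypothetical. The model names and suffixes come from the list above.

```python
# Models named explicitly in the guidelines above.
EXPLICIT_MODELS = {
    "N9K-C9332C",
    "N9K-C9364C",
    "N9K-C9348GC-FXP",
    "N9K-C93180YC-FX3S",
    "N9K-C93108TC-FX3P",
}

# Nexus 9300 platform families listed as supported.
SUPPORTED_SUFFIXES = ("-EX", "-FX", "-FXP", "-FX2", "-FX3", "-GX", "-GX2")


def classify_vpc_fabric_peering(
    model: str, is_fex: bool = False, is_border_gateway: bool = False
) -> str:
    """Return 'supported', 'warning', or 'error' for a switch.

    'error' stands for the cases where the text says an error appears or the
    role is unsupported; 'warning' stands for the other Nexus 9000 switches.
    """
    model = model.strip().upper()
    # Assumption: N9K-C95xx identifies a Nexus 9500 modular chassis.
    if is_fex or is_border_gateway or model.startswith("N9K-C95"):
        return "error"
    if model in EXPLICIT_MODELS:
        return "supported"
    if model.startswith("N9K-C93") and model.endswith(SUPPORTED_SUFFIXES):
        return "supported"
    return "warning"


for m in ("N9K-C93180YC-EX", "N9K-C9336C-FX2", "N9K-C92160YC-X", "N9K-C9504"):
    print(m, classify_vpc_fabric_peering(m))
```

The sketch treats border gateway roles the same way as modular chassis and FEXs for simplicity. The guidelines state only that those roles do not support vPC fabric peering.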

QoS for Fabric vPC-Peering

In the Data Center VXLAN EVPN fabric settings, you can enable QoS on spines for guaranteed delivery of vPC Fabric Peering communication. Additionally, you can specify the QoS policy name.

Note the following guidelines for a greenfield deployment:

-

If QoS is enabled and the fabric is newly created:

-

If the neighbor of a spine or super spine is a virtual vPC, make sure that the neighbor is not honored on invalid links, for example, super spine to leaf, or border to spine when a super spine is present.

-

Based on the Cisco Nexus 9000 Series Switch model, create the recommended global QoS config using the switch_freeform policy template.

-

Enable QoS on fabric links from spine to the correct neighbor.

-

-

If the QoS policy name is edited, make sure that the policy name change is honored everywhere, that is, in the global configuration and on the links.

-

If QoS is disabled, delete all configuration related to QoS for fabric vPC peering.

-

If there is no change, then honor the existing PTI.

For more information about a greenfield deployment, see Creating a VXLAN EVPN Fabric Using the Data Center VXLAN EVPN Template.
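The greenfield guidelines above can be read as a small decision table. The following Python sketch is one possible reading and not the logic that NDFC implements. The function and parameter names are hypothetical, and a fabric where QoS was not previously enabled stands in for the newly created fabric case.

```python
from typing import List, Optional


def qos_fabric_vpc_actions(
    enabled_now: bool,
    enabled_before: bool,
    name_now: Optional[str],
    name_before: Optional[str],
) -> List[str]:
    """Map a change in the QoS fabric settings to the actions listed above."""
    if enabled_now and not enabled_before:
        # Assumption: "newly created" is modeled as QoS not enabled before.
        return [
            "ignore virtual vPC neighbors learned on invalid links",
            "create the recommended global QoS config with switch_freeform",
            "enable QoS on fabric links from spine to the correct neighbor",
        ]
    if not enabled_now and enabled_before:
        return ["delete all QoS configuration for fabric vPC peering"]
    if enabled_now and name_now != name_before:
        return [
            "update the policy name in the global configuration",
            "update the policy name on the links",
        ]
    return ["keep the existing PTI"]


# Hypothetical policy names, for illustration only.
print(qos_fabric_vpc_actions(True, False, "spine_qos", None))
print(qos_fabric_vpc_actions(True, True, "spine_qos_v2", "spine_qos"))
print(qos_fabric_vpc_actions(False, True, None, "spine_qos"))
print(qos_fabric_vpc_actions(True, True, "spine_qos", "spine_qos"))
```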

Note the following guidelines for a brownfield deployment:

Brownfield Scenario 1:

-

If QoS is enabled and the policy name is specified:

Note

Enable QoS only when the policy name for the global QoS and the neighbor link service policy is the same on all the spines that are connected for fabric vPC peering.

-