Step 1

Download the Cisco Nexus Dashboard image.

-

Browse to the Software Download page.

-

Click Nexus Dashboard Software.

-

From the left sidebar, choose the Nexus Dashboard version you want to download.

-

Download the Cisco Nexus Dashboard image for Linux KVM (nd-dk9.<version>.qcow2).

Step 2

Copy the image to the Linux KVM servers where you will host the nodes.

You can use scp to copy the image, for example:

# scp nd-dk9.2.1.1a.qcow2 root@<kvm-host-ip>:/home/nd-base

The following steps assume you copied the image into the /home/nd-base directory.
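If several KVM hosts will run nodes, the copy can be scripted. The following is a minimal POSIX shell sketch, assuming root SSH access to each host and the 2.1.1a image name used above (adjust both for your environment). It creates /home/nd-base first, because scp to a remote path that does not exist yet writes a file named nd-base instead of a directory. Setting DRY_RUN=1 prints the commands without running them; `run` and `copy_image_to_hosts` are hypothetical helper names, not Cisco tooling.

```shell
#!/bin/sh
# Sketch: copy the Nexus Dashboard base image to each KVM host (Step 2).
# Assumptions: root SSH access to every host; the image name matches your download.

IMAGE="nd-dk9.2.1.1a.qcow2"   # file downloaded in Step 1 (adjust the version)
DEST_DIR="/home/nd-base"      # directory the rest of this procedure expects

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: copy_image_to_hosts <kvm-host-ip> [<kvm-host-ip> ...]
copy_image_to_hosts() {
    for host in "$@"; do
        # Create the target directory so scp places the file inside it.
        run ssh "root@$host" "mkdir -p $DEST_DIR" || return 1
        run scp "$IMAGE" "root@$host:$DEST_DIR/" || return 1
    done
}
```

After sourcing the file, `DRY_RUN=1 copy_image_to_hosts 192.0.2.11 192.0.2.12` previews the four commands for two example hosts; drop DRY_RUN=1 to run them.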

Step 3

Create the required disk images for the first node.

You will create a snapshot of the base qcow2 image you downloaded and use the snapshots as the disk images for the nodes' VMs. You will also need to create a second disk image for each node.

-

Log in to your KVM host as the root user.

-

Create a directory for the node's snapshot.

The following steps assume you create the snapshot in the /home/nd-node1 directory.

# mkdir -p /home/nd-node1/

# cd /home/nd-node1

-

Create the snapshot.

In the following command, replace /home/nd-base/nd-dk9.2.1.1a.qcow2 with the location of the base image you copied in the previous step.

# qemu-img create -f qcow2 -b /home/nd-base/nd-dk9.2.1.1a.qcow2 -F qcow2 /home/nd-node1/nd-node1-disk1.qcow2

-

Create the additional disk image for the node.

Each node requires two disks: a snapshot of the base Nexus Dashboard qcow2 image and a second 500GB disk.

# qemu-img create -f qcow2 /home/nd-node1/nd-node1-disk2.qcow2 500G
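The sub-steps above can be collected into one helper per node. This is a minimal sketch under stated assumptions: the base image is /home/nd-base/nd-dk9.2.1.1a.qcow2, nodes follow the /home/nd-node<N> layout used in this guide, and `create_node_disks` and `run` are hypothetical helper names. It passes -F qcow2 to name the backing file's format, because qemu-img in QEMU 6.1 and later refuses to create an overlay with -b unless the backing format is given, and it ends with qemu-img info so you can check the snapshot's backing file.

```shell
#!/bin/sh
# Sketch: create both disk images for one node (Step 3).
# Assumes the base image location below and the /home/nd-node<N> layout of this guide.

BASE_IMAGE="/home/nd-base/nd-dk9.2.1.1a.qcow2"   # adjust to the image you copied

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: create_node_disks <node-number>
create_node_disks() {
    node="nd-node$1"
    dir="/home/$node"
    run mkdir -p "$dir" || return 1
    # Disk 1: copy-on-write overlay that reads unchanged blocks from the base image.
    run qemu-img create -f qcow2 -b "$BASE_IMAGE" -F qcow2 "$dir/$node-disk1.qcow2" || return 1
    # Disk 2: empty 500GB qcow2 disk; space is allocated only as data is written.
    run qemu-img create -f qcow2 "$dir/$node-disk2.qcow2" 500G || return 1
    # Print image details; the "backing file" line should show BASE_IMAGE.
    run qemu-img info "$dir/$node-disk1.qcow2"
}
```

Running `create_node_disks 1` as root on the KVM host performs the node1 commands; `DRY_RUN=1 create_node_disks 1` only prints them.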

Step 4

Repeat the previous step to create the disk images for the second and third nodes.

Before you proceed to the next step, you should have the following:

-

For node1, /home/nd-node1/ directory with two disk images:

-

/home/nd-node1/nd-node1-disk1.qcow2, which is a snapshot of the base qcow2 image you downloaded in Step 1.

-

/home/nd-node1/nd-node1-disk2.qcow2, which is a new 500GB disk you created.

-

For node2, /home/nd-node2/ directory with two disk images:

-

/home/nd-node2/nd-node2-disk1.qcow2, which is a snapshot of the base qcow2 image you downloaded in Step 1.

-

/home/nd-node2/nd-node2-disk2.qcow2, which is a new 500GB disk you created.

-

For node3, /home/nd-node3/ directory with two disk images:

-

/home/nd-node3/nd-node3-disk1.qcow2, which is a snapshot of the base qcow2 image you downloaded in Step 1.

-

/home/nd-node3/nd-node3-disk2.qcow2, which is a new 500GB disk you created.
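Before moving on, you can confirm that all six images are in place. The following is a minimal POSIX shell check, assuming the file layout listed above; `check_node_disks` is a hypothetical name, and its optional path-prefix argument exists only so the check can be tried against a scratch directory (leave it empty on the KVM host).

```shell
#!/bin/sh
# Sketch: verify the two disk images exist for each of the three nodes (Step 4).
# Optional $1 is a path prefix for testing; leave it empty to check the real /home paths.

check_node_disks() {
    root="${1:-}"
    missing=0
    for n in 1 2 3; do
        for d in 1 2; do
            f="$root/home/nd-node$n/nd-node$n-disk$d.qcow2"
            if [ ! -f "$f" ]; then
                echo "missing: $f"
                missing=$((missing + 1))
            fi
        done
    done
    # Succeed only if nothing was reported missing.
    [ "$missing" -eq 0 ]
}
```

On the KVM host, `check_node_disks && echo OK` prints OK when everything is present. `qemu-img info /home/nd-node3/nd-node3-disk1.qcow2` additionally shows which backing file a snapshot uses.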

Step 5

Create the first node's VM.

-

Open the KVM console and click New Virtual Machine.

You can open the KVM console from the command line using the virt-manager command.

-

In the New VM screen, choose the Import existing disk image option and click Forward.

-

In the Provide existing storage path field, click Browse and select the nd-node1-disk1.qcow2 file.

We recommend storing each node's disk image on its own disk partition.

-

Choose Generic for the OS type and Version, then click Forward.

-

Specify 64GB memory and 16 CPUs, then click Forward.

-

Enter the Name of the virtual machine (for example, nd-node1), check the Customize configuration before install option, and then click Finish.

Note

You must select the Customize configuration before install checkbox to be able to make the disk and network card customizations required for the node.

The VM details window will open.

In the VM details window, change the NIC's device model:

-

Select NIC <mac>.

-

For Device model, choose e1000.

-

For Network Source, choose the bridge device and provide the name of the "mgmt" bridge.

In the VM details window, add a second NIC:

-

Click Add Hardware.

-

In the Add New Virtual Hardware screen, select Network.

-

For Network Source, choose the bridge device and provide the name of the "data" bridge you created.

-

Leave the default MAC address value.

-

For Device model, choose e1000.

In the VM details window, add the second disk image:

-

Click Add Hardware.

-

In the Add New Virtual Hardware screen, select Storage.

-

For the disk's bus driver, choose IDE.

-

Select Select or create custom storage, click Manage, and select the nd-node1-disk2.qcow2 file you created.

-

Click Finish to add the second disk.

Finally, click Begin Installation to finish creating the node's VM.
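If you prefer the command line to virt-manager, virt-install can define an equivalent VM. This is a hedged sketch, not a Cisco-documented procedure: it assumes virt-install is installed on the KVM host, that bridges named mgmt and data already exist, and that your virt-install version accepts the options shown (check `virt-install --help`). It mirrors the GUI choices above: 64GB memory, 16 vCPUs, a generic OS, the snapshot as the boot disk, the 500GB disk on the IDE bus, and two e1000 NICs attached to mgmt and then data. `create_node_vm` and `run` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: command-line equivalent of the virt-manager steps in Step 5.
# Assumptions: virt-install is available; bridges "mgmt" and "data" exist.

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: create_node_vm <node-number>
create_node_vm() {
    node="nd-node$1"
    dir="/home/$node"
    # --import boots from the existing first disk instead of running an installer.
    # --memory is given in MiB, so 65536 is 64GB.
    # NICs are added in the order given: management first, then data.
    run virt-install \
        --name "$node" \
        --memory 65536 \
        --vcpus 16 \
        --os-variant generic \
        --import \
        --disk "path=$dir/$node-disk1.qcow2,format=qcow2" \
        --disk "path=$dir/$node-disk2.qcow2,format=qcow2,bus=ide" \
        --network "bridge=mgmt,model=e1000" \
        --network "bridge=data,model=e1000" \
        --noautoconsole
}
```

Like clicking Begin Installation, virt-install starts the VM once it is defined; --noautoconsole returns without attaching, and you can open the VM's console from virt-manager afterward.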

Step 6

Repeat the previous step to create the VMs for the second and third nodes, then start all VMs.
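Once all three VMs are defined, they can also be started from the shell with virsh. The following is a minimal sketch, assuming the VM names nd-node1 through nd-node3 used above; VMs that are already running are skipped. `start_all_nodes` is a hypothetical name, and the VIRSH variable exists only so the logic can be exercised without libvirt.

```shell
#!/bin/sh
# Sketch: start any of the three node VMs that are not already running (Step 6).
# VIRSH may name a stand-in command for testing; it defaults to the real virsh.

start_all_nodes() {
    virsh_cmd="${VIRSH:-virsh}"
    for n in 1 2 3; do
        vm="nd-node$n"
        # domstate prints the VM state, for example "running" or "shut off".
        state=$("$virsh_cmd" domstate "$vm" 2>/dev/null)
        if [ "$state" = "running" ]; then
            echo "$vm already running"
        else
            "$virsh_cmd" start "$vm" || return 1
        fi
    done
    # Show every defined VM and its current state.
    "$virsh_cmd" list --all
}
```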

Step 7

Open the console of one of the nodes and configure its basic information.

-

Begin initial setup.

You will be prompted to run the first-time setup utility:

[ OK ] Started atomix-boot-setup.

Starting Initial cloud-init job (pre-networking)...

Starting logrotate...

Starting logwatch...

Starting keyhole...

[ OK ] Started keyhole.

[ OK ] Started logrotate.

[ OK ] Started logwatch.

Press any key to run first-boot setup on this console...

-

Enter and confirm the admin password.

This password will be used for the rescue-user SSH login as well as the initial GUI password.

Admin Password:

Reenter Admin Password:

-

Enter the management network information.

Management Network:

IP Address/Mask: 192.168.9.172/24

Gateway: 192.168.9.1

-

For the first node only, designate it as the "Cluster Leader".

You will log in to the cluster leader node to finish configuration and complete cluster creation.

Is cluster leader?: y

-

Review and confirm the entered information.

You will be asked if you want to change the entered information. If all the fields are correct, enter n to proceed. If you want to change any of the entered information, enter y to restart the basic configuration script.

Please review the config

Management network:

Gateway: 192.168.9.1

IP Address/Mask: 192.168.9.172/24

Cluster leader: yes

Re-enter config? (y/N): n
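The management IP address/mask and gateway entered above must be consistent: the gateway has to lie inside the management subnet. The following POSIX shell sketch checks that before you type the values in; it is an illustrative helper, not part of the setup utility, and `ip_to_int` and `gateway_in_subnet` are hypothetical names. It converts each dotted-quad address to a 32-bit integer and compares only the network bits selected by the prefix length.

```shell
#!/bin/sh
# Sketch: check that a gateway lies inside the subnet of an IP/prefix (Step 7 input).

# Print a dotted-quad IPv4 address as a 32-bit integer; fail on anything else.
ip_to_int() {
    case "$1" in
        ''|*[!0-9.]*|.*|*.|*..*|0[0-9]*|*.0[0-9]*) return 1 ;;
    esac
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its octets
    IFS=$old_ifs
    [ "$#" -eq 4 ] || return 1
    for octet in "$@"; do
        [ "$octet" -le 255 ] || return 1
    done
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Usage: gateway_in_subnet <ip>/<prefix-length> <gateway>
gateway_in_subnet() {
    addr=${1%/*}
    len=${1#*/}
    case "$len" in ''|*[!0-9]*) return 1 ;; esac
    [ "$len" -ge 1 ] && [ "$len" -le 32 ] || return 1
    ip=$(ip_to_int "$addr") || return 1
    gw=$(ip_to_int "$2") || return 1
    # Keep the top $len bits, for example /24 -> 0xFFFFFF00.
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( gw & mask )) ]
}
```

For the example values above, `gateway_in_subnet 192.168.9.172/24 192.168.9.1 && echo ok` prints ok, while a gateway of 192.168.10.1 would fail the check.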

Step 8

Repeat the previous step to configure the initial information for the second and third nodes.

You do not need to wait for the first node's configuration to complete; you can begin configuring the other two nodes simultaneously.

The steps for the second and third nodes are identical, except that you must indicate that they are not the Cluster Leader.

Step 9

Wait for the initial bootstrap process to complete on all nodes.

After you provide and confirm the management network information, the initial setup on the first node (Cluster Leader) configures the networking and brings up the UI, which you will use to add the other two nodes and complete the cluster deployment.

Please wait for system to boot: [#########################] 100%

System up, please wait for UI to be online.

System UI online, please login to https://192.168.9.172 to continue.
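If you are watching from a workstation rather than the node console, you can poll the GUI address until it answers. The following is a hedged sketch, assuming curl is installed and that the GUI may present a self-signed certificate at this stage (hence -k, which skips certificate verification); `wait_for_ui` is a hypothetical name, and the CURL variable only allows substituting a stub for testing.

```shell
#!/bin/sh
# Sketch: poll the Nexus Dashboard GUI URL until it returns an HTTP response (Step 9).
# Assumptions: curl is installed; -k is used because the certificate may be self-signed.

# Usage: wait_for_ui <url> [attempts] [seconds-between-attempts]
wait_for_ui() {
    url=$1
    attempts=${2:-60}
    delay=${3:-30}
    curl_cmd="${CURL:-curl}"
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -s: quiet; -o /dev/null: discard the body; -w: print only the status code.
        # curl prints 000 when no HTTP response was received.
        code=$("$curl_cmd" -k -s -o /dev/null -w '%{http_code}' --max-time 10 "$url")
        case "$code" in
            2??|3??)
                echo "UI reachable at $url (HTTP $code)"
                return 0
                ;;
        esac
        i=$((i + 1))
        [ "$i" -le "$attempts" ] && sleep "$delay"
    done
    echo "UI not reachable at $url after $attempts attempts" >&2
    return 1
}
```

For example, `wait_for_ui https://192.168.9.172` retries every 30 seconds for up to 60 attempts.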

|

|

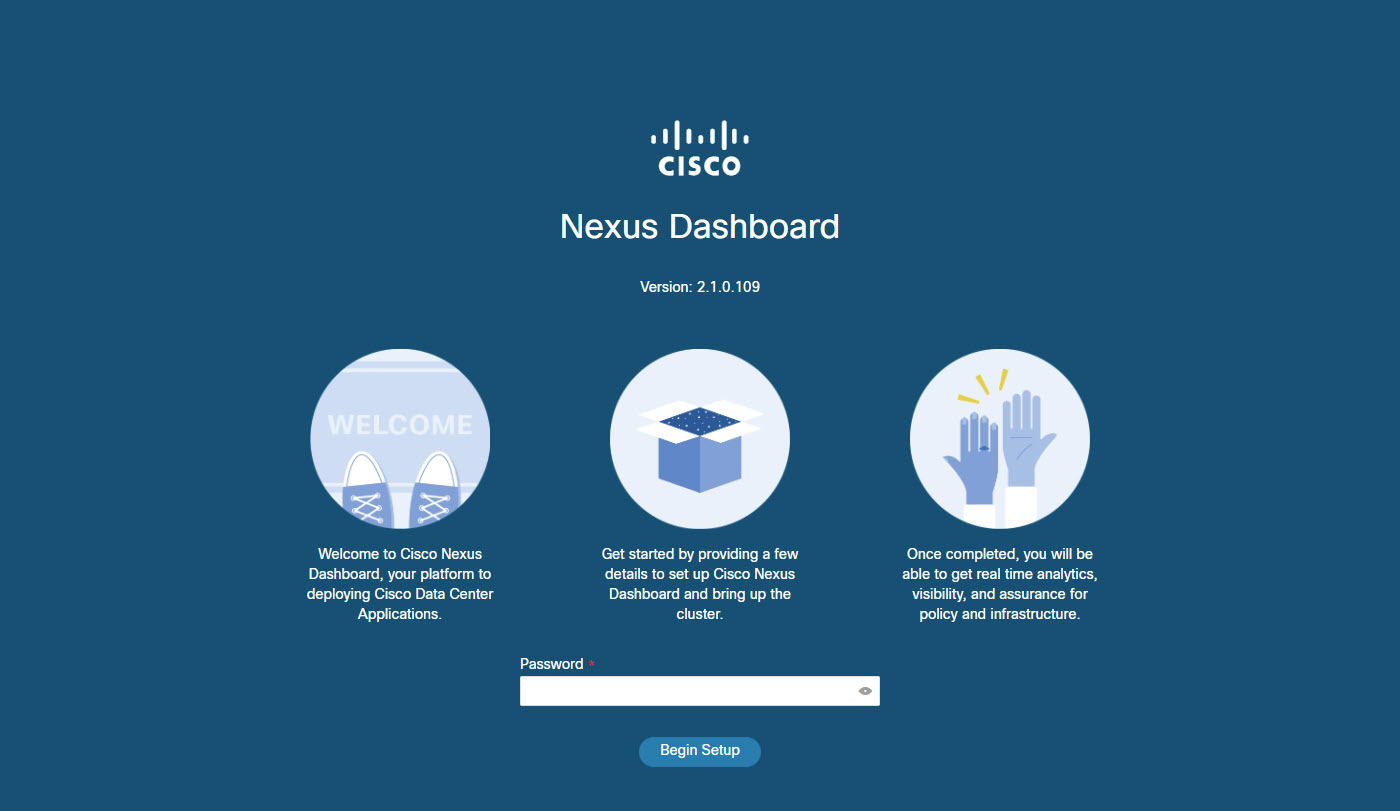

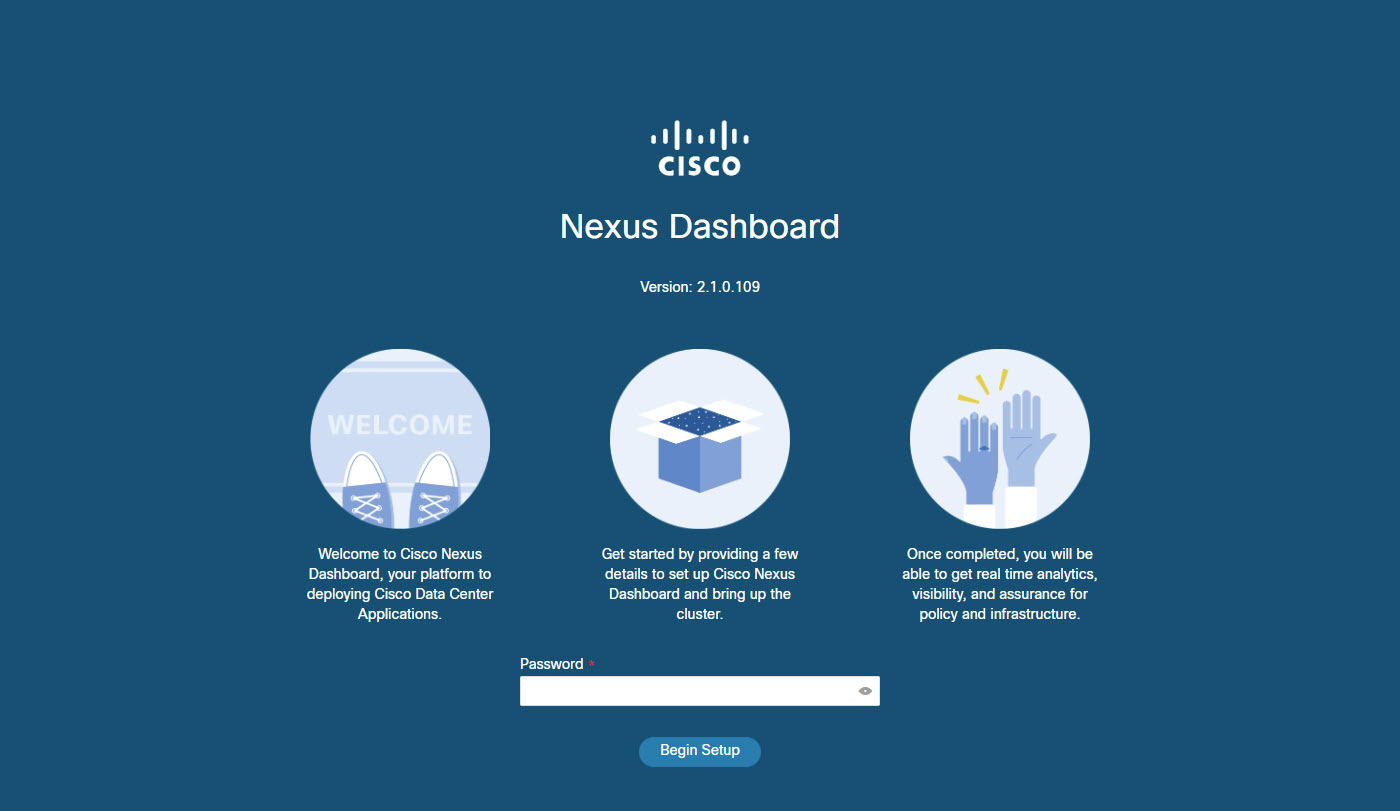

Step 10

|

Open your browser and navigate to https://<first-node-management-ip> to open the GUI.

The rest of the configuration workflow takes place from the first node's (Cluster Leader) GUI. You do not need to log in to or configure the other two nodes directly.

Step 11

Enter the password you provided for the first node and click Begin Setup.

Step 12

Provide the Cluster Details.

In the Cluster Details screen of the initial setup wizard, provide the following information:

-

Provide the Cluster Name for this Nexus Dashboard cluster.

-

Click +Add NTP Host to add one or more NTP servers.

You must provide an IP address; fully qualified domain names (FQDNs) are not supported.

After you enter the IP address, click the green checkmark icon to save it.

-

Click +Add DNS Provider to add one or more DNS servers.

After you enter the IP address, click the green checkmark icon to save it.

-

Provide a Proxy Server.

For clusters that do not have direct connectivity to Cisco cloud, we recommend configuring a proxy server to establish the connectivity, which will allow you to mitigate risk from exposure to non-conformant hardware and software in your fabrics.

If you want to skip proxy configuration, click the information (i) icon next to the field, then click Skip.

-

(Optional) If your proxy server requires authentication, change Authentication required for Proxy to Yes and provide the login credentials.

-

(Optional) Expand the Advanced Settings category and change the settings if required.

Under advanced settings, you can configure the following:

-

Provide one or more search domains by clicking +Add DNS Search Domain.

After you enter the search domain, click the green checkmark icon to save it.

-

Provide custom App Network and Service Network.

The application overlay network defines the address space used by the application's services running in the Nexus Dashboard.

The field is pre-populated with the default 172.17.0.1/16 value.

The services network is an internal network used by the Nexus Dashboard and its processes. The field is pre-populated with the default 100.80.0.0/16 value.

Application and Services networks are described in the Prerequisites and Guidelines section earlier in this document.

-

Click Next to continue.
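The NTP and network fields above can be sanity-checked before you submit them. The following POSIX shell sketch is illustrative only: `ip_to_int`, `check_ntp_servers`, and `cidrs_overlap` are hypothetical helpers, the NTP check recognizes only IPv4 addresses, and the assumption that the App and Service networks must not overlap each other or your management and data subnets should be confirmed against the Prerequisites and Guidelines section. Two prefixes overlap exactly when their addresses agree on the bits covered by the shorter prefix.

```shell
#!/bin/sh
# Sketch: sanity-check Cluster Details input (Step 12).
#  - NTP servers must be IP addresses, not FQDNs (this sketch accepts IPv4 only).
#  - App/Service networks should not overlap each other or your subnets (assumption).

# Print a dotted-quad IPv4 address as a 32-bit integer; fail on anything else.
ip_to_int() {
    case "$1" in
        ''|*[!0-9.]*|.*|*.|*..*|0[0-9]*|*.0[0-9]*) return 1 ;;
    esac
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its octets
    IFS=$old_ifs
    [ "$#" -eq 4 ] || return 1
    for octet in "$@"; do
        [ "$octet" -le 255 ] || return 1
    done
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Usage: check_ntp_servers <server> [...]; reports entries that are not IPv4 addresses.
check_ntp_servers() {
    bad=0
    for s in "$@"; do
        if ! ip_to_int "$s" >/dev/null; then
            echo "not an IPv4 address (FQDNs are not supported): $s"
            bad=1
        fi
    done
    [ "$bad" -eq 0 ]
}

# Usage: cidrs_overlap <a.b.c.d/len> <a.b.c.d/len>; succeeds if the ranges overlap.
cidrs_overlap() {
    for c in "$1" "$2"; do
        case "${c#*/}" in ''|*[!0-9]*) return 2 ;; esac
        [ "${c#*/}" -le 32 ] || return 2
    done
    a=$(ip_to_int "${1%/*}") || return 2
    b=$(ip_to_int "${2%/*}") || return 2
    len=${1#*/}
    if [ "${2#*/}" -lt "$len" ]; then len=${2#*/}; fi
    # Compare both addresses under the shorter prefix's mask.
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( a & mask )) -eq $(( b & mask )) ]
}
```

With the defaults, `cidrs_overlap 172.17.0.1/16 100.80.0.0/16` fails (no overlap), while comparing 172.17.0.1/16 with a hypothetical management subnet of 172.17.5.0/24 succeeds, flagging a conflict.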

Step 13

In the Node Details screen, provide the node's information.

-

Click the Edit button next to the first node.

-

Provide the node's Name.

-

Provide the node's Data Network information.

The Management Network information is already pre-populated with the information you provided for the first node.

You must provide the data network IP address, netmask, and gateway. Optionally, you can also provide the VLAN ID for the network.

For most deployments, you can leave the VLAN ID field blank.

-

(Optional) Provide IPv6 addresses for the management and data networks.

Starting with release 2.1.1, Nexus Dashboard supports dual stack IPv4/IPv6 for the management and data networks.

Note

If you want to provide IPv6 information, you must do so during the cluster bootstrap process. If you deploy the cluster using only the IPv4 stack and want to add IPv6 information later, you must redeploy the cluster.

All nodes in the cluster must be configured with either IPv4 only or a dual IPv4/IPv6 stack.

-

Click Save to save the changes.

Step 14

Click Add Node to add the second node to the cluster.

The Node Details window opens.

-

Provide the node's Name.

-

In the Credentials section, provide the node's Management Network IP address and login credentials, then click Verify.

The IP address and login credentials are used to pull that node's information.

-

Provide the node's Data Network IP address and gateway.

The Management Network information will be pre-populated with the information pulled from the node based on the IP address and credentials you provided in the previous sub-step.

You must provide the data network IP address, netmask, and gateway. Optionally, you can also provide the VLAN ID for the network.

For most deployments, you can leave the VLAN ID field blank.

-

(Optional) Provide IPv6 information for the management and data networks.

Starting with release 2.1.1, Nexus Dashboard supports dual stack IPv4/IPv6 for the management and data networks.

Note

If you want to provide IPv6 information, you must do so during the cluster bootstrap process. If you deploy the cluster using only the IPv4 stack and want to add IPv6 information later, you must redeploy the cluster.

All nodes in the cluster must be configured with either IPv4 only or a dual IPv4/IPv6 stack.

-

Click Save to save the changes.
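The rule that all nodes must use either IPv4 only or dual stack can be checked against your addressing plan before you add the remaining nodes. The following is a minimal sketch, assuming you pass each node's IPv6 management address, or an empty string for an IPv4-only node; `check_stack_consistency` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: ensure all nodes are either IPv4-only or dual stack, never a mix (Steps 13-14).

# Usage: check_stack_consistency <node1-ipv6-or-empty> <node2-ipv6-or-empty> ...
check_stack_consistency() {
    with_v6=0
    without_v6=0
    for addr in "$@"; do
        if [ -n "$addr" ]; then
            with_v6=$((with_v6 + 1))
        else
            without_v6=$((without_v6 + 1))
        fi
    done
    if [ "$with_v6" -gt 0 ] && [ "$without_v6" -gt 0 ]; then
        echo "mixed stacks: $with_v6 dual-stack node(s), $without_v6 IPv4-only node(s)" >&2
        return 1
    fi
}
```

For example, `check_stack_consistency 2001:db8::10 "" ""` fails because only the first node has IPv6 information.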

Step 15

Repeat the previous step to add the third node.

Step 16

Click Next to continue.

Step 17

In the Confirmation screen, review the entered information and click Configure to create the cluster.

During the node bootstrap and cluster bring-up, the overall progress as well as each node's individual progress will be displayed in the UI.

It may take up to 30 minutes for the cluster to form and all the services to start. When cluster configuration is complete, the page will reload to the Nexus Dashboard GUI.

Step 18

Verify that the cluster is healthy.

It may take up to 30 minutes for the cluster to form and all the services to start.

After all three nodes are ready, you can log in to any one node via SSH and run the following command to verify cluster health:

-

Verify that the cluster is up and running.

You can check the current status of cluster deployment by logging in to any of the nodes and running the acs health command.

While the cluster is converging, you may see the following outputs:

$ acs health

k8s install is in-progress

$ acs health

k8s services not in desired state - [...]

$ acs health

k8s: Etcd cluster is not ready

When the cluster is up and running, the following output will be displayed:

$ acs health

All components are healthy

-

Log in to the Nexus Dashboard GUI.

After the cluster becomes available, you can access it by browsing to any one of your nodes' management IP addresses. The default password for the admin user is the same as the rescue-user password you chose for the first node of the Nexus Dashboard cluster.
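To avoid rerunning acs health by hand while the cluster converges, a small loop can poll it until the healthy message from the sample output above appears, giving up after the 30 minutes this procedure allows. The following is a sketch to run in a shell on one of the nodes, assuming `acs health` is on the PATH there; `wait_for_healthy` and `acs_health` are hypothetical wrappers, and HEALTH_CMD only allows a stub for testing.

```shell
#!/bin/sh
# Sketch: poll "acs health" until the cluster reports healthy (Step 18).
# Run on a Nexus Dashboard node after logging in over SSH.

acs_health() { acs health; }

# Usage: wait_for_healthy [timeout-seconds] [interval-seconds]
wait_for_healthy() {
    timeout=${1:-1800}     # 30 minutes
    interval=${2:-30}
    health_cmd="${HEALTH_CMD:-acs_health}"
    waited=0
    while :; do
        out=$("$health_cmd" 2>&1)
        echo "$out"
        case "$out" in
            *"All components are healthy"*) return 0 ;;
        esac
        if [ "$waited" -ge "$timeout" ]; then
            echo "cluster not healthy after ${timeout}s" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
}
```

Each poll prints the current acs health output, so intermediate states such as "k8s install is in-progress" remain visible while you wait.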
