Cisco UCS B200 M6 Blade Server

The Cisco UCS B200 M6 is a half-width blade server designed for the Cisco UCS 5108 Blade Server Chassis. You can install up to eight B200 M6 blade servers in one UCS 5108 chassis, and you can mix them with other Cisco UCS blade server models in the same chassis. The server supports the following features:

- Two CPU sockets for Third Generation Intel Xeon Scalable processors, supporting one-CPU or two-CPU blade configurations.
- Up to 32 DDR4 DIMMs (16 DIMM sockets across 8 memory channels per CPU).
- Support for Intel Optane persistent memory 200 series DIMMs.
- One front mezzanine storage module, with the following options:
  - Cisco FlexStorage module supporting two 7 mm SATA SSDs. A 12G SAS controller chip on the module provides hardware RAID for the two drives.
  - Cisco FlexStorage module supporting two 7 mm NVMe SSDs.
  - Cisco FlexStorage module supporting two mini-storage modules, module "1" and module "2." Each mini-storage module is a SATA M.2 dual-SSD module with an on-board SATA RAID controller chip that manages the two M.2 SSDs on that module.
- A rear mLOM, which is required for blade discovery. This mLOM VIC card (for example, a Cisco VIC 1440) can provide 20G or 40G of connectivity per fabric when used with the pass-through Cisco UCS Port Expander Card in the rear mezzanine slot.
- Optionally, a Cisco VIC card (for example, a Cisco VIC 1480) or the pass-through Cisco UCS Port Expander Card in the rear mezzanine slot.

Note: Component support is subject to chassis power configuration restrictions.
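For planning purposes, the configuration limits in the feature list above can be restated as a small validation helper. This is a minimal, hypothetical sketch, not a Cisco tool: the names `BladeConfig`, `check_blade`, `check_chassis`, and the option strings are invented for illustration. It assumes that only the DIMM slots of populated CPU sockets are usable and that the chassis holds only B200 M6 blades. It does not model chassis power configuration restrictions.

```python
from dataclasses import dataclass
from typing import List, Optional

# Limits restated from the B200 M6 feature list; all names here are hypothetical.
MAX_BLADES_PER_CHASSIS = 8      # up to eight B200 M6 blades per UCS 5108 chassis
DIMM_SLOTS_PER_CPU = 16         # 16 DIMM sockets (8 channels) per CPU
FRONT_MEZZ_OPTIONS = {"sata_ssd_raid", "nvme_ssd", "m2_mini_storage"}
REAR_MEZZ_OPTIONS = {"vic", "port_expander"}


@dataclass
class BladeConfig:
    cpus: int                          # number of populated CPU sockets
    dimms: int                         # number of DDR4 DIMMs installed
    has_mlom: bool                     # True if a rear mLOM VIC is installed
    front_mezz: Optional[str] = None   # one of FRONT_MEZZ_OPTIONS, or None
    rear_mezz: Optional[str] = None    # one of REAR_MEZZ_OPTIONS, or None


def check_blade(cfg: BladeConfig) -> List[str]:
    """Return rule violations for one blade; an empty list means none were found."""
    problems = []
    if cfg.cpus not in (1, 2):
        problems.append("blade needs one or two CPUs")
    else:
        # Assumption: DIMM slots belonging to an empty CPU socket cannot be used.
        limit = cfg.cpus * DIMM_SLOTS_PER_CPU
        if not 0 <= cfg.dimms <= limit:
            problems.append(
                f"DIMM count {cfg.dimms} outside 0..{limit} for {cfg.cpus} CPU(s)"
            )
    if not cfg.has_mlom:
        problems.append("rear mLOM is required for blade discovery")
    if cfg.front_mezz is not None and cfg.front_mezz not in FRONT_MEZZ_OPTIONS:
        problems.append(f"unknown front mezzanine option: {cfg.front_mezz}")
    if cfg.rear_mezz is not None and cfg.rear_mezz not in REAR_MEZZ_OPTIONS:
        problems.append(f"unknown rear mezzanine option: {cfg.rear_mezz}")
    return problems


def check_chassis(blades: List[BladeConfig]) -> List[str]:
    """Check one UCS 5108 chassis populated only with B200 M6 blades."""
    problems = []
    if len(blades) > MAX_BLADES_PER_CHASSIS:
        problems.append(
            f"{len(blades)} blades exceeds the limit of {MAX_BLADES_PER_CHASSIS}"
        )
    # Index is the position in the list, not the physical chassis slot.
    for index, blade in enumerate(blades, start=1):
        problems.extend(f"blade {index}: {p}" for p in check_blade(blade))
    return problems


if __name__ == "__main__":
    ok = BladeConfig(cpus=2, dimms=32, has_mlom=True,
                     front_mezz="nvme_ssd", rear_mezz="port_expander")
    bad = BladeConfig(cpus=1, dimms=24, has_mlom=False)
    for problem in check_chassis([ok, bad]):
        print(problem)
```

In the usage example, the first blade passes. The second blade is reported twice: 24 DIMMs exceeds the 16 usable with one CPU, and the blade has no mLOM.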

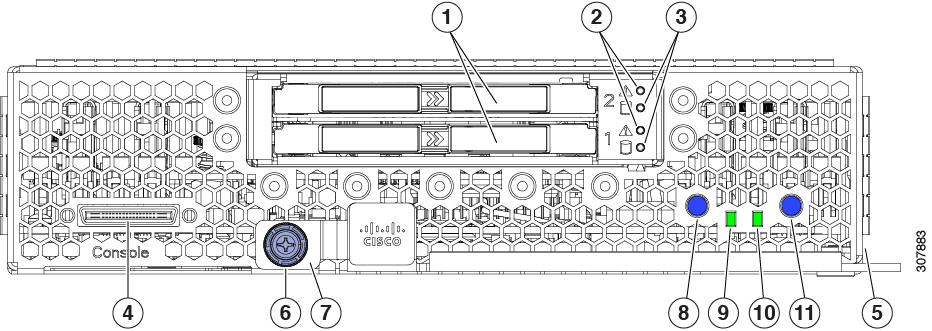

| Callout | Description |
|---|---|
| 1 | Cisco FlexStorage module, showing drive bays 1 and 2 |
| 2 | Disk drive status LED for each drive |
| 3 | Disk drive activity LED for each drive |
| 4 | Asset pull tag |
| 5 | Local console connector |
| 6 | Blade ejector thumbscrew |
| 7 | Blade ejector handle |
| 8 | Blade power button and LED |
| 9 | Network link status LED |
| 10 | Blade health LED |
| 11 | Locator button and LED |

Note: The asset pull tag is a blank plastic tag that pulls out from the front panel. You can attach your own asset-tracking label to it without interfering with the server's intended airflow.
