Overview

You can upgrade your Nexus Dashboard Orchestrator using one of two approaches:

- Upgrade in place by upgrading each component (such as the Nexus Dashboard platform and the Orchestrator service) in sequence.

  This approach is described in Upgrading Automatically Via Service Catalog and is recommended in the following cases:

  - If you are using a physical Nexus Dashboard cluster.

  - If you are running a recent release of Nexus Dashboard (2.2.2 or later) and Nexus Dashboard Orchestrator (3.7.1 or later).

  While you can use this approach to upgrade any Orchestrator release 3.3(1) or later, it may require upgrading the underlying Nexus Dashboard platform before you can upgrade the Orchestrator service. In those cases, the upgrade via configuration restore described below may be faster and simpler.

- Deploy a brand new Nexus Dashboard cluster, install a new NDO service instance in it, and transfer the existing Orchestrator configuration using the configuration restore workflow.

  This approach is described in this chapter and is recommended in the following cases:

  - If you are running any release of Nexus Dashboard Orchestrator or Multi-Site Orchestrator prior to release 3.3(1).

    In this case, you must upgrade using configuration restore because in-place upgrade is not supported.

  - If you are using a virtual Nexus Dashboard cluster and running an older release of Nexus Dashboard Orchestrator.

    Upgrading from an old Nexus Dashboard Orchestrator release requires upgrading the underlying Nexus Dashboard platform as well, in which case deploying a new cluster and restoring the configuration may shorten the required maintenance window.

    This also allows you to simply disconnect the existing cluster and keep the existing VMs until the upgrade is complete, in case the upgrade does not succeed or you want to revert to the previous version.
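The recommendations above can be summarized as a small decision helper. The following is only an illustrative sketch, not a Cisco-provided tool: the function names, the return strings, and the interpretation of "older release" as "anything below the recent-release thresholds listed above" are assumptions made for this example.

```python
import re


def parse_release(text):
    """Turn a release string such as '3.7(1)' or '2.2.2' into a tuple of ints."""
    return tuple(int(n) for n in re.findall(r"\d+", text))


def recommend_upgrade_path(ndo_release, nd_release, cluster_type):
    """Suggest an upgrade approach (hypothetical helper, for illustration only).

    ndo_release  -- current Orchestrator release, for example '3.5(1)'
    nd_release   -- current Nexus Dashboard release, or None if not applicable
    cluster_type -- 'physical' or 'virtual'
    """
    ndo = parse_release(ndo_release)
    nd = parse_release(nd_release) if nd_release else ()

    # Releases prior to 3.3(1) do not support in-place upgrade.
    if ndo < (3, 3, 1):
        return "configuration restore (required)"
    # In-place upgrade is recommended for physical clusters.
    if cluster_type == "physical":
        return "in-place"
    # In-place upgrade is also recommended for recent releases.
    if nd >= (2, 2, 2) and ndo >= (3, 7, 1):
        return "in-place"
    # Assumption: a virtual cluster on any other release counts as "older".
    return "configuration restore (recommended)"


if __name__ == "__main__":
    print(recommend_upgrade_path("3.4(1)", "2.1.1", "virtual"))
```

The helper only encodes the cases listed in this section; treat its result as a starting point and confirm it against the prerequisites for your specific releases.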

Changes in Release 4.0(1) and Later

Beginning with Release 4.0(1), Nexus Dashboard Orchestrator validates and enforces a number of best practices for template design and deployment:

- All policy objects must be deployed in order according to their dependencies.

  For example, when creating a bridge domain (BD), you must associate it with a VRF. In this case, the BD has a VRF dependency, so the VRF must be deployed to the fabric before or together with the BD. If these two objects are defined in the same template, the Orchestrator ensures that during deployment the VRF is created first and then associated with the bridge domain.

  However, if you define these two objects in separate templates and attempt to deploy the template with the BD first, the Orchestrator will return a validation error because the associated VRF is not yet deployed. In this case, you must deploy the VRF template first, followed by the BD template.

- All policy objects must be undeployed in order according to their dependencies, that is, in the reverse of the order in which they were deployed.

  As a corollary to the point above, when you undeploy templates, you must not undeploy objects on which other objects depend. For example, you cannot undeploy a VRF before undeploying the BD with which the VRF is associated.

- No cyclical dependencies are allowed across multiple templates.

  Consider a case of a VRF (vrf1) associated with a bridge domain (bd1), which is in turn associated with an EPG (epg1). If you create vrf1 in template1 and deploy that template, then create bd1 in template2 and deploy that template, there will be no validation errors since the objects are deployed in the correct order. However, if you then attempt to create epg1 in template1, it would create a circular dependency between the two templates, so the Orchestrator will not allow you to save template1 with the EPG added.

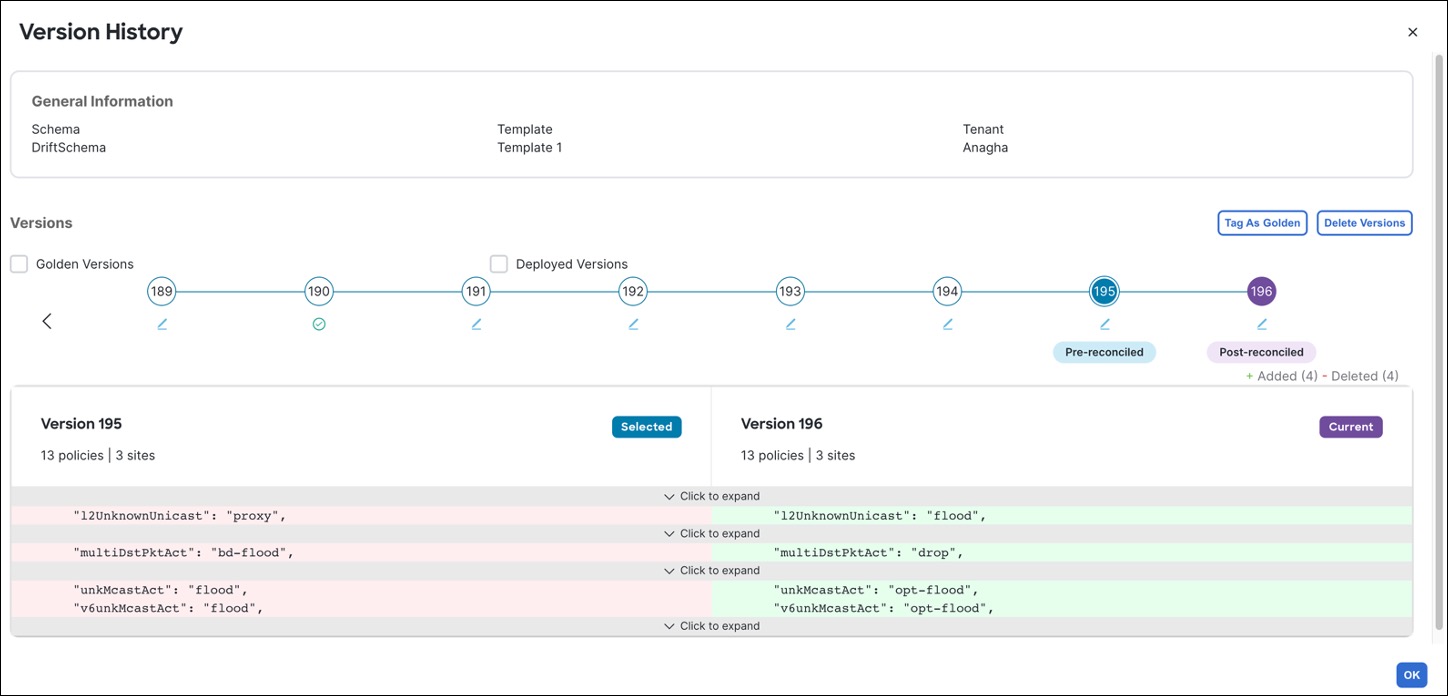
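The three rules above amount to ordering templates by their dependencies and rejecting cycles. The following minimal sketch illustrates the idea using Python's standard `graphlib` module; it is not how the Orchestrator is implemented, and the data layout and function names are assumptions chosen for this example. It only models dependencies between templates, since the Orchestrator orders objects within a single template on its own.

```python
from graphlib import CycleError, TopologicalSorter


def template_dependencies(objects):
    """Map each template to the set of other templates it depends on.

    objects: {object_name: (template_name, [names of objects it depends on])}
    This layout is hypothetical and used only for illustration.
    """
    deps = {}
    for _name, (template, needs) in objects.items():
        deps.setdefault(template, set())
        for needed in needs:
            other_template = objects[needed][0]
            if other_template != template:
                deps[template].add(other_template)
    return deps


def deploy_order(objects):
    """Return templates in a valid deployment order; raise CycleError on a cycle."""
    return list(TopologicalSorter(template_dependencies(objects)).static_order())


def undeploy_order(objects):
    """Undeployment runs in the reverse of the deployment order."""
    return list(reversed(deploy_order(objects)))


if __name__ == "__main__":
    objects = {
        "vrf1": ("template1", []),
        "bd1": ("template2", ["vrf1"]),
    }
    print(deploy_order(objects))    # ['template1', 'template2']
    print(undeploy_order(objects))  # ['template2', 'template1']

    # Adding epg1 to template1 makes template1 depend on template2 as well.
    objects["epg1"] = ("template1", ["bd1"])
    try:
        deploy_order(objects)
    except CycleError:
        print("circular dependency between templates")
```

In this model, the EPG example from the last rule fails because `template1` and `template2` each end up depending on the other, so no valid deployment order exists.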

Due to these additional rules and requirements, an upgrade to release 4.0(1) or later from an earlier release requires an analysis of all existing templates and conversion of any template that does not satisfy the new requirements. The upgrade process described in the following sections does this automatically, and you will receive a detailed report of all the changes that were applied to your existing templates to make them compliant with the new best practices.

Note: You must ensure that you complete all the requirements described in the following "Prerequisites and Guidelines" section before you back up your existing configuration for the upgrade. Failure to do so may cause template conversion to fail for one or more templates and require you to manually resolve the issues or restart the migration process.

Upgrade Workflow

The following list provides a high-level overview of the migration process and the order of tasks you will need to perform.

-

Review the upgrade guidelines and complete all prerequisites.

-

Validate existing configuration using a Cisco-provided validation script, then create a backup of the existing Nexus Dashboard Orchestrator configuration and download the backup to your local machine.

-

Disconnect or bring down your existing cluster.

If your existing cluster is virtual, you can simply disconnect it from the network until you've deployed a new cluster and restored the configuration backup in it. This allows you to preserve your existing cluster and easily bring it back in service in case of any issues with the migration procedure.

-

Deploy a brand new Nexus Dashboard cluster release 2.3(b) or later and install Nexus Dashboard Orchestrator release 4.1(2) or later.

-

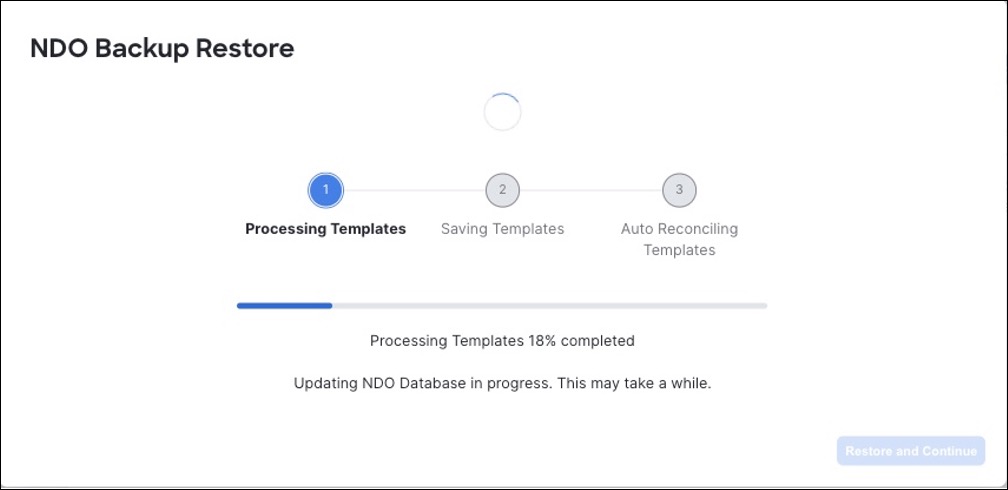

Add a remote location for backups to the fresh Nexus Dashboard Orchestrator instance, upload the backup you took on your previous release, and restore the configuration backup in the new NDO installation.

-

Resolve any configuration drifts.
