Prerequisites and Guidelines

Virtual deployments are supported starting with Nexus Dashboard, Release 2.0.2h. Earlier releases support only the physical form factor described in Deploying as Physical Appliance.

Before you proceed with deploying the Nexus Dashboard cluster in VMware ESX, you must:

- Review and complete the general prerequisites described in the Deployment Overview.

  Note that this document describes how to initially deploy a three-node Nexus Dashboard cluster. If you want to expand an existing cluster with additional nodes (such as worker or standby), see the "Deploying Additional Nodes" section of the Cisco Nexus Dashboard User Guide instead. The guide is available from the Nexus Dashboard UI or online.

- Ensure that the ESX form factor supports your scale and application requirements.

  Scale and application co-hosting vary based on the cluster form factor. You can use the Nexus Dashboard Capacity Planning tool to verify that the virtual form factor satisfies your deployment requirements.

- Ensure you have enough system resources:

  Table 1. Deployment Requirements

  | Nexus Dashboard Version | Requirements |
  | --- | --- |
  | Release 2.0.2h<br>Earlier releases are not supported. | VMware vCenter 6.x<br>VMware ESXi 6.5 or 6.7<br>Each VM requires 16 vCPUs, 64 GB of RAM, and a 500 GB disk<br>We recommend deploying each Nexus Dashboard node on a different ESXi server. |

- After each node's VM is deployed, ensure that the VMware Tools periodic time synchronization is disabled as described in the deployment procedure in the next section.
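The resource requirements above lend themselves to a quick pre-deployment check. The following is a minimal sketch, assuming you keep your own inventory of planned VMs. The `PlannedNode` class and the `check_node` and `check_cluster` functions are hypothetical names introduced for illustration and are not part of any Cisco or VMware API. The thresholds come from Table 1.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass

# Minimums taken from Table 1 of this section.
MIN_VCPUS = 16
MIN_RAM_GB = 64
MIN_DISK_GB = 500
SUPPORTED_ESXI = ("6.5", "6.7")


@dataclass
class PlannedNode:
    """Hypothetical description of one planned Nexus Dashboard VM."""
    name: str
    esxi_host: str
    esxi_version: str  # for example "6.7" or "6.7.0"
    vcpus: int
    ram_gb: int
    disk_gb: int


def check_node(node: PlannedNode) -> list[str]:
    """Return the requirement violations for one node (empty if none)."""
    problems = []
    major_minor = ".".join(node.esxi_version.split(".")[:2])
    if major_minor not in SUPPORTED_ESXI:
        problems.append(f"{node.name}: ESXi {node.esxi_version} is not 6.5 or 6.7")
    if node.vcpus < MIN_VCPUS:
        problems.append(f"{node.name}: {node.vcpus} vCPUs, need {MIN_VCPUS}")
    if node.ram_gb < MIN_RAM_GB:
        problems.append(f"{node.name}: {node.ram_gb} GB RAM, need {MIN_RAM_GB}")
    if node.disk_gb < MIN_DISK_GB:
        problems.append(f"{node.name}: {node.disk_gb} GB disk, need {MIN_DISK_GB}")
    return problems


def check_cluster(nodes: list[PlannedNode]) -> tuple[list[str], list[str]]:
    """Return (errors, warnings) for the whole planned cluster."""
    errors = [p for node in nodes for p in check_node(node)]
    # Separate ESXi servers per node are a recommendation, so shared
    # hosts are reported as warnings rather than errors.
    host_counts = Counter(node.esxi_host for node in nodes)
    warnings = [
        f"{host}: hosts {count} nodes; a different ESXi server per node is recommended"
        for host, count in host_counts.items()
        if count > 1
    ]
    return errors, warnings


if __name__ == "__main__":
    plan = [
        PlannedNode("nd-node1", "esx-a", "6.7.0", 16, 64, 500),
        PlannedNode("nd-node2", "esx-b", "6.5", 16, 64, 500),
        PlannedNode("nd-node3", "esx-b", "6.7", 8, 64, 500),
    ]
    errors, warnings = check_cluster(plan)
    for line in errors + warnings:
        print(line)
```

The sketch only compares numbers you supply; it does not query vCenter, and it does not cover the VMware Tools time synchronization setting, which is handled in the deployment procedure.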

ESX Host Network Connectivity

If you plan to install the Nexus Dashboard Insights or Fabric Controller service and use the Persistent IPs feature, you must ensure that the ESX host where the cluster nodes are deployed has a single logical uplink. In other words, the host must be connected via a single link, port channel (PC), or virtual port channel (vPC), and not via dual Active/Active (A/A) or Active/Standby (A/S) links without PC/vPC.

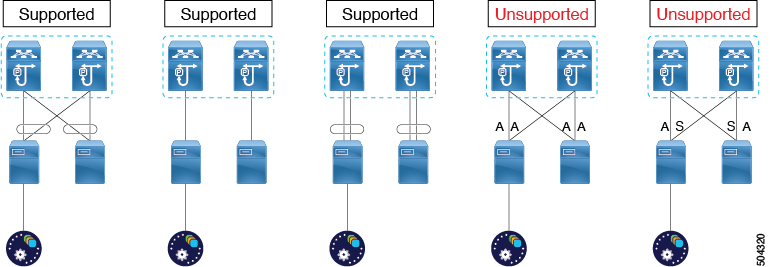

The following diagrams summarize the supported and unsupported network connectivity configurations for the ESX host where the nodes are deployed:

- In case the ESX host is connected directly, the following configurations are supported:

  - A/A uplinks of Port-Group or virtual switch with PC or vPC

  - Single uplink of Port-Group or virtual switch

  - Port-Channel used for the uplink of Port-Group or virtual switch

  A/A or A/S uplinks of Port-Group or virtual switch without PC or vPC are not supported.

  Figure 1. ESX Host Connectivity (Direct)

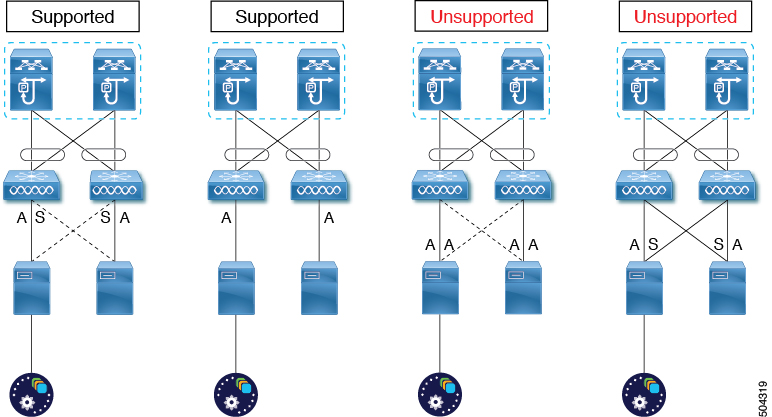

- In case the ESX host is connected via a UCS Fabric Interconnect (or equivalent), the following configurations are supported:

  - A/S uplinks of Port-Group or virtual switch at UCS Fabric Interconnect level without PC or vPC

    In this case, the Active/Standby links are based on the server technology, such as Fabric Failover for Cisco UCS, and not at the ESXi hypervisor level.

  - Single uplink of Port-Group or virtual switch

  A/A or A/S uplinks of Port-Group or virtual switch at the hypervisor level without PC or vPC are not supported.

  Figure 2. ESX Host Connectivity (with Fabric Interconnect)
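The connectivity rules in this section can be summarized as a small decision function. This is a minimal sketch of the rules as stated in the text. The `UplinkMode` enum and the `has_single_logical_uplink` function are hypothetical names introduced for illustration. The text does not cover A/A links at the Fabric Interconnect level without PC or vPC, so the sketch treats that case as unsupported, which is a conservative assumption.

```python
from enum import Enum


class UplinkMode(Enum):
    """How the Port-Group or virtual switch uplinks are arranged."""
    SINGLE = "single"                  # one uplink
    PORT_CHANNEL = "pc_or_vpc"         # uplinks bundled into a PC or vPC
    ACTIVE_ACTIVE = "active_active"    # A/A without PC or vPC
    ACTIVE_STANDBY = "active_standby"  # A/S without PC or vPC


def has_single_logical_uplink(
    mode: UplinkMode,
    failover_at_fabric_interconnect: bool = False,
) -> bool:
    """Return True if the ESX host connectivity matches a supported case.

    failover_at_fabric_interconnect should be True only when the A/S
    behavior comes from the server technology (such as Fabric Failover
    for Cisco UCS) rather than from the ESXi hypervisor.
    """
    # A single link, PC, or vPC is a single logical uplink in both the
    # direct and the Fabric Interconnect topologies.
    if mode in (UplinkMode.SINGLE, UplinkMode.PORT_CHANNEL):
        return True
    # A/S without PC or vPC is supported only at the Fabric Interconnect level.
    if mode is UplinkMode.ACTIVE_STANDBY and failover_at_fabric_interconnect:
        return True
    # A/A or A/S at the hypervisor level without PC or vPC is not supported.
    # A/A at the Fabric Interconnect level is not described in the text and
    # is treated as unsupported here (assumption).
    return False


if __name__ == "__main__":
    for mode in UplinkMode:
        print(mode.value, has_single_logical_uplink(mode))
```

The check applies when you plan to run the Nexus Dashboard Insights or Fabric Controller service with the Persistent IPs feature, which is the condition this section attaches to the single logical uplink requirement.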
