System Overview

The Cisco UCS X9508 Server Chassis and its components are part of the Cisco Unified Computing System (UCS). The system can combine multiple server chassis configurations with Cisco UCS Fabric Interconnects to provide advanced server and data management capabilities. The following configuration options are supported:

- All Cisco UCS compute nodes. In a compute node-only configuration, two Intelligent Fabric Modules (IFMs) are required.
- A mix of Cisco UCS compute nodes and Cisco UCS PCIe nodes. In this configuration, the compute nodes are paired 1:1 with Cisco UCS PCIe nodes, such as the Cisco UCS X440p PCIe Node. Two IFMs and two Cisco UCS X9416 X-Fabric Modules (XFMs) are required.
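The two configuration rules above can be expressed as a simple consistency check. The sketch below is a hypothetical Python helper for illustration only; it is not part of Cisco Intersight or any Cisco API, and the names `ChassisConfig` and `validate` are invented here. It assumes that "paired 1:1" means each PCIe node needs its own compute node, with at most four such pairs, and it takes the eight-slot limit from the component list.

```python
from dataclasses import dataclass

# Limits taken from the chassis description. All names below are
# hypothetical and for illustration only (not a Cisco API).
NODE_SLOTS = 8        # total node slots in the chassis
MAX_PCIE_NODES = 4    # at most four compute/PCIe node pairs
REQUIRED_IFMS = 2     # IFMs are always deployed as a pair
REQUIRED_XFMS = 2     # needed whenever any PCIe node is installed


@dataclass
class ChassisConfig:
    compute_nodes: int
    pcie_nodes: int
    ifms: int
    xfms: int


def validate(cfg: ChassisConfig) -> list[str]:
    """Return a list of rule violations; an empty list means the config passes."""
    errors = []
    if cfg.compute_nodes + cfg.pcie_nodes > NODE_SLOTS:
        errors.append(f"more than {NODE_SLOTS} nodes installed")
    if cfg.ifms != REQUIRED_IFMS:
        errors.append("exactly two IFMs are required")
    if cfg.pcie_nodes > 0:
        # Mixed configuration (assumed reading): every PCIe node needs its own compute node.
        if cfg.pcie_nodes > cfg.compute_nodes:
            errors.append("each PCIe node must be paired with a compute node")
        if cfg.pcie_nodes > MAX_PCIE_NODES:
            errors.append(f"at most {MAX_PCIE_NODES} PCIe nodes are supported")
        # Two XFMs are required regardless of how many PCIe nodes are installed.
        if cfg.xfms != REQUIRED_XFMS:
            errors.append("two X-Fabric Modules are required with PCIe nodes")
    return errors


print(validate(ChassisConfig(compute_nodes=8, pcie_nodes=0, ifms=2, xfms=0)))  # prints []
print(validate(ChassisConfig(compute_nodes=4, pcie_nodes=4, ifms=2, xfms=1)))
# prints ['two X-Fabric Modules are required with PCIe nodes']
```

The check does not inspect the XFM slots in a compute node-only chassis, because the text above only states XFM requirements for configurations that include PCIe nodes.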

All compute nodes and PCIe nodes are managed with Cisco Intersight, through either its GUI or its API.

The Cisco UCS X9508 Server Chassis system consists of the following components:

-

Chassis versions:

-

Cisco UCS X9508 server chassis–AC version

-

-

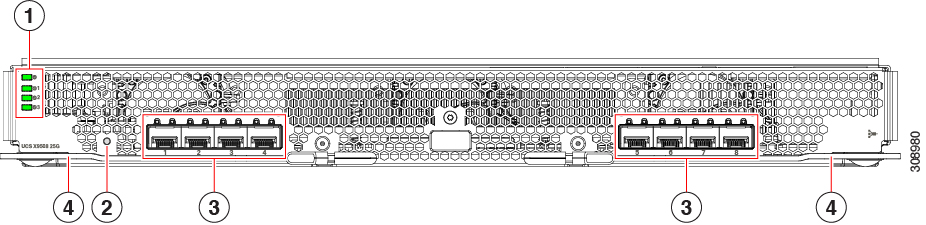

Intelligent Fabric Modules (IFMs), two deployed as a pair:

-

Cisco UCS 9108 100G IFMs (UCSX-I-9108-100G)—Two I/O modules, each with 8 100 Gigabit QSFP28 optical ports

-

Cisco UCS 9108 25G IFMs (UCSX-I-9108-25G)—Two I/O modules, each with 8 25 Gigabit SFP28 optical ports

-

-

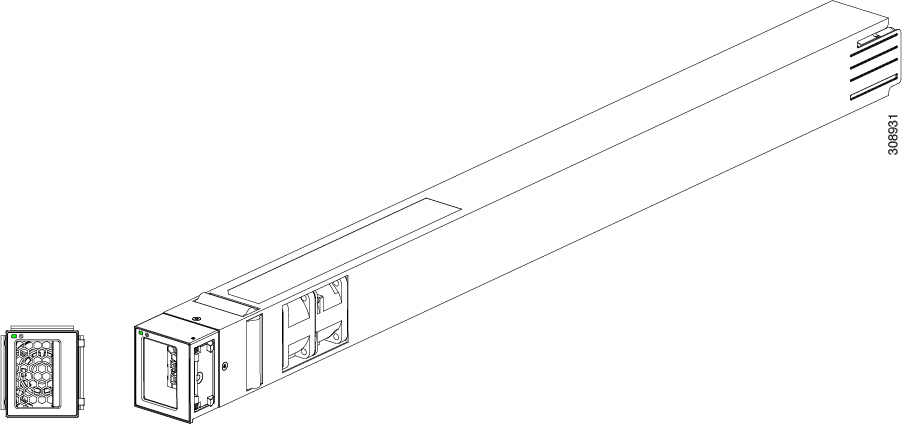

X-Fabric Modules (UCSX-F-9416)—Two XFMs are required in each UCS X9508 server chassis to support GPU acceleration through Cisco UCS X440p PCIe nodes.

-

Power supplies—Up to six 2800 Watt, hot-swappable power supplies

-

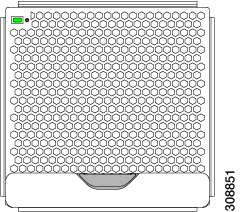

Fan modules—Four hot-swappable fan modules

-

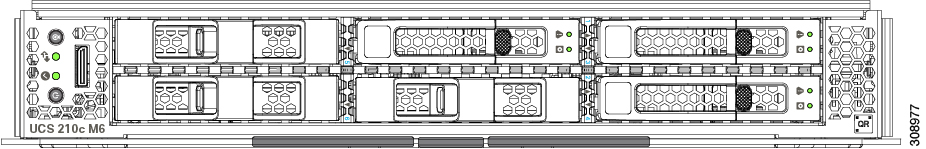

Up to 8 UCS X Series compute nodes, including the Cisco UCS X210c M6 compute nodes (UCSX-210C-M6), a compute node that contains one or two CPUs and up to six hard drives. For information about the compute node, go to the Cisco UCS X210c M6 Compute Node Installation and Service Note.

-

Up to 4 UCS X-Series compute nodes paired 1:1 with up to 4 Cisco UCS X-Series PCIe nodes, including the Cisco UCS X440p PCIe Node. This configuration requires two Cisco UCS X9416 X-Fabric Modules regardless of the number of PCIe nodes installed. For information about the PCIe node, go to the Cisco UCS X440p PCIe Node Installation and Service Guide.
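The port and power ratings in the component list multiply out as follows. These are nominal totals computed only from the stated per-port speeds and per-supply rating; they do not account for power-supply redundancy, oversubscription, or achievable throughput.

$$
\begin{aligned}
\text{100G IFMs: } & 8 \times 100\ \text{Gb/s} = 800\ \text{Gb/s per IFM}, \quad 2 \times 800\ \text{Gb/s} = 1.6\ \text{Tb/s per pair} \\
\text{25G IFMs: } & 8 \times 25\ \text{Gb/s} = 200\ \text{Gb/s per IFM}, \quad 2 \times 200\ \text{Gb/s} = 400\ \text{Gb/s per pair} \\
\text{Power supplies: } & 6 \times 2800\ \text{W} = 16{,}800\ \text{W aggregate rating}
\end{aligned}
$$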

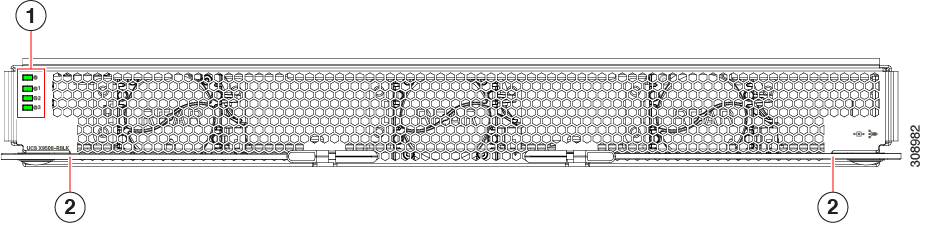

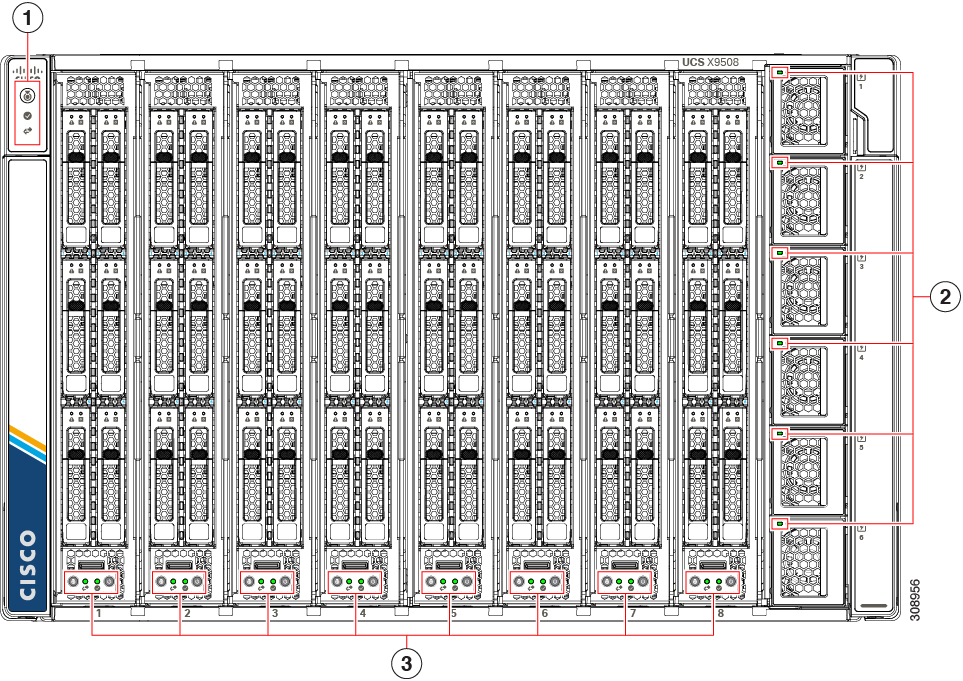

|

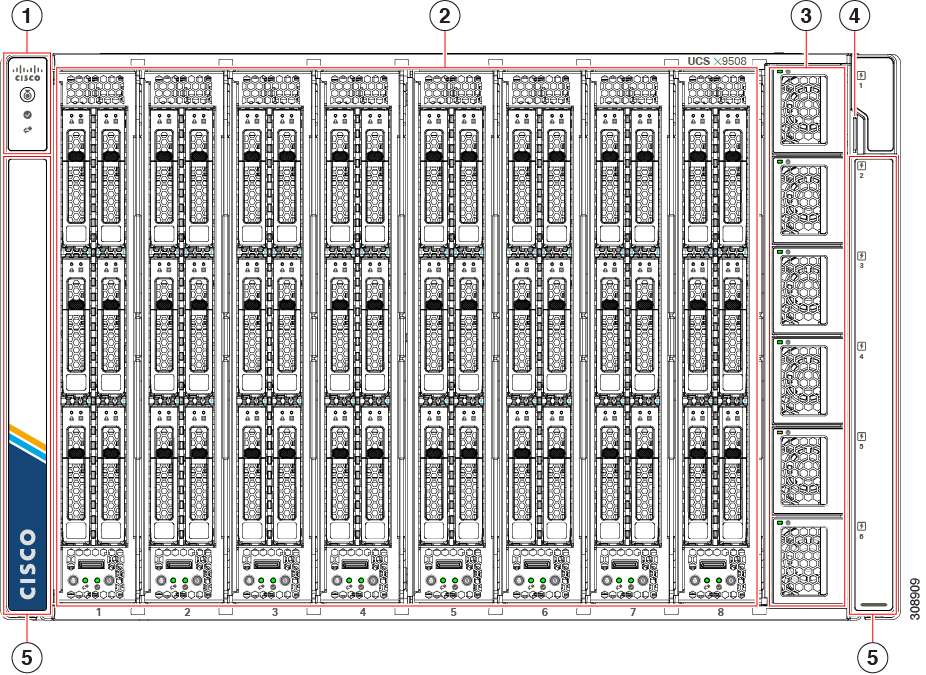

1 |

System LEDs:

For information about System LEDs, see LEDs. |

2 |

Node Slots, a total of 8. Shown populated with compute nodes, but can also contain PCIe Nodes |

|

3 |

Power Supplies, a maximum of 6. |

4 |

System Asset Tag |

|

5 |

System side panels (two), which are removable. The side panels cover the rack mounting brackets. |

|

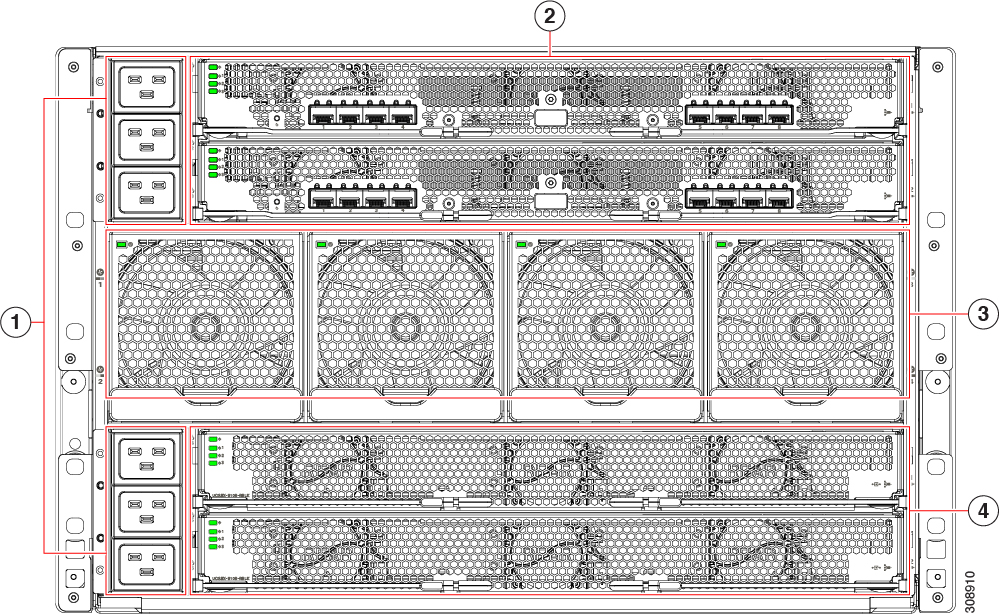

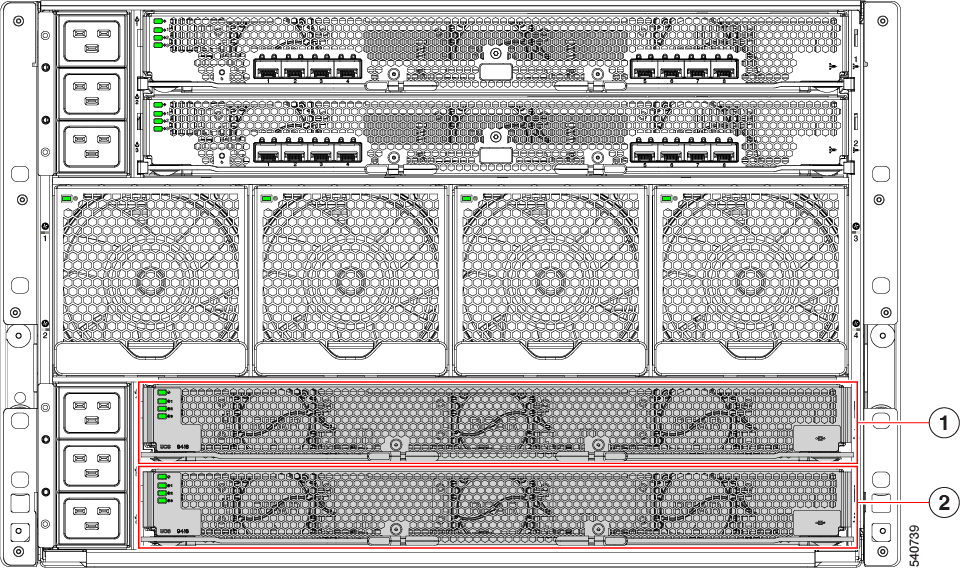

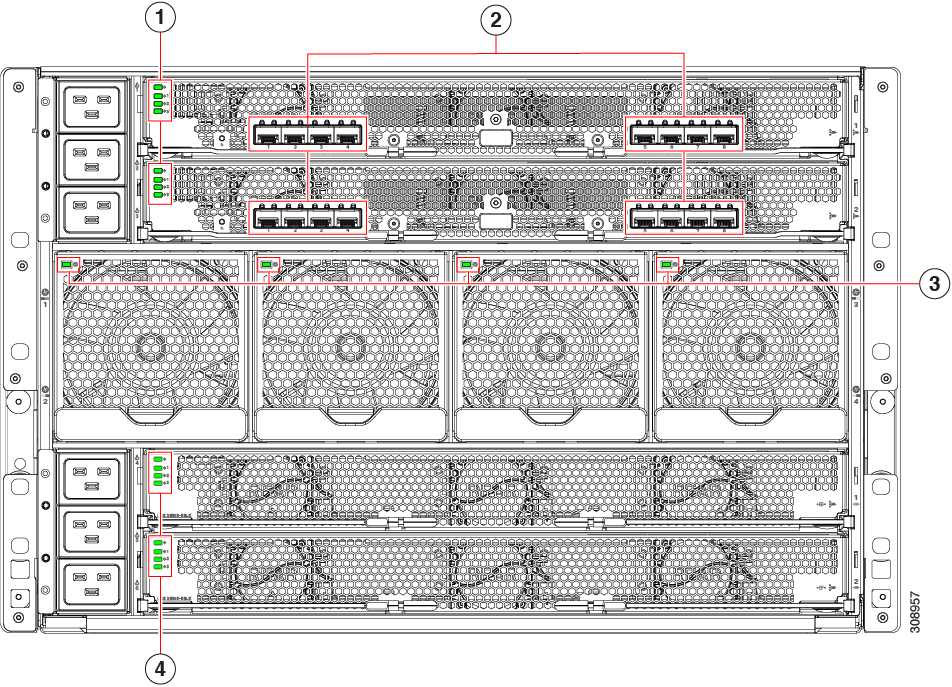

| Callout | Description |
|---|---|
| 1 | Power Entry Modules (PEMs) for facility inlet power. Each PEM contains three IEC 320 C20 inlets. |
| 2 | Intelligent Fabric Modules (shown populated), which are always deployed as a pair of one of the following types: Cisco UCS 9108 100G IFMs (UCSX-I-9108-100G) or Cisco UCS 9108 25G IFMs (UCSX-I-9108-25G). |
| 3 | System fans (four) |
| 4 | X-Fabric Module slots, which hold either Cisco UCS active filler panels (compute node-only configurations) or up to two Cisco UCS X-Fabric Modules (compute nodes paired with PCIe nodes). |
