- Foreword

- The Authors

- Preface

- Introduction and Industry Overview

- Security Process Lifecycle

- Introduction

- Overview of Cybersecurity Services

- Advisory and Consultation Services

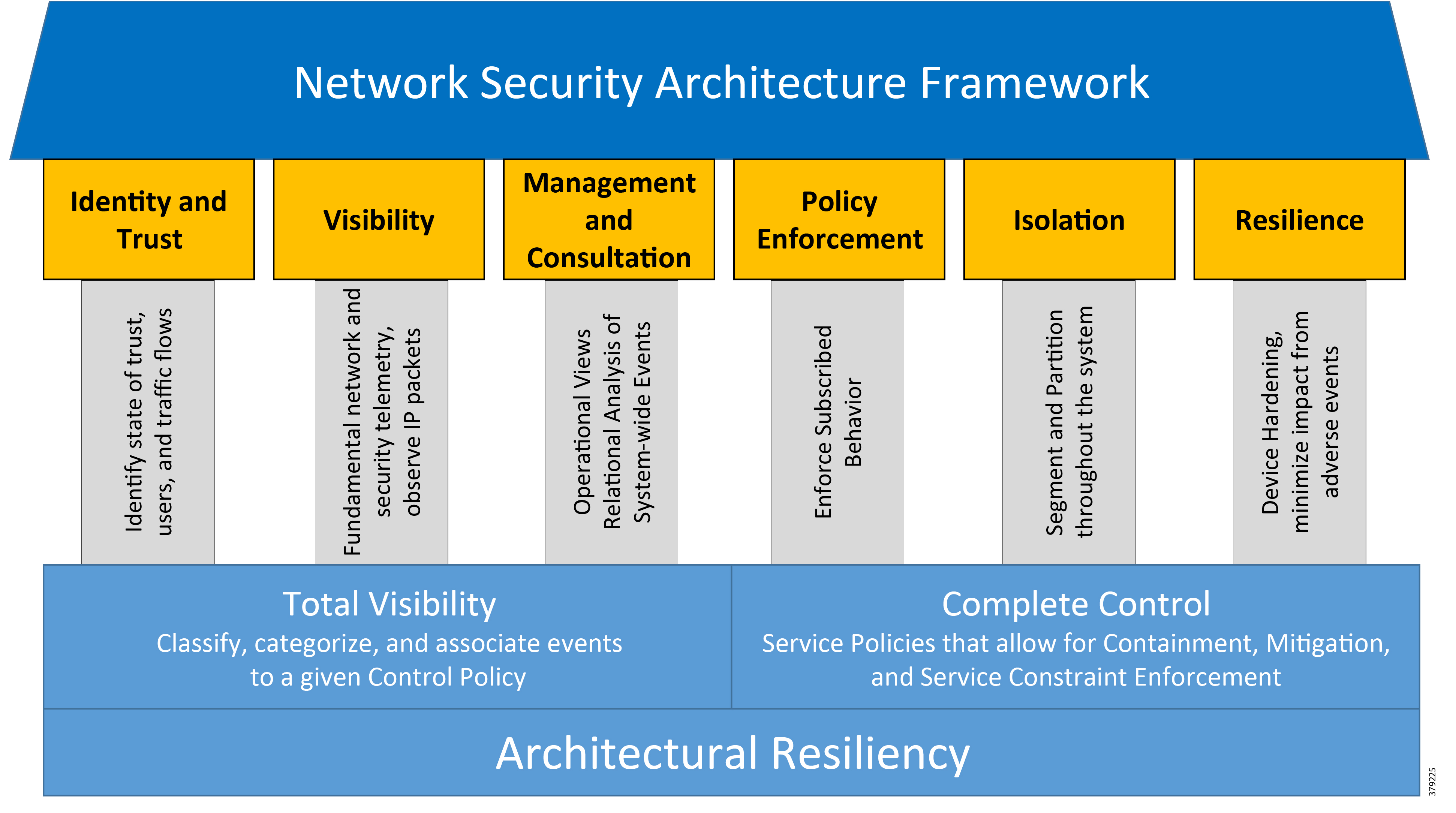

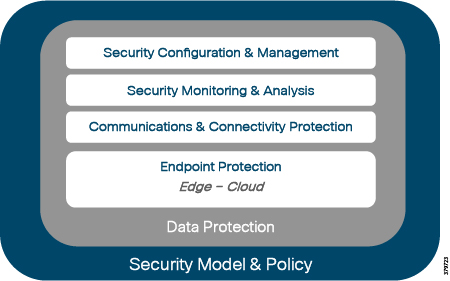

- Security Architectures

- Network Security Architecture Framework

- Security Architecture Principles

- Cybersecurity During the Implementation Project

- Front End Engineering and Design

- Detailed Design

- Installation

- Development—With Embedded Testing

- Factory (Preliminary) Acceptance Testing

- Site Acceptance Testing

- Commissioning—Including Point-to-Point Verification

- Service and Support

- Project Team Communications

- System Hardening Defined

- What We Are Trying to Solve

- Process

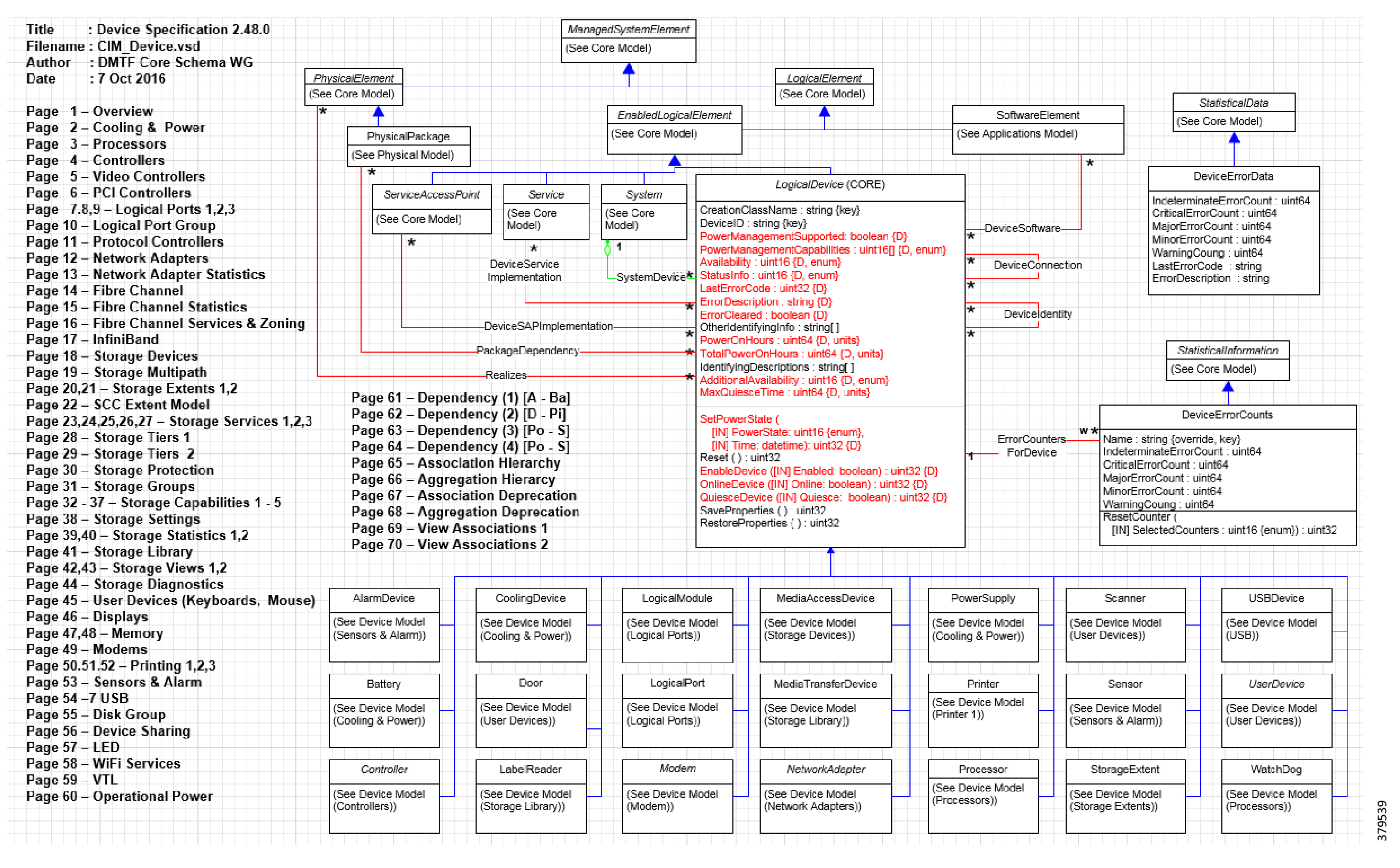

- Technology

- Network System Hardening

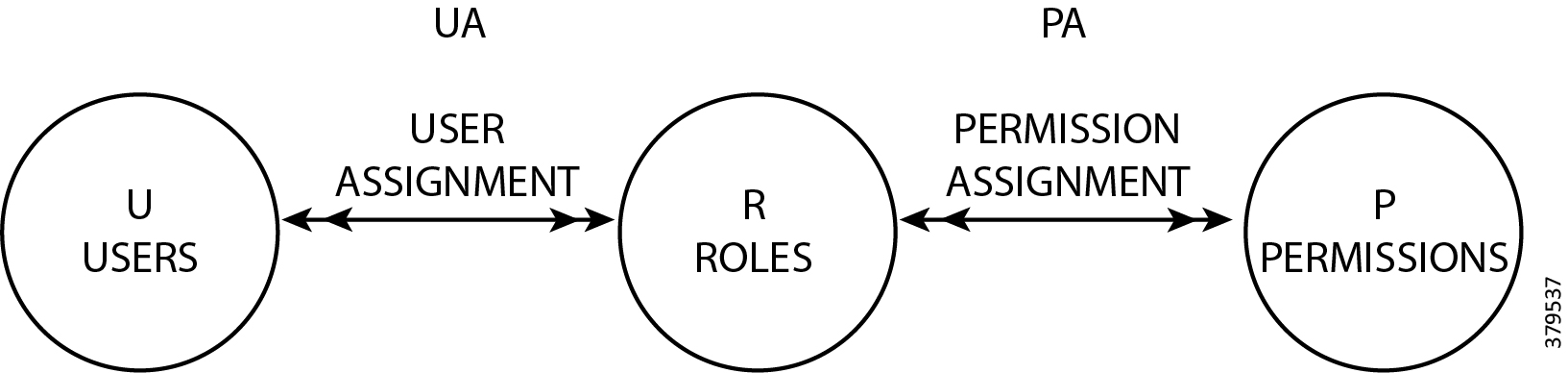

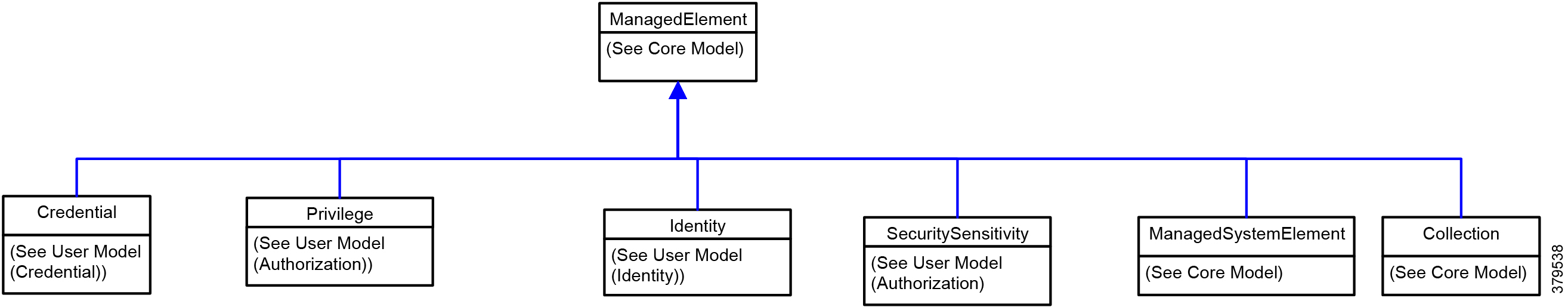

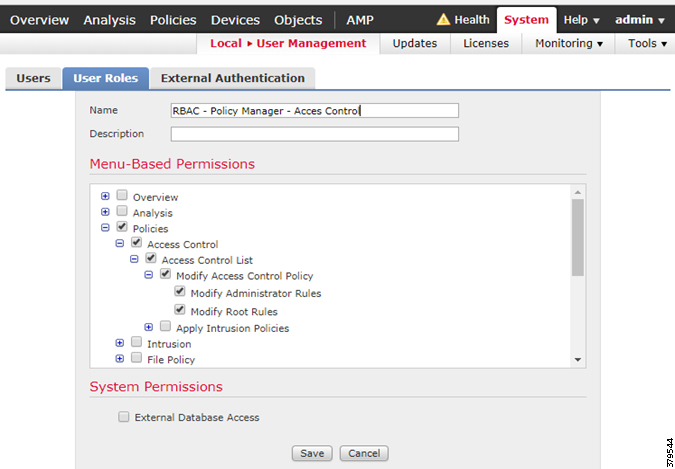

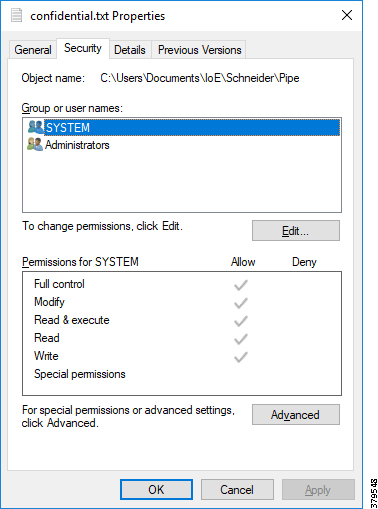

- Access Control

- Practical Considerations for SCADA Hardening

- Hardening the Control Center

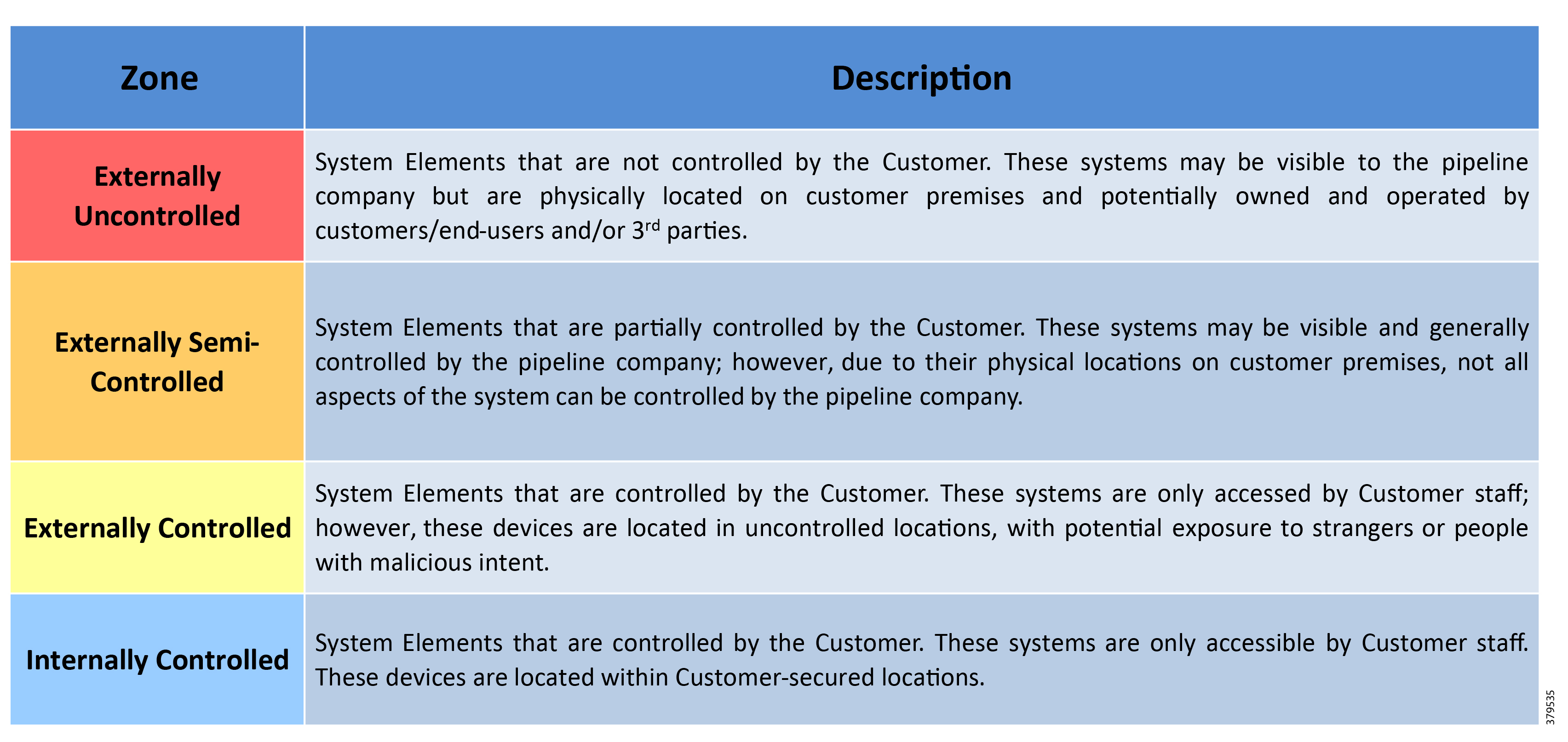

- Security Zones

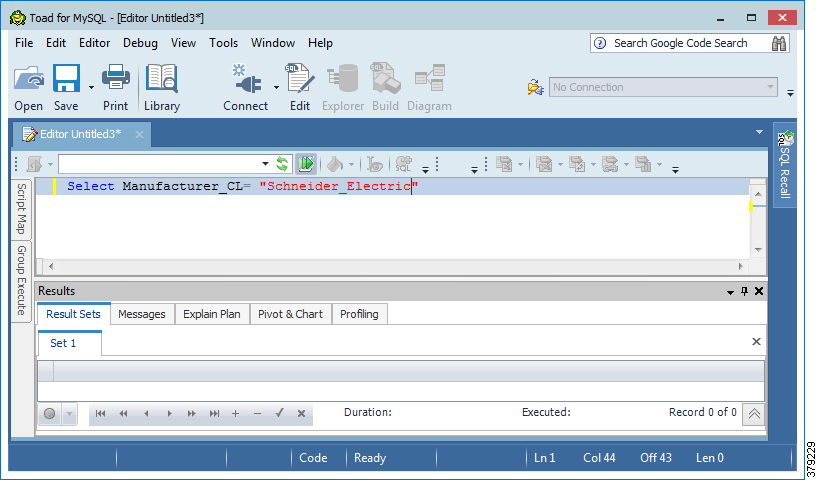

- Software Tools and Applications

- Data Flow Enforcement

- Application Signing and Management of Change (MOC)

- Patch Management

- Anti-Malware

- Internet and Related Access

- Firewall Considerations

- Baseline OS Hardening Considerations

- Baseline Network Hardening Considerations

- Availability in the Control Center

- Hardening the WAN and Field Infrastructure

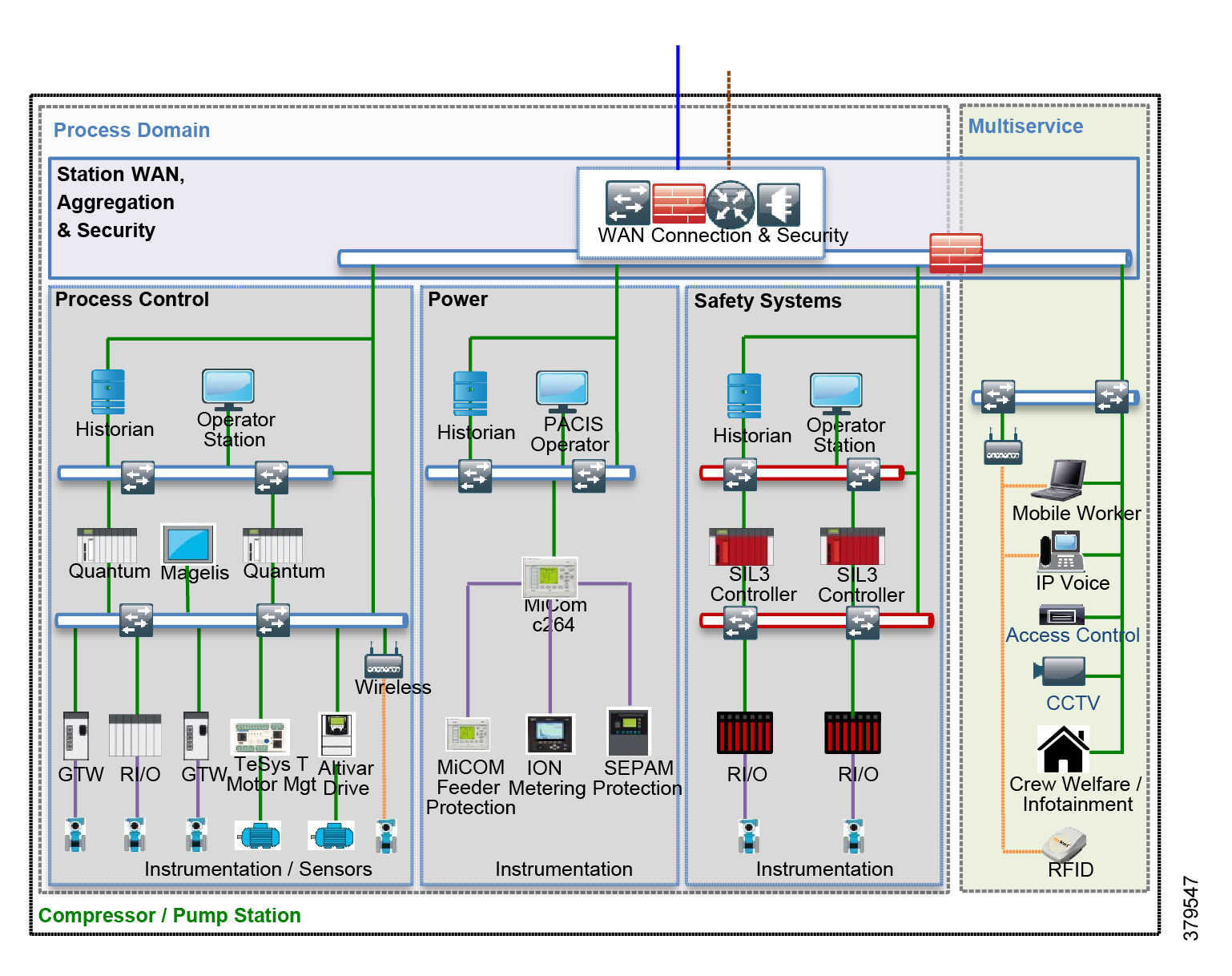

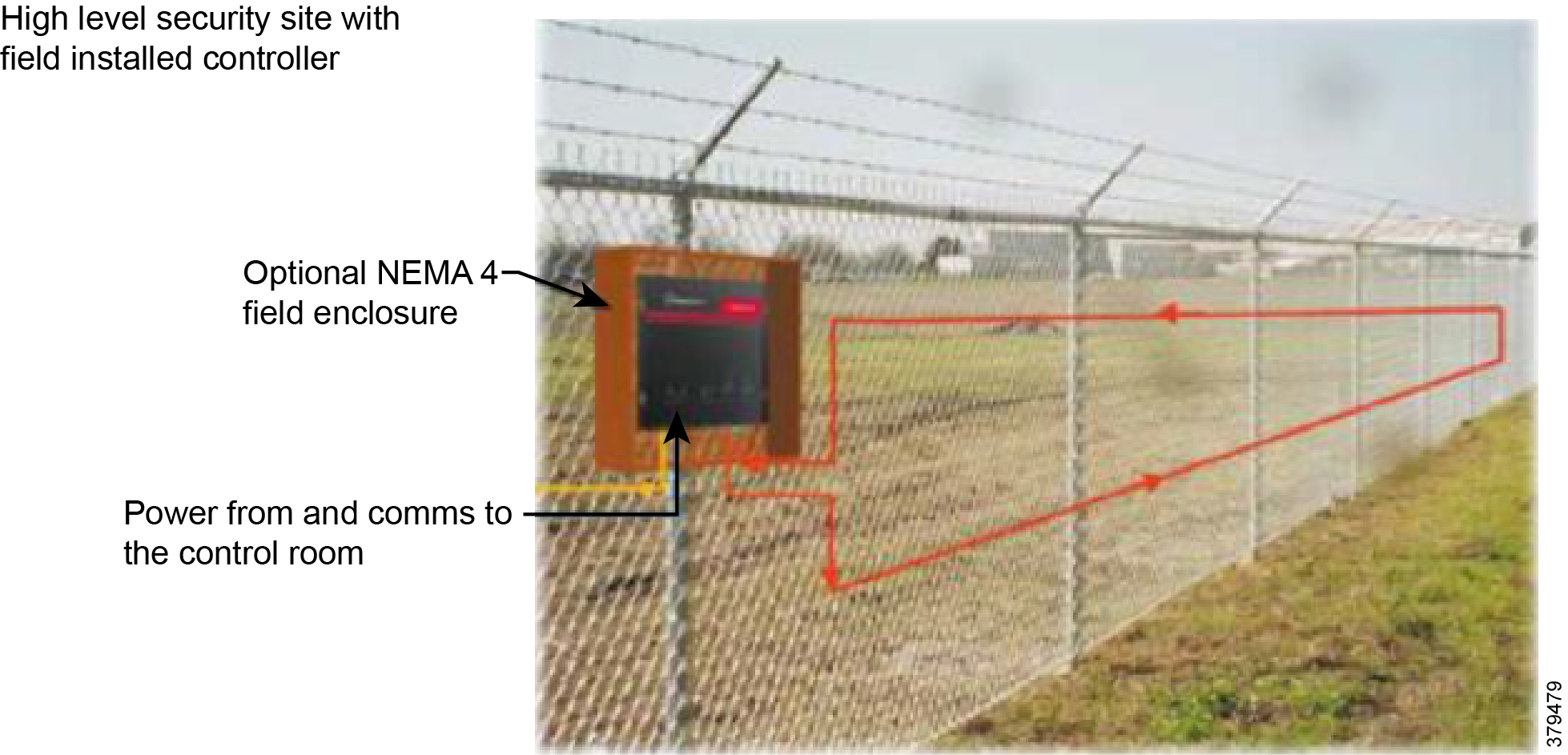

- Hardening the Pipeline Stations

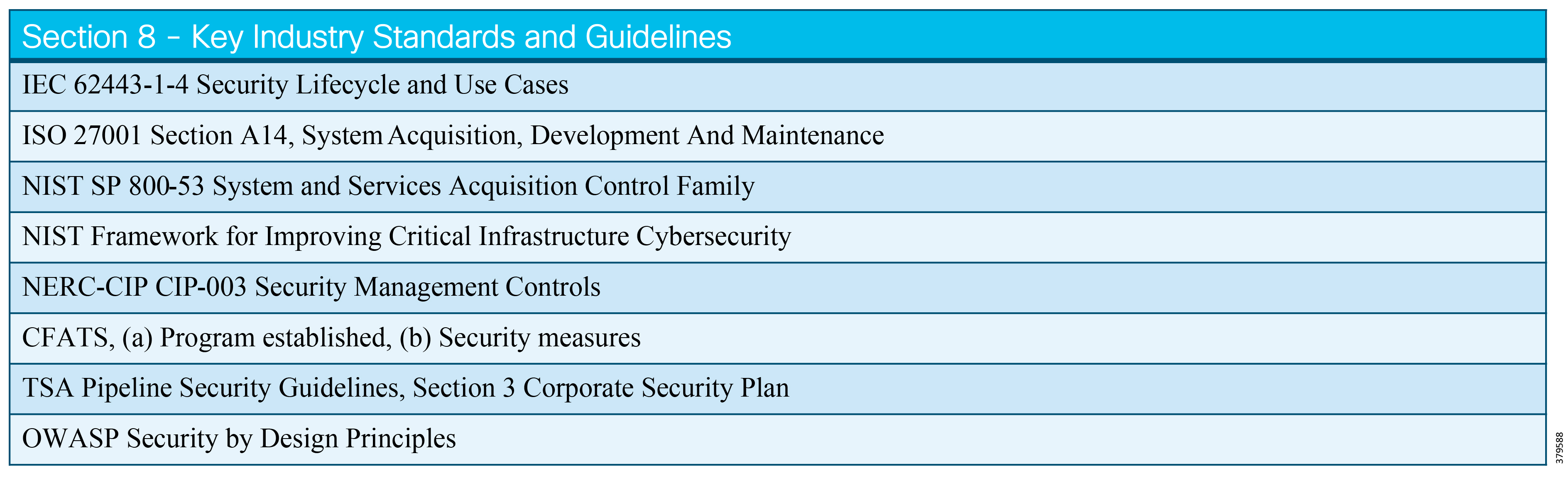

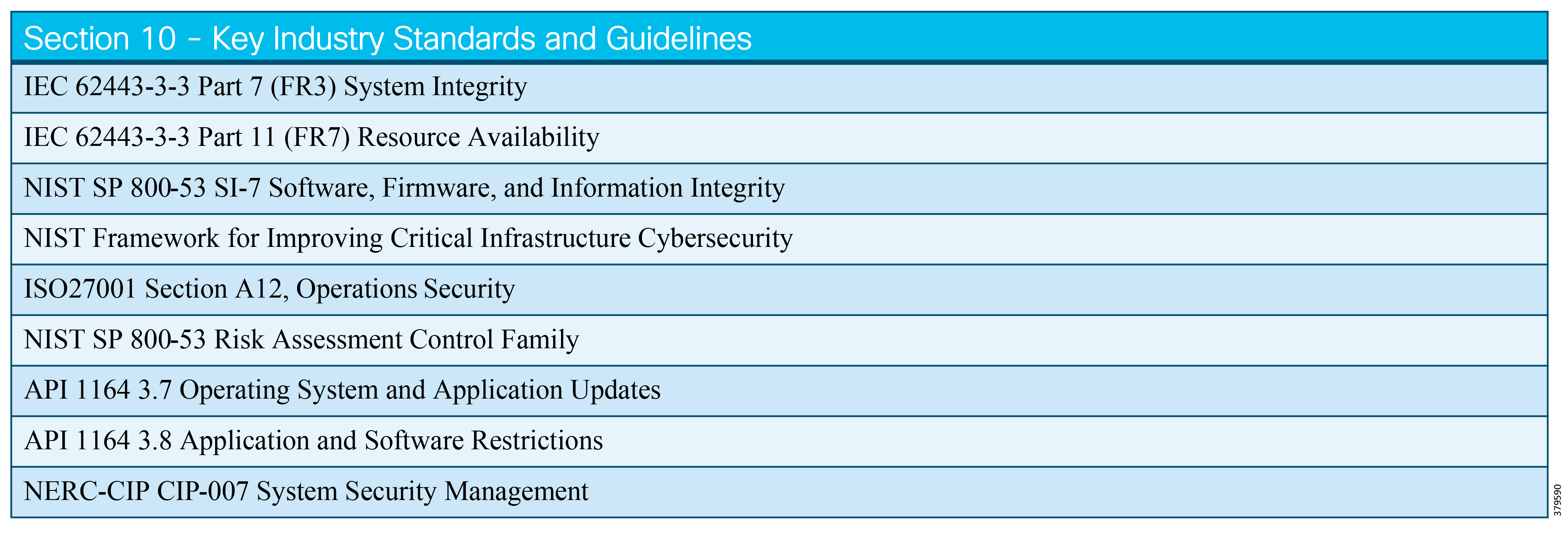

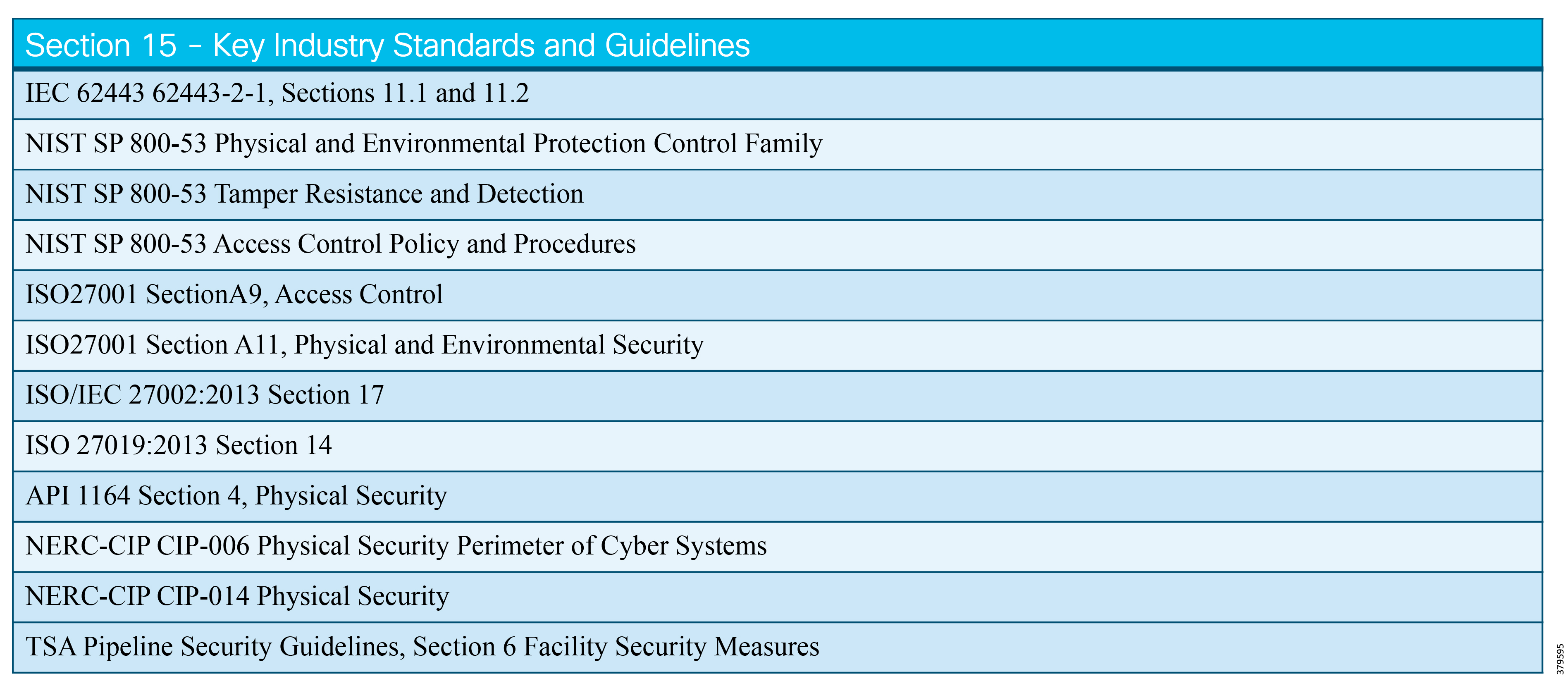

- Industry Standards Cross-Reference: System Hardening

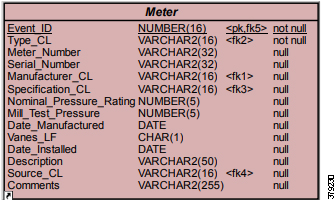

Oil and Gas Pipeline

Industrial Security Reference Design

Foreword

Now more than ever, technological innovations are advancing at a dizzying pace, going mainstream, and changing the world in the process. This is in part due to increased competition, rising regulatory pressures, and public expectations. For Industrial Control Systems (ICS), these factors have increasingly affected pipeline operations and maintenance. Recent technological developments are addressing many complex issues, driving efficiencies, and lowering costs. However, these improvements come with risks, such as theft and unauthorized disclosure of proprietary information. Design flaws and technical vulnerabilities in the information systems used to manage and control the flow of pipeline products and their associated information are often exploited. Numerous examples of cyber breaches that have caused major damage to critical infrastructure appear in the news. Globally, laws, regulations, and standards have become more stringent in an attempt to address the ever-increasing need to thwart cyber attacks. The public at large and regulators alike have raised a rallying cry, compelling the pipeline community to secure its assets to avoid safety hazards, security breaches, penalties, sanctions, and embarrassing news headlines.


As a SCADA Integrator in the ICS space, I have worked with many pipeline companies over the years, addressing the layers of complexity involved in securing their assets. This isn't just a technical issue. To prepare for and prevent current and emerging cyber attacks, organizations must balance technology with human-centric defenses. As cyber threats become increasingly prevalent and more sophisticated with each new technological innovation, the efforts to thwart them must adapt. Security must be integrated into the organization's culture, from the boardroom to the production environment. Only a multi-layered defense strategy (human, physical, and cyber factors) will ensure that attackers penetrating one layer of defense will be stopped by a subsequent layer.

Protecting your company and ensuring security requires significant investment and clear guidelines for training, data integrity, and security. Given the general consensus to secure all manner of assets within pipeline operations, the authors of this paper have endeavored to present a comprehensive reference guide to securing your pipeline operation. This document is a source of information to help you understand the complexity of securing operations and provides a detailed technical solution approach to doing so. It is a well-written, thoughtful, and systematic discussion of all things cybersecurity and lays out the approaches that are in common use today and considered to be standard within the industry. This reference guide is peppered throughout with invaluable commentary from industry experts on best practices, techniques, and relevant technologies. You will gain considerable insight into today's key information security management challenges and concerns as your organization undertakes this formidable task of laying down the groundwork and managing a modern pipeline information security program.

The effort to create this document was the brainchild of a cross-disciplinary team from Cisco, Schneider Electric (SE), and AVEVA. The original intent was to build on the concept of the Smart Connected Pipeline, a fully Tested Validated Documented Architecture (TVDA) solution for pipelines, which includes all components of the solution from SCADA to devices to supporting systems, with a discussion on how security plays into the overall solution and the benefits of a jointly delivered solution. Having worked in this industry over twenty years, I was invited to be a part of this effort as a reviewer of the document, providing feedback and insight into the value it would bring to its intended audience. The authors of this document have created an all-in-one solution reference that can be leveraged to establish a robust cybersecurity strategy for your organization. They have outlined in detail the various elements of a cybersecurity management program, which aligns with many security standards used throughout the world.

Today's business climate has created a demanding landscape where people and companies are more connected than ever and technology is transforming the organization. My hope is that the insights offered by this document will provide a reference to help create a cybersecurity strategy for your organization.

-- Jeff Whitney, Berkana Resources Corporation, October 25, 2018

The Authors

Rik Irons-Mclean—Senior Business Development Manager – Office of the CTO, Industry Solutions Group, Cisco

Kevin J. Rittie—Director, Solutions and Cybersecurity Management, AVEVA

Jason Greengrass—Solution Architect, IoT Solutions, Cisco

Jacques van Dijk—Director, Industry Solution Validation, AVEVA

Robert Albach—Product Manager, Security Business Unit, Cisco

Jose Manuel Pienado—Global Solutions Architect, Schneider Electric

Preface

This reference document describes the Cisco Systems, AVEVA, and Schneider Electric approach to cybersecurity in the Oil and Gas (O&G) industry, specifically for Pipeline Management Systems (PMSs). The following key principles drive the guidelines in later sections:

- Security, an essential component of any properly designed and implemented Industrial Control System (ICS), forms a core part of the strategy to ensure the safety and reliability of O&G pipeline management solutions.

- Systems and architectures are continuously evolving as technology changes and in response to an increasing attack surface. The accepted security mindset is that O&G PMSs will be subject to cyber threats or attacks and, as such, organizations should prepare accordingly.

- Standards and guidelines are an essential foundation, but they do not describe how to secure specific systems. As all systems are different, standards and guidelines should be leveraged as a best practice framework and tailored specifically to business needs.

- The best security strategy is achieved through information technology (IT) and operational technology (OT) teams collaborating with technology vendors and standards organizations (regulatory bodies and industry trade organizations) to understand how individual strengths can be leveraged to best address control system risks.

- Any solution is only as strong as its weakest part. The best strategy to mitigate risk is to embrace “ecosystem security,” where all components of a system are designed, built, tested, validated, and certified wherever possible, taking end-to-end cyber and physical security into consideration.

- Security is a continuous process, not a single, isolated effort. Every design phase should include a set of security steps to be followed, integrating security directly into the solution throughout its lifecycle. To be most effective, security must be included in the lifecycle design from the outset. By building the system with a robust security architecture at its core, integrating with broader organizational compliance and governance efforts, a more effective, lower-cost security approach can be achieved.

- Security incidents will inevitably occur. Having a well-documented set of processes and procedures on how the organization responds to incidents is essential. It is not only the speed with which a threat is discovered, but also the speed with which security threats are mitigated and remediated that controls the potential risks and costs arising from an incident.

Intended Audience

The primary intended audience for this document is anyone with a responsibility or interest specifically in pipeline security or more generally in O&G security, as these principles are equally applicable to all areas of the O&G value chain. The audience includes those involved in security design, architecture, standards and compliance, and risk management.

Industry Perspective and Requirements

Cybersecurity threats and breaches continue to make headline news with impact across all industries and sectors. O&G, like other critical infrastructure environments, relies on highly available ICSs and supporting infrastructure. In this document, the focus is on PMS; however, the guidelines are applicable to any ICS. The consequences of cybersecurity breaches, such as station shutdown, utilities interruption, production disruption, impact to the environment through detected or undetected leaks, and even the loss of human life, all mean cybersecurity is a critical area of concern within the industry.

A 2017 study [1] found that industrial cybersecurity is difficult for even the most technologically advanced O&G companies for a number of reasons, including:

- Long life cycles of operational assets

- Inaccurate and outdated asset technology inventories

- 24/7/365 continuous operation times (barring turnarounds and shutdown)

- No single approach to security in the regulations or standards

These factors, coupled with a rapidly evolving technological landscape, pose a serious risk to the industry. This is not merely a concern for the future, but a current reality. A study [2] found that sixty-eight percent of respondents to an O&G survey admitted that their organizations had experienced at least one cyber compromise in 2016. For large organizations, the same study found that there were two to five such incidents per organization.

The potential for disruption and damage from cyberattacks is real and a threat that the industry must proactively address to protect the critical infrastructure of a foundational economic industry.

This reference document aims to help organizations address this threat by providing a simplified overview of industry best practices to help protect O&G pipelines from intentional or accidental security threats. While multiple standards and guidelines exist to offer perspectives on this subject, this document addresses the most consistent themes highlighted throughout the standards and guidelines, as well as introduces some of the latest developments that may pose a threat to reliable pipeline operations.

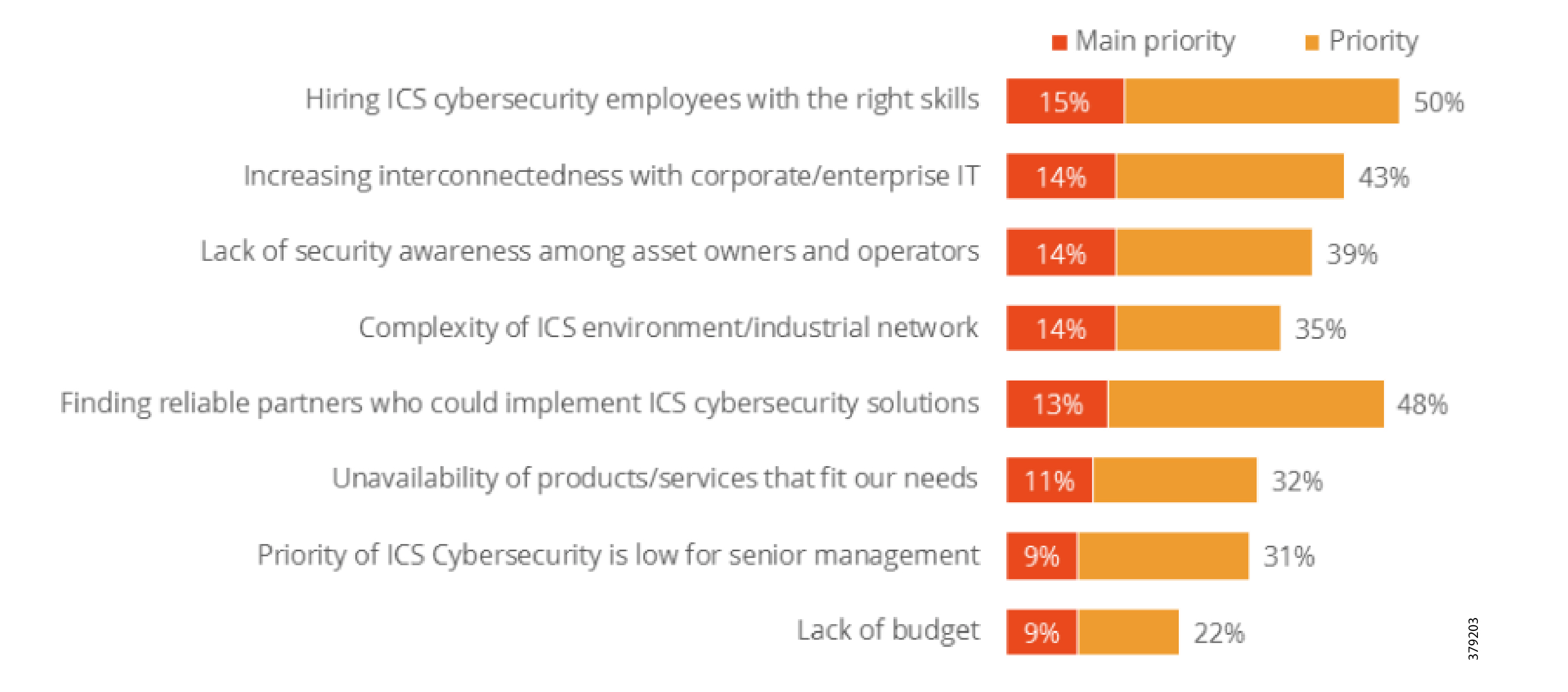

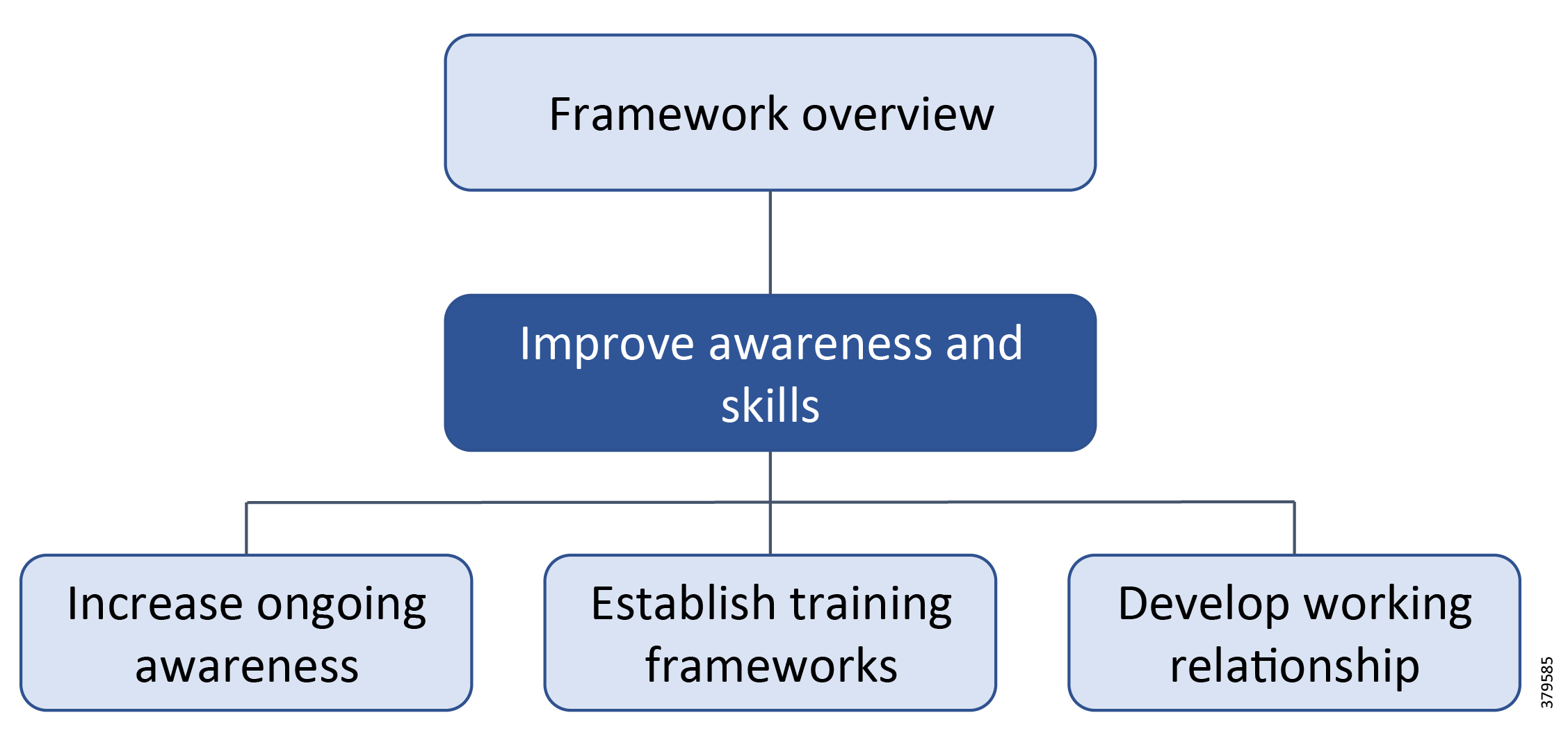

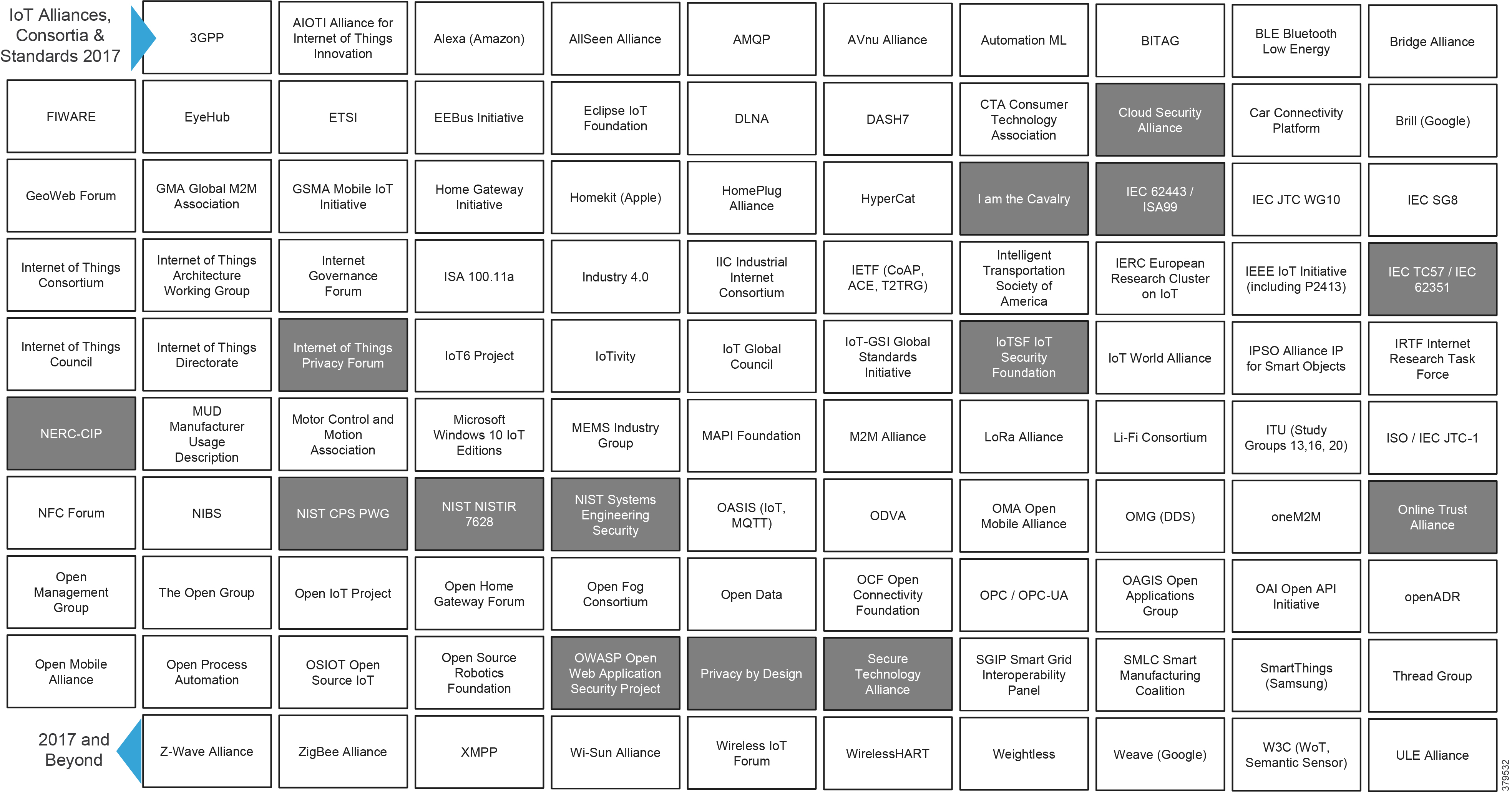

A key theme throughout this document, beyond describing standards, guidelines, and the nature of threats, is to make organizations recognize that they must have the appropriate personnel, internally or externally, to effectively support their cybersecurity practices. The industry is taking steps to meet this need as shown in Figure 1, but additional progress is still required.

Figure 1 Challenges of Managing ICS Security [3]

A recent SANS Institute report [4] found that four out of ten organizations lack appropriate visibility into their ICS networks to monitor assets and operations to identify potential threats. This creates the risk of not being able to recognize and respond to attacks. How do you secure what you do not understand?
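One way to make the visibility gap concrete is to compare the documented asset inventory with what is actually observed on the ICS network. The following Python sketch is illustrative only: the function name `visibility_gaps` and all device identifiers are hypothetical and are not taken from the cited report or from any product.

```python
def visibility_gaps(inventory, observed):
    """Compare a documented asset inventory with assets observed on the network.

    Both arguments are iterables of asset identifiers (hypothetical names).
    """
    documented = set(inventory)
    seen = set(observed)
    return {
        # Present on the network but missing from the inventory.
        "undocumented": sorted(seen - documented),
        # Listed in the inventory but not observed on the network.
        "unobserved": sorted(documented - seen),
    }


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    inventory = ["rtu-station-01", "plc-pump-02", "hmi-cc-01"]
    observed = ["rtu-station-01", "hmi-cc-01", "laptop-unknown"]
    print(visibility_gaps(inventory, observed))
```

Either list being non-empty is a prompt for investigation: an undocumented device may be unauthorized, and an unobserved one may be offline, decommissioned, or simply outside the monitored segment.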

Cisco, Schneider Electric, and AVEVA work with a wide range of organizations involved in the design, construction, and operation of critical pipeline assets in midstream and downstream environments. We are consistently asked to help plan a comprehensive security strategy and roadmap, helping organizations navigate through the growing security threat landscape.

This document provides the foundation to develop a strong security posture and roadmap, with practical advice and recommendations as to what to do and when throughout the security lifecycle.

Document Scope

This document provides best-practice guidance rather than detailed design and implementation techniques. Cisco, Schneider Electric, and AVEVA services teams are available to jointly build on this guidance and deliver detailed individual customer designs, architectures, roadmaps, and implementations.

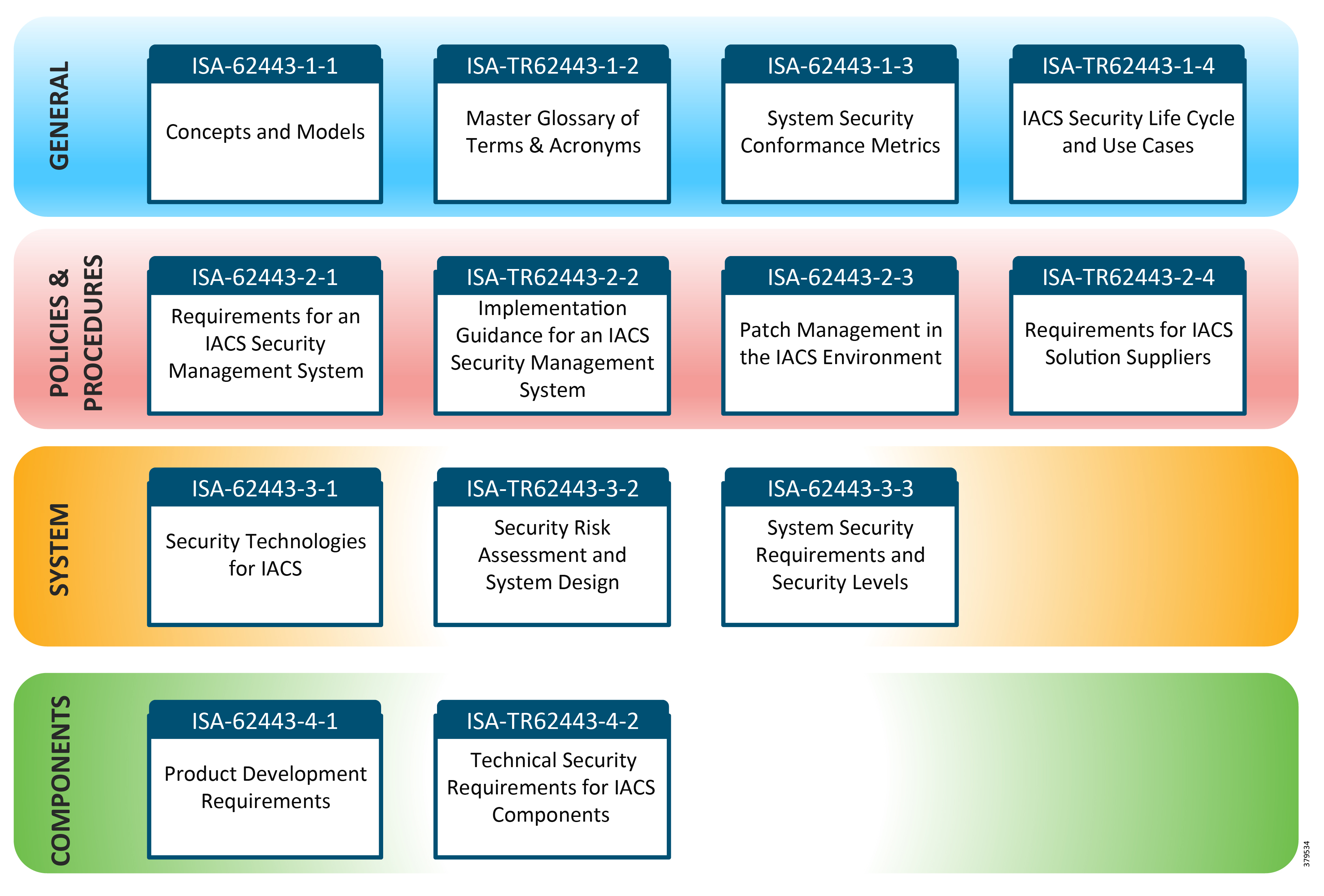

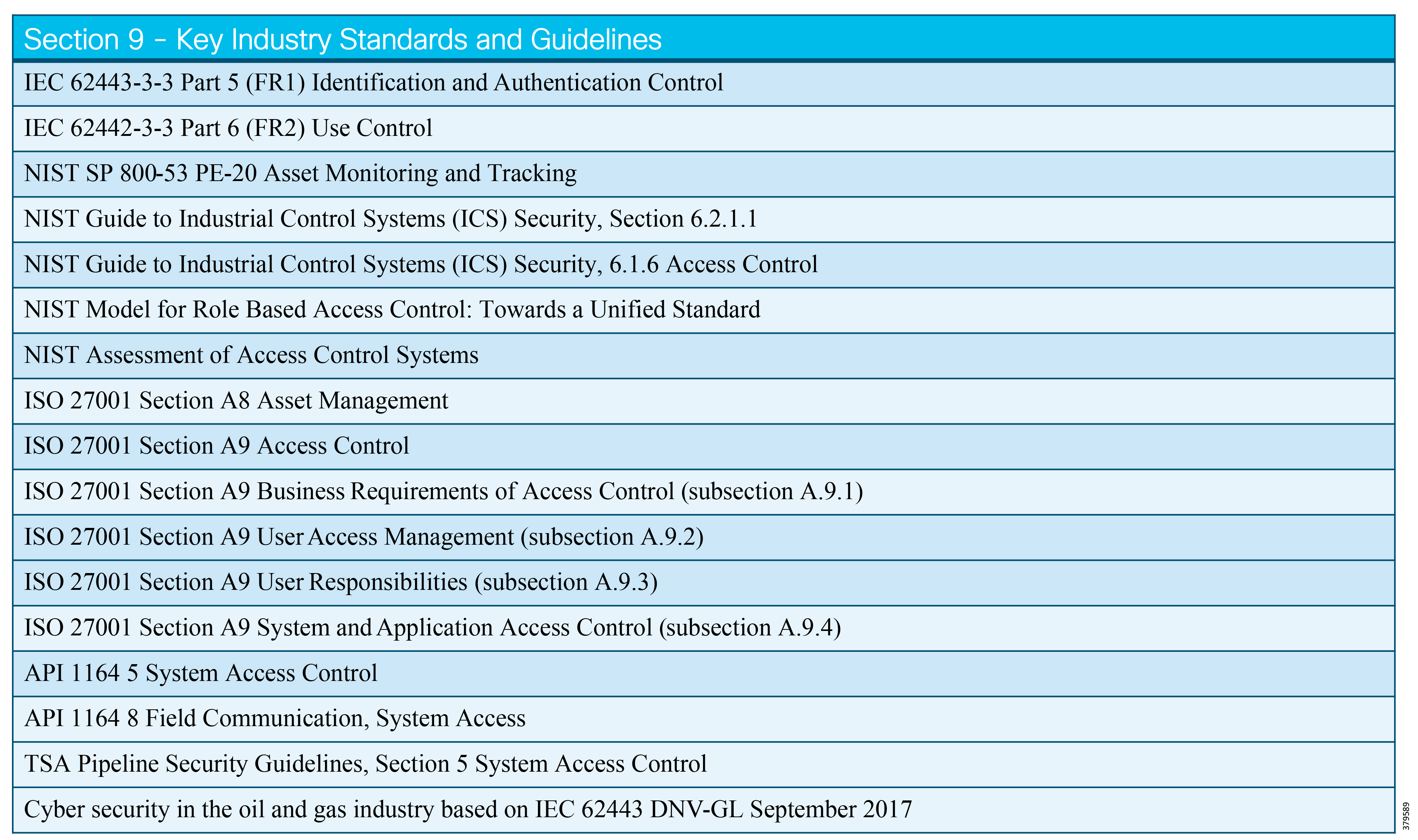

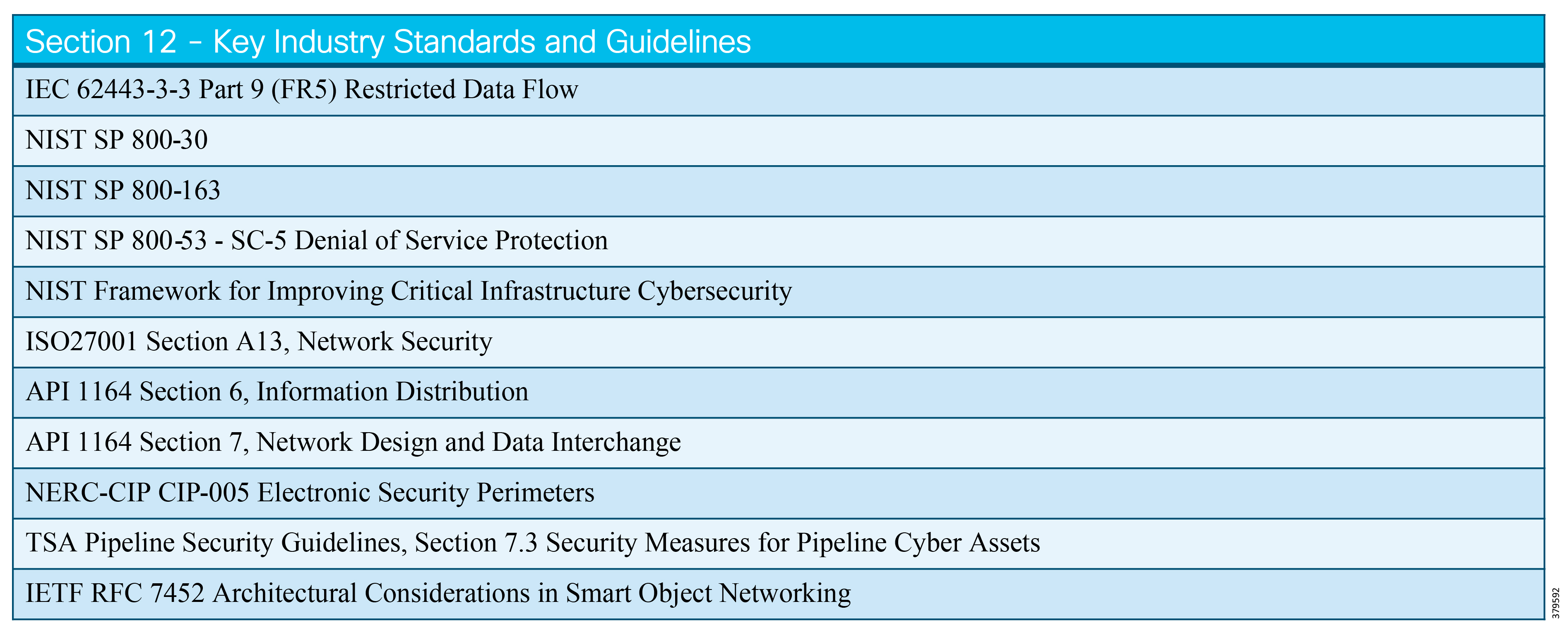

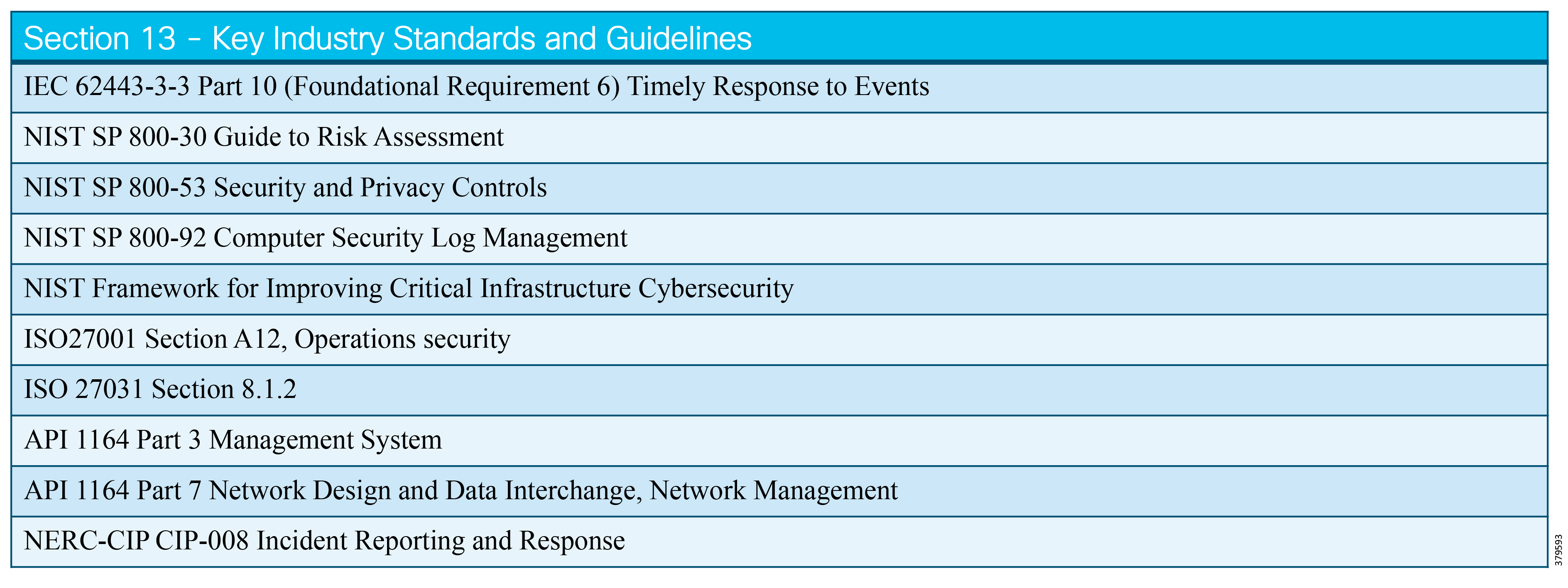

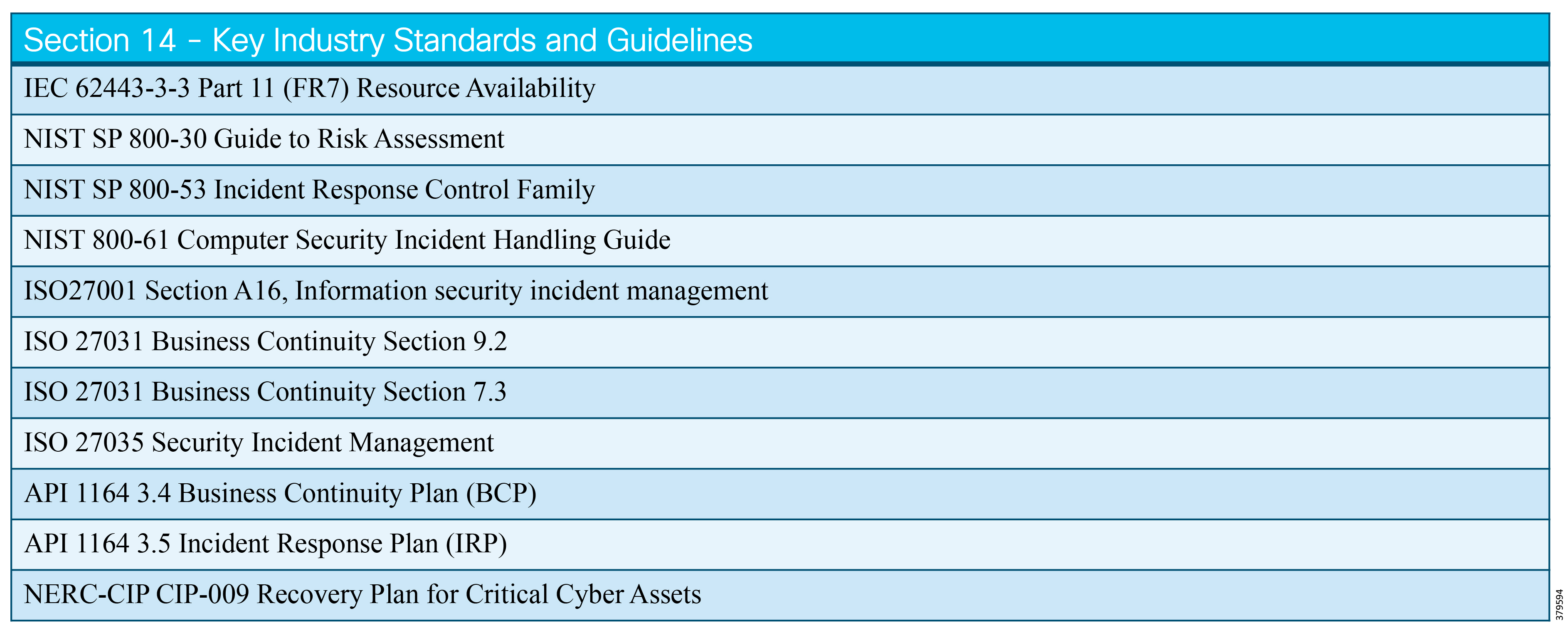

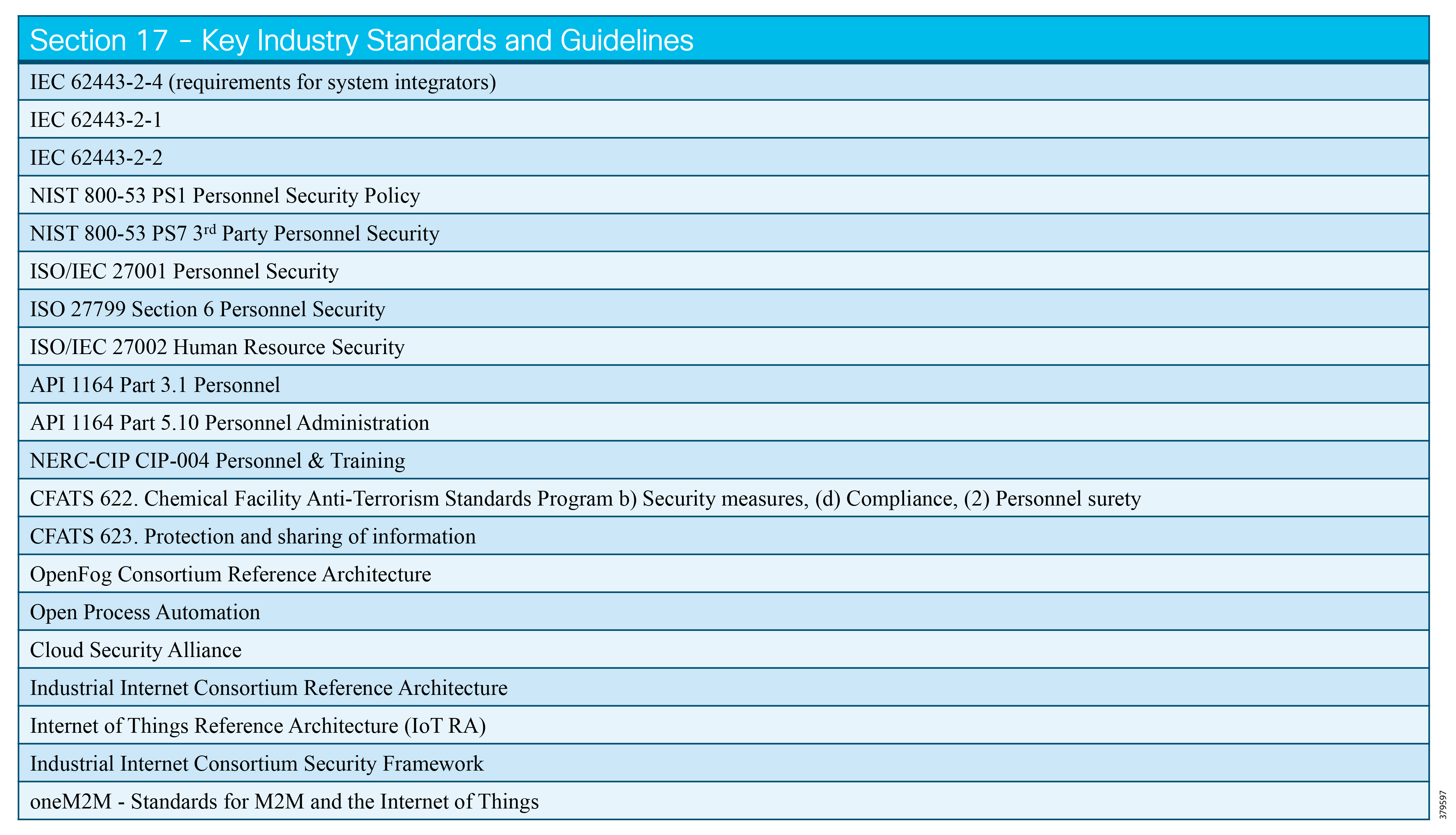

The key standards covered in this document that relate to O&G pipelines and ICS security include:

- IEC 62443 Industrial Control System standard

- API 1164 Pipeline Supervisory Control and Data Acquisition (SCADA) Security standard

- NIST 800-82 Guide to Industrial Control System Security

- IEC 27019 Security Management for Process Control

- Chemical Facility Anti-Terrorism Standards (CFATS)

- NERC-CIP where interfacing with the power grid, and remote station best practice electronic and physical perimeters

This document is designed to complement and enhance the security advice provided in the jointly-delivered validated design and implementation guides for control centers and operational pipeline communication networks that can be found at:

- Smart Connected Pipeline—Control Centers: https://www.cisco.com/c/en/us/solutions/enterprise/design-zone-manufacturing/connected-pipeline-control-center.html

- Smart Connected Pipeline—Operational Telecoms: https://www.cisco.com/c/en/us/solutions/enterprise/design-zone-manufacturing/connected-pipeline-operational-telecoms.html

Introduction and Industry Overview

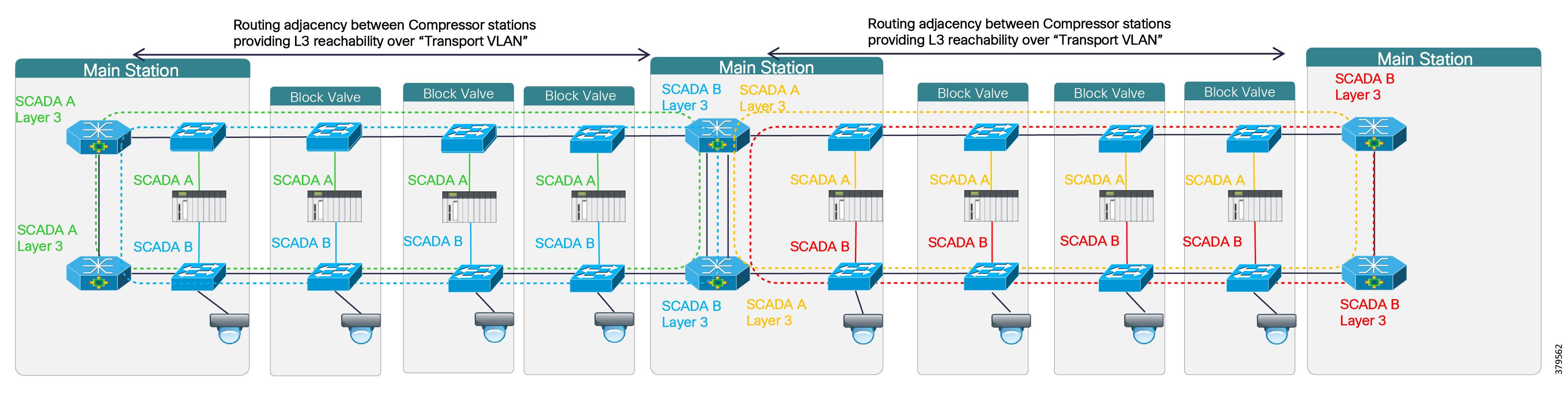

Transmission and distribution pipelines are the key transport mechanism for the O&G industry, operating continuously outside of scheduled maintenance windows. Pipelines provide an efficient, safe, and cost-effective way to transport processed or unprocessed oil, gas, and raw materials and products both onshore and offshore. It is essential that they operate as safely and efficiently as possible. When problems occur, operators must be able to restore normal operation quickly to meet environmental, safety, quality, and production requirements.

Oil and Gas Pipeline Environment

For all pipeline operators, the safety and high availability of PMSs and underlying infrastructure are paramount. O&G pipeline management is challenging, with pipelines often running over large geographical distances, through harsh environments, and with limited communications and power infrastructure available. In addition, pipeline operators must operate their lines safely, following regulatory requirements, such as those of the Pipeline and Hazardous Materials Safety Administration (PHMSA), and industry best practices, including compliance with stringent environmental regulations, while also addressing growing cyber and physical security threats.

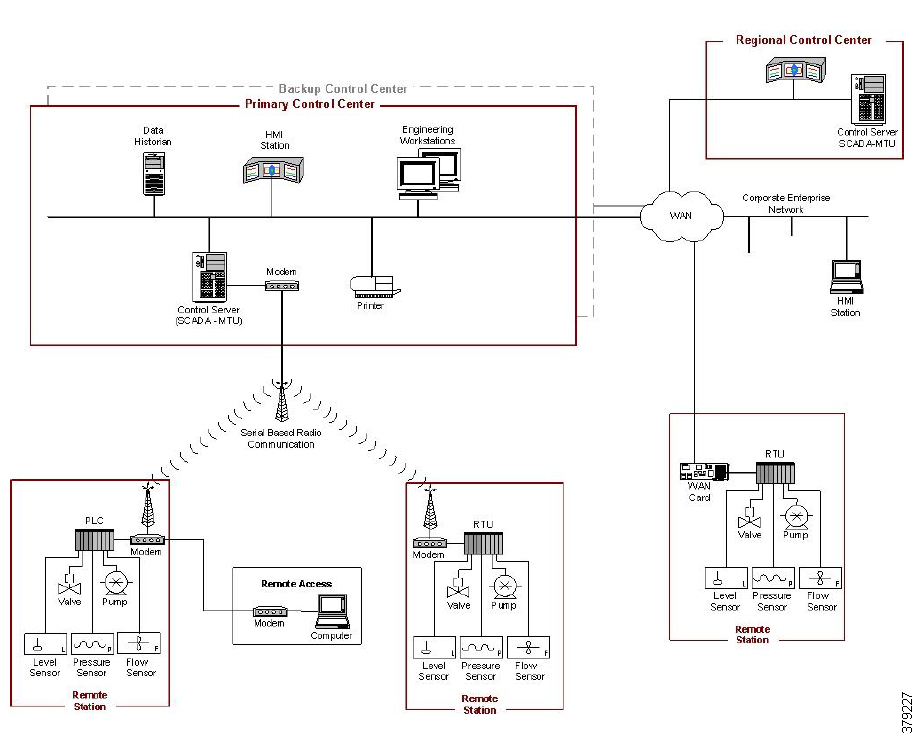

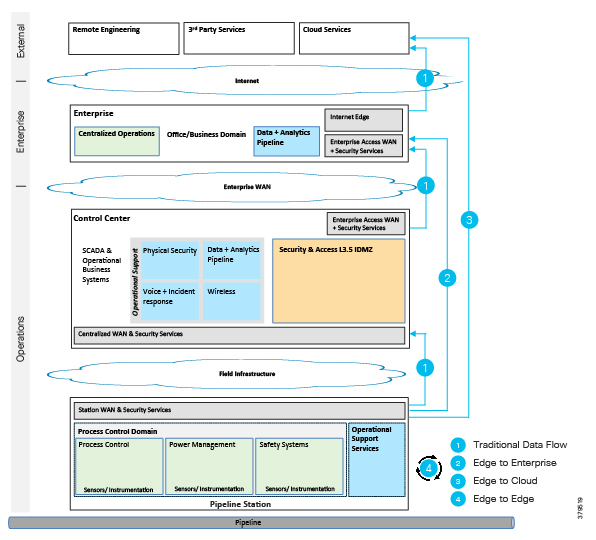

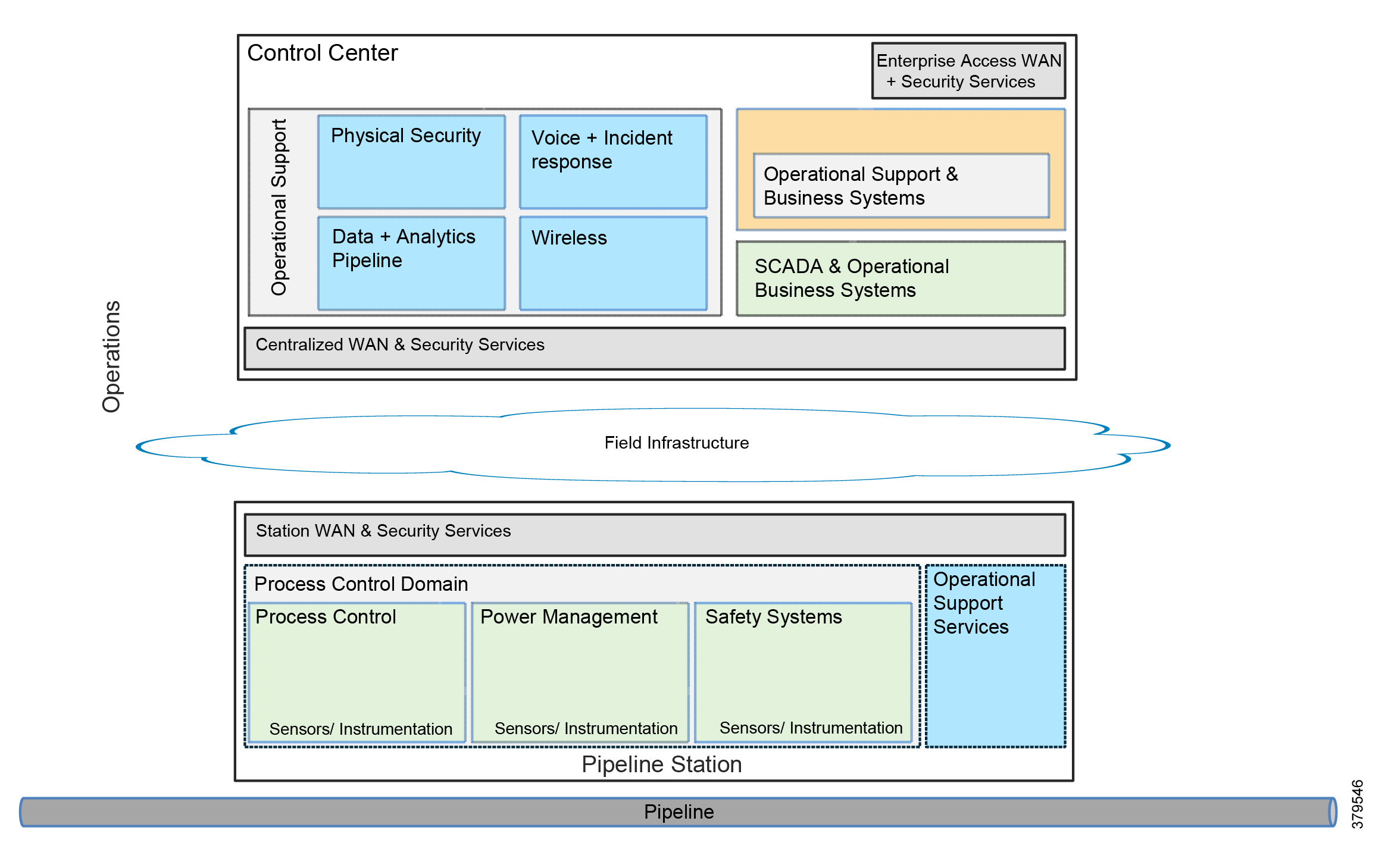

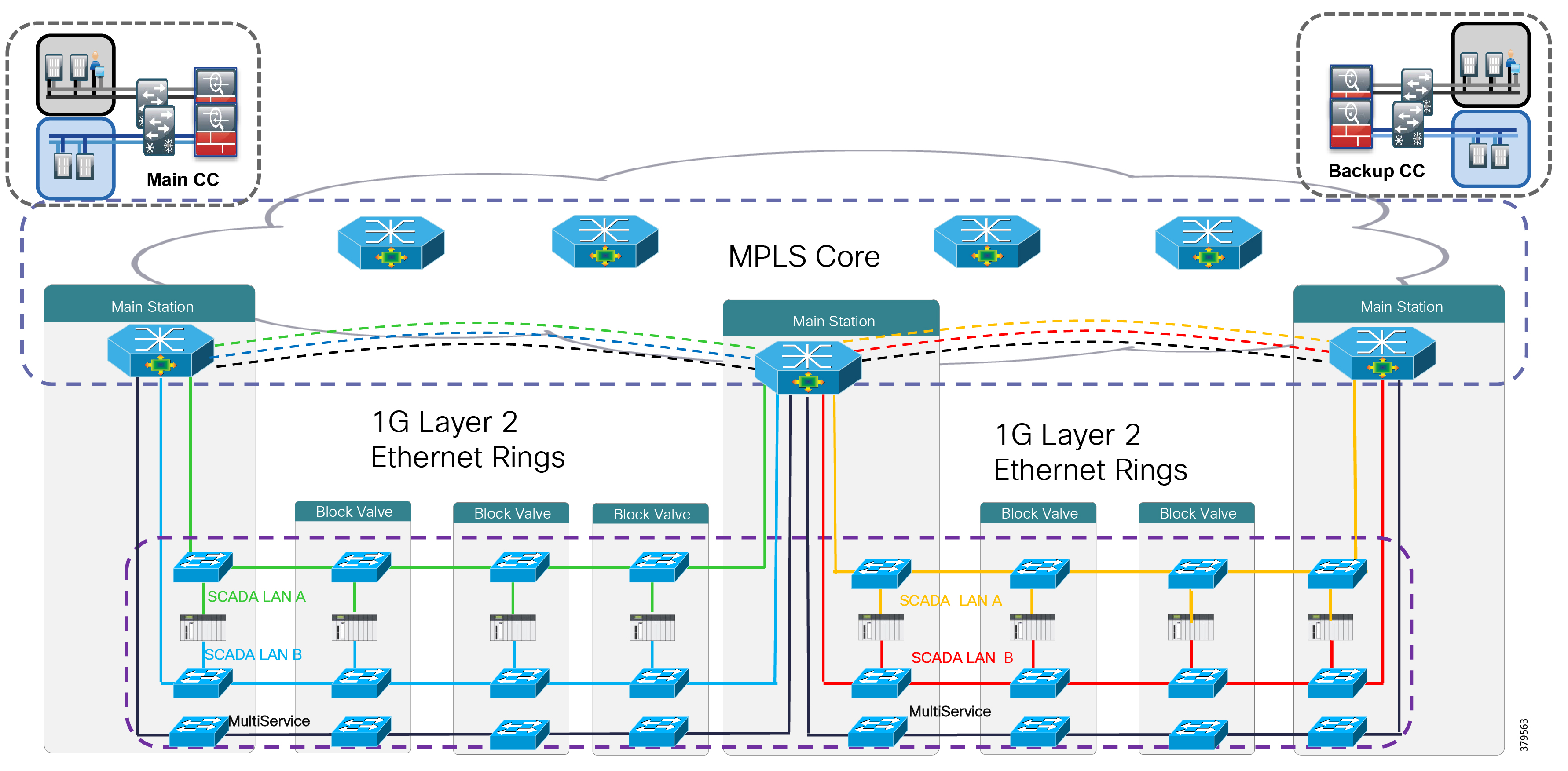

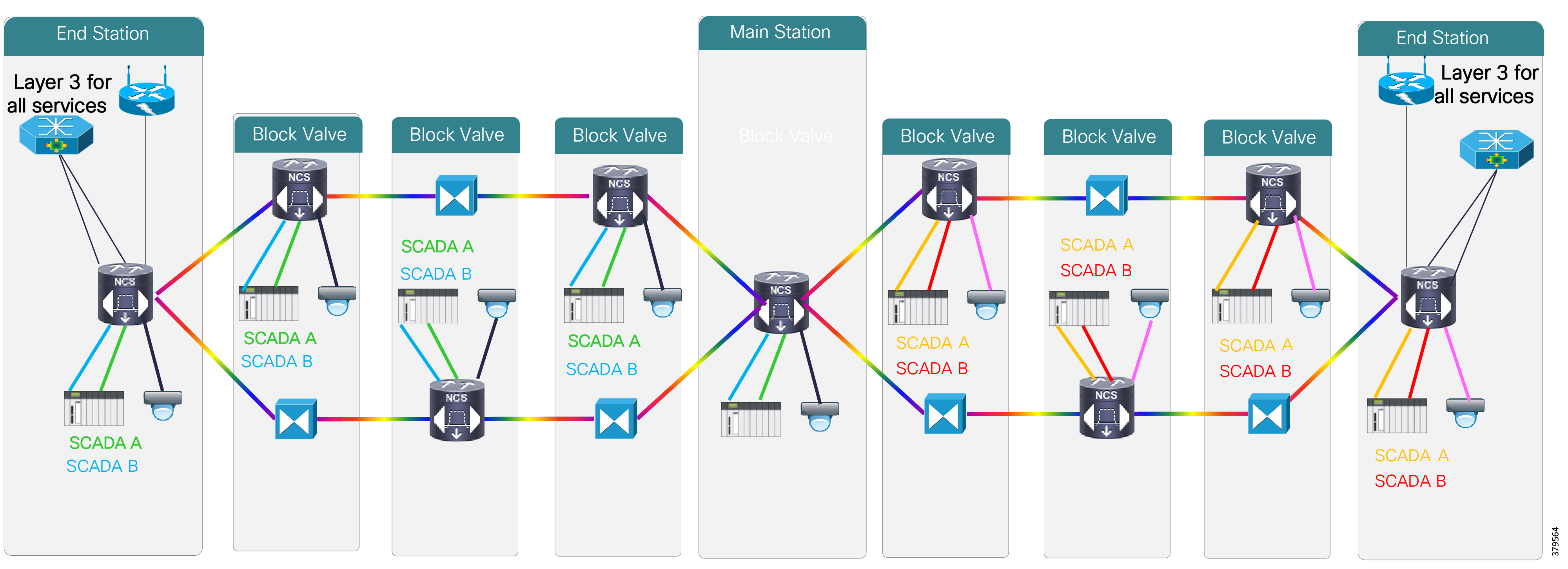

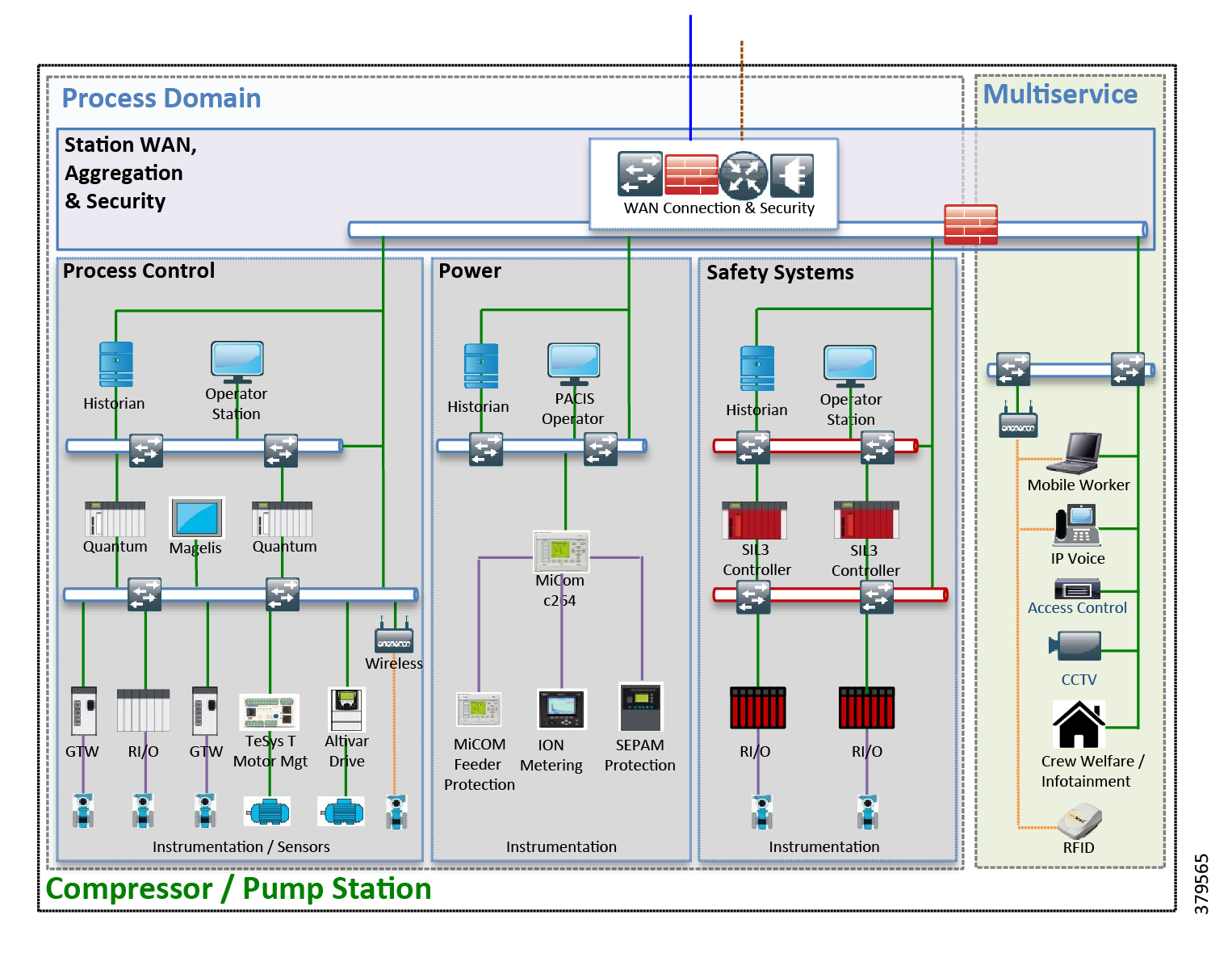

The O&G pipeline architecture (Figure 2) includes operating processes and safety and energy management functions geographically spread along the pipeline for a set of stations.

Figure 2 High-Level Pipeline Architecture

Stations vary in size and function, but typically include compressor or pump stations, metering stations, Pipeline Inspection Gauge (PIG) stations, terminal stations, and block valve stations. Each asset must be linked with the applications and processes at the Control Center(s) (main and backup) and sometimes at other stations through operational field telecoms infrastructure. The infrastructure must be implemented in a reliable and efficient way, avoiding communications outages and data losses. The Control Center(s) should also be securely connected to the enterprise to allow users to improve operational processes, streamline business planning, and optimize energy consumption. It is important to note that some of these services may reside in the operational control center.

Pipeline Applications

The traditional approach to safe and efficient pipeline operations relies on real-time monitoring and control, with data collected into a centralized PMS. The PMS combines SCADA with real-time applications specific to the O&G industry such as leak detection and flow measurement.

These integrated applications provide pipeline operators with:

- Real-time or near real-time control and supervision of operations along the pipeline through a SCADA system based in one or more Control Centers

- An accurate measurement of key performance indicators (KPIs) such as flow, volume, and levels to ensure correct product accounting

- The ability to detect and locate pipeline leaks, including time, volume, and location

- Safe operations through instrumentation and safety systems where included

- An energy management system to visualize, manage, and optimize energy consumption within main stations

- Asset performance management such as condition-based monitoring and predictive analytics to proactively assess and address asset health

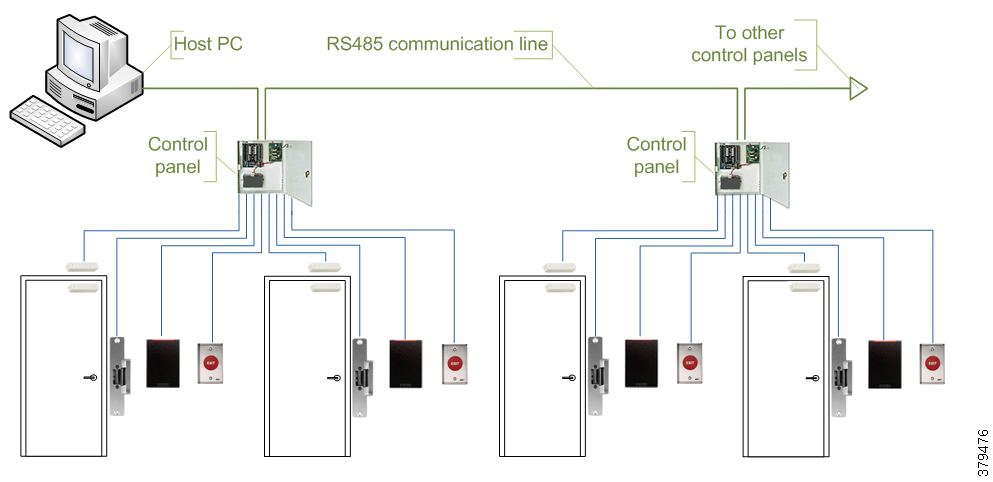

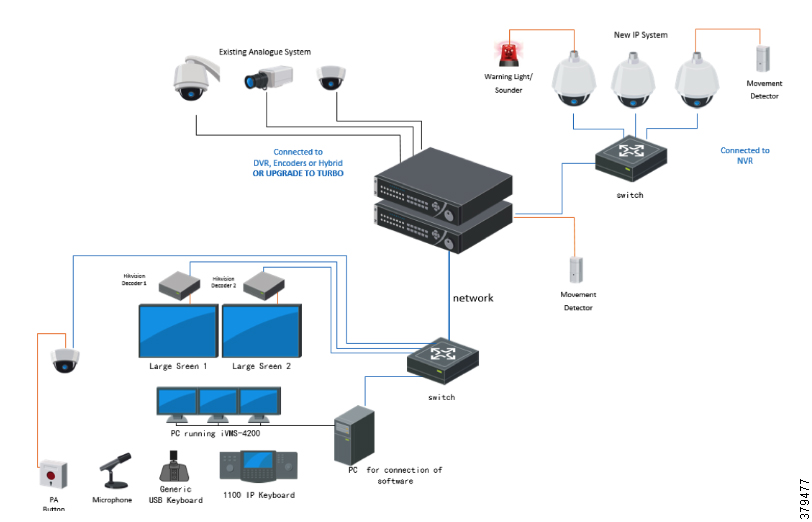

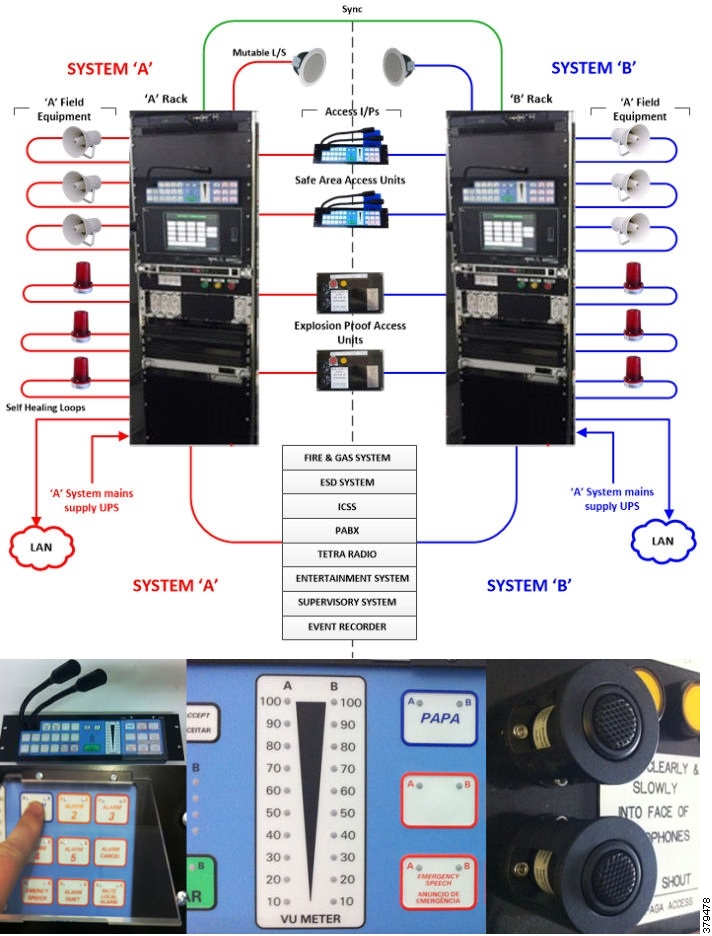

In addition to the pipeline management applications, non-production applications that support pipeline operations, such as physical security, voice, Public Address and General Alarm (PAGA) safety announcements, video, and wireless, also exist.

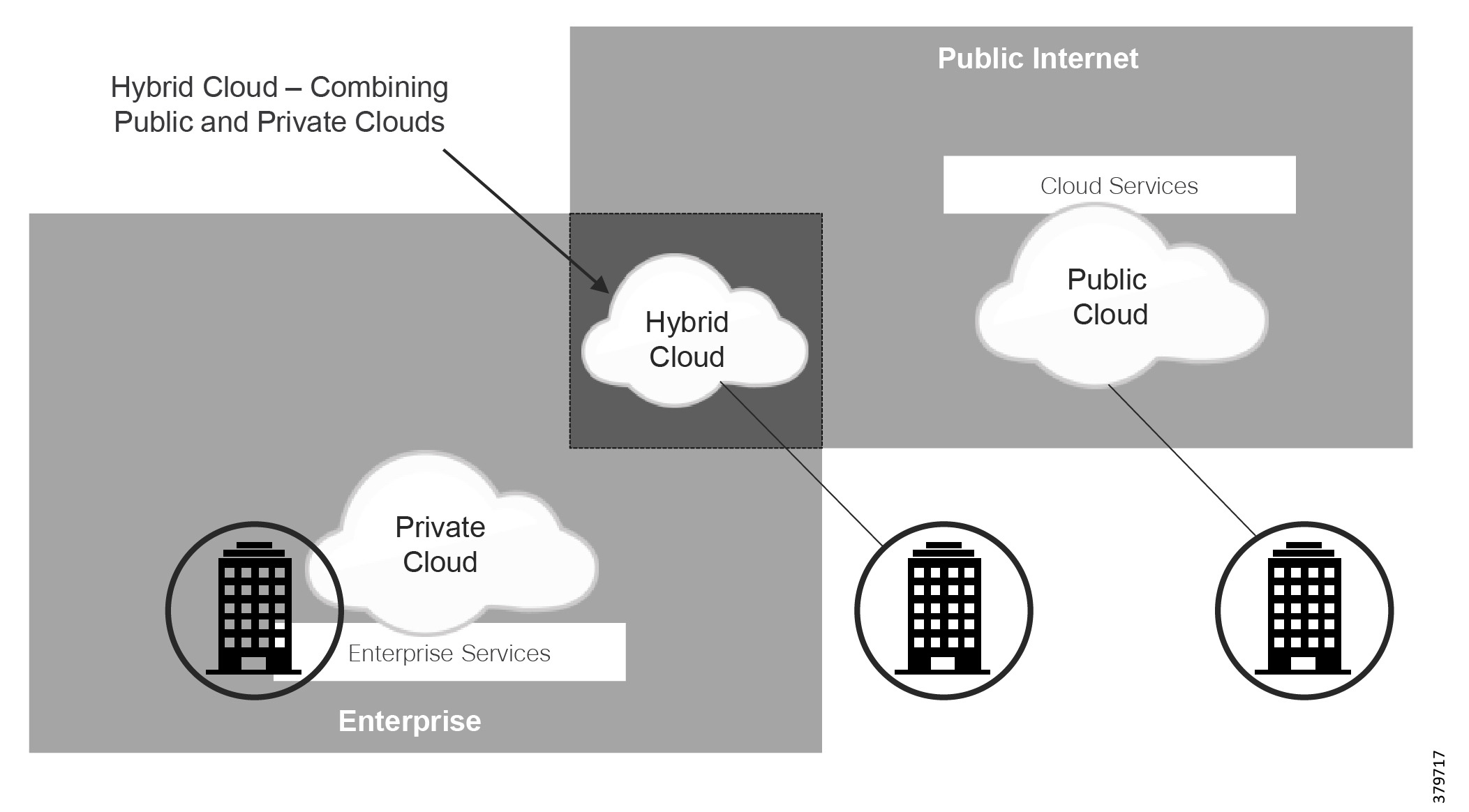

Both types of applications are mentioned because both are subject to threats and may disrupt pipeline operations. As such, they need to be included as part of a holistic security strategy. Furthermore, many of these applications bridge operations and business. Customers are leveraging solutions that are exclusively on-premises, exclusively in the cloud, or hybrids of the two, and each deployment model must be included in any security analysis and planning. Cloud use in particular introduces new security concerns tied to the architecture of the cloud-based solution. A true SaaS offering, where multiple users share a common application, introduces the risk of cross-user data exposure and makes sensitive data stored in the cloud accessible over the web. This increases the attack surface and requires both the user and the supplier to consider how data is protected.

The last few years have seen an explosion of new technologies that can help improve the efficiency, availability, and safety of pipeline operations, including:

- Leveraging fiber for acoustic sensing for leak detection and tamper and intrusion prevention

- Advanced integrity management

- Enterprise asset performance management (EAPM)

- Industrial Internet of Things (IIoT)

Although these technologies bring new benefits, they also introduce new attack surfaces and expose pipeline operations to new risks.

Traditional Security Architecture Approach

Secure architectures for both the operational and the enterprise layers associated with a pipeline are critical components of any design.

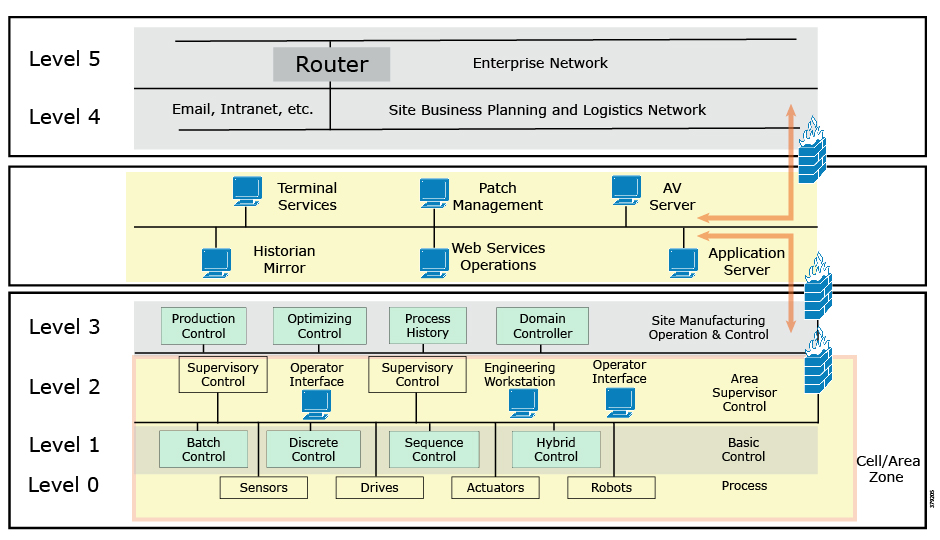

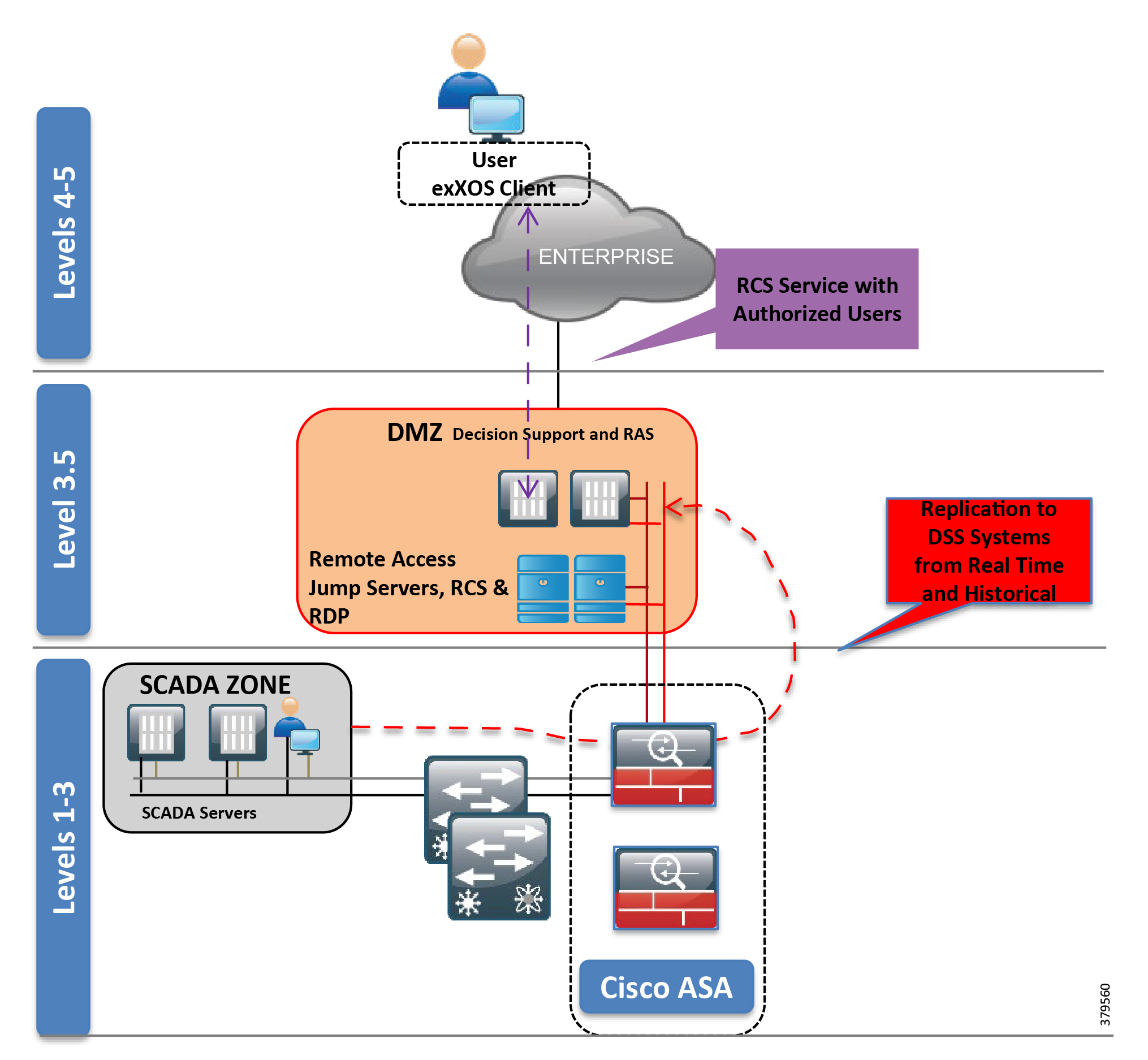

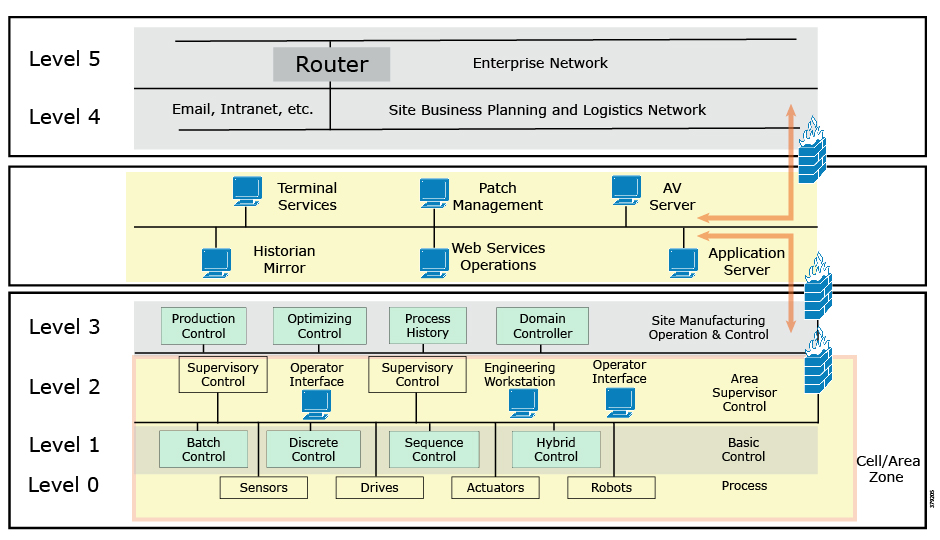

IEC 62443 is the most widely adopted cybersecurity standard globally for ICSs and is the de facto standard in the industry. Its roots lie in the 1990s, when the Purdue Model for Control Hierarchy (Figure 3) and ISA 95 established a strong emphasis on security architecture using segmented levels for ICS deployments. This was further developed through ISA 99 and IEC 62443, bringing additional risk assessment and business process focus. This segmented layered architecture forms the basis of many industrial system architectures and ICS security architectures.

The Purdue Model for Control Hierarchy also describes a hierarchical data flow model, where sensors and other field devices are connected to the ICS. The ICS serves the dual purpose of controlling processes or machines and serving processed data to the operations management level of applications. Level 3 applications feed information to the enterprise business system level through Level 3.5.

Figure 3 Example Purdue Model for Control Hierarchy Architecture

The model has seen an additional level informally integrated, mainly due to the divide between the OT and IT domains. The Level 3.5 Industrial Demilitarized Zone (IDMZ) provides a strict segmentation zone and boundary between layers. However, services and data need to be exchanged between the enterprise layer and the ICS. Systems located in the IDMZ, such as a shadow historian, bring all the data together for company personnel in a near real-time system, publishing near real-time and historical information to the enterprise layer for better business decision-making.

No direct communication is allowed between the enterprise layer and the ICS layer. The IDMZ provides a point of access and control for the provision and exchange of data between these two layers. The IDMZ provides termination points for the enterprise layer and the operational domain and hosts various servers, applications, and security policies to broker and police communications between the two domains.
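The rule that enterprise and ICS systems never talk directly, and that every exchange passes through the IDMZ, can be expressed as a simple check on Purdue levels. The sketch below is a hedged illustration: the names `zone_of`, `flow_allowed`, and `path_allowed` are hypothetical, and the mapping of levels to zones assumes the convention used in this document (Levels 3 and below are operational, Level 3.5 is the IDMZ, Levels 4 and above are enterprise).

```python
ENTERPRISE_MIN_LEVEL = 4.0  # Levels 4 and above: enterprise domain (assumed mapping)
IDMZ_LEVEL = 3.5            # Level 3.5: Industrial Demilitarized Zone


def zone_of(level):
    """Map a Purdue level to the domain it belongs to."""
    if level >= ENTERPRISE_MIN_LEVEL:
        return "enterprise"
    if level == IDMZ_LEVEL:
        return "idmz"
    return "ics"


def flow_allowed(src_level, dst_level):
    """Reject any single hop that connects enterprise and ICS directly."""
    zones = {zone_of(src_level), zone_of(dst_level)}
    return zones != {"enterprise", "ics"}


def path_allowed(levels):
    """Check every hop along a path given as a sequence of Purdue levels."""
    return all(flow_allowed(a, b) for a, b in zip(levels, levels[1:]))


if __name__ == "__main__":
    # Level 3 data published via an IDMZ system (e.g. a shadow historian) to Level 4.
    print(path_allowed([3, 3.5, 4]))  # brokered through the IDMZ
    # The same data sent straight from Level 3 to Level 4.
    print(path_allowed([3, 4]))       # direct enterprise-to-ICS hop
```

In a real deployment this policy would be enforced by the firewalls, servers, and brokers hosted in the IDMZ rather than by application code; the sketch only captures the logic of the segmentation rule.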

The traditional model has focused on segmentation and restricted traffic flows, but this is not enough to secure an operational domain. More recently, the National Institute of Standards and Technology (NIST) Cybersecurity Framework—with its charter to protect critical infrastructure—has strongly emphasized the human component of cybersecurity. A number of industry-specific regulations and guidelines for pipeline operators, such as API 1164 for SCADA security, also exist.

The reality is that technology itself can only address about half of the cybersecurity threat, whereas people and process play a critical part in every aspect of threat identification and monitoring. This means that guidelines and standards are not concrete measures to provide protection, but do provide a solid foundational base from which to work. The most comprehensive approach to securing the PMS combines technology, people, and processes, both cyber and physical.

A properly designed standards-based architecture to secure use cases and systems, bringing together the operational (Levels 3 and below) and enterprise (Levels 4 and above) domains, is critical. The architecture should provide an understanding of all components of a use case, map these elements together in a structured way, and show how they interact and work together. A properly designed architecture not only brings together IT and OT technologies, but also includes vendors and third-party content; in other words, it secures the ecosystem. Gartner [5] believes that security can be enhanced if IT security teams are shared, seconded, or combined with OT staff to plan a holistic security strategy.

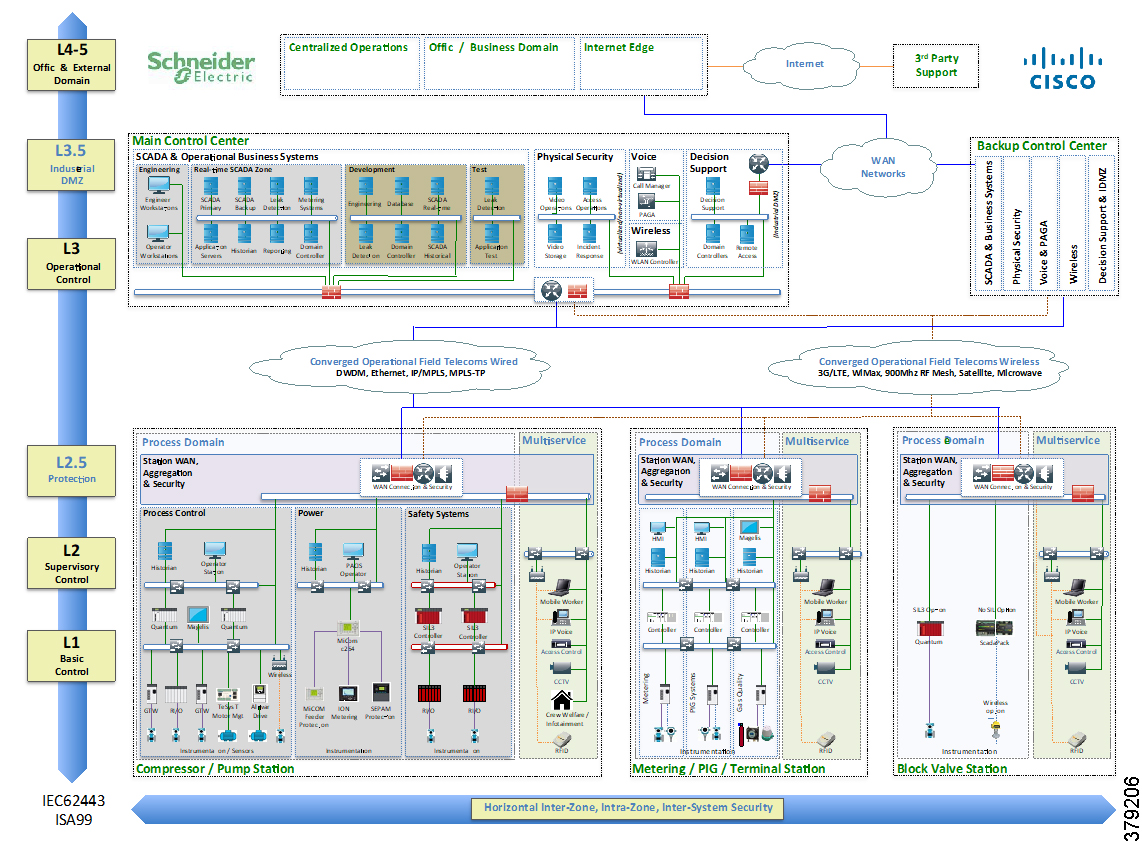

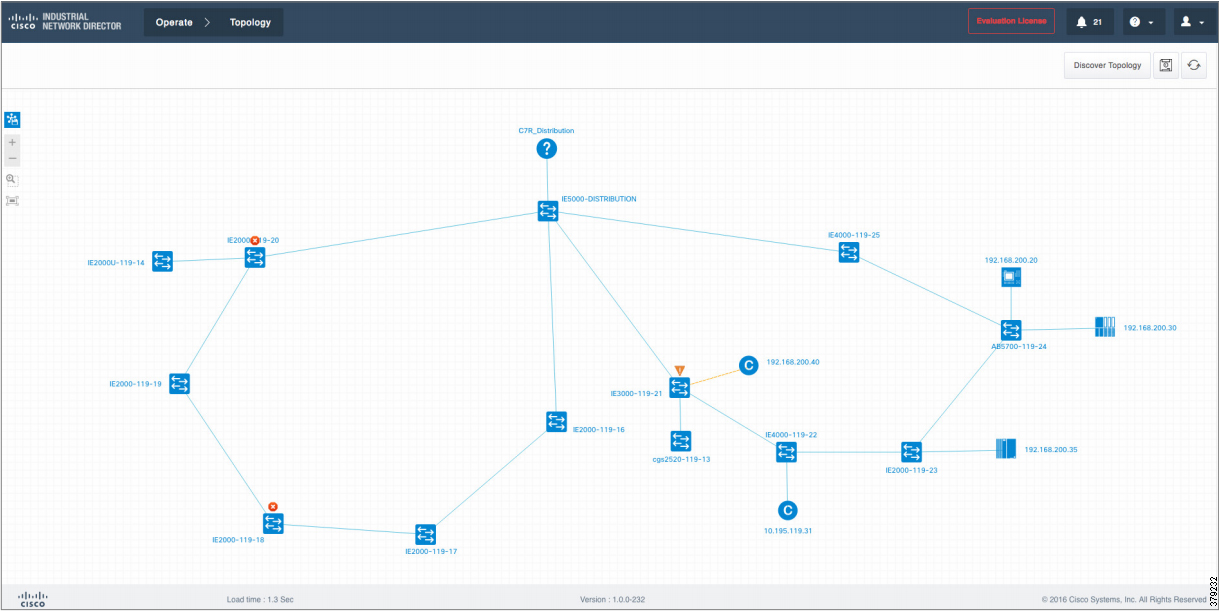

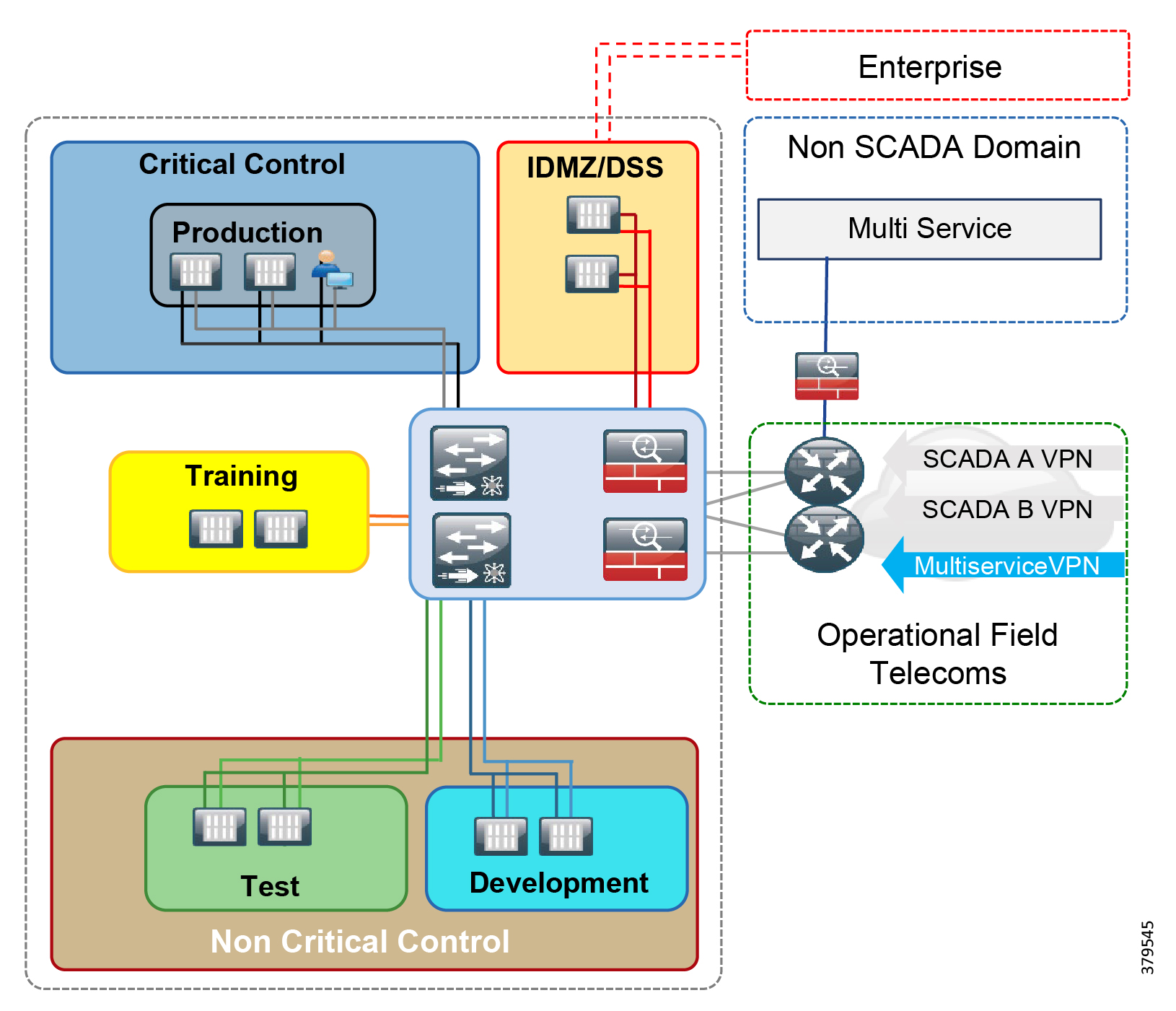

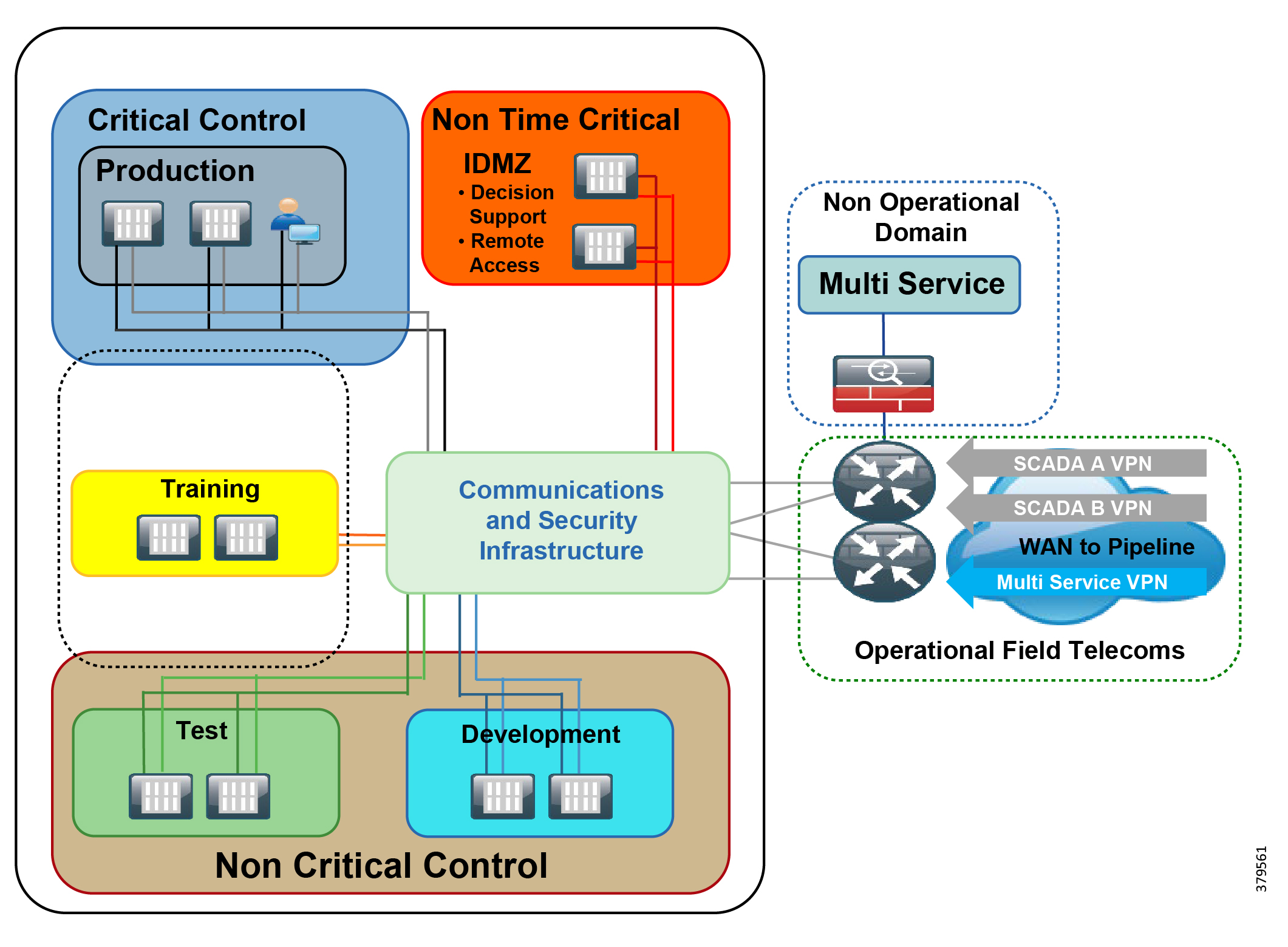

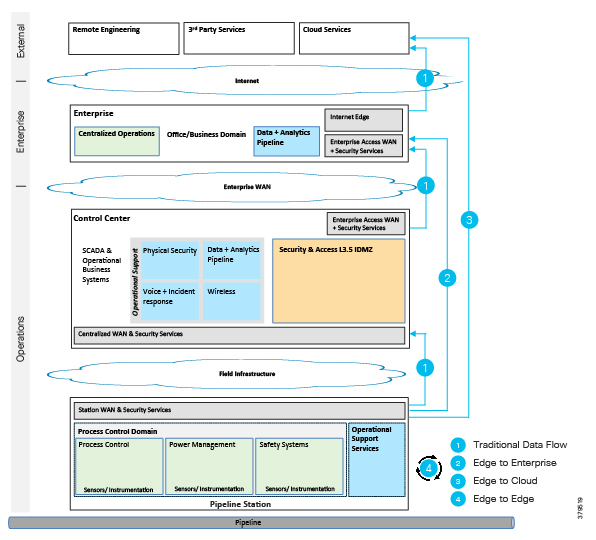

Cisco, Schneider Electric, and AVEVA Joint Reference Architectures

As part of the initial validation efforts for the Smart Connected Pipeline, Cisco, Schneider Electric, and AVEVA created a foundational reference architecture following the principles of IEC 62443. This represents a forward-looking functional architecture for end-to-end pipeline infrastructure. The aim is to provide a flexible and modular approach, supporting a phased roadmap to O&G pipeline operational excellence.

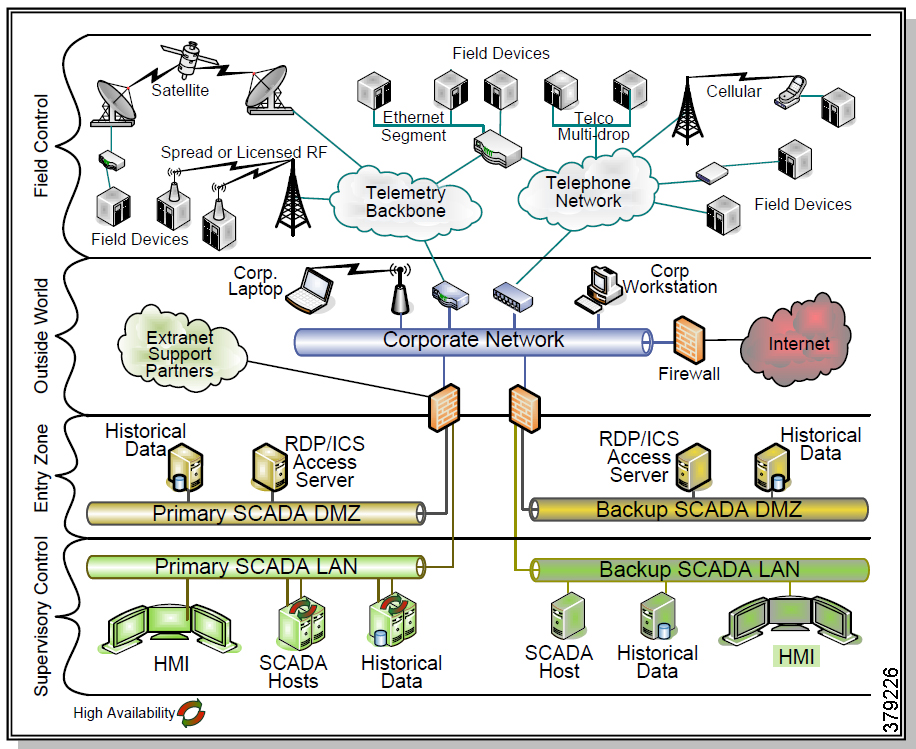

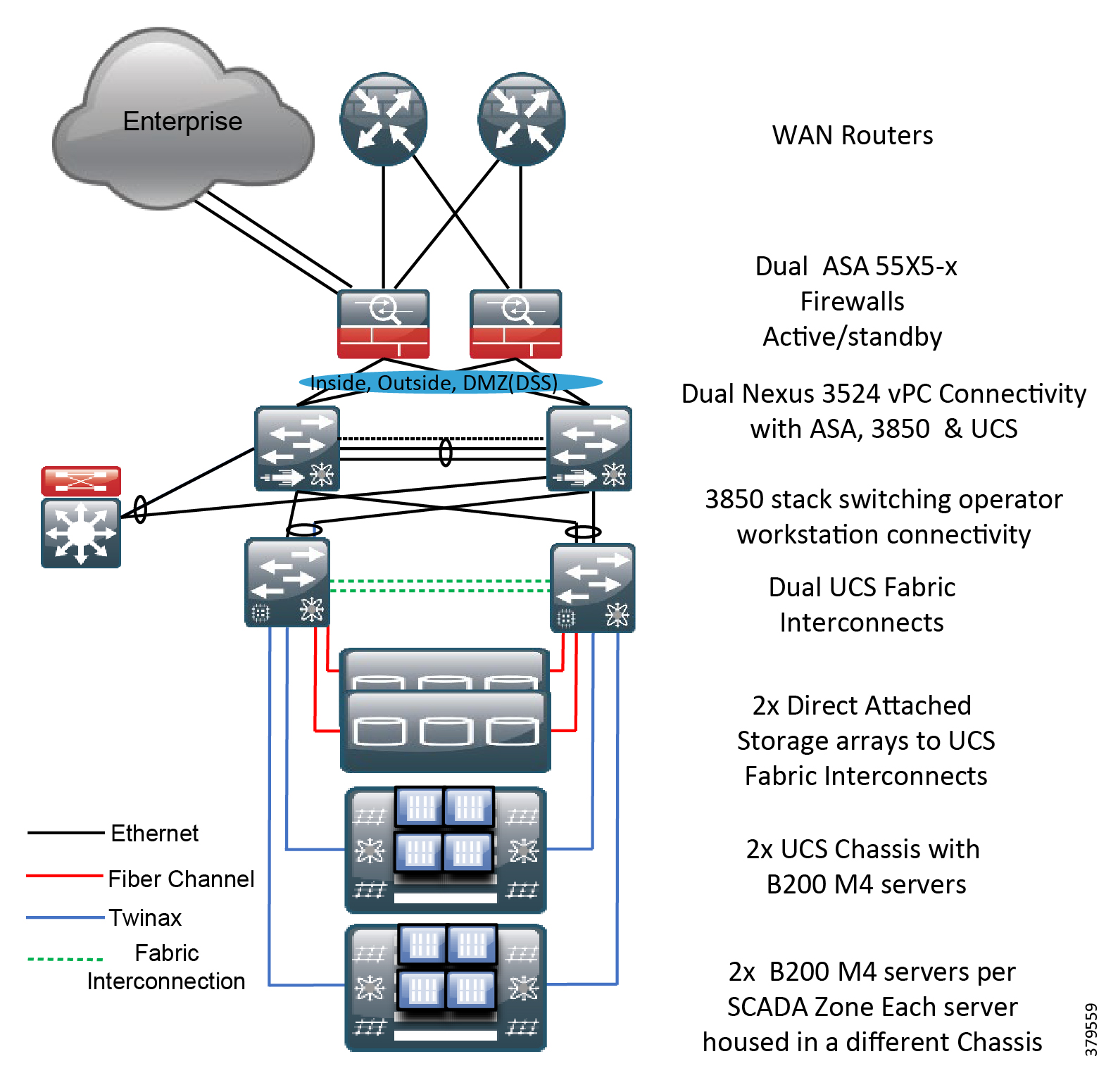

The architecture is based on a three-tier building block approach as defined in the joint Cisco and Schneider Electric reference architecture (Figure 4).

- Control Center—Virtualized, geographically separate, redundant Control Centers

- Operational Telecoms Network—End-to-end communication from field device to Control Center application for operational and multi-service applications

- Pipeline Stations—Local area networks inside the stations for operational and multiservice applications

Figure 4 Cisco, Schneider Electric, and AVEVA Reference Architecture

This solution architecture has been implemented in a number of deployments. It provides a strong foundation, following recognized security standards, and as it is jointly validated, helps to reduce risk for operators. However, due to the changing industry discussed in the next section, it is already evolving to include a number of new areas.

The reference architecture should be considered a framework for a comprehensive PMS, but it also accommodates operators with less complex solutions. The design components and security infrastructure define a best-practice structure recommended for midstream systems, so the security concepts apply regardless of how complex a given system is or how closely its design follows the presented reference architecture.

An Evolving Industry

The continuous evolution of technology directly affects the O&G segment. Most of the global technology trends are producing significant changes in the way the industry faces current and future challenges.

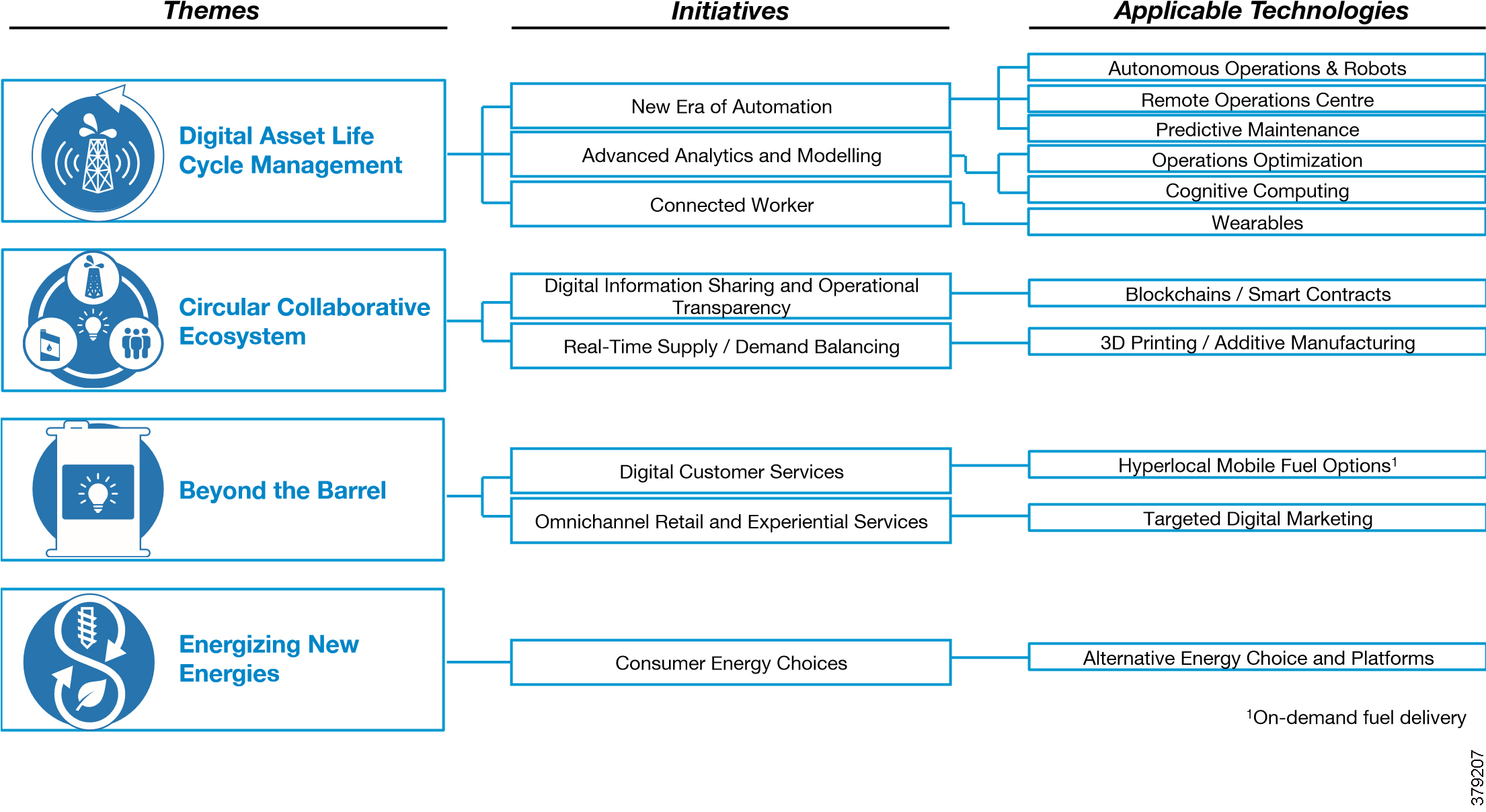

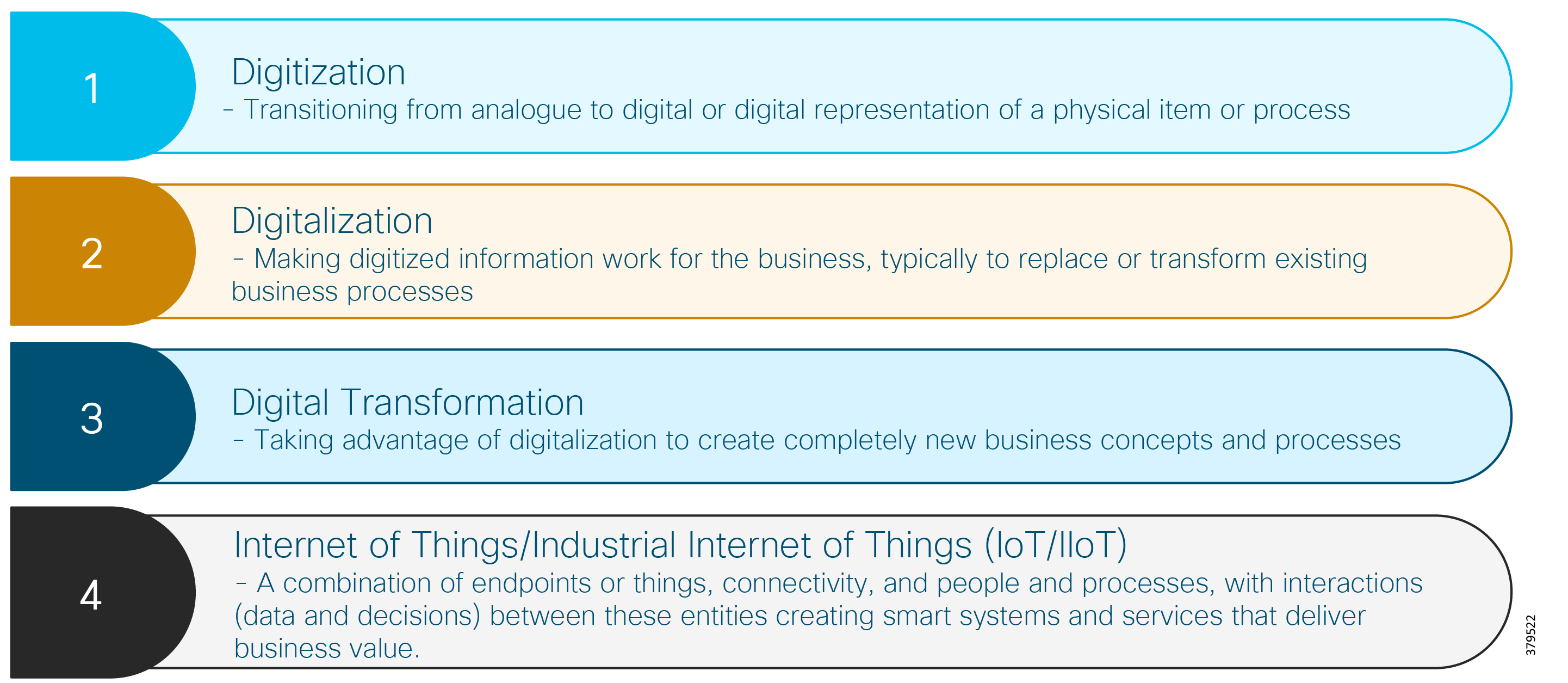

Most industrial environments, including O&G, are subject to transformation due to digitization and the benefits it brings, a number of which are shown in Figure 5. A 2017 World Economic Forum report [6] highlighted operations optimization and predictive maintenance as the two leading opportunities to provide value to the industry, with both residing in the digital asset life cycle management category. Remote operations centers, the connected worker, and autonomous operations and robots came third, fifth, and sixth, respectively. Each of these areas depends on new technologies to be realized, and all of those technologies need to be considered from a security perspective.

A number of enabling trends that are associated with digitization are discussed in the following sections.

Figure 5 Digital Initiatives in the Oil and Gas Industry (Source: World Economic Forum)

Interoperability and Openness

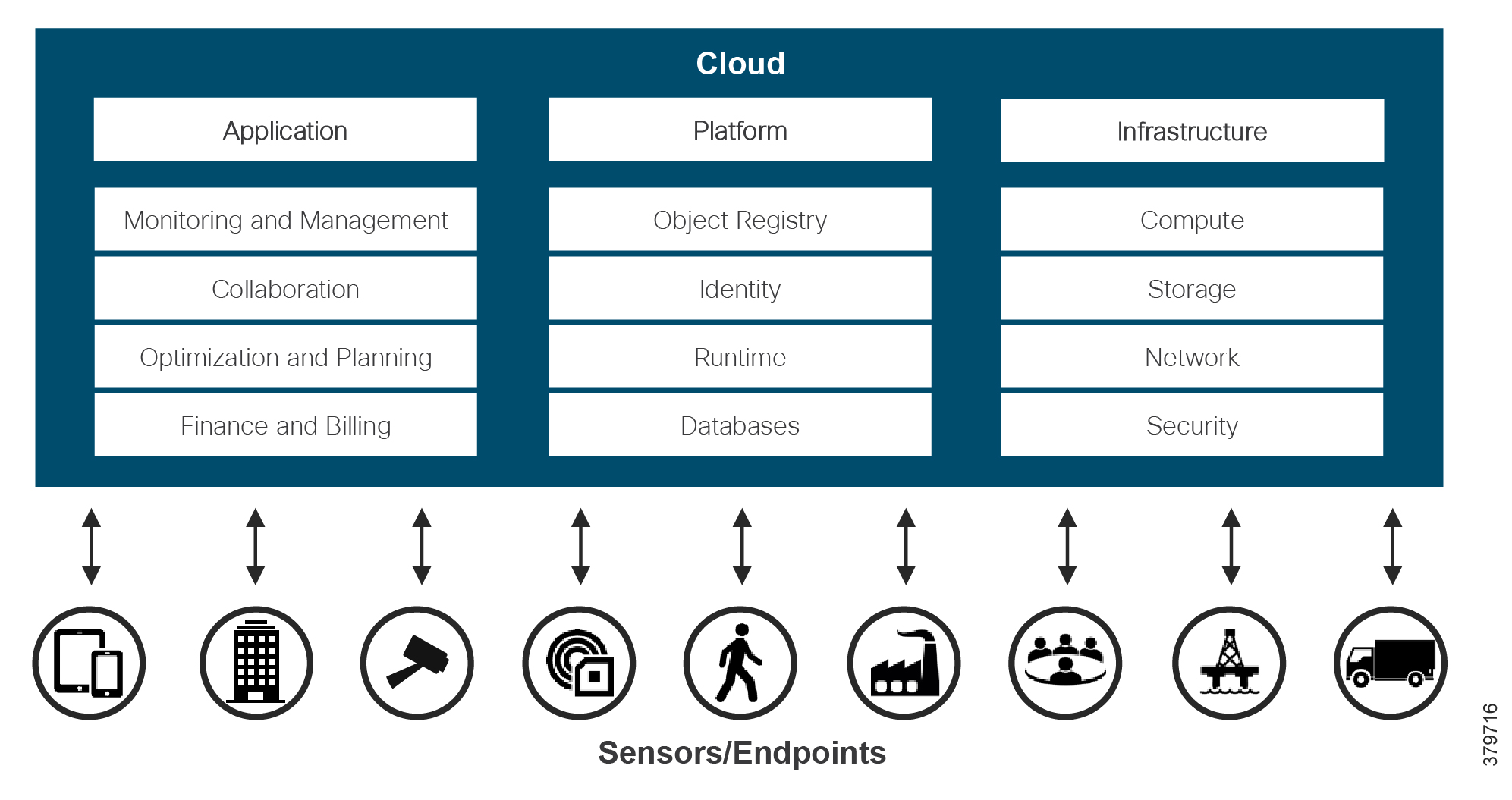

The standard security architecture model has historically been a security-by-obscurity approach with physically isolated networks. Control systems were standalone, with limited public access and proprietary or industry-specific protocols that were deemed too difficult to compromise. This approach may have been appropriate for control systems with highly restricted access (communications and people) and limited connection to IT. However, change has become necessary as systems gain new features and functions to enhance performance and efficiency, and as organizations seek to take advantage of newly available data. In addition, newer enabling technologies such as cloud computing, distributed fog and edge computing, real-time streaming analytics, and IIoT platforms leverage open, interoperable protocols and standardized interfaces. As a result, the communications architectures of both traditional and newer systems are based by default on Internet Protocol (IP) and Ethernet wired and wireless infrastructure (Figure 6).

Figure 6 Digitization and Evolving Architectures

Commercialization

Commercial off-the-shelf (COTS) technology is increasingly being introduced into the pipeline environment to perform monitoring and control tasks, replacing devices that were built specifically for the operational environment. Devices such as mobile handsets and tablets, servers, video cameras, and wearable technology are being implemented in place of specifically designed ICS hardware. These devices are necessary to enable new use cases, but their deployment alongside operational technology needs careful consideration and appropriate architectural implementation to ensure the same levels of security as the operational systems to which they contribute.

Industrial Internet of Things

The O&G industry has seen a proliferation of new devices connected to its systems, either to replace existing functions or to perform new ones. IIoT technologies are being used to connect pipeline networks, sensors, leak detection devices, alarms, and emergency shutdowns, allowing companies to analyze and interpret data to reduce major risks.

As pipeline operators continue to adopt new technologies and use cases, new and diverse devices are being connected to the network, which creates potential new areas of security vulnerability.

Big Data and Analytics

Big Data and analytics (streaming, real-time, and historical) trends are leading to increased business intelligence through data derived from connecting new sensors, instrumentation, and previously-unconnected devices to the network. In parallel, business units and external vendors need secure remote access to operational data and systems to provide additional support and optimization services. These business requirements lead to a multitude of new entry points with the potential to compromise PMS security.

IT and OT Convergence

Organizationally, historically separate IT and OT teams and tools are increasingly converging, which has led to more IT-centric technologies being leveraged for PMS. Information derived from operations is typically used to make physical decisions such as closing a valve, whereas IT data is typically leveraged to make business decisions; convergence enables the business to perform tasks such as process optimization or measurement. Regardless of the type of technology or information, the business must treat any security challenges in a similar manner. As the borders continue to blur between these traditionally separate domains, strategies should be aligned and IT and OT teams should work more closely together to ensure end-to-end security. At a minimum, this means organizations need to rethink how they address architectures, management, administration, policies, and infrastructure.

However, OT and IT security solutions cannot simply be deployed interchangeably. The same technology may be leveraged, but how it is designed and implemented may be very different. Although IT and OT teams may be part of the same organization, they have very different priorities and often different skill sets. The reality is that a gap between IT and OT still exists in some organizations; some remain very siloed, while others are much more closely aligned. Regardless, this separation, and in some cases antagonism, is unlikely to disappear in the short term. Gartner research [7] argues that a shared set of standards, platforms, and architectures across IT and OT offers the opportunity, in some circumstances, to reduce both risk and cost and to cover external threats and internal errors. However, consideration must be given to the differences related to high availability and architectural reliability for OT.

Virtualization and Traditionally IT-centric Technologies

Virtualization and hyperconvergence have introduced operational efficiencies, and the advancement of these technologies has begun to affect system architectures. Historically, control systems were deployed on servers dedicated to specific applications or functions and on separate communication networks to isolate specific operational segments. In addition, multiservice applications supporting operational processes had separate dedicated infrastructures.

In current deployments, we now see virtualized data center server infrastructures not only being introduced, but actually becoming the standard deployment offering for PMSs and adopted by ICS vendors and end customers. The operational field telecoms and WAN networks also leverage virtualization technologies like VPNs, VLANs, and Multiprotocol Label Switching (MPLS) to logically segment traffic across common infrastructure.

Although many systems remain physically separated because of customer philosophy, standards, and compliance requirements, virtualized implementations are on the rise. As such, the security requirements and the skills necessary to implement and manage these deployments are moving away from being operations-based (i.e., OT) and becoming more IT-centric. This coincides with an aging workforce in the industry, as many newer engineers bring a more IT-centric approach due to their education, experience, and daily engagement with technology.

New Approaches to Security Design

Even with the Level 3.5 IDMZ, the traditional segmented architecture poses challenges for a digitally evolving PMS. Neither current nor future data is hierarchical in nature. Data has many sources and many clients that will leverage it, and we are already seeing “smart” systems that can autonomously leverage data and initiate processes. Federated data structures with storage exist all over the organization, not just in central locations. Although the segmented approach has been the de facto way to architect a PMS, it segregates IT/enterprise and OT services through physical or heavily virtualized segmentation. This separation is inevitably blurring, and use cases may often need a combination of IT and OT services to provide the optimal business benefit. The ICS environment for pipeline operators is changing, but the need for secure data management and control of operating assets is still a primary concern.

These trends continue to drive the need for a more connected ICS, along with a more open architecture. In whatever manner we look at it, the need for a comprehensive approach to security that acknowledges the need for control system protection, while facilitating the open data demands of the new digitization era, has never been stronger.

Newer Architectural Approaches

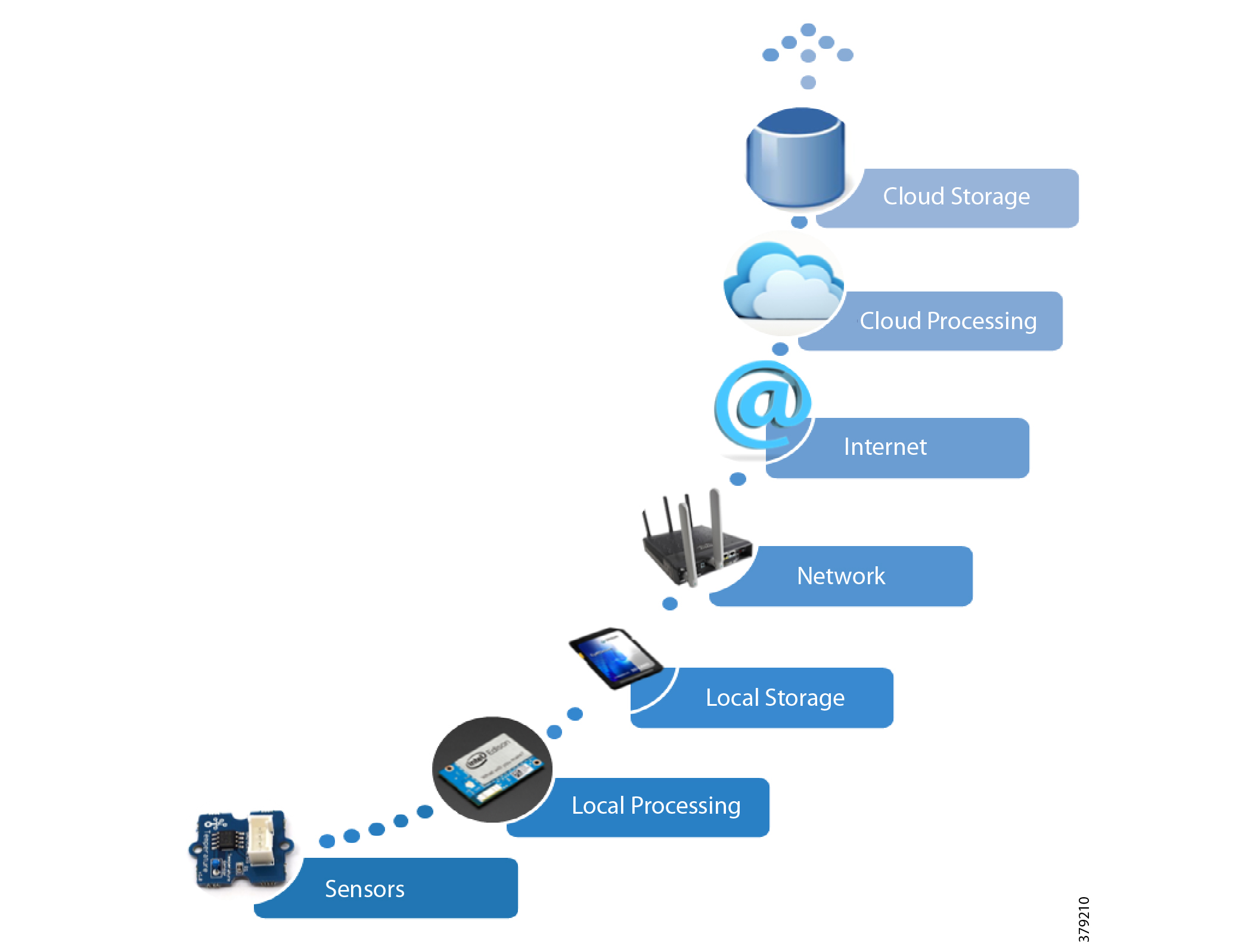

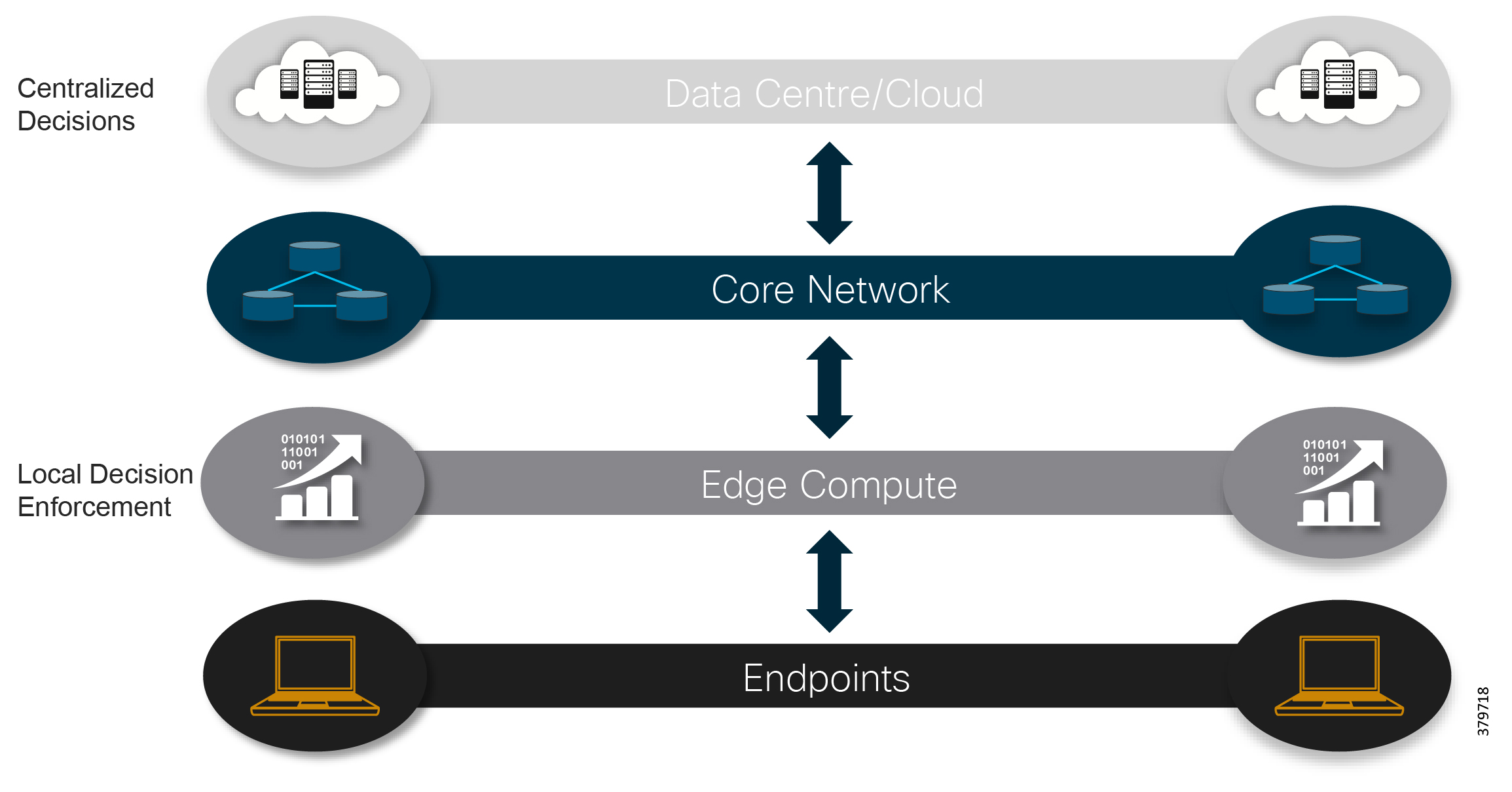

IT and OT convergence is inevitable, although the extent of this convergence, and the parts of the business to which it will be applied, are not well understood. As shown in Figure 7, to deliver transformational operational use cases, such as real-time analytics for machine health monitoring, the full IIoT stack (infrastructure, OS, applications, data pipeline, service assurance, security, and so on) is required. This means integrated IT-centric services must be deployed alongside OT services to generate business value. As such, IT capabilities are now becoming operationalized and are pushing the boundaries of traditional security architectures, such as the Purdue Model for Control Hierarchy and IEC 62443, toward more converged approaches based on easier sharing of data.

Figure 7 Addressing the Full IoT Stack Along the Edge to Cloud Continuum

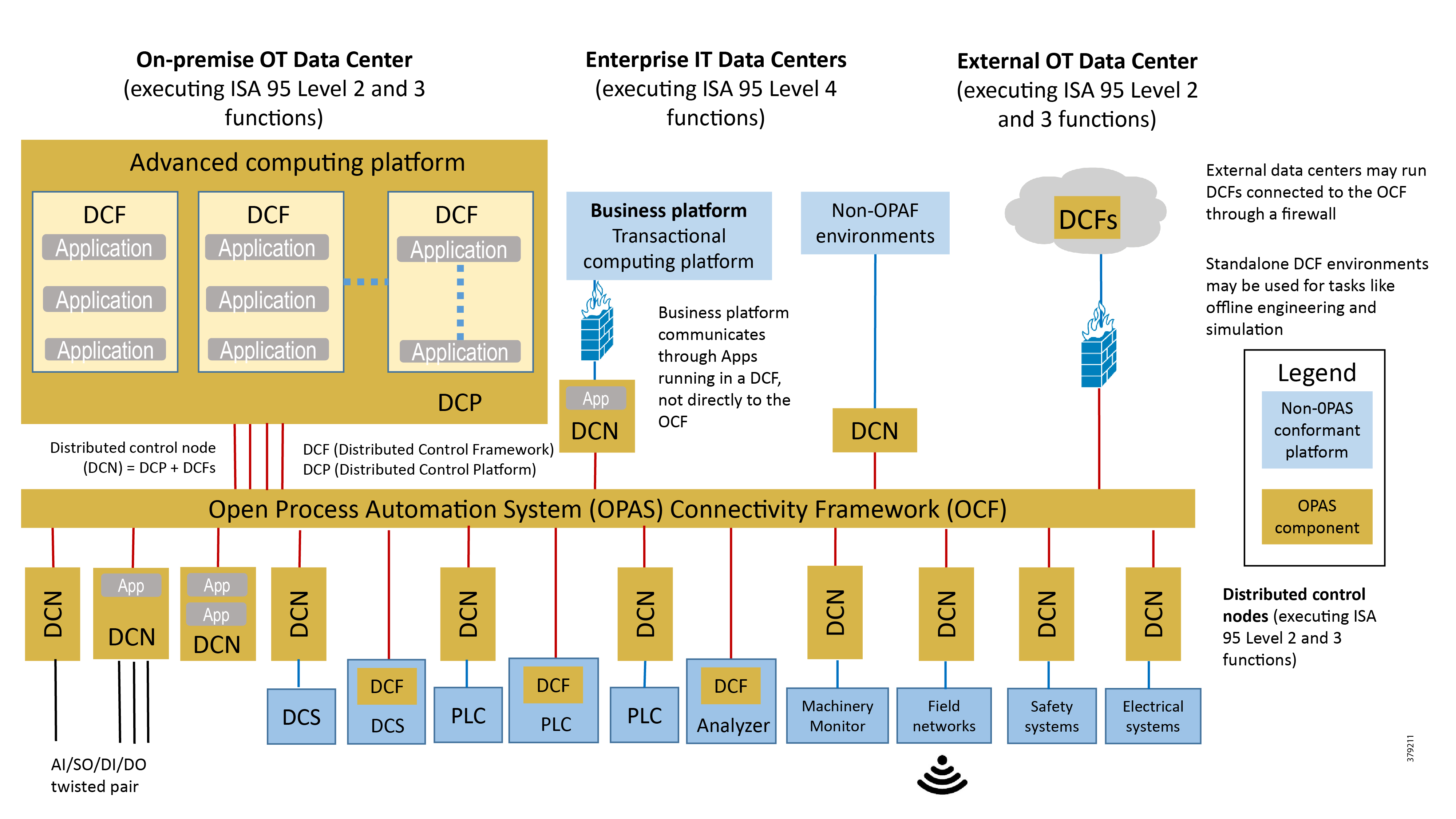

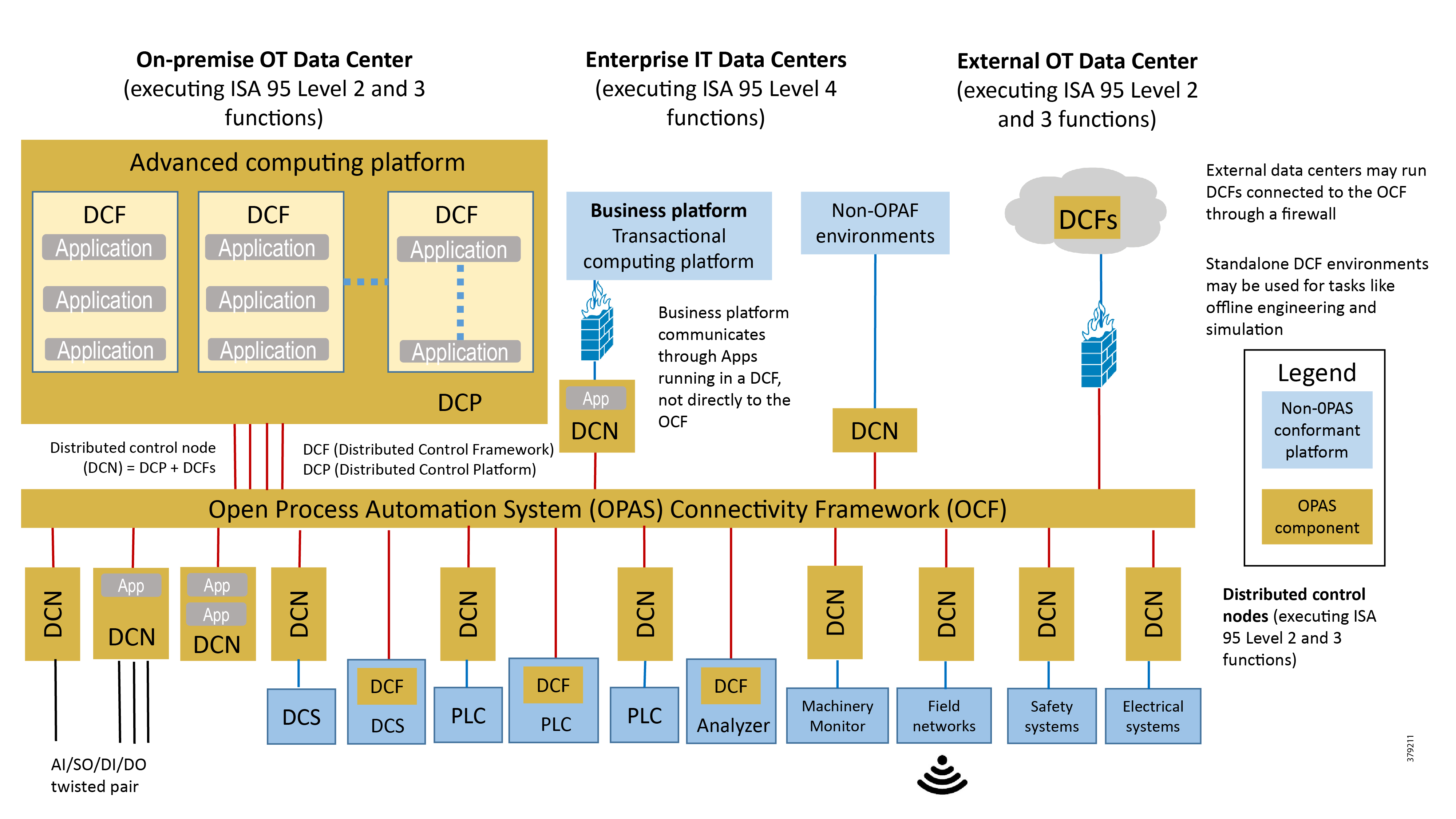

These newer approaches focus on open architectures for peer-to-peer, scalable systems that support edge analytics, local monitoring, decision-making, and control. Recent standardized approaches such as Open Process Automation (Figure 8) are defining open and interoperable architectural approaches.

Figure 8 Open Process Automation Reference Architecture [8]

In many traditional industrial environments today, different vendors offer multiple control systems, each with its own set of protocols, philosophies, interfaces, development ecosystems, and security. This makes new technologies more challenging and more expensive to integrate and implement. The Open Process Automation approach is to develop a single industrial architecture to address these challenges. At its core are an integrated real-time service bus, open architecture software applications, and a device integration bus.

The central focus of the architecture in these approaches is a software integration bus acting as a software backplane that facilitates distributed control. Capabilities that include end-to-end security, system management, automated provisioning, and distributed data management build out the rest of the architecture.

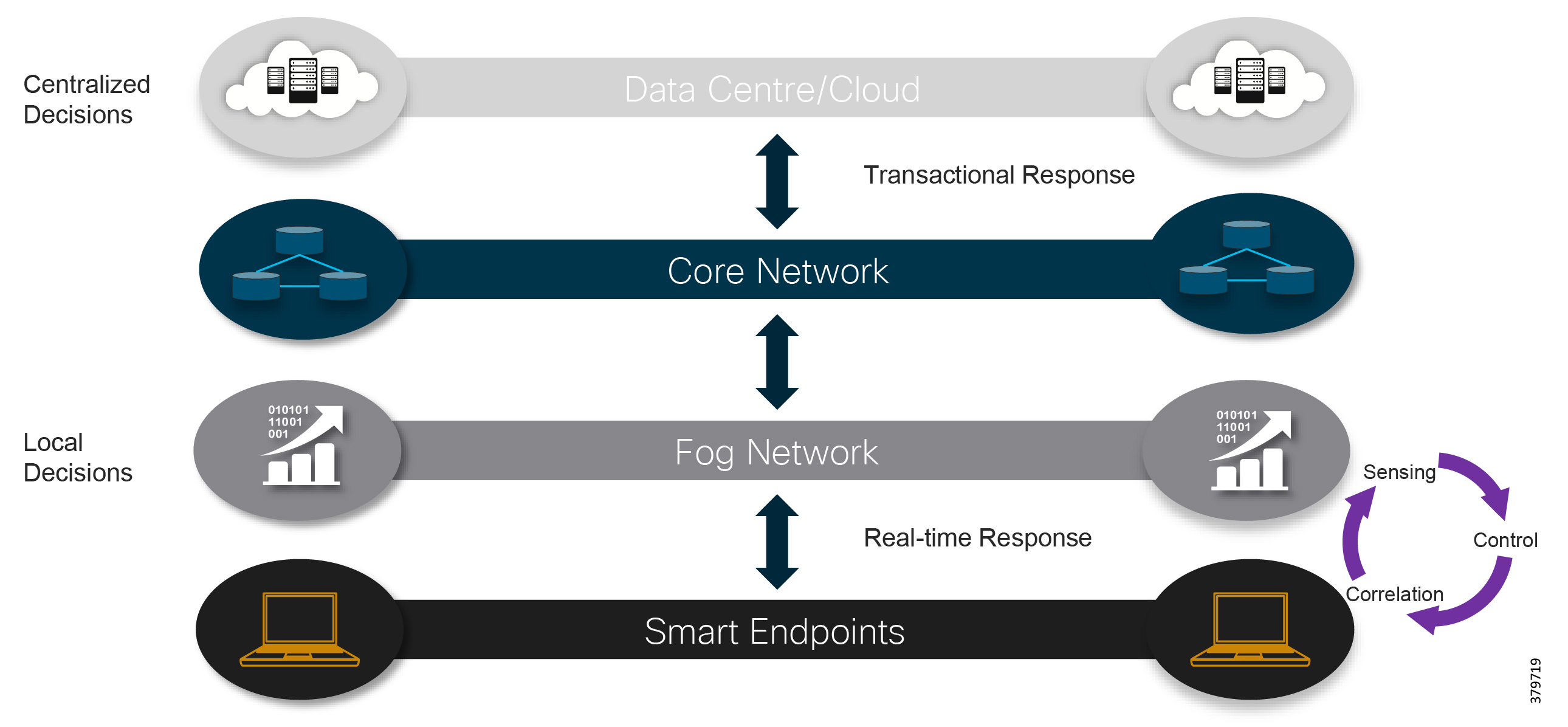

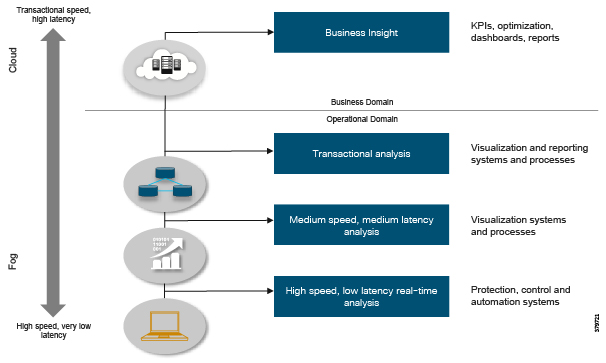

Fog and Edge Computing

Another fundamental shift in the industry is the adoption of fog and edge computing, with microservices and analytics being deployed as close as possible to the edge of the network. In this way, information can be analyzed live at its source and real-time decisions can be made without data being sent across networks to a centralized resource to be actioned. These types of architectures are increasingly critical in systems with low-bandwidth links (e.g., 3G), which make it impractical to transfer large amounts of data to a central location from sites that are geographically dispersed or isolated (e.g., an offshore production platform or oilfield wellhead). They are also valuable where information needs to be shared between devices at the edge in order to make a coordinated decision (e.g., an oilfield wellhead cluster for reservoir optimization).
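As an illustration of analyzing data at its source, the following minimal Python sketch applies report-by-exception with a deadband: the edge node forwards a reading over the constrained link only when it differs from the last forwarded value for that tag by more than a threshold. The `Reading` type, the tag names, and the deadband value are hypothetical and are not part of the reference architecture.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Reading:
    tag: str      # hypothetical sensor tag name
    value: float  # measurement in engineering units


def report_by_exception(readings: Iterable[Reading], deadband: float) -> List[Reading]:
    """Return only the readings worth sending upstream.

    A reading is forwarded when it is the first one seen for its tag, or
    when it differs from the last forwarded value by more than `deadband`.
    """
    last_sent: Dict[str, float] = {}
    to_send: List[Reading] = []
    for reading in readings:
        previous = last_sent.get(reading.tag)
        if previous is None or abs(reading.value - previous) > deadband:
            to_send.append(reading)
            last_sent[reading.tag] = reading.value
    return to_send


if __name__ == "__main__":
    samples = [Reading("PT-101", v) for v in (50.0, 50.1, 50.2, 51.5, 51.6)]
    for r in report_by_exception(samples, deadband=1.0):
        print(r.tag, r.value)
```

In this sketch only two of the five samples cross the link. A real deployment would also need to authenticate and protect whatever is forwarded, which is outside the scope of the example.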

Typically these distributed architectures have multiple applications and functions hosted on an edge device, usually a mix of IT and OT. As an example, a single ruggedized server may be deployed that houses virtualization services for operator optimization applications, a virtualized controller from a process control vendor, and data normalization and protocol adaptor services from an industry software company. In addition, it may also include infrastructure services such as a virtualized router, switch, and security functions from a communications vendor, and real-time streaming analytics from another vendor.

This approach requires securing multiple applications, functions, devices, and operating systems for multiple parties, including IT and OT functions.

This shifting landscape means that a different approach to the security posture may be required for the operational domain PMS. It also means a shift in the technical skill set, with a growing emphasis on having a well-rounded IT/OT engineer responsible for operating, maintaining, and securing today’s control systems.

Increasing Threat Landscape

With digitization driving the connection of a plethora of new devices that can be leveraged for monitoring or control, the attack surface for cyber threats is increasing dramatically. Additionally, much of this new equipment is COTS, such as handsets and tablets, servers, video cameras, and wearable technology, rather than specifically designed control systems hardware. These devices are necessary to enable new use cases, but their deployment alongside traditional operational technology, such as remote terminal units (RTUs) and programmable logic controllers (PLCs), requires careful consideration and appropriate architectural implementation to ensure the same levels of security as operational systems. For example, IoT-enabled video cameras appear to address physical security needs easily and cost-effectively, but in practice they can open a network to compromise or be used as malicious devices in botnets.

A 2015 Accenture study [9] found that investment in big data, IoT, and digital mobility technologies is set to increase over the next five years. As organizations continue to evolve due to the recent oil price drop, the study found that the largest investments across the industry will be in mobility, infrastructure, and collaboration technologies. These will be leveraged to differentiate and improve competitiveness by enhancing operational processes through automation and business intelligence through new data sources. The study also found that cybersecurity and internal work processes will be the biggest barriers to deriving benefits from these technologies.

In parallel to these changes, non-operational business units, remote workers, and external service or vendor support companies require secure remote access to operational data and systems to provide support and optimization services. Again, these needs give rise to potential entry points, increasing the surface by which operational security may be compromised.

Threats may come from internal or external sources and may be accidental or malicious. The reality is that easy access to cyber information, resources, and tools has increased, making it simpler for hackers to gain an understanding of legacy and traditional protocols with the aim of gaining access to ICSs.

A DNV survey [10] found that, although companies are actively managing their information security, just over half (58%) have adopted an ad hoc management strategy, with only 27% setting concrete goals.

To maintain the high availability and reliability that pipeline operators require, security cannot be a bolt-on afterthought or a one-off effort, and it cannot be dealt with by technology alone. To best secure the PMS, a holistic approach must be taken at an ecosystem level, as a continual process built into the governance and compliance efforts of any pipeline operator organization.

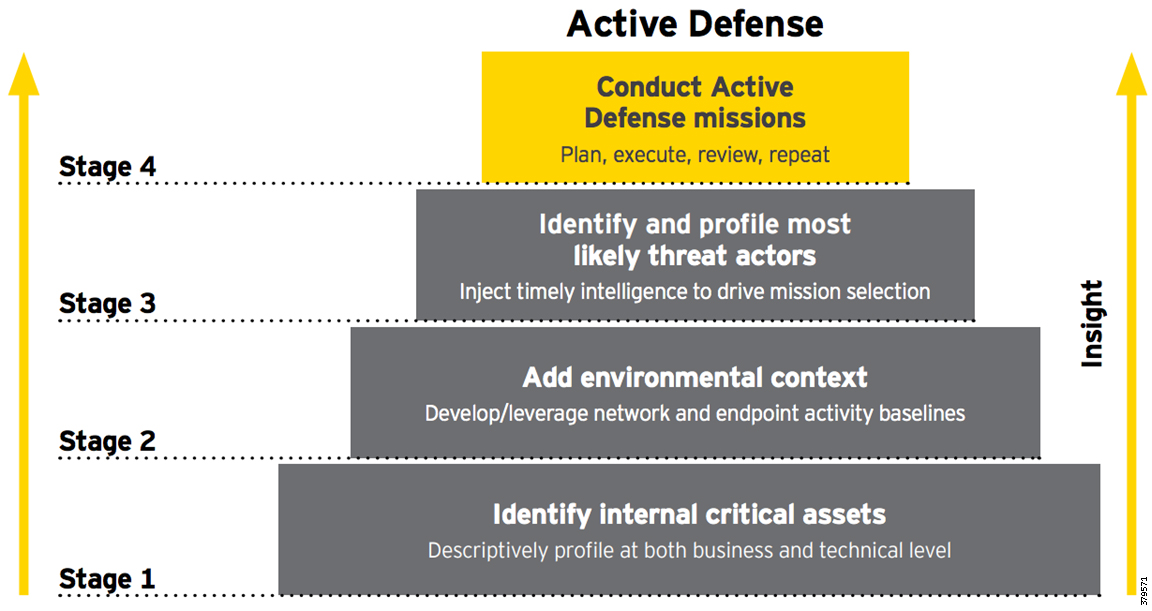

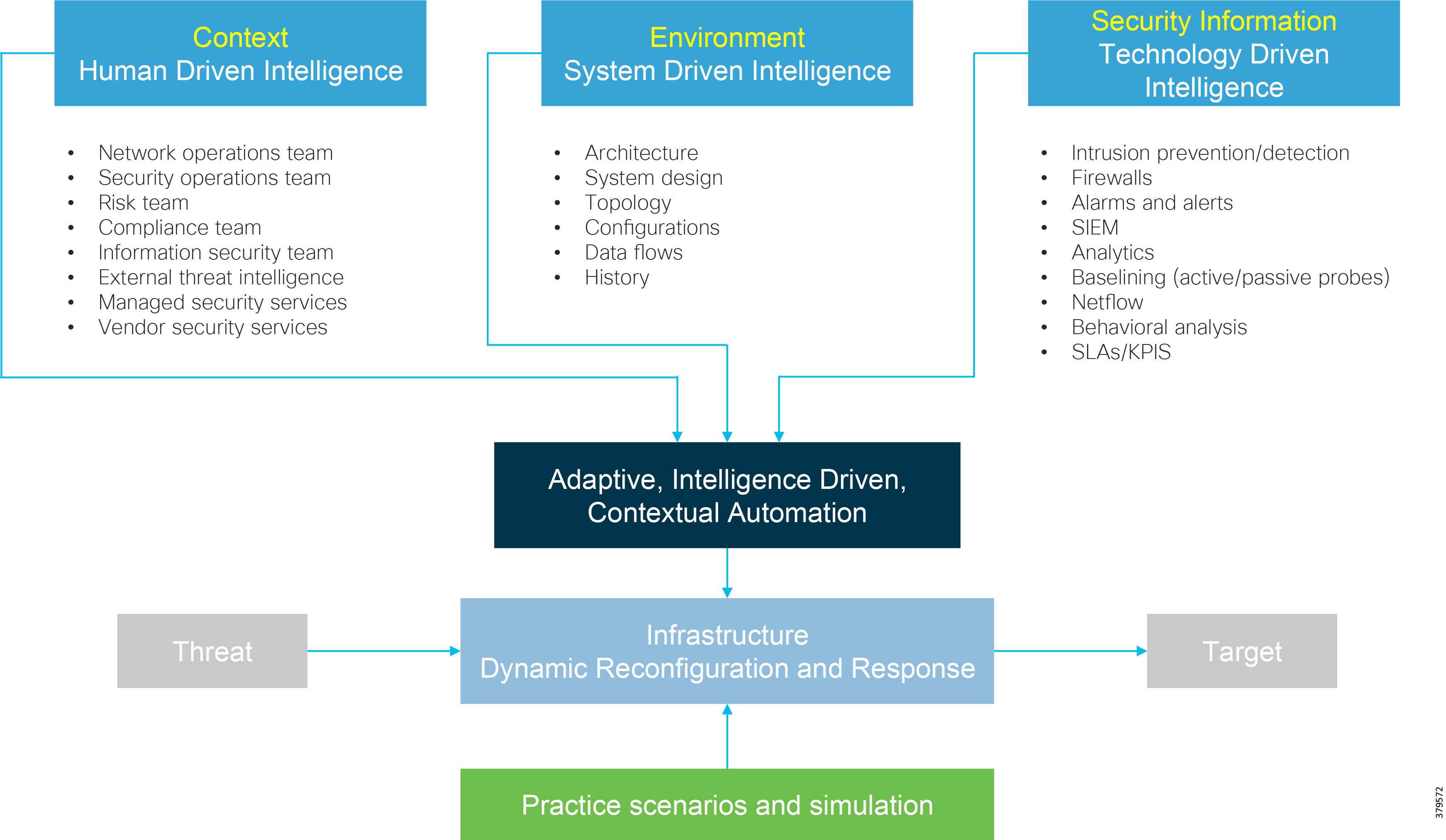

Security Process Lifecycle

Historically, security has been focused on keeping out potential attackers through a perimeter-based defense strategy. Today, the standard thinking is to anticipate a successful attack and to design and defend a network with a defense-in-depth approach to minimize and mitigate damages. This approach involves a multi-layered, multi-technology, and multi-party strategy to protect the organization’s critical assets.

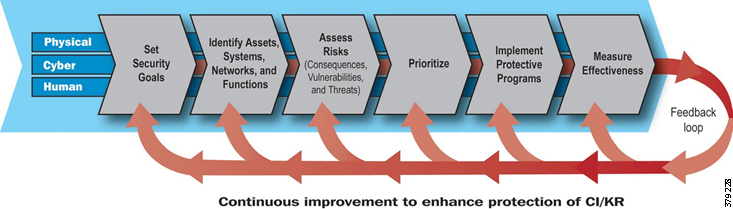

However, security cannot be a one-off, reactive response to an incident; it must be treated as a life cycle spanning everything from awareness to response, addressed through an appropriate Risk Control Framework (Figure 9).

Figure 9 Example Risk Control Framework (Source: Cisco)

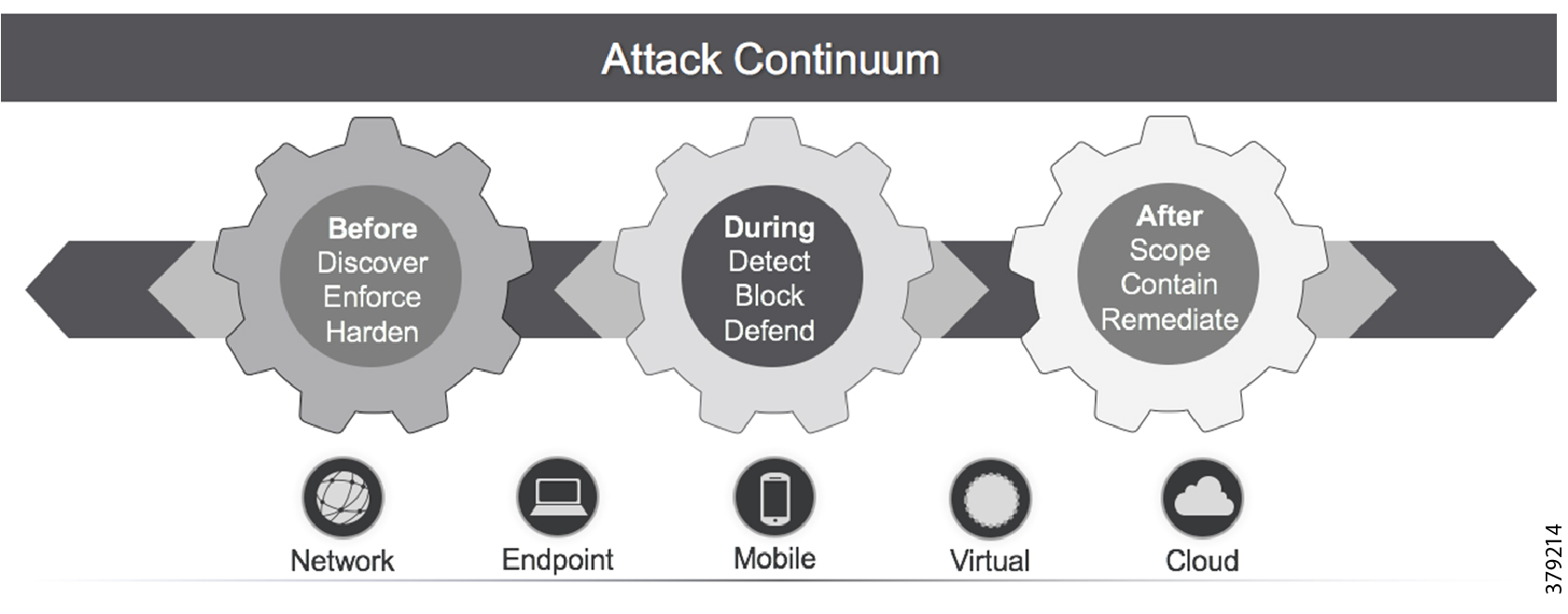

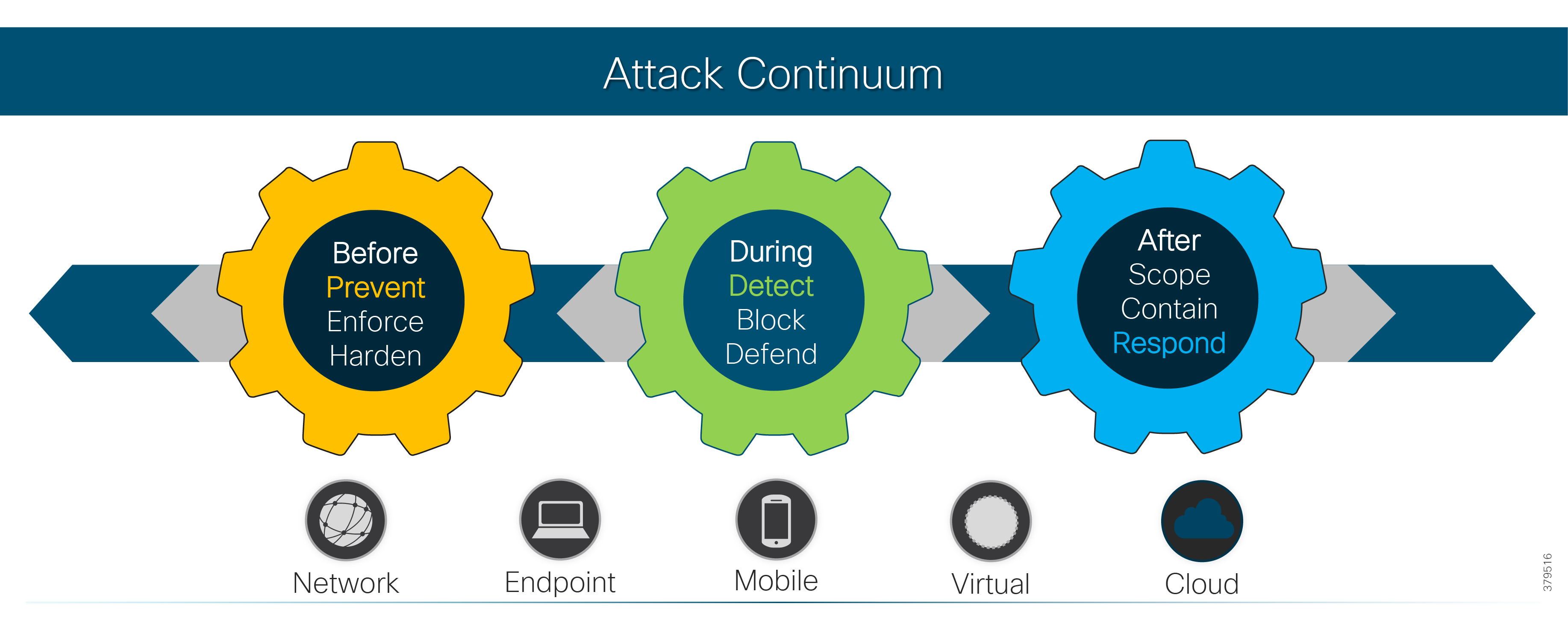

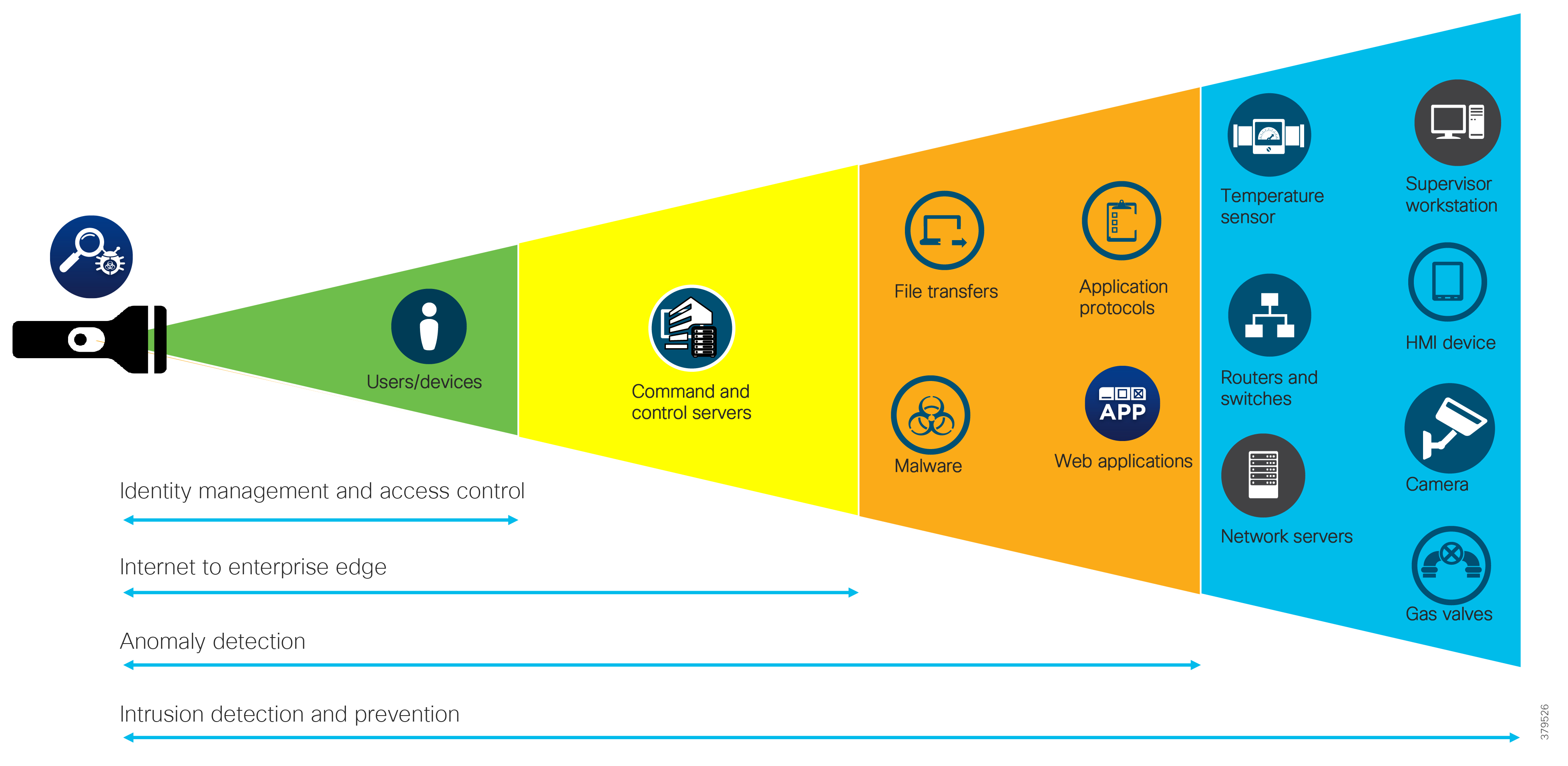

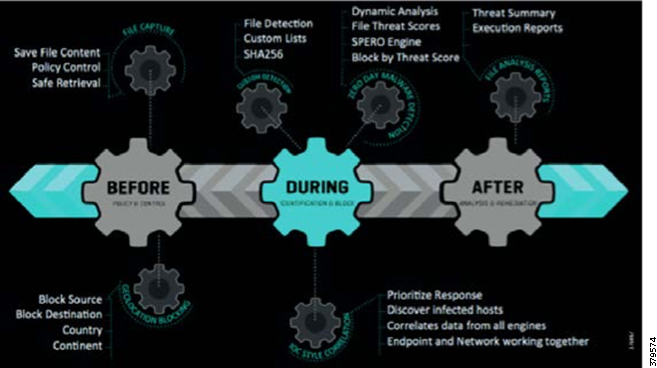

Attack Continuum

As this document has highlighted, a sound security process cannot be reactive and isolated; it must take a holistic view and address security in all areas of the system. Figure 10 shows the attack continuum, which consists of three phases: before, during, and after an attack. The actions associated with each phase identify activities that should be undertaken to reduce the risk of a successful attack proceeding undetected. Because an attack can come from many different sources, a broad perspective should be maintained throughout the lifecycle process.

Figure 10 The Security Attack Continuum

The reality is that no single security technology can address all security threats, and point-in-time technologies are often bypassed because threats are designed to evade initial detection. The security approach must therefore address detection and the ability to mitigate impact after an attack occurs. The security model for pipelines should not concentrate only on the endpoints that are often cited as the most vulnerable; it should cover the extended network from the edge to the control center to the enterprise, and consider the entire attack continuum: before, during, and after an event.

As noted, the full attack continuum can be broken into three phases: before, during, and after. Security measures must not only address detecting and blocking threats, but also include ongoing analysis following an attack to adapt the environment before the next one occurs. No system is truly secure and security cannot be guaranteed. Therefore, to most effectively address customer security and compliance needs, any security approach must offer protection along all phases of the attack continuum for both the PMS and the underlying infrastructure.

Addressing Enterprise Risk

Security for pipelines should take a holistic approach for all parts of the organization that touch the pipeline and associated systems and processes. This may sound obvious, but often security approaches are specific and implemented by a particular part of the organization, even though the system comprises many different components and may be used by many different areas.

The security capability should span the enterprise (Figure 11) and should interweave with existing processes and strategies in addition to being linked to the compliance effort. An organization needs visibility into any and all potential operational risks across a PMS to achieve a comprehensive, effective, and sustainable security program. The security span of influence should encompass OT, IT, and physical assets to best address risk and meet safety and reliability goals and standards.

Figure 11 The Enterprise Security Risk

System Development Lifecycle

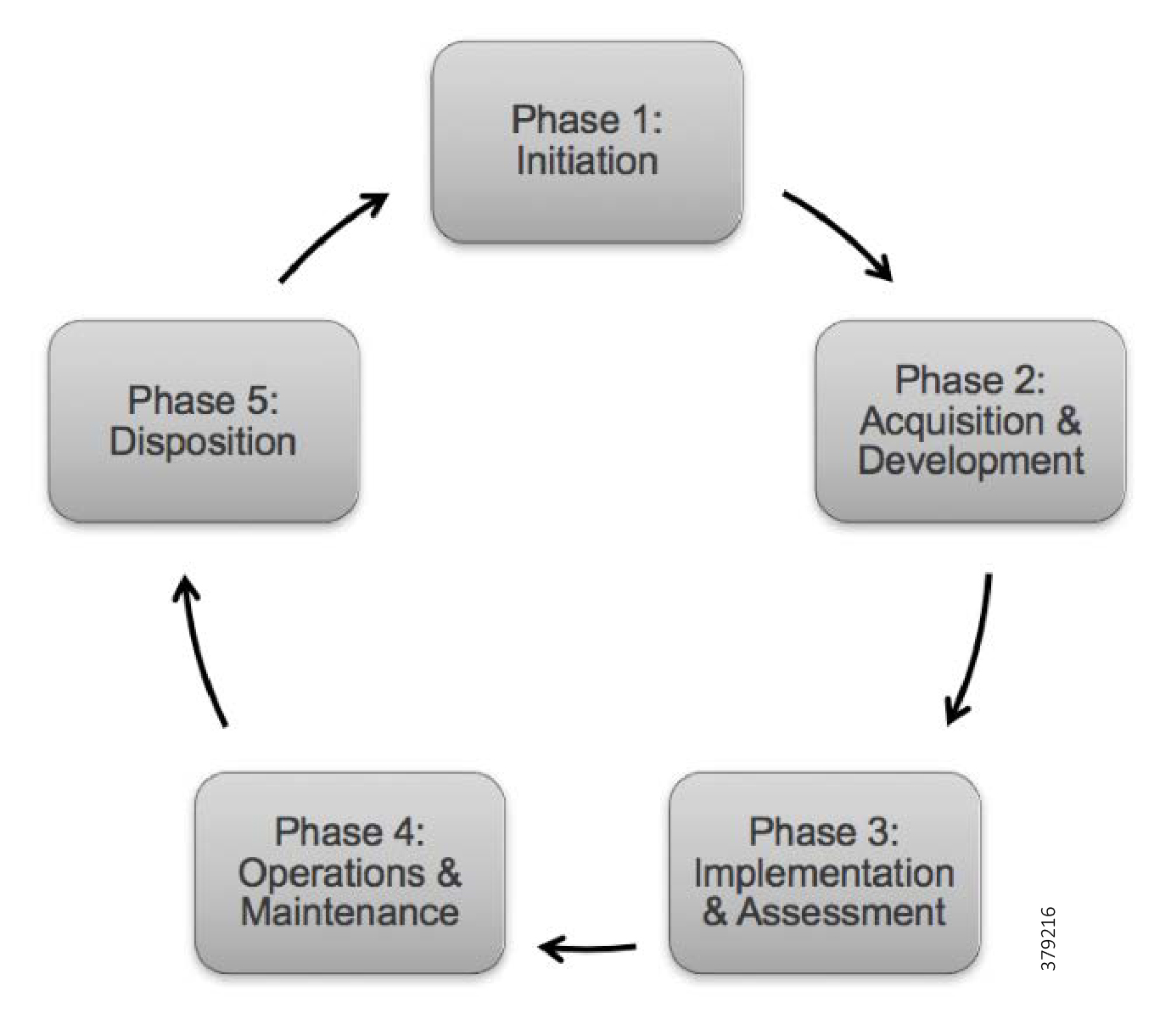

Best practice means that we need to consider how to take a practical and procedural approach to securing the system before deciding on the technologies, products, and processes we will use. System design and best practice usually follow a phased approach known as the System Development Lifecycle (SDLC), as outlined in NIST SP 800-64 and IEC 62443-4-1 Product Development Requirements. A general SDLC includes five distinct phases, as shown in Figure 12, although these could be broken out further.

Figure 12 Secure Development Lifecycle Phases

Each SDLC phase should include a set of security steps to be followed, integrating security directly into the PMS throughout its lifecycle. To be most effective, security must be included in the SDLC from the outset. By building the system with a robust security architecture at its core and integrating with broader organizational compliance and governance efforts, a more effective and more cost-effective development process can be achieved.

NIST recommends this early integration to maximize return on investment through:

- Identifying and mitigating security issues early, resulting in lower cost of implementing security controls

- Awareness of potential engineering issues created by mandatory security controls and addressing in advance

- Identifying opportunities for shared security services and reuse of security strategies and tools, reducing the cost and time taken to implement

- Aiding informed decision-making through risk management in a timely manner

Phase 1—Initiation

This phase includes security categorization based on the potential impact of a security breach, a preliminary risk assessment to define the environment(s) in which the PMS will operate, and the initial basic security needs of the system. All system components, including process assets, computing, network infrastructure, and physical security, should be considered in this phase.
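Security categorization by potential impact can be sketched in a few lines. The example below assumes a FIPS 199-style scale (low, moderate, high) for confidentiality, integrity, and availability, and takes the highest rating across all components as the system category (a "high-water mark"). The source does not prescribe this particular scheme, and the component names and ratings are hypothetical.

```python
from enum import IntEnum
from typing import Dict, Tuple


class Impact(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


# (confidentiality, integrity, availability) impact rating for one component
Cia = Tuple[Impact, Impact, Impact]


def categorize(components: Dict[str, Cia]) -> Impact:
    """Return the overall category as the highest impact rating found
    across every component and every security objective."""
    if not components:
        raise ValueError("at least one component is required")
    return max(max(cia) for cia in components.values())


if __name__ == "__main__":
    # Hypothetical ratings, for illustration only.
    example = {
        "historian": (Impact.MODERATE, Impact.MODERATE, Impact.LOW),
        "scada_server": (Impact.LOW, Impact.HIGH, Impact.HIGH),
        "station_cctv": (Impact.LOW, Impact.LOW, Impact.MODERATE),
    }
    print(categorize(example).name)  # HIGH
```

Taking the maximum is deliberately conservative: one high-impact component is enough to place the whole system in the high category, which suits a preliminary assessment made before detailed analysis is available.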

Phase 2—Acquisition and Development

This phase has a number of security-focused areas including:

- Formal Risk Assessment: Security risk assessments allow organizations to assess, identify, and amend their overall posture and enable stakeholders from all parts of the organization to better understand organizational risk from security attacks. The goal is to obtain leadership commitment to allocate resources and implement appropriate security solutions. A security risk assessment helps determine the value of data throughout the business. Without full awareness of how data is used and shared, it is incredibly difficult to prioritize and allocate resources where they are most needed. The risk assessment is a formal process, which builds on the preliminary assessment, identifying and documenting protection requirements for the PMS.

- Functional Requirements Analysis: These are security services that must be achieved by the system under inspection. Examples could include the security policy, the security architecture, and security functional requirements. These can be derived internally or through best practices, policies, regulations, and standards derived from regulations.

- Non-Functional Security Requirements Analysis: These are requirements like high availability, reliability, and scalability and are typically derived from architectural principles and best practices or standards. This might also involve assessing whether security was defined the right way according to best practice, ease of use, and minimization of complexity.

- Security Assurance Requirements Analysis: This process should provide credible evidence that justifies a level of confidence that the system meets its initial security requirements. Security assurance is measured against those requirements. The evidence must show that the number of vulnerabilities has been reduced to a degree that justifies confidence that the security properties of the system meet the established security requirements and that the degree of uncertainty has been sufficiently reduced. Based on both legal and functional security requirements, the analysis determines how much, and what kind of, assurance is required. The focus should be on minimizing vulnerabilities, since there can never be a guarantee that these have been eliminated.

- Cost Considerations and Reporting: Determine how much of the development cost of hardware, software, staff, and training can be associated with information security over the life of the system.

- Security Planning: Formal planning that defines the plan of action to secure a system. It includes a systematic approach and techniques for protecting a system from events that can impact the underlying system security. It can be a proposed plan or a plan that is already in place. The aim is to fully document agreed-upon security controls, a description of the information system, and all documentation that supports the security program. Examples include a configuration management plan, a physical security plan, a contingency plan, an incident response plan, a training plan, and security accreditations.

- Security Control Development: The goal of security control is to protect critical assets, infrastructure, and data. This step ensures that the described security controls are designed, developed, implemented, and modified if needed.

- Developmental Security Test and Evaluation: Ensures the system security controls developed are working properly and are effective. These controls are typically management and operational controls.
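The formal risk assessment described in this phase is about prioritizing where resources go. One common way to make that prioritization explicit, shown here as an assumption rather than as the method this document mandates, is to score each risk as likelihood multiplied by impact on 1-to-5 scales and rank the results. The risk names and ratings below are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); assumed scale
    impact: int      # 1 (negligible) to 5 (severe); assumed scale

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1..5 scale.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rank_risks(risks: List[Risk]) -> List[Risk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("Unpatched HMI workstation", likelihood=4, impact=4),
        Risk("Vendor remote access misuse", likelihood=3, impact=5),
        Risk("Lost field tablet", likelihood=2, impact=2),
    ]
    for risk in rank_risks(register):
        print(risk.score, risk.name)
```

A simple product is easy to explain to stakeholders, but it treats a rare, severe event the same as a frequent, minor one; organizations often layer additional criteria on top of a score like this.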

Phase 3—Implementation

- Inspection and Acceptance: Ensuring the organization validates and verifies that the described functionality is included in the deliverables.

- System Integration: Ensuring the system is integrated and ready for operation based on vendor and industry best practices and regulatory requirements.

- Security Certification: Ensuring implementation follows established verification techniques and procedures, typically provided by third parties, providing confidence that the appropriate measures are in place to protect the PMS. Certification also describes known system vulnerabilities.

- Security Accreditation: Having a senior member of staff provide the necessary authorization of the system to process, store, or transmit information. For PMSs, common criteria include secure configurations for operating systems, device identity and inventory, key management and trust relationships, operational security verification, and capturing of required audit data.

Phase 4—Operations and Maintenance

This phase includes three main areas:

- Procedural aspects of security including training and awareness.

- Configuration Management and Control: Ensuring adequate consideration of potential security impacts caused by changes to a system. Configuration management and configuration control procedures are critical in establishing a baseline of hardware, software, and firmware components for the PMS and subsequently controlling and maintaining an accurate inventory of system changes.

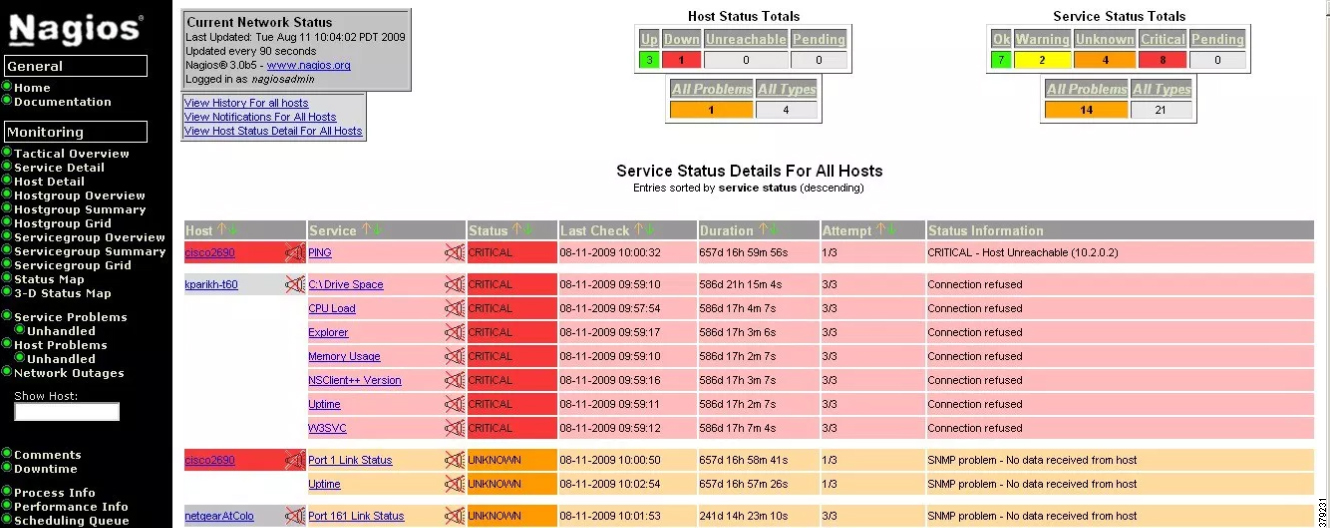

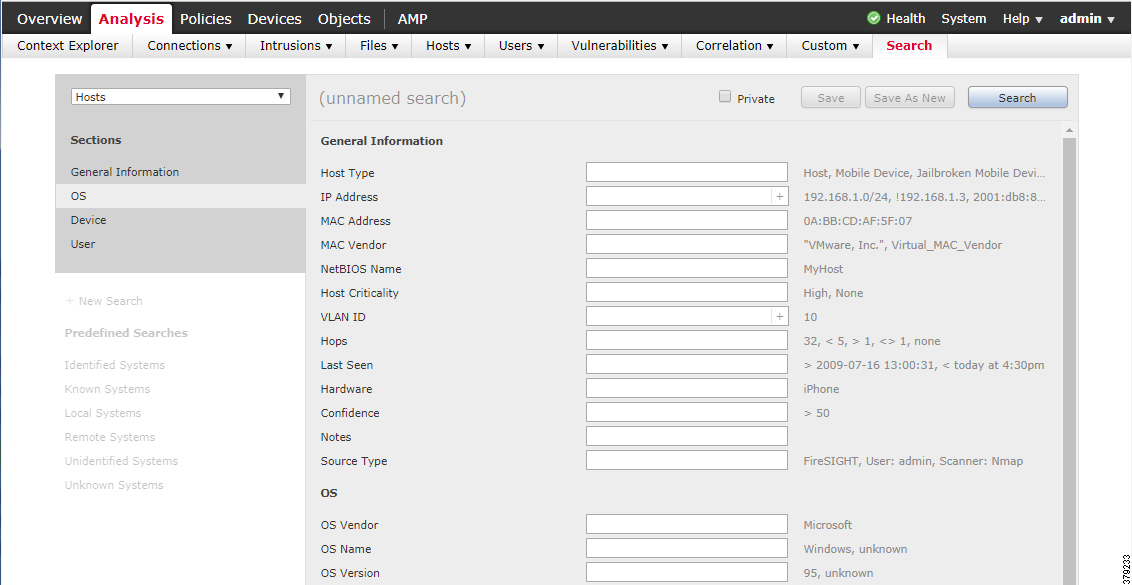

■ Continuous Monitoring: Ensuring controls are effective through periodic testing and evaluation. Examples include verifying the continued effectiveness of controls over time, reporting the security status of the PMS to system operators, developing real-time, automated, adaptive monitoring of devices to support threat mitigation, and automating security tasks such as vulnerability assessments and penetration testing.
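The baseline-and-inventory idea under Configuration Management and Control can be sketched in a few lines. The following is a minimal illustration only, not a description of any particular product: the `Component` record, the asset tags, and the component names and versions are all hypothetical, and a real PMS inventory would come from an asset management system rather than hand-written lists.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One inventoried item (all field values here are hypothetical)."""
    asset: str    # asset tag, e.g. "rtu-07"
    kind: str     # "hardware", "software", or "firmware"
    name: str
    version: str


def diff_against_baseline(baseline, observed):
    """Report components added, removed, or changed in version since the baseline."""
    def key(component):
        return (component.asset, component.kind, component.name)

    base = {key(c): c for c in baseline}
    seen = {key(c): c for c in observed}

    added = sorted(k for k in seen if k not in base)
    removed = sorted(k for k in base if k not in seen)
    changed = sorted(
        (k, base[k].version, seen[k].version)
        for k in base
        if k in seen and base[k].version != seen[k].version
    )
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical approved baseline and a later inventory scan.
baseline = [
    Component("rtu-07", "firmware", "rtu-os", "2.1.0"),
    Component("hmi-01", "software", "hmi-client", "5.4"),
]
observed = [
    Component("rtu-07", "firmware", "rtu-os", "2.1.3"),
    Component("hmi-01", "software", "hmi-client", "5.4"),
    Component("hmi-01", "software", "remote-desktop-tool", "1.0"),
]

report = diff_against_baseline(baseline, observed)
for category, items in report.items():
    print(category, items)
```

Each entry in the report is a candidate for review under the change-control procedure: an unexpected addition or version change is either an undocumented change to record or a potential security event to investigate.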

Phase 5—Disposition

■ Information Preservation: Ensuring necessary information is retained for internal compliance or to conform to legal or regulatory requirements, as well as to accommodate future technology changes.

■ Media Sanitization: Ensuring data is deleted as necessary, following the disposal protocols specified for the data type.

■ Hardware and Software Disposal: Ensuring hardware and software are disposed of in accordance with internal compliance and any legal requirements.

Establishing policies and procedures for the secure disposition of devices that held sensitive information or data is critical. Devices that have held sensitive information should be securely wiped of all data along with any associated certificates or identifiers.

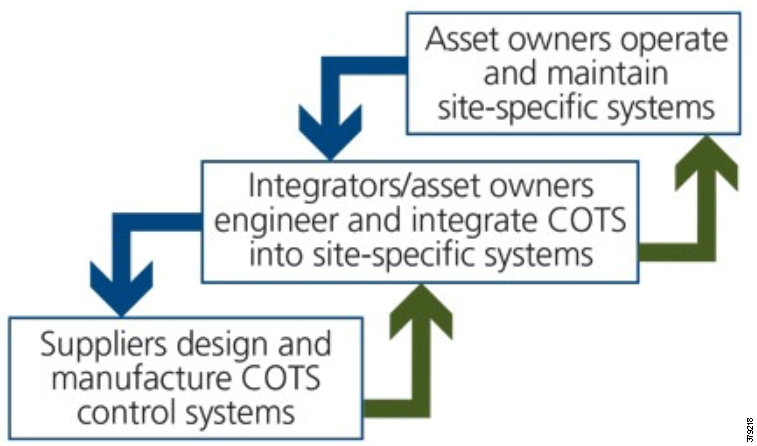

In addition to the recommended steps, it is imperative that all parties involved in the PMS share responsibility across all phases of the cybersecurity lifecycle. ISASecure recommends the relational approach (Figure 13) where:

■ Product suppliers must securely develop COTS components that include security capabilities to support the intended use of the products in integrated operation and control solutions.

■ System integrators must use practices that result in secure site-specific solutions to support the cybersecurity requirements for the intended deployment environment at operational sites.

■ Asset owners or their designees must configure, commission, operate, and maintain the deployed solution in accordance with the solution’s documented cybersecurity instructions, thereby ensuring that the solution’s cybersecurity capabilities do not degrade over time.

Figure 13 Lifecycle Phases and Audiences [11]

Security Process

Cybersecurity programs are challenging. Now more than ever, organizations are leveraging digital strategies to optimize their businesses. This involves embracing new technologies, with each new device and associated configuration adding further complexity to an already complex attack surface.

As technology adoption accelerates, the recognized industry shortage of security expertise leaves many organizations’ cybersecurity teams struggling to deal effectively with cybersecurity issues and to communicate them to leadership.

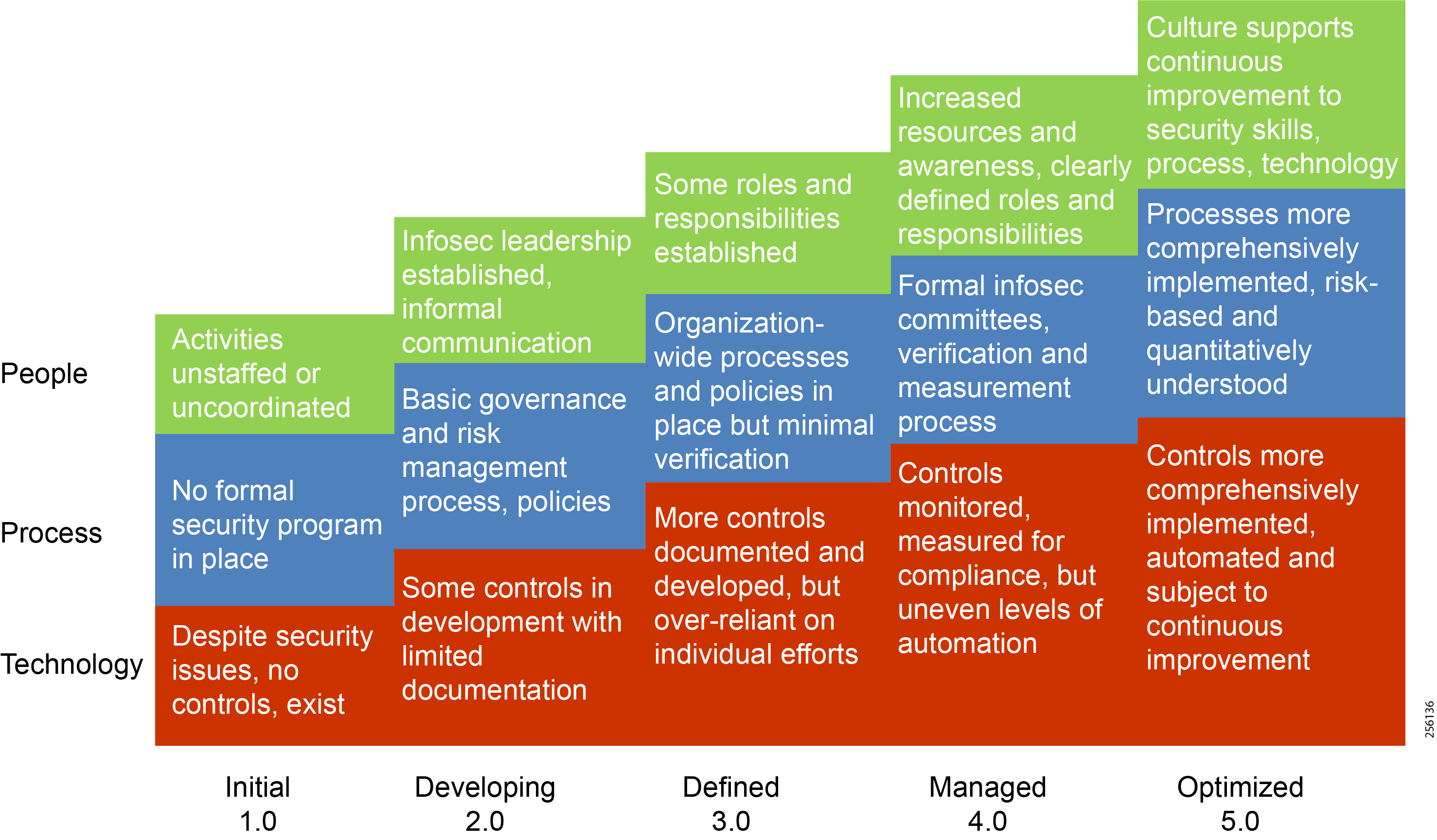

As pipeline companies evolve, cybersecurity management has become an essential and integrated business function. It requires the appropriate set of people, processes, and technologies to understand the organization’s current security posture, determine a target state for an improved profile, and then, within budget constraints, create a plan and recommend a roadmap to deliver that target state. This should involve:

■ Evaluating the current PMS’s cybersecurity management programs and underlying controls

■ Identifying security gaps, ineffective operational processes, and poorly designed technology security controls

■ Defining a security strategy and roadmap to address current and emerging threats to the pipeline system

■ Developing and prioritizing security improvements to maximize return on investment and protect data and assets

The security lifecycle follows the recommended steps in Figure 14.

Figure 14 Steps in the Security Lifecycle

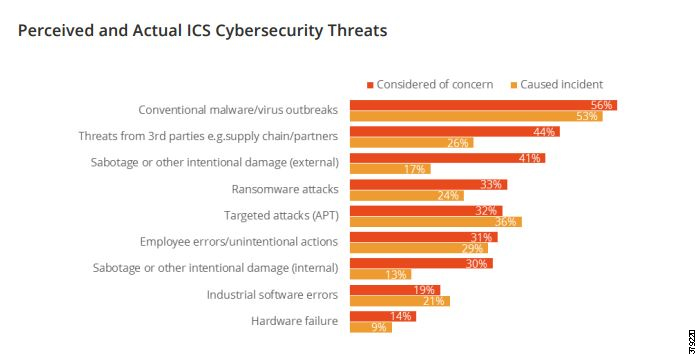

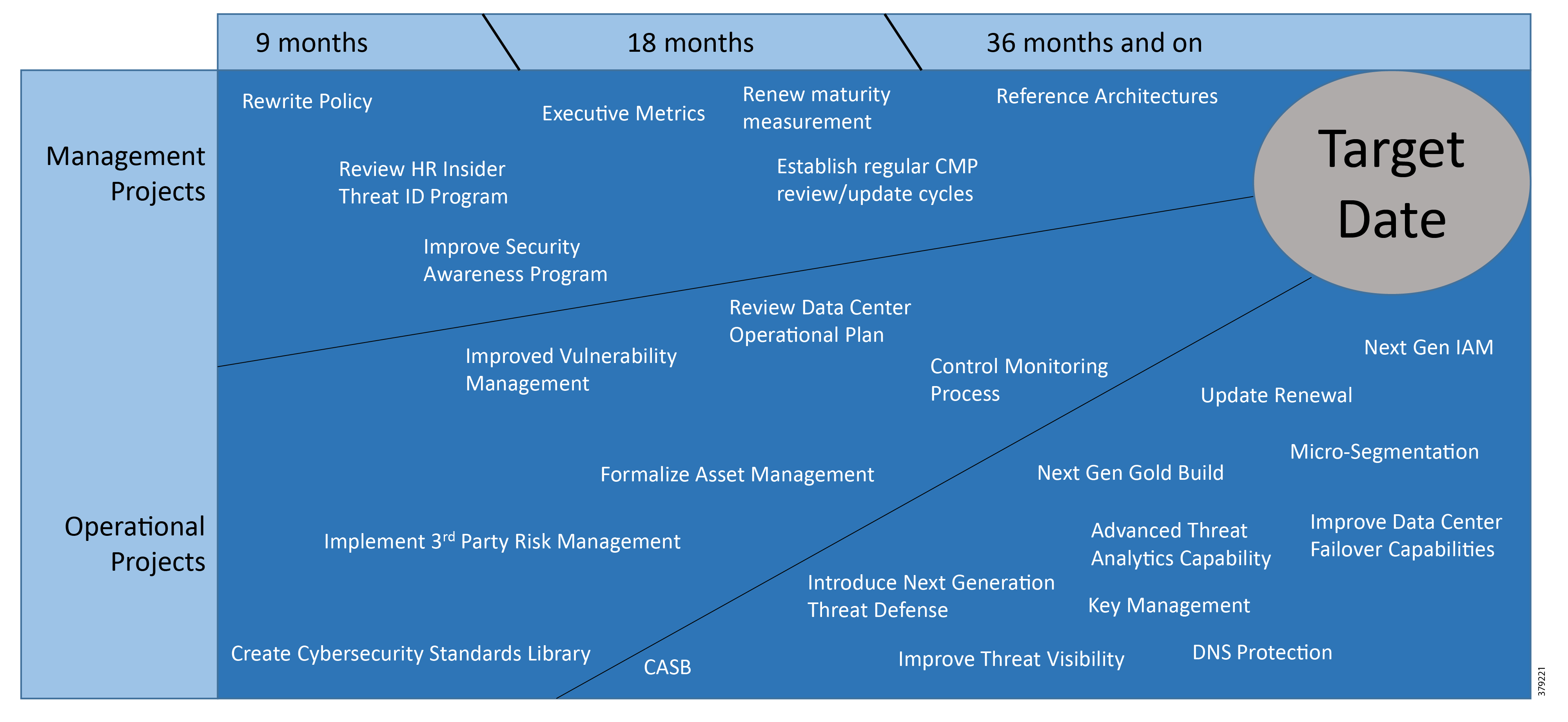

The aim of the security process is to create a security strategy roadmap (Figure 16) that leads to a best-practice implementation architecture. The roadmap takes the PMS operator from the current state to the desired state through a mixture of management, operational, and technology projects, and it aligns the cybersecurity strategy with business objectives and priorities by quantifying the financial impact of cybersecurity risks. Figure 15, drawn from a 2017 Kaspersky report on OT and ICS security, shows where reported incidents originated.

Figure 15 Sources of Cybersecurity Incidents

Figure 15 shows malware as the most common source of incidents, but targeted attacks accounted for 36%, a significant risk. The aim of this preventive process is to identify risk-mitigating security controls and financially quantify residual risk, then map security controls to business and technical objectives at specific places in the pipeline architecture.

The cost of an IT-related data breach can be significant. The 2017 average, as reported by IBM [13], reached $3.62 million, at an average cost of $141 per compromised record containing sensitive data. Such records could hold operations or commercial information that provides competitive advantage to your operations or those of your customers.
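As a rough worked example of the arithmetic behind these figures, the sketch below derives the breach size implied by the two cited averages and scales the per-record cost to another record count. Only the two dollar figures come from the text; the function names and the 10,000-record scenario are illustrative assumptions, and a linear per-record estimate is a simplification rather than a risk model.

```python
AVERAGE_BREACH_COST_USD = 3_620_000  # 2017 average total cost cited in the text
COST_PER_RECORD_USD = 141            # 2017 average cost per record cited in the text


def implied_record_count(total_cost_usd, cost_per_record_usd):
    """Number of records implied by a total cost and a per-record cost."""
    return round(total_cost_usd / cost_per_record_usd)


def estimate_breach_cost(records, cost_per_record_usd=COST_PER_RECORD_USD):
    """Naive linear estimate: records multiplied by the average per-record cost."""
    if records < 0:
        raise ValueError("records must be non-negative")
    return records * cost_per_record_usd


# The two cited averages together imply a breach of roughly 25,700 records.
print(implied_record_count(AVERAGE_BREACH_COST_USD, COST_PER_RECORD_USD))

# Hypothetical scenario: 10,000 sensitive records exposed.
print(estimate_breach_cost(10_000))
```

An estimate of this kind is only a starting point for the financial quantification described above; it does not account for operational downtime or safety consequences.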

The result should be to securely integrate IT and OT domains and minimize risk, establish a baseline for security operations and compliance, and justify investments in security solutions to business leaders by rationalizing the implementation of specific security controls that mitigate the business’s largest identified security threats.

Figure 16 Example Security Strategy Roadmap

Administrative Components of a Security Program

Bringing security into the basic culture of the organization requires the engagement of personnel at all levels, along with policies that define the framework and the enforcement actions needed to institutionalize the security program throughout the organization.

Personnel

As noted, policies and procedures do not create a successful security profile for any operations company without the full participation of its people. This means that from the executive team to each employee, an organization must be fully engaged in creating a strong security culture. The following sections highlight the roles of individuals and departments in midstream and downstream organizations and how they contribute to the security profile and lifecycle, but these roles are found in all operational companies.

Chief Information Officer (CIO) and Chief Information Security Officer (CISO or CSO)

Executive and managerial support must exist for security policies and procedures to be implemented within an organization. The CIO or equivalent is generally responsible and accountable for ensuring that the security policies and procedures are adequate and enforced throughout the organization. Security hardening is a component of the overall security policy and strategy within an organization. In some organizations, the role of the CIO will also have a focus on supporting operational line-of-business (LOB) leaders to secure their environments.

The two categories for discussion within the IT and OT organizations are:

■ Implementers: Responsible for the creation, deployment, maintenance, and architecture of the network infrastructure

■ Users: Use the network infrastructure to execute the business of the company

Implementers—Network and Security Administrators and Architects

The network and server staff are generally responsible for the overall design, implementation, and maintenance of the communications infrastructure and system. As the boundary between OT and IT blurs, security strategies should align and the teams should work more closely together at this level. Security policies adopted through regular IT security hardening are now filtering into OT standards (such as the NIST Energy Sector Asset Management and IEC 62443-2-3 [14]) to help secure ICSs and to help IT staff understand OT business flows and SLAs so that the security implementation aligns with them. Teams that support the multi-service systems (pipeline communications, physical security, business-enabling applications) must work closely with the teams responsible for the SCADA and PMSs.

These staff are the workhorses and technical experts for the system hardening policies and procedures. They are involved in all three phases of the attack continuum: before, during, and after. For hardening, the team needs to:

■ Install and configure systems in line with security hardening policies

■ Maintain system updates, patches, and backups on a continual basis

■ Continuously monitor the system for system integrity and security events

■ Mitigate security incidents and recover from a security compromise

■ Security test patches, updates, and any security mitigation before deployment

■ Plan improvements to the system hardening

■ Continuously update technical knowledge to understand the latest threats and provide mitigation policies and procedures

■ Document all procedures and policies

■ Work with third-party vendors associated with the pipeline system

Users—Pipeline Operators, Plant Personnel, and Contractors

Users of the system must comply with the security hardening policies and procedures, and should be trained and regularly updated on all security policies. Access to the system is a good example of where users need to follow security procedures. An end user should have neither the permission nor the ability to connect non-approved devices and applications to the SCADA system. For example, a contractor should use a laptop provided by the operator or only have access to the system through a dedicated terminal.
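The access rule described here can be expressed as a default-deny check. The sketch below is illustrative only: the `ConnectionRequest` fields, the role names, and the device identifiers are hypothetical, and in practice such a rule would be enforced by network access control and endpoint tooling rather than application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionRequest:
    """A request to attach a device to the SCADA network (hypothetical fields)."""
    user_role: str         # e.g. "operator" or "contractor"
    device_id: str
    operator_issued: bool  # True if the pipeline operator owns and manages the device


# Hypothetical allowlist of approved devices.
APPROVED_DEVICES = {"eng-laptop-014", "dedicated-terminal-02"}


def is_connection_allowed(request, approved_devices=APPROVED_DEVICES):
    """Default deny: only approved devices connect, and contractors may only
    use equipment issued by the operator (a loaned laptop or dedicated terminal)."""
    if request.device_id not in approved_devices:
        return False
    if request.user_role == "contractor" and not request.operator_issued:
        return False
    return True


# A contractor's personal laptop is rejected; the dedicated terminal is accepted.
print(is_connection_allowed(ConnectionRequest("contractor", "personal-laptop", False)))
print(is_connection_allowed(ConnectionRequest("contractor", "dedicated-terminal-02", True)))
```

Checking the allowlist first means that an unknown device is refused regardless of who is using it, which matches the requirement that users have neither the permission nor the ability to connect non-approved equipment.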

A significant indicator of the success of a security program is the engagement of the members of the organization, from executive management to the summer intern. It is imperative that leadership sets the tone through full participation and involvement. For example, security policies such as Bring Your Own Device (BYOD) should apply to executives exactly as they apply to “normal” employees, with no exceptions. The process should start at the top and flow throughout the organization.

Policy Development

Policies form the framework upon which the organization functions and control what procedures and processes are defined and used. They define the rules related to security activities and help to characterize how the various organizations within an operational center work together. They also include potential corrective actions needed when policies are violated. Policies, like other components of the SDLC, must be regularly reviewed and updated to reflect organizational and operational changes and recommended best practices.

A policy should contain the following components:

■ Policy Statement: Clearly denotes which security components it addresses.

■ Purpose of the Policy: States why the policy is required.

■ Definitions: Ensures that words and acronyms are clearly defined and unambiguous.

■ Responsible Executive or Department: Identifies the executive sponsor of the policy, along with relevant partners and peer organizations that must align with the policy.

■ Entities Affected by the Policy: Specifies all members of the organization that are impacted by and must follow the specified policy.

■ Procedures: Specifies the details of the policy, which may include sections such as general rules of behavior, training and enforcement, a comprehensive outline of actions, and rules and guiding principles necessary to implement the desired policy.
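The six components listed above map naturally onto a simple record that can be checked for completeness before a policy is published. The following sketch is one possible representation, not a prescribed format: the class name, field names, and sample values are assumptions made for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class SecurityPolicy:
    """One policy document, with a field per component listed in the text."""
    policy_statement: str
    purpose: str
    definitions: dict
    responsible_executive: str
    entities_affected: list
    procedures: list


def missing_components(policy):
    """Return the names of components that are still empty, in declaration order."""
    return [f.name for f in fields(policy) if not getattr(policy, f.name)]


# Hypothetical draft that has no definitions or procedures yet.
draft = SecurityPolicy(
    policy_statement="Passwords for PMS accounts",
    purpose="Reduce the risk of unauthorized access to control systems",
    definitions={},
    responsible_executive="CISO",
    entities_affected=["employees", "contractors"],
    procedures=[],
)

print(missing_components(draft))
```

A check like this can be run as part of the regular policy review cycle so that incomplete drafts are flagged before they are circulated.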

By clearly stating the procedures and identifying the parties that must participate and cooperate, the organization can remove ambiguity from the process and create a clear framework to guide and support the continuously improving security posture of the OT organization. Examples of security policies include:

■ Passwords, their definition, frequency of change, and how they should be protected

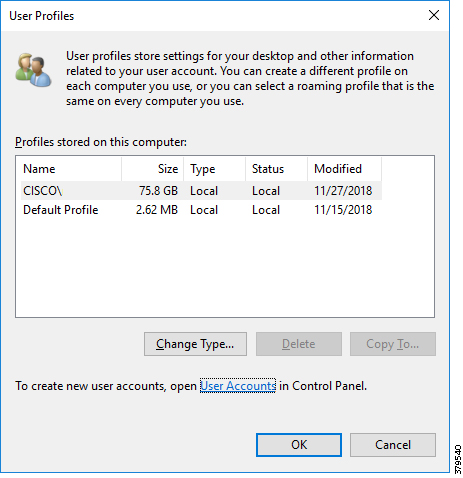

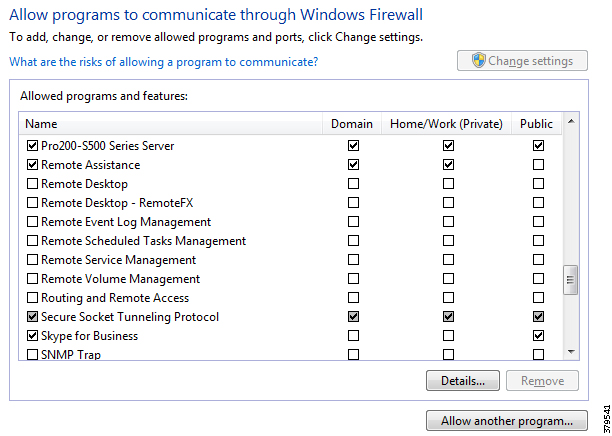

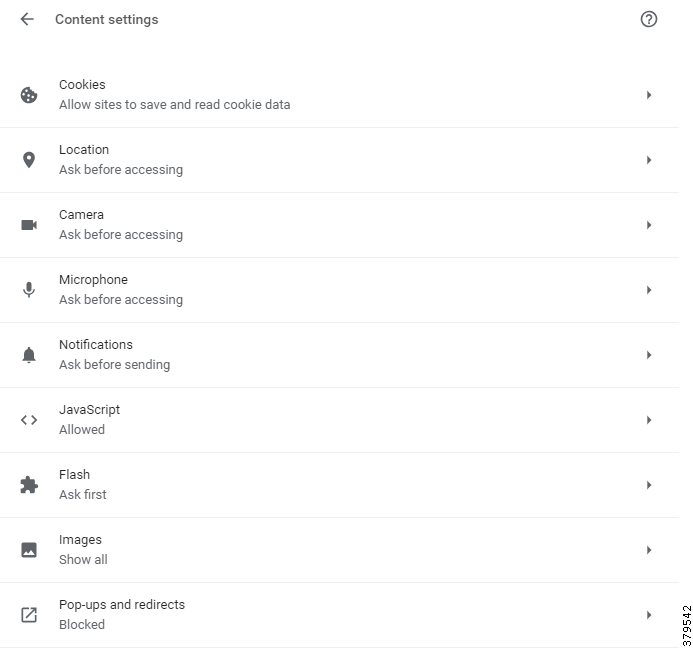

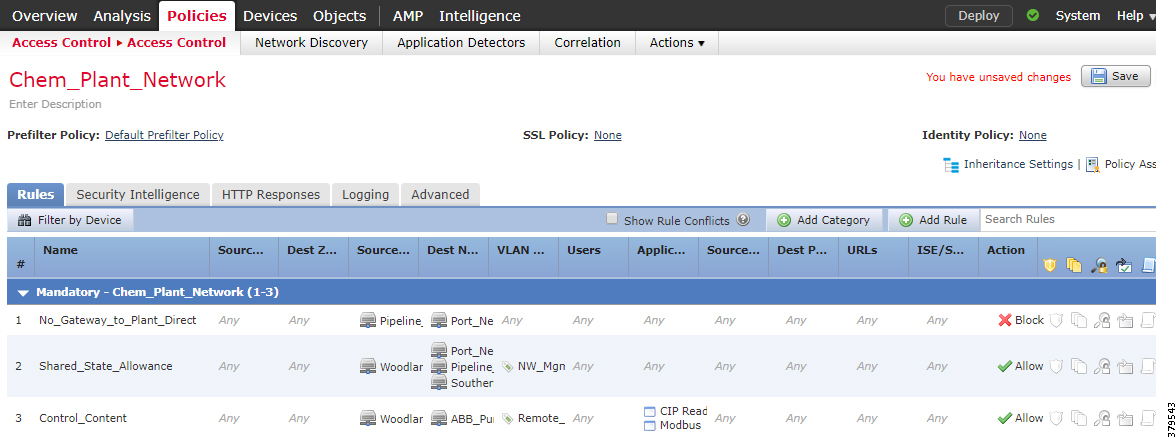

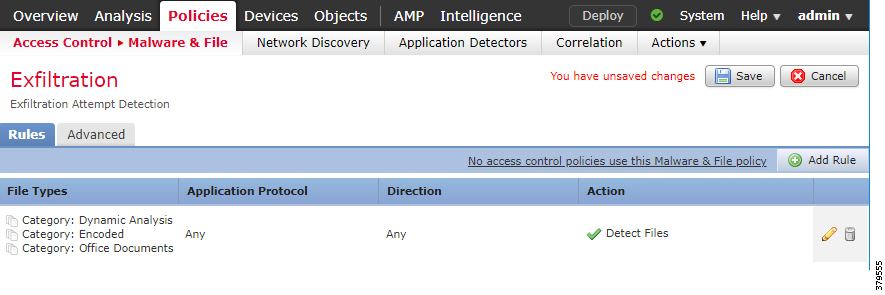
