Implementing MPLS Traffic Engineering

Traditional IP routing focuses on forwarding traffic to its destination as quickly as possible. Routing protocols compute the least-cost route to each destination according to their metric, and every router forwards packets hop by hop based on the destination IP address. Traditional IP routing therefore does not consider the available bandwidth of a link, so some links can become over-utilized while others are under-used, and bandwidth is not used efficiently. Traffic engineering (TE) addresses problems that result from this inefficient mapping of traffic streams onto network resources. It lets you control the path that data packets follow and move traffic flows from congested links to non-congested links, which is not possible with automatically computed destination-based shortest paths.

Multiprotocol Label Switching (MPLS), with its label switching capabilities, eliminates the need for an IP route lookup and creates a virtual circuit (VC) switching function. This gives enterprises the same performance on their IP-based network services as on services delivered over traditional networks such as Frame Relay or Asynchronous Transfer Mode (ATM). MPLS traffic engineering (MPLS-TE) relies on the MPLS backbone to replicate and expand upon the TE capabilities of Layer 2 ATM and Frame Relay networks.

MPLS-TE learns the topology and resources available in a network and then maps traffic flows to particular paths based on resource requirements and network resources such as bandwidth. MPLS-TE builds a unidirectional tunnel from a source to a destination in the form of a label switched path (LSP), which is then used to forward traffic. The point where the tunnel begins is called the tunnel headend or tunnel source, and the node where the tunnel ends is called the tunnel tailend or tunnel destination. A router through which the tunnel passes is called the mid-point of the tunnel.

MPLS-TE uses extensions to a link-state Interior Gateway Protocol (IGP), such as Intermediate System-to-Intermediate System (IS-IS) or Open Shortest Path First (OSPF). MPLS-TE calculates TE tunnels at the LSP headend based on required and available resources (constraint-based routing). If configured, the IGP automatically routes the traffic onto these LSPs. Typically, a packet that crosses the MPLS-TE backbone travels on a single LSP that connects the ingress point to the egress point. MPLS-TE automatically establishes and maintains the LSPs across the MPLS network by using the Resource Reservation Protocol (RSVP).

Note: A combination of unlabelled paths protected by labelled paths is not supported.

Overview of MPLS-TE Features

In MPLS traffic engineering, IGP extensions flood the TE information across the network. Once the IGP distributes the link attributes and bandwidth information, the headend router calculates the best path from head to tail for the MPLS-TE tunnel. This path can also be configured explicitly. Once the path is calculated, RSVP-TE is used to set up the TE label switched path (LSP).

To forward traffic over the tunnel, you can configure autoroute, forwarding adjacency, or static routing. The autoroute feature announces the routes assigned by the tailend router and its downstream routes to the routing table of the headend router, and the tunnel is treated as a directly connected link to the tailend.

If forwarding adjacency is enabled, the MPLS-TE tunnel is advertised as a link in the IGP network, with the link's cost associated with it. Routers outside of the TE domain can see the TE tunnel and use it to compute the shortest path for routing traffic throughout the network.

MPLS-TE provides a protection mechanism known as fast reroute to minimize packet loss during a failure. Fast reroute requires backup tunnels. The autotunnel backup feature enables a router to build backup tunnels dynamically when they are needed, instead of requiring you to pre-configure each backup tunnel and then assign it to the protected interfaces.

DiffServ Aware Traffic Engineering (DS-TE) enables you to configure multiple bandwidth constraints on an MPLS-enabled interface to support various classes of service (CoS). These bandwidth constraints can be treated differently based on the requirement for the traffic class using that constraint.

The MPLS traffic engineering autotunnel mesh feature allows you to set up a full mesh of TE tunnels automatically with a minimal set of MPLS traffic engineering configurations. The MPLS-TE auto bandwidth feature allows you to automatically adjust bandwidth based on traffic patterns without traffic disruption.

The MPLS-TE interarea tunneling feature allows you to establish TE tunnels spanning multiple Interior Gateway Protocol (IGP) areas and levels, thus eliminating the requirement that headend and tailend routers should reside in a single area.

For detailed information about MPLS-TE features, see MPLS-TE Features - Details.

Note: MPLS-TE Nonstop Routing (NSR) is enabled by default without any user configuration and cannot be disabled. MPLS-TE NSR means that the application is in hot-standby mode and the standby MPLS-TE instance is ready to take over from the active instance quickly on RP failover. Note that MPLS-TE itself does not do routing. If a standby card is available, the MPLS-TE instance is in a hot-standby position. Any issue with MPLS-TE affects NSR on the router, and this is displayed in the show redundancy output.

How MPLS-TE Works

MPLS-TE automatically establishes and maintains label switched paths (LSPs) across the backbone by using RSVP. The path that an LSP uses is determined by the LSP resource requirements and network resources, such as bandwidth. Available resources are flooded by extensions to a link state based Interior Gateway Protocol (IGP). MPLS-TE tunnels are calculated at the LSP headend router, based on a fit between the required and available resources (constraint-based routing). The IGP automatically routes the traffic to these LSPs. Typically, a packet crossing the MPLS-TE backbone travels on a single LSP that connects the ingress point to the egress point.

The following sections describe the components of MPLS-TE:

Tunnel Interfaces

From a Layer 2 standpoint, an MPLS tunnel interface represents the headend of an LSP. It is configured with a set of resource requirements, such as bandwidth and media requirements, and priority. From a Layer 3 standpoint, an LSP tunnel interface is the headend of a unidirectional virtual link to the tunnel destination.

MPLS-TE Path Calculation Module

This calculation module operates at the LSP headend. The module determines a path to use for an LSP. The path calculation uses a link-state database containing flooded topology and resource information.
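The idea of constraint-based path calculation can be illustrated with a minimal Python sketch. It is not the router's implementation: the function name `cspf`, the link tuple layout, and the sample topology are assumptions made for illustration. The sketch prunes links whose available bandwidth is below the request and then runs a shortest-path search over the remaining links.

```python
import heapq

def cspf(links, src, dst, required_bw):
    """Return (cost, path) for the cheapest path whose links all have at
    least required_bw available, or None if no such path exists.

    links: iterable of (node_a, node_b, te_metric, available_bw); each link
    is treated as bidirectional here, which is a simplification.
    """
    # Step 1: prune links that cannot satisfy the bandwidth constraint.
    graph = {}
    for a, b, metric, avail in links:
        if avail >= required_bw:
            graph.setdefault(a, []).append((b, metric))
            graph.setdefault(b, []).append((a, metric))

    # Step 2: run Dijkstra's shortest-path search on what remains.
    queue = [(0, src, [src])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, metric in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (cost + metric, neighbor, path + [neighbor]))
    return None

# Hypothetical topology: R1-R2-R3 is the shortest path, but R2-R3 is nearly full.
LINKS = [
    ("R1", "R2", 5, 1000),
    ("R2", "R3", 5, 50),
    ("R1", "R4", 5, 1000),
    ("R4", "R5", 5, 1000),
    ("R5", "R3", 5, 1000),
]

small = cspf(LINKS, "R1", "R3", 10)    # fits on the shortest path
large = cspf(LINKS, "R1", "R3", 100)   # must detour around R2-R3
```

In this sketch a small request stays on the shortest path, while a larger request is steered onto the longer path that has enough available bandwidth, which is the behavior that plain destination-based routing cannot provide.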

RSVP with TE Extensions

RSVP operates at each LSP hop and is used to signal and maintain LSPs based on the calculated path.

MPLS-TE Link Management Module

This module operates at each LSP hop, performs link call admission on the RSVP signaling messages, and keeps track of the topology and resource information to be flooded.

Link-state IGP

Either Intermediate System-to-Intermediate System (IS-IS) or Open Shortest Path First (OSPF) can be used as IGPs. These IGPs are used to globally flood topology and resource information from the link management module.

Label Switching Forwarding

This forwarding mechanism provides routers with a Layer 2-like ability to direct traffic across multiple hops of the LSP established by RSVP signaling.

Configuring MPLS-TE

MPLS-TE requires co-ordination among several global neighbor routers. RSVP, MPLS-TE and IGP are configured on all routers and interfaces in the MPLS traffic engineering network. Explicit path and TE tunnel interfaces are configured only on the head-end routers. MPLS-TE requires some basic configuration tasks explained in this section.

Building MPLS-TE Topology

Building the MPLS-TE topology sets up the environment for creating MPLS-TE tunnels. This procedure includes the basic node and interface configuration for enabling MPLS-TE. To perform constraint-based routing, you need to enable OSPF or IS-IS as the IGP extension.

Before You Begin

Before you start to build the MPLS-TE topology, the following pre-requisites are required:

- A stable router ID is required at either end of the link to ensure that the link is successful. If you do not assign a router ID, the system defaults to the global router ID. Default router IDs are subject to change, which can result in an unstable link.

- Enable RSVP on the port interface.

Example

This example enables MPLS-TE on a node and then specifies the interface that is part of the MPLS-TE. Here, OSPF is used as the IGP extension protocol for information distribution.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# interface hundredGigE0/0/0/3

RP/0/RP0/CPU0:router(config)# router ospf 1

RP/0/RP0/CPU0:router(config-ospf)# area 0

RP/0/RP0/CPU0:router(config-ospf-ar)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-ospf-ar)# interface hundredGigE0/0/0/3

RP/0/RP0/CPU0:router(config-ospf-ar-if)# exit

RP/0/RP0/CPU0:router(config-ospf)# mpls traffic-eng router-id 192.168.70.1

RP/0/RP0/CPU0:router(config)# commit

Example

This example enables MPLS-TE on a node and then specifies the interface that is part of the MPLS-TE. Here, IS-IS is used as the IGP extension protocol for information distribution.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# interface hundredGigE0/0/0/3

RP/0/RP0/CPU0:router(config)# router isis 1

RP/0/RP0/CPU0:router(config-isis)# net 47.0001.0000.0000.0002.00

RP/0/RP0/CPU0:router(config-isis)# address-family ipv4 unicast

RP/0/RP0/CPU0:router(config-isis-af)# metric-style wide

RP/0/RP0/CPU0:router(config-isis-af)# mpls traffic-eng level 1

RP/0/RP0/CPU0:router(config-isis-af)# exit

RP/0/RP0/CPU0:router(config-isis)# interface hundredGigE0/0/0/3

RP/0/RP0/CPU0:router(config-isis-if)# exit

RP/0/RP0/CPU0:router(config)# commit

Creating an MPLS-TE Tunnel

Creating an MPLS-TE tunnel is a process of customizing the traffic engineering to fit your network topology. The MPLS-TE tunnel is created at the headend router. You need to specify the destination and path of the TE LSP.

To steer traffic through the tunnel, you can use any of the following methods:

- Static Routing

- Autoroute Announce

- Forwarding Adjacency

From the 7.1.1 release, IS-IS autoroute announce function is enhanced to redirect traffic from a source IP address prefix to a matching IP address assigned to an MPLS-TE tunnel destination interface.

Before You Begin

The following prerequisites are required to create an MPLS-TE tunnel:

- You must have a router ID for the neighboring router.

- A stable router ID is required at either end of the link to ensure that the link is successful. If you do not assign a router ID to the routers, the system defaults to the global router ID. Default router IDs are subject to change, which can result in an unstable link.

Configuration Example

This example configures an MPLS-TE tunnel on the headend router with a destination IP address 192.168.92.125. The bandwidth for the tunnel, path-option, and forwarding parameters of the tunnel are also configured. You can use static routing, autoroute announce or forwarding adjacency to steer traffic through the tunnel.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# interface tunnel-te 1

RP/0/RP0/CPU0:router(config-if)# destination 192.168.92.125

RP/0/RP0/CPU0:router(config-if)# ipv4 unnumbered Loopback0

RP/0/RP0/CPU0:router(config-if)# path-option 1 dynamic

RP/0/RP0/CPU0:router(config-if)# autoroute announce

! Alternatively, configure forwarding-adjacency here in place of autoroute announce.

RP/0/RP0/CPU0:router(config-if)# signalled-bandwidth 100

RP/0/RP0/CPU0:router(config)# commit

Verification

Verify the configuration of MPLS-TE tunnel using the following command.

RP/0/RP0/CPU0:router# show mpls traffic-eng tunnels brief

Signalling Summary:

LSP Tunnels Process: running

RSVP Process: running

Forwarding: enabled

Periodic reoptimization: every 3600 seconds, next in 2538 seconds

Periodic FRR Promotion: every 300 seconds, next in 38 seconds

Auto-bw enabled tunnels: 0 (disabled)

TUNNEL NAME DESTINATION STATUS STATE

tunnel-te1 192.168.92.125 up up

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

Automatic Modification Of An MPLS-TE Tunnel’s Metric

If the IGP calculation on a router results in an equal cost multipath (ECMP) scenario where next-hop interfaces are a mix of MPLS-TE tunnels and physical interfaces, you may want to ensure that a TE tunnel is preferred. Consider this topology:

- All links in the network have a metric of 5.

- To offload a congested link between R3 and R4, an MPLS-TE tunnel is created from R3 to R2.

- If the metric of the tunnel is also 5, traffic from R3 to R5 is load-balanced between the tunnel and the physical R3-R4 link.

To ensure that the MPLS-TE tunnel is preferred in such scenarios, configure the autoroute metric command on the tunnel interface. The modified metric is applied in the routing information base (RIB), and the tunnel is preferred over the physical path of the same metric. Sample configuration:

Router# configure

Router(config)# interface tunnel-te 1

Router(config-if)# autoroute metric relative -1

The autoroute metric command syntax is autoroute metric {absolute|relative} value, where:

- absolute enables the absolute metric mode, for a metric range between 1 and 2147483647.

- relative enables the relative metric mode, for a metric range between -10 and 10, including zero.

Note: Since the relative metric is not saved in the IGP database, the advertised metric of the MPLS-TE tunnel remains 5, and doesn't affect SPF calculation outcomes on other nodes.
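The effect of the two metric modes on an ECMP decision can be sketched in a few lines of Python. This is an illustration only: the function names are hypothetical, and the sketch assumes that a relative value is simply added to the IGP metric while an absolute value replaces it, using the ranges stated above.

```python
ABSOLUTE_RANGE = (1, 2147483647)
RELATIVE_RANGE = (-10, 10)

def tunnel_rib_metric(igp_metric, mode, value):
    """Metric used locally for routes via the tunnel (assumed model)."""
    if mode == "absolute":
        low, high = ABSOLUTE_RANGE
        if not low <= value <= high:
            raise ValueError("absolute metric out of range")
        return value  # replaces the IGP metric
    if mode == "relative":
        low, high = RELATIVE_RANGE
        if not low <= value <= high:
            raise ValueError("relative metric out of range")
        return igp_metric + value  # offsets the IGP metric
    raise ValueError("mode must be 'absolute' or 'relative'")

def preferred_next_hops(candidates):
    """Keep only the lowest-metric candidates; more than one means ECMP."""
    best = min(candidates.values())
    return sorted(name for name, metric in candidates.items() if metric == best)

# Equal metrics: traffic is shared between the tunnel and the physical link.
before = preferred_next_hops({"tunnel-te1": 5, "physical": 5})

# With "autoroute metric relative -1" the tunnel wins the comparison.
after = preferred_next_hops(
    {"tunnel-te1": tunnel_rib_metric(5, "relative", -1), "physical": 5}
)
```

Here `before` contains both next hops, while `after` contains only the tunnel, matching the preference described for the sample configuration.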

Teardown and Reestablishment of RSVP-TE Tunnels

| Feature Name | Release Information | Feature Description |
|---|---|---|
| Teardown and Reestablishment of RSVP-TE Tunnels | Release 7.11.1 | Introduced in this release on: NCS 5500 fixed port routers; NCS 5700 fixed port routers; NCS 5500 modular routers (NCS 5500 line cards; NCS 5700 line cards [Mode: Compatibility; Native]). You can now tear down and reestablish the existing tunnels of headend, midend, or tailend router tunnels of an MPLS network for optimized distribution of the traffic across MPLS and RSVP-TE to improve network performance and enhance resource utilization. Previously, you could reestablish tunnels only at the headend router using the mpls traffic-eng resetup command. The feature introduces these changes: CLI: mpls traffic-eng teardown. YANG Data Model: Cisco-IOS-XR-mpls-te-act.yang (see GitHub, YANG Data Models Navigator). |

In an MPLS-TE network which is configured with RSVP-TE, the headend, midend, and tailend router work together to establish and maintain tunnels or adjacencies for traffic engineering purposes. When the headend router boots up, it plays a critical role in MPLS-TE tunnel establishment using RSVP-TE signaling along the computed path with the tailend router.

During a system reboot, if a tailend router comes up first, the Interior Gateway Protocol (IGP) adjacency establishes a path for the tailend with the adjacent network devices. However, if the headend router comes up and starts creating tunnels during this tailend path creation, it may result in a poor distribution of tunnels at the tailend. In such cases, it's necessary to tear down all the tunnels and recreate them to ensure optimal distribution and functioning of the RSVP-TE as per the network conditions.

From Release 7.11.1, you can tear down and reestablish tunnels across all headend, midend, and tailend routers using the mpls traffic-eng teardown command with all, head, mid, tail parameters.

| Router Name | Router Function | Tunnel Tear-Down and Reestablish Command |
|---|---|---|
| Headend Router | The headend router is responsible for determining the tunnel's path. It initiates the signaling process, specifying the tunnel's parameters and requirements. It sets up and manages the MPLS-TE tunnels. | mpls traffic-eng teardown head |
| Midend Router | The midend router, or an intermediate router or network device, is located between the headend and the tailend along the tunnel's path. It's involved in the signaling process and ensures the proper forwarding of traffic along the established tunnel. | mpls traffic-eng teardown mid |
| Tailend Router | The tailend router is the terminating router or network device located at the other end of the tunnel. It receives and processes the traffic that traverses through the tunnel and ensures proper traffic delivery to the destination. | mpls traffic-eng teardown tail |

When an RSVP-TE tunnel teardown is triggered on Router B, the messages that are sent depend on the role of Router B:

- Router B is configured as tailend and Router A is configured as headend: A ResvTear upstream message is sent to the headend router. This message informs the headend router to tear down the RSVP-TE tunnel and release the resources associated with the tunnel.

- Router B is configured as headend and Router C as tailend: A PathTear downstream message is sent to the tailend router. The headend router (Router B) then initiates the process of recomputing the tunnels, which involves recalculating the path and parameters for establishing new tunnels.

- Router B is configured as midend, with Router A and Router C as headend and tailend respectively: A ResvTear upstream message is sent to the headend router and a PathTear downstream message is sent to the tailend router. These messages inform the respective routers to tear down the RSVP-TE tunnel and release the associated resources.
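The three cases can be summarized as a small lookup, shown here as a Python sketch. The function name and the role strings are hypothetical; only the message names and directions come from the description above.

```python
def teardown_messages(role):
    """Return the (message, direction) pairs a router sends when a
    teardown is triggered on it, keyed by its role for the tunnel."""
    messages = {
        # A tailend notifies the headend by sending ResvTear upstream.
        "tail": [("ResvTear", "upstream")],
        # A headend notifies the tailend by sending PathTear downstream.
        "head": [("PathTear", "downstream")],
        # A midend has neighbors on both sides, so it sends both messages.
        "mid": [("ResvTear", "upstream"), ("PathTear", "downstream")],
    }
    if role not in messages:
        raise ValueError("role must be 'head', 'mid', or 'tail'")
    return messages[role]
```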

You can tear down and set up all types of tunnels including P2P, P2MP, numbered, named, and auto tunnels.

Limitations

- Tunnels can take up to 90 seconds to be reestablished after they are torn down.

- Use the mpls traffic-eng resetup command to reestablish the tunnels only at the headend router.

Configure Tear down and Reestablishment of RSVP-TE Tunnels

Configuration Example

Use the mpls traffic-eng teardown all command to tear down and reestablish all the RSVP-TE tunnels in a network node. This command must be executed in XR EXEC mode.

Router# mpls traffic-eng teardown all

Use the mpls traffic-eng teardown head command to tear down and reestablish the RSVP-TE tunnels at the headend router. This command must be executed in XR EXEC mode.

Router# mpls traffic-eng teardown head

Note: You can also use the mpls traffic-eng resetup command in XR EXEC mode to reestablish tunnels only at the headend router.

Use the mpls traffic-eng teardown mid command to tear down and reestablish the RSVP-TE tunnels at the midend router. This command must be executed in XR EXEC mode.

Router# mpls traffic-eng teardown mid

Use the mpls traffic-eng teardown tail command to tear down and reestablish the RSVP-TE tunnels at the tailend router. This command must be executed in XR EXEC mode.

Router# mpls traffic-eng teardown tail

Use the show mpls traffic-eng tunnels summary command to check RSVP-TE tunnel status after you run the teardown command.

Router# show mpls traffic-eng tunnels summary

Output received:

Thu Sep 14 10:48:45.007 UTC

Path Selection Tiebreaker: Min-fill (default)

LSP Tunnels Process: running

RSVP Process: running

Forwarding: enabled

Periodic reoptimization: every 3600 seconds, next in 2806 seconds

Periodic FRR Promotion: every 300 seconds, next in 9 seconds

Periodic auto-bw collection: 5 minute(s) (disabled)

Signalling Summary:

Head: 14006 interfaces, 14006 active signalling attempts, 14006 established

14006 explicit, 0 dynamic

14006 activations, 0 deactivations

0 recovering, 0 recovered

Mids: 2000

Tails: 4003

Fast ReRoute Summary:

Head: 14000 FRR tunnels, 14000 protected, 0 rerouted

Mid: 2000 FRR tunnels, 2000 protected, 0 rerouted

Summary: 16000 protected, 13500 link protected, 2500 node protected, 0 bw protected

Backup: 6 tunnels, 4 assigned

Interface: 10 protected, 0 rerouted

Bidirectional Tunnel Summary:

Tunnel Head: 0 total, 0 connected, 0 associated, 0 co-routed

Tunnel Tail: 0 total, 0 connected, 0 associated, 0 co-routed

LSPs Head: 0 established, 0 proceeding, 0 associated, 0 standby

LSPs Mid: 0 established, 0 proceeding, 0 associated, 0 standby

LSPs Tail: 0 established, 0 proceeding, 0 associated, 0 standby

Configuring Fast Reroute

Fast reroute (FRR) provides link protection to LSPs enabling the traffic carried by LSPs that encounter a failed link to be rerouted around the failure. The reroute decision is controlled locally by the router connected to the failed link. The headend router on the tunnel is notified of the link failure through IGP or through RSVP. When it is notified of a link failure, the headend router attempts to establish a new LSP that bypasses the failure. This provides a path to reestablish links that fail, providing protection to data transfer. The path of the backup tunnel can be an IP explicit path, a dynamically calculated path, or a semi-dynamic path. For detailed conceptual information on fast reroute, see MPLS-TE Features - Details

Before You Begin

The following prerequisites are required to create an MPLS-TE tunnel:

- You must have a router ID for the neighboring router.

- A stable router ID is required at either end of the link to ensure that the link is successful. If you do not assign a router ID to the routers, the system defaults to the global router ID. Default router IDs are subject to change, which can result in an unstable link.

Configuration Example

This example configures fast reroute on an MPLS-TE tunnel. Here, tunnel-te 2 is configured as the backup tunnel. You can use the protected-by keyword of the path-option command to configure path protection for an explicit path that is protected by another path.

RP/0/RP0/CPU0:router # configure

RP/0/RP0/CPU0:router(config)# interface tunnel-te 1

RP/0/RP0/CPU0:router(config-if)# fast-reroute

RP/0/RP0/CPU0:router(config-if)# exit

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# interface HundredGigabitEthernet0/0/0/3

RP/0/RP0/CPU0:router(config-mpls-te-if)# backup-path tunnel-te 2

RP/0/RP0/CPU0:router(config)# interface tunnel-te 2

RP/0/RP0/CPU0:router(config-if)# backup-bw global-pool 5000

RP/0/RP0/CPU0:router(config-if)# ipv4 unnumbered Loopback0

RP/0/RP0/CPU0:router(config-if)# destination 192.168.92.125

RP/0/RP0/CPU0:router(config-if)# path-option 1 explicit name backup-path protected-by 10

RP/0/RP0/CPU0:router(config-if)# path-option 10 dynamic

RP/0/RP0/CPU0:router(config)# commit

Verification

Use the show mpls traffic-eng fast-reroute database command to verify the fast reroute configuration.

RP/0/RP0/CPU0:router# show mpls traffic-eng fast-reroute database

Tunnel head FRR information:

Tunnel Out intf/label FRR intf/label Status

---------- ---------------- ---------------- -------

tt4000 HundredGigabitEthernet 0/0/0/3:34 tt1000:34 Ready

tt4001 HundredGigabitEthernet 0/0/0/3:35 tt1001:35 Ready

tt4002 HundredGigabitEthernet 0/0/0/3:36 tt1001:36 Ready

Configuring Auto-Tunnel Backup

| Feature Name | Release Information | Feature Description |
|---|---|---|
| Bandwidth Protection Functions to Enhance auto-tunnel backup Capabilities | Release 7.5.1 | This feature introduces bandwidth protection functions for auto-tunnel backups, such as signaled bandwidth, bandwidth protection, and soft-preemption. These functions provide better bandwidth usage and prevent traffic congestion and traffic loss. In earlier releases, auto-tunnel backups provided only link protection and node protection. Backup tunnels were signaled with zero bandwidth, causing traffic congestion when FRR went active. This feature introduces the following commands and keywords: |

The MPLS Traffic Engineering Auto-Tunnel Backup feature enables a router to dynamically build backup tunnels on the interfaces that are configured with MPLS TE tunnels instead of building MPLS-TE tunnels statically.

The MPLS-TE Auto-Tunnel Backup feature has these benefits:

- Backup tunnels are built automatically, eliminating the need for users to pre-configure each backup tunnel and then assign the backup tunnel to the protected interface.

- Protection is expanded: FRR does not protect IP traffic that is not using the TE tunnel or Label Distribution Protocol (LDP) labels that are not using the TE tunnel.

The TE attribute-set template, which specifies a set of TE tunnel attributes, is locally configured at the headend of auto-tunnels. The control plane triggers the automatic provisioning of a corresponding TE tunnel, whose characteristics are specified in the respective attribute-set.

Configuration Example

This example configures Auto-Tunnel backup on an interface and specifies the attribute-set template for the auto tunnels. In this example, a timer removes unused backup tunnels every 20 minutes, and the range of tunnel interface numbers is specified.

RP/0/RP0/CPU0:router # configure

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# interface HundredGigabitEthernet0/0/0/3

RP/0/RP0/CPU0:router(config-mpls-te-if)# auto-tunnel backup

RP/0/RP0/CPU0:router(config-mpls-te-if-auto-backup)# attribute-set ab

RP/0/RP0/CPU0:router(config-mpls-te)# auto-tunnel backup timers removal unused 20

RP/0/RP0/CPU0:router(config-mpls-te)# auto-tunnel backup tunnel-id min 6000 max 6500

RP/0/RP0/CPU0:router(config)# commit

Verification

RP/0/RP0/CPU0:router# show mpls traffic-eng tunnels brief

TUNNEL NAME DESTINATION STATUS STATE

tunnel-te0 200.0.0.3 up up

tunnel-te1 200.0.0.3 up up

tunnel-te2 200.0.0.3 up up

tunnel-te50 200.0.0.3 up up

*tunnel-te60 200.0.0.3 up up

*tunnel-te70 200.0.0.3 up up

*tunnel-te80 200.0.0.3 up up

Bandwidth Protection Functions to Enhance auto-tunnel backup Capabilities

Without bandwidth protection, auto-tunnel backups provide only link protection and node protection (per next-next-hop), and backup tunnels are signalled with zero bandwidth. This causes traffic congestion when FRR goes active, since the backup tunnels might be protecting a large amount of data, such as LSPs with large bandwidth or multiple LSPs.

To address the congestion issue, bandwidth protection capabilities are added for auto-tunnel backups. Bandwidth protection, signalled bandwidth, and soft-preemption settings are provided:

- Bandwidth protection: A link or node protection backup might not provide bandwidth protection. With this setting (bandwidth-protection maximum-aggregate), you can set the maximum bandwidth value that an auto-tunnel can protect.

- Signalled bandwidth: Without bandwidth protection, auto-tunnel backups are signalled with zero bandwidth, with no guarantee that at least some bandwidth is backed up. The backup tunnels might therefore be set up on links that are highly utilized, causing congestion drops when the backup tunnels start to transmit traffic after FRR is triggered.

  This setting (signalled-bandwidth) addresses the issue, since you can set the signalled bandwidth of the tunnel (and reserve minimal bandwidth for an auto-tunnel backup). Setting the signalled bandwidth value for auto-backup tunnels reduces congestion over backup links.

- Soft-preemption: Since bandwidth can be reserved for auto-backup tunnels, a setting (soft-preemption) is provided for soft-preemption of the reserved bandwidth, if it is needed for a higher-priority tunnel.

Configurations

/*Enable Bandwidth Protection On a TE Auto-Tunnel Backup*/

Router # configure

Router(config)# mpls traffic-eng

Router(config-mpls-te)# interface GigabitEthernet 0/2/0/0 auto-tunnel backup

Router(config-te-if-auto-backup)# bandwidth-protection maximum-aggregate 100000

Router(config-te-if-auto-backup)# commit

/*Enable Signalled Bandwidth On a TE Auto-Tunnel Backup*/

Router # configure

Router(config)# mpls traffic-eng attribute-set auto-backup MyBackupConfig

Router(config-te-attribute-set)# signalled-bandwidth 700000

Router(config-te-attribute-set)# commit

After creating the auto backup attribute-set (MyBackupConfig in this case), associate with the auto-tunnel backup interface.

Router# configure

Router(config)# mpls traffic-eng

Router(config-mpls-te)# interface GigabitEthernet 0/2/0/0 auto-tunnel backup

Router(config-te-if-auto-backup)# attribute-set MyBackupConfig

Router(config-te-if-auto-backup)# auto-tunnel backup tunnel-id min 6000 max 6500

Router(config-mpls-te)# commit

/*Enable Soft-Preemption Bandwidth On a TE Auto-Tunnel Backup*/

Router# configure

Router(config)# mpls traffic-eng attribute-set auto-backup MyBackupConfig

Router(config-te-attribute-set)# soft-preemption

Router(config-te-attribute-set)# commit

Verification

/*Verify Auto-Tunnel Backup Configuration*/

In the output, bandwidth protection details are displayed, as denoted by BW.

Router# show mpls traffic-eng auto-tunnel backup

AutoTunnel Backup Configuration:

Interfaces count: 1

Unused removal timeout: 1h 0m 0s

Configured tunnel number range: 6000-6500

AutoTunnel Backup Summary:

AutoTunnel Backups:

0 created, 0 up, 0 down, 0 unused

0 NHOP, 0 NNHOP, 0 SRLG strict, 0 SRLG preferred, 0 SRLG weighted, 0 BW protected

Protected LSPs:

0 NHOP, 0 NHOP+SRLG, 0 NHOP+BW, 0 NHOP+BW+SRLG

0 NNHOP, 0 NNHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

Protected S2L Sharing Families:

0 NHOP, 0 NHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

0 NNHOP, 0 NNHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

Protected S2Ls:

0 NHOP, 0 NHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

0 NNHOP, 0 NNHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

Cumulative Counters (last cleared 00:08:47 ago):

Total NHOP NNHOP

Created: 0 0 0

Connected: 0 0 0

Removed (down): 0 0 0

Removed (unused): 0 0 0

Removed (in use): 0 0 0

Range exceeded: 0 0 0

Configuring Next Hop Backup Tunnel

Backup tunnels that bypass only a single link of the LSP path are referred to as Next Hop (NHOP) backup tunnels because they terminate at the LSP's next hop beyond the point of failure. If a link along the LSP path fails, they protect the LSP by rerouting its traffic to the next hop, thus bypassing the failed link.
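As a rough illustration of what an NHOP backup path is, the following Python sketch searches for a path from the router at the point of failure to the LSP's next hop that does not use the protected link. The function name, the hop-count-based search, and the topology are assumptions for illustration; the router's own path computation also takes TE constraints into account.

```python
from collections import deque

def nhop_backup_path(links, plr, next_hop):
    """Return a fewest-hops path from plr to next_hop that avoids the
    direct plr-next_hop link, or None if no such path exists.

    links: iterable of (node_a, node_b) pairs, treated as bidirectional.
    """
    protected = frozenset((plr, next_hop))
    graph = {}
    for a, b in links:
        if frozenset((a, b)) == protected:
            continue  # the backup must not ride the link it protects
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)

    # Breadth-first search yields a path with the fewest hops.
    queue = deque([[plr]])
    seen = {plr}
    while queue:
        path = queue.popleft()
        if path[-1] == next_hop:
            return path
        for neighbor in graph.get(path[-1], []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None

# Hypothetical triangle: R1-R2 is protected, R3 offers the detour.
TRIANGLE = [("R1", "R2"), ("R1", "R3"), ("R3", "R2")]
backup = nhop_backup_path(TRIANGLE, "R1", "R2")
```

The backup path ends at the same next hop as the protected link, so the LSP can continue on its original path from that node onward.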

Configuration Example

This example configures a next hop backup tunnel on an interface and specifies the attribute-set template for the auto tunnels. In this example, a timer removes unused backup tunnels every 20 minutes, and the range of tunnel interface numbers is specified.

RP/0/RP0/CPU0:router # configure

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# interface HundredGigabitEthernet0/0/0/3

RP/0/RP0/CPU0:router(config-mpls-te-if)# auto-tunnel backup nhop-only

RP/0/RP0/CPU0:router(config-mpls-te-if-auto-backup)# attribute-set ab

RP/0/RP0/CPU0:router(config-mpls-te)# auto-tunnel backup timers removal unused 20

RP/0/RP0/CPU0:router(config-mpls-te)# auto-tunnel backup tunnel-id min 6000 max 6500

RP/0/RP0/CPU0:router(config)# commit

Configuring SRLG Node Protection

Shared Risk Link Groups (SRLG) in MPLS traffic engineering refer to situations in which links in a network share common resources. These links share a risk: when one link fails, other links in the group might fail too.

OSPF and IS-IS flood the SRLG value information (including other TE link attributes such as bandwidth availability and affinity) using a sub-type length value (sub-TLV), so that all routers in the network have the SRLG information for each link.

The MPLS-TE SRLG feature enhances backup tunnel path selection: when creating backup tunnels, it avoids links that are in the same SRLG as the interfaces that the tunnels protect.
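The selection rule can be sketched in Python as follows. This is a simplified illustration, not the router's algorithm: the function name, the data layout, and the SRLG values are hypothetical, and the sketch always excludes every link that shares an SRLG with the protected link.

```python
from collections import deque

def srlg_disjoint_backup(links, protected, src, dst):
    """Return a fewest-hops path from src to dst that avoids the protected
    link and every link sharing an SRLG with it, or None if none exists.

    links: dict mapping (node_a, node_b) to a set of SRLG values.
    protected: the key in links of the link being protected.
    """
    risky = links[protected]
    graph = {}
    for (a, b), srlgs in links.items():
        if (a, b) == protected or srlgs & risky:
            continue  # shares fate with the protected link
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)

    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        if path[-1] == dst:
            return path
        for neighbor in graph.get(path[-1], []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None

# Hypothetical topology: R1-R3 shares SRLG 10 with the protected R1-R2 link
# (for example, the same fiber conduit), so the backup must go via R4.
TOPOLOGY = {
    ("R1", "R2"): {10},
    ("R1", "R3"): {10},
    ("R3", "R2"): set(),
    ("R1", "R4"): {20},
    ("R4", "R2"): {30},
}
backup = srlg_disjoint_backup(TOPOLOGY, ("R1", "R2"), "R1", "R2")
```

Although the path through R3 has the same hop count, it is rejected because a single failure could take down both R1-R2 and R1-R3.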

Configuration Example

This example creates a backup tunnel and excludes the protected node IP address from the explicit path.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# interface HundredGigabitEthernet0/0/0/3

RP/0/RP0/CPU0:router(config-mpls-te-if)# backup-path tunnel-te 2

RP/0/RP0/CPU0:router(config-mpls-te-if)# exit

RP/0/RP0/CPU0:router(config)# interface tunnel-te 2

RP/0/RP0/CPU0:router(config-if)# ipv4 unnumbered Loopback0

RP/0/RP0/CPU0:router(config-if)# path-option 1 explicit name backup-srlg-nodep

RP/0/RP0/CPU0:router(config-if)# destination 192.168.92.125

RP/0/RP0/CPU0:router(config-if)# exit

RP/0/RP0/CPU0:router(config)# explicit-path name backup-srlg-nodep

RP/0/RP0/CPU0:router(config-expl-path)# index 1 exclude-address 192.168.91.1

RP/0/RP0/CPU0:router(config-expl-path)# index 2 exclude-srlg 192.168.92.2

RP/0/RP0/CPU0:router(config)# commit

Configuring Pre-Standard DS-TE

Regular traffic engineering does not provide bandwidth guarantees to different traffic classes. A single bandwidth constraint is used in regular TE that is shared by all traffic. MPLS DS-TE enables you to configure multiple bandwidth constraints on an MPLS-enabled interface. These bandwidth constraints can be treated differently based on the requirement for the traffic class using that constraint. Cisco IOS XR software supports two DS-TE modes: Pre-standard and IETF. Pre-standard DS-TE uses the Cisco proprietary mechanisms for RSVP signaling and IGP advertisements. This DS-TE mode does not interoperate with third-party vendor equipment. Pre-standard DS-TE is enabled only after configuring the sub-pool bandwidth values on MPLS-enabled interfaces.

Pre-standard DiffServ-TE mode supports a single bandwidth constraint model, the Russian Doll Model (RDM), with two bandwidth pools: global-pool and sub-pool.
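
The following Python sketch illustrates how a two-pool Russian Doll Model admission check can be reasoned about. It assumes the usual RDM nesting, in which the sub-pool is carved out of the global pool: a sub-pool reservation counts against both constraints, while a global-pool reservation counts only against the overall constraint. The function name and the reserved values are hypothetical; the limits of 100 and 50 simply mirror the style of the interface example that follows.

```python
def rdm_admit(request_kbps, pool, reserved, global_limit_kbps, sub_limit_kbps):
    """Check one reservation request under a two-pool Russian Doll Model.

    reserved holds the bandwidth already reserved per pool, for example
    {"global": 20, "sub": 45}.
    """
    # Every reservation, whatever its pool, counts against the global limit.
    total = reserved["global"] + reserved["sub"]
    if total + request_kbps > global_limit_kbps:
        return False
    # Sub-pool reservations must also fit inside the nested sub-pool limit.
    if pool == "sub" and reserved["sub"] + request_kbps > sub_limit_kbps:
        return False
    return True


reserved = {"global": 20, "sub": 45}  # hypothetical current reservations
print(rdm_admit(5, "sub", reserved, 100, 50))      # True
print(rdm_admit(10, "sub", reserved, 100, 50))     # False, sub-pool would reach 55
print(rdm_admit(30, "global", reserved, 100, 50))  # True, total would be 95
print(rdm_admit(40, "global", reserved, 100, 50))  # False, total would be 105
```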

Before You Begin

The following prerequisites are required to configure a Pre-standard DS-TE tunnel.

- You must have a router ID for the neighboring router.
- A stable router ID is required at both ends of the link to ensure that the link is successful. If you do not assign a router ID to the routers, the system defaults to the global router ID. Default router IDs are subject to change, which can result in an unstable link.

Configuration Example

This example configures a pre-standard DS-TE tunnel.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# rsvp interface HundredGigabitEthernet 0/0/0/3

RP/0/RP0/CPU0:router(config-rsvp-if)# bandwidth 100 150 sub-pool 50

RP/0/RP0/CPU0:router(config-rsvp-if)# exit

RP/0/RP0/CPU0:router(config)# interface tunnel-te 2

RP/0/RP0/CPU0:router(config-if)# signalled-bandwidth sub-pool 10

RP/0/RP0/CPU0:router(config)# commit

Verification

Use the show mpls traffic-eng topology command to verify the pre-standard DS-TE tunnel configuration.

Configuring an IETF DS-TE Tunnel Using RDM

IETF DS-TE mode uses IETF-defined extensions for RSVP and IGP. This mode interoperates with third-party vendor equipment.

IETF mode supports multiple bandwidth constraint models, including Russian Doll Model (RDM) and Maximum Allocation Model (MAM), both with two bandwidth pools. In an IETF DS-TE network, identical bandwidth constraint models must be configured on all nodes.

Before You Begin

The following prerequisites are required to create an IETF mode DS-TE tunnel using RDM:

- You must have a router ID for the neighboring router.
- A stable router ID is required at both ends of the link to ensure that the link is successful. If you do not assign a router ID to the routers, the system defaults to the global router ID. Default router IDs are subject to change, which can result in an unstable link.

Configuration Example

This example configures an IETF DS-TE tunnel using RDM.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# rsvp interface HundredGigabitEthernet 0/0/0/3

RP/0/RP0/CPU0:router(config-rsvp-if)# bandwidth rdm 100 150

RP/0/RP0/CPU0:router(config-rsvp-if)# exit

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# ds-te mode ietf

RP/0/RP0/CPU0:router(config-mpls-te)# exit

RP/0/RP0/CPU0:router(config)# interface tunnel-te 2

RP/0/RP0/CPU0:router(config-if)# signalled-bandwidth sub-pool 10 class-type 1

RP/0/RP0/CPU0:router(config)# commit

Verification

Use the show mpls traffic-eng topology command to verify the IETF DS-TE tunnel using RDM configuration.

Configuring an IETF DS-TE Tunnel Using MAM

IETF DS-TE mode uses IETF-defined extensions for RSVP and IGP. This mode interoperates with third-party vendor equipment. IETF mode supports multiple bandwidth constraint models, including Russian Doll Model (RDM) and Maximum Allocation Model (MAM), both with two bandwidth pools.
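
To contrast MAM with the nested RDM pools, the following Python sketch shows one way to reason about a Maximum Allocation Model admission check. It assumes the usual MAM behavior: each class type has its own independent bandwidth constraint, and the sum of all reservations must also stay within the maximum reservable bandwidth. The function name and the reserved values are hypothetical; the limits of 1000, 600, and 400 are illustrative.

```python
def mam_admit(request_kbps, class_type, reserved, bc, max_reservable_kbps):
    """Check one reservation request under the Maximum Allocation Model.

    reserved and bc are dicts keyed by class type (0 or 1).
    """
    # Each class type is limited by its own constraint, independent of the other.
    if reserved[class_type] + request_kbps > bc[class_type]:
        return False
    # The aggregate across all class types is capped separately.
    return sum(reserved.values()) + request_kbps <= max_reservable_kbps


bc = {0: 600, 1: 400}        # per-class-type constraints (illustrative)
reserved = {0: 500, 1: 100}  # hypothetical current reservations

print(mam_admit(150, 0, reserved, bc, 1000))  # False, class type 0 would reach 650
print(mam_admit(150, 1, reserved, bc, 1000))  # True, class type 1 at 250, total 750
```

Unlike RDM, unused class type 1 bandwidth is not available to class type 0 in this sketch, which is why the first request is rejected even though the aggregate limit is not reached.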

Configuration Example

This example configures an IETF DS-TE tunnel using MAM.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# rsvp interface HundredGigabitEthernet 0/0/0/3

RP/0/RP0/CPU0:router(config-rsvp-if)# bandwidth mam max-reservable-bw 1000 bc0 600 bc1 400

RP/0/RP0/CPU0:router(config-rsvp-if)# exit

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# ds-te mode ietf

RP/0/RP0/CPU0:router(config-mpls-te)# ds-te bc-model mam

RP/0/RP0/CPU0:router(config-mpls-te)# exit

RP/0/RP0/CPU0:router(config)# interface tunnel-te 2

RP/0/RP0/CPU0:router(config-if)# signalled-bandwidth sub-pool 10

RP/0/RP0/CPU0:router(config)# commit

Verification

Use the show mpls traffic-eng topology command to verify the IETF DS-TE tunnel using MAM configuration.

Configuring Flexible Name-Based Tunnel Constraints

MPLS-TE Flexible Name-based Tunnel Constraints provides a simplified and more flexible means of configuring link attributes and path affinities to compute paths for the MPLS-TE tunnels.

In traditional TE, links are configured with attribute-flags that are flooded with TE link-state parameters using Interior Gateway Protocols (IGPs), such as Open Shortest Path First (OSPF).

MPLS-TE Flexible Name-based Tunnel Constraints lets you assign, or map, up to 32 color names for affinity and attribute-flag attributes instead of 32-bit hexadecimal numbers. After mappings are defined, the attributes can be referred to by the corresponding color name.
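
The relationship between color names and the underlying 32-bit attribute value can be sketched as follows. This Python example is only an illustration: the color-to-bit assignments and helper names are hypothetical, and it assumes each color name maps to one bit position in the 32-bit value.

```python
MAX_COLORS = 32  # one color name per bit of the 32-bit attribute value


def build_affinity_mask(color_bits, colors):
    """Combine named colors into a single 32-bit attribute value."""
    mask = 0
    for name in colors:
        bit = color_bits[name]
        if not 0 <= bit < MAX_COLORS:
            raise ValueError(f"bit position {bit} for {name!r} is out of range")
        mask |= 1 << bit
    return mask


def link_satisfies_include(link_attributes, required):
    """True when the link carries every color the tunnel asks to include."""
    return (link_attributes & required) == required


# Hypothetical mapping of color names to bit positions.
color_bits = {"red": 0, "green": 1, "blue": 5}

link_attributes = build_affinity_mask(color_bits, ["red", "blue"])
print(f"0x{link_attributes:08x}")  # 0x00000021

print(link_satisfies_include(link_attributes, build_affinity_mask(color_bits, ["red"])))    # True
print(link_satisfies_include(link_attributes, build_affinity_mask(color_bits, ["green"])))  # False
```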

Configuration Example

This example shows how to associate a tunnel with affinity constraints.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# affinity-map red 1

RP/0/RP0/CPU0:router(config-mpls-te)# interface HundredGigabitEthernet0/0/0/3

RP/0/RP0/CPU0:router(config-mpls-te-if)# attribute-names red

RP/0/RP0/CPU0:router(config)# interface tunnel-te 2

RP/0/RP0/CPU0:router(config-if)# affinity include red

RP/0/RP0/CPU0:router(config)# commit

Configuring Automatic Bandwidth

Automatic bandwidth allows you to dynamically adjust bandwidth reservation based on measured traffic. MPLS-TE automatic bandwidth monitors the traffic rate on a tunnel interface and resizes the bandwidth on the tunnel interface to align it closely with the traffic in the tunnel. MPLS-TE automatic bandwidth is configured on individual Label Switched Paths (LSPs) at every headend router.

The following table specifies the parameters that can be configured as part of automatic bandwidth configuration.

| Bandwidth Parameters | Description |
|---|---|
| Application frequency | Configures how often the tunnel bandwidth is changed for each tunnel. The default value is 24 hours. |
| Bandwidth limit | Configures the minimum and maximum automatic bandwidth to set on a tunnel. |
| Bandwidth collection frequency | Enables bandwidth collection without adjusting the automatic bandwidth. The default value is 5 minutes. |
| Overflow threshold | Configures tunnel overflow detection. |
| Adjustment threshold | Configures the tunnel-bandwidth change threshold to trigger an adjustment. |
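
The interaction between the bandwidth limit and the adjustment threshold can be sketched in Python as follows. This is a simplified model, not the router's algorithm: it assumes that at each application interval the highest collected sample becomes the candidate bandwidth, that the candidate is clamped to the configured limits, and that an adjustment happens only when both the percentage threshold and the minimum absolute change are met. The function and parameter names are hypothetical.

```python
def next_bandwidth(current_kbps, samples_kbps, bw_min, bw_max,
                   threshold_pct, threshold_min_kbps):
    """Return the bandwidth to signal at the end of an application interval."""
    highest = max(samples_kbps)
    # Clamp the candidate to the configured bandwidth limits.
    target = min(max(highest, bw_min), bw_max)
    change = abs(target - current_kbps)
    if current_kbps:
        pct_change = 100.0 * change / current_kbps
    else:
        pct_change = float("inf")
    # Assumption: both thresholds must be met before the tunnel is resized.
    if change >= threshold_min_kbps and pct_change >= threshold_pct:
        return target
    return current_kbps


# Limits 30..1000 kbps, thresholds of 50 percent and 800 kbps.
print(next_bandwidth(100, [300, 420, 950], 30, 1000, 50, 800))  # 950
print(next_bandwidth(100, [300, 420], 30, 1000, 50, 800))       # 100
```

In the second call the highest sample differs from the current value by only 320 kbps, which is below the 800 kbps minimum, so the bandwidth is left unchanged.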

Configuration Example

This example enables automatic bandwidth on an MPLS-TE tunnel interface and configures the following automatic bandwidth variables:

- Application frequency
- Bandwidth limit
- Adjustment threshold
- Overflow detection

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# interface tunnel-te 1

RP/0/RP0/CPU0:router(config-if)# auto-bw

RP/0/RP0/CPU0:router(config-if-tunte-autobw)# application 1000

RP/0/RP0/CPU0:router(config-if-tunte-autobw)# bw-limit min 30 max 1000

RP/0/RP0/CPU0:router(config-if-tunte-autobw)# adjustment-threshold 50 min 800

RP/0/RP0/CPU0:router(config-if-tunte-autobw)# overflow threshold 100 limit 1

RP/0/RP0/CPU0:router(config)# commit

Verification

Verify the automatic bandwidth configuration using the show mpls traffic-eng tunnels auto-bw brief command.

RP/0/RP0/CPU0:router# show mpls traffic-eng tunnels auto-bw brief

Tunnel LSP Last appl Requested Signalled Highest Application

Name ID BW(kbps) BW(kbps) BW(kbps) BW(kbps) Time Left

-------------- ------ ---------- ---------- ---------- ---------- --------------

tunnel-te1 5 500 300 420 1h 10m

Configuring Autoroute Announce

The segment routing tunnel can be advertised into an Interior Gateway Protocol (IGP) as a next hop by configuring the autoroute announce statement on the source router. The IGP then installs routes in the Routing Information Base (RIB) for shortest paths that involve the tunnel destination. Autoroute announcement of IPv4 prefixes can be carried through either OSPF or IS-IS. Autoroute announcement of IPv6 prefixes can be carried only through IS-IS. IPv6 forwarding over the tunnel requires an additional parameter in the tunnel configuration.

Restrictions

The Autoroute Announce feature is supported with the following restrictions:

- A maximum of 128 tunnels can be announced.
- Tunnels in ECMP may not be path diverse.

Note: Configuring Segment Routing and Autoroute Destination together is not supported. If autoroute functionality is required in a Segment Routing network, we recommend that you configure Autoroute Announce.

Configuration

To specify that the Interior Gateway Protocol (IGP) should use the tunnel (if the tunnel is up) in its enhanced shortest path first (SPF) calculation, use the autoroute announce command in interface configuration mode.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# interface tunnel-te 10

RP/0/RP0/CPU0:router(config-if)# autoroute announce [include-ipv6]

RP/0/RP0/CPU0:router(config-if)# commit

Verification

Verify the route using the following commands:

RP/0/RP0/CPU0:router# show route

Mon Aug 8 00:31:29.406 UTC

Codes: C - connected, S - static, R - RIP, B - BGP, (>) - Diversion path

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2

E1 - OSPF external type 1, E2 - OSPF external type 2, E - EGP

i - ISIS, L1 - IS-IS level-1, L2 - IS-IS level-2

ia - IS-IS inter area, su - IS-IS summary null, * - candidate default

U - per-user static route, o - ODR, L - local, G - DAGR, l - LISP

A - access/subscriber, a - Application route

M - mobile route, r - RPL, (!) - FRR Backup path

Gateway of last resort is not set

i L1 9.1.0.0/24 [115/30] via 9.9.9.9, 2d19h, tunnel-te3195

[115/30] via 9.9.9.9, 2d19h, tunnel-te3196

[115/30] via 9.9.9.9, 2d19h, tunnel-te3197

[115/30] via 9.9.9.9, 2d19h, tunnel-te3198

[115/30] via 9.9.9.9, 2d19h, tunnel-te3199

[115/30] via 9.9.9.9, 2d19h, tunnel-te3200

[115/30] via 9.9.9.9, 2d19h, tunnel-te3201

[115/30] via 9.9.9.9, 2d19h, tunnel-te3202

i L1 9.9.9.9/32 [115/30] via 9.9.9.9, 2d22h, tunnel-te3195

[115/30] via 9.9.9.9, 2d22h, tunnel-te3196

[115/30] via 9.9.9.9, 2d22h, tunnel-te3197

[115/30] via 9.9.9.9, 2d22h, tunnel-te3198

[115/30] via 9.9.9.9, 2d22h, tunnel-te3199

[115/30] via 9.9.9.9, 2d22h, tunnel-te3200

[115/30] via 9.9.9.9, 2d22h, tunnel-te3201

[115/30] via 9.9.9.9, 2d22h, tunnel-te3202

RP/0/RP0/CPU0:router# show route ipv6

Tue Apr 26 04:02:07.968 UTC

Codes: C - connected, S - static, R - RIP, B - BGP, (>) - Diversion path

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2

E1 - OSPF external type 1, E2 - OSPF external type 2, E - EGP

i - ISIS, L1 - IS-IS level-1, L2 - IS-IS level-2

ia - IS-IS inter area, su - IS-IS summary null, * - candidate default

U - per-user static route, o - ODR, L - local, G - DAGR, l - LISP

A - access/subscriber, a - Application route

M - mobile route, r - RPL, (!) - FRR Backup path

Gateway of last resort is not set

L ::ffff:127.0.0.0/104

[0/0] via ::, 00:17:36

C 2002::/64 is directly connected,

00:39:01, TenGigE0/0/0/2

L 2002::1/128 is directly connected,

00:39:01, TenGigE0/0/0/2

i L1 2003::/64

[115/20] via ::, 00:02:32, tunnel-te992

[115/20] via ::, 00:02:32, tunnel-te993

[115/20] via ::, 00:02:32, tunnel-te994

[115/20] via ::, 00:02:32, tunnel-te995

[115/20] via ::, 00:02:32, tunnel-te996

[115/20] via ::, 00:02:32, tunnel-te997

[115/20] via ::, 00:02:32, tunnel-te998

[115/20] via ::, 00:02:32, tunnel-te999

C 2006::/64 is directly connected,

00:39:01, TenGigE0/0/0/6

L 2006::1/128 is directly connected,

00:39:01, TenGigE0/0/0/6

i L1 2007::/64

[115/20] via ::, 00:02:32, tunnel-te992

[115/20] via ::, 00:02:32, tunnel-te993

[115/20] via ::, 00:02:32, tunnel-te994

[115/20] via ::, 00:02:32, tunnel-te995

[115/20] via ::, 00:02:32, tunnel-te996

[115/20] via ::, 00:02:32, tunnel-te997

[115/20] via ::, 00:02:32, tunnel-te998

[115/20] via ::, 00:02:32, tunnel-te999

RP/0/RP0/CPU0:router# show cef ipv6 2007::5 hardware egress location 0/0/CPU0

Sun May 1 03:18:55.151 UTC

2007::/64, version 50, internal 0x1000001 0x0 (ptr 0x895e819c) [1], 0x0 (0x894d3678), 0x0 (0x0)

Updated May 1 03:13:49.066

Prefix Len 64, traffic index 0, precedence n/a, priority 2

via ::/128, tunnel-te1, 2 dependencies, weight 0, class 0 [flags 0x0]

path-idx 0 NHID 0x0 [0x8b5ea420 0x0]

next hop ::/128

local adjacency

via ::/128, tunnel-te2, 2 dependencies, weight 0, class 0 [flags 0x0]

path-idx 1 NHID 0x0 [0x8b5ea5e0 0x0]

next hop ::/128

local adjacency

LEAF - HAL pd context :

sub-type : IPV6, ecd_marked:0, has_collapsed_ldi:0 collapse_bwalk_required:0, ecdv2_marked:0

HW Walk:

LEAF:

Handle: 0xaabbccdd type: 1 FEC handle: 0x8999fb18

LWLDI:

PI:0x894d3678 PD:0x894d36b8 rev:214 p-rev:212 ldi type:3

FEC hdl: 0x8999fb18 fec index: 0x0(0) num paths:2, bkup: 0

SHLDI:

PI:0x893658e8 PD:0x89365968 rev:212 p-rev:0 flag:0x0

FEC hdl: 0x8999fb18 fec index: 0x20000002(2) num paths: 2 bkup paths: 2

Path:0 fec index: 0x20004000(16384) DSP:0x440 Dest fec index: 0x2000101c(4124)

Path:1 fec index: 0x20004002(16386) DSP:0x440 Dest fec index: 0x2000101c(4124)

Path:2 fec index: 0x20004001(16385) DSP:0x440 Dest fec index: 0x2000101c(4124)

Path:3 fec index: 0x20004003(16387) DSP:0x440 Dest fec index: 0x2000101c(4124)

V6TE NH HAL PD context :

pdptr 0x8b5ea488, flags :1, index:0

TE-NH:

Flag: PHP HW Tun_O_Tun frr_active_chg te_protect_chg , PD(0x880d1640), Push: 0, Swap: 0, Link: 0

ifhandle:0x800001c llabel:24001 FEC hdl: 0x896d4eb8 fec index: 0x20001022(4130), SRTE fec hdl:(nil)

V6TE NH HAL PD context :

pdptr 0x8b5ea648, flags :1, index:0

TE-NH:

Flag: PHP HW , PD(0x880d12d0), Push: 0, Swap: 0, Link: 0x8b34d518

ifhandle:0x8000024 llabel:24000 FEC hdl: 0x896d4238 fec index: 0x2000101c(4124), SRTE fec hdl:(nil)

Autoroute Announce with IS-IS for Anycast Prefixes

| Feature Name | Release | Feature Description |
|---|---|---|
| Autoroute Announce with IS-IS for Anycast Prefixes | Release 7.5.4 | We have enabled seamless migration from autoroute announce (AA) tunnels with OSPF to AA tunnels with IS-IS. This is possible because you can now use AA tunnels with IS-IS to calculate the underlying native IGP metric for anycast prefixes to select the shortest path and then select a tunnel on that shortest path. Previously, autoroute announce tunnels with IS-IS behaved differently from those with OSPF: IS-IS used the autoroute announce metric, whereas OSPF uses the underlying native IGP cost. With this feature, the router uses the same method of cost calculation for autoroute announce in both OSPF and IS-IS. This feature introduces the anycast-prefer-igp-cost command. |

When you configure Autoroute Announce (AA) tunnels, the IGP installs the tunnel to the destination in the Routing Information Base (RIB) for the shortest paths. However, by default AA handles anycast destinations differently with IS-IS than with OSPF:

- IS-IS: An AA tunnel with IS-IS uses the AA metric to calculate the prefix reachability for the shortest path.
- OSPF: AA with OSPF uses the lowest IGP cost to find the shortest path, and then uses the TE tunnel that is on that shortest path.

We now introduce the anycast-prefer-igp-cost command for AA tunnel with IS-IS anycast prefixes to allow the router to choose the shortest IGP path and select the tunnel on that shortest path, similar to OSPF behavior.

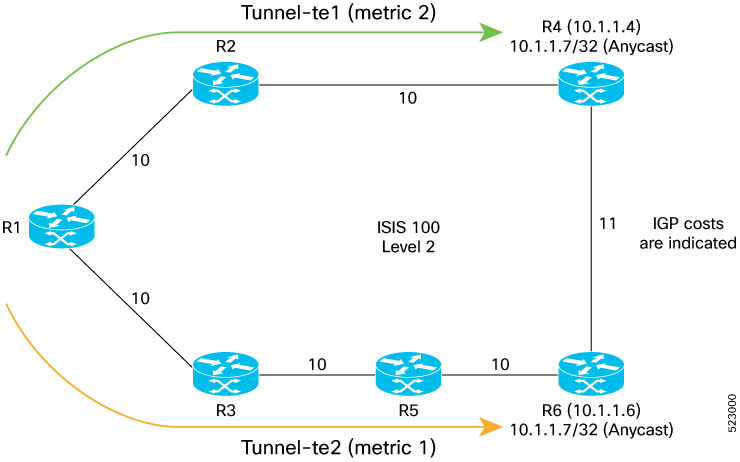

Topology

Using this topology, let’s see how the shortest path is chosen based on the IGP cost:

- R1 is the headend and has MPLS RSVP-TE tunnels to R2 and R3.
- The tunnel destinations are the loopback addresses of R4 and R6, which are 10.1.1.4 and 10.1.1.6.
- R1 uses AA to allow traffic to prefixes advertised by R2 and R3 to go over the MPLS-TE tunnel.
- Two AA tunnels are configured with different metrics and destinations. Tunnel-te1 has a metric value of 2 and tunnel-te2 has a metric value of 1.
- R1 can reach the anycast prefix (10.1.1.7/32) using two paths: R1-R2-R4 and R1-R3-R5-R6.
- To reach the anycast prefix, the IGP cost is 20 for the R1-R2-R4 path and 30 for the R1-R3-R5-R6 path. Based on the IGP metric, the R1-R2-R4 path is the shortest path.
- With IS-IS, by default, the AA tunnel considers the tunnel metric to forward the traffic. In this topology, tunnel-te2 has a lower metric value than tunnel-te1. Because the AA metric is considered, the traffic is forwarded through tunnel-te2.
- When this feature is enabled, the IGP cost is considered first to find the shortest path, as with AA with OSPF. In this topology, the R1-R2-R4 path is selected because its IGP cost of 20 is lower than the cost of 30 for the path that carries tunnel-te2. The MPLS-TE tunnel on that path, tunnel-te1, is then used.
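
The two selection behaviors described for this topology can be compared with a short Python sketch. The costs and metrics are the ones given above; the function name and data layout are hypothetical and serve only to show which value drives the choice in each case.

```python
def select_tunnel(candidates, prefer_igp_cost):
    """Pick the AA tunnel toward an anycast prefix.

    prefer_igp_cost=False models the default IS-IS behavior (lowest AA metric);
    prefer_igp_cost=True models the behavior with anycast-prefer-igp-cost
    (lowest native IGP cost, then the tunnel on that path).
    """
    key = "igp_cost" if prefer_igp_cost else "aa_metric"
    return min(candidates, key=lambda c: c[key])["tunnel"]


# Values taken from the topology description above.
candidates = [
    {"tunnel": "tunnel-te1", "path": "R1-R2-R4", "igp_cost": 20, "aa_metric": 2},
    {"tunnel": "tunnel-te2", "path": "R1-R3-R5-R6", "igp_cost": 30, "aa_metric": 1},
]

default_choice = select_tunnel(candidates, prefer_igp_cost=False)
feature_choice = select_tunnel(candidates, prefer_igp_cost=True)
print(default_choice)  # tunnel-te2
print(feature_choice)  # tunnel-te1
```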

Configure IGP Path Selection for Anycast Prefixes using AA with IS-IS

Perform the following tasks to configure IGP path selection for anycast prefixes using Autoroute Announce with IS-IS:

- Configure MPLS RSVP-TE.
- Enable anycast-prefer-igp-cost with IS-IS.

/* Configure MPLS RSVP-TE */

Router(config)#interface tunnel-te1

Router(config-if)#ipv4 unnumbered Loopback0

Router(config-if)#autoroute announce

Router(config-if-tunte-aa)#metric 2

Router(config-if-tunte-aa)#exit

Router(config-if)#destination 10.1.1.4

Router(config-if)#path-option 1 dynamic

Router(config-if)#commit

Router(config)#interface tunnel-te2

Router(config-if)#ipv4 unnumbered Loopback0

Router(config-if)#autoroute announce

Router(config-if-tunte-aa)#metric 1

Router(config-if-tunte-aa)#exit

Router(config-if)#destination 10.1.1.6

Router(config-if)#path-option 1 dynamic

Router(config-if)#exit

Router(config)#commit

/* Enable anycast-prefer-igp-cost with ISIS */

Router#configure

Router(config)#router isis 100

Router(config-isis)#is-type level-2-only

Router(config-isis)#net 47.2377.50ea.ffff.988a.2d13.00

Router(config-isis)#address-family ipv4 unicast

Router(config-isis-af)#metric-style wide

Router(config-isis-af)#mpls traffic-eng level-2-only

Router(config-isis-af)#mpls traffic-eng router-id Loopback0

Router(config-isis-af)#mpls traffic-eng tunnel anycast-prefer-igp-cost

Router(config-isis-af)#maximum-paths 64

Router(config-isis-af)#commit

Running Configuration

Router#show running-config

interface tunnel-te1

ipv4 unnumbered Loopback0

autoroute announce

metric 2

!

destination 10.1.1.4

path-option 1 dynamic

!

interface tunnel-te2

ipv4 unnumbered Loopback0

autoroute announce

metric 1

!

destination 10.1.1.6

path-option 1 dynamic

!

router isis 100

is-type level-2-only

net 47.2377.50ea.ffff.988a.2d13.00

address-family ipv4 unicast

metric-style wide

mpls traffic-eng level-2-only

mpls traffic-eng router-id Loopback0

mpls traffic-eng tunnel anycast-prefer-igp-cost

maximum-paths 64

!

!

end

Verification

When you enable anycast-prefer-igp-cost, the traffic is forwarded through tunnel-te1:

Router#show route 10.1.1.7/32

Routing entry for 10.1.1.7/32

Known via "isis 100", distance 115, metric 12, type level-2

Installed Nov 3 09:48:39.520 for 00:00:05

Routing Descriptor Blocks

10.1.1.4, from 10.1.1.4, via tunnel-te1

Route metric is 20

When you disable anycast-prefer-igp-cost, the traffic is forwarded through tunnel-te2:

Router#show route 10.1.1.7/32

Routing entry for 10.1.1.7/32

Known via "isis 100", distance 115, metric 11, type level-2

Installed Nov 3 09:25:38.162 for 00:18:23

Routing Descriptor Blocks

10.1.1.6, from 10.1.1.6, via tunnel-te2

Route metric is 11

Configuring Auto-Tunnel Mesh

The MPLS-TE auto-tunnel mesh (auto-mesh) feature allows you to set up a full mesh of TE Point-to-Point (P2P) tunnels automatically with a minimal set of MPLS traffic engineering configurations. You can configure one or more mesh-groups. Each mesh-group requires a destination-list (IPv4 prefix-list) that lists the destinations for which tunnels are created for that mesh-group.

You can configure MPLS-TE auto-mesh type attribute-sets (templates) and associate them to mesh-groups. Label Switching Routers (LSRs) can create tunnels using the tunnel properties defined in this attribute-set.

Auto-tunnel mesh configuration minimizes the initial configuration of the network. You configure a tunnel properties template and mesh-groups or destination-lists on TE LSRs, which then create a full mesh of TE tunnels between those LSRs. This eliminates the need to reconfigure each existing TE LSR to establish a full mesh of TE tunnels whenever a new TE LSR is added to the network.
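
The way one LSR turns a destination-list into tunnels can be pictured with the following Python sketch. It is a simplified model under stated assumptions: tunnel IDs are handed out sequentially from the configured range, the local router skips its own address, and destinations that do not fit in the range are left without a tunnel. The function name and the local address 192.168.0.1 are hypothetical, and the real allocation order may differ.

```python
def allocate_mesh_tunnels(local_address, destinations, id_min, id_max):
    """Map each mesh-group destination to a tunnel ID from the configured range."""
    tunnels = {}
    range_exceeded = []
    next_id = id_min
    for dest in destinations:
        if dest == local_address:
            continue  # no tunnel to ourselves
        if next_id > id_max:
            range_exceeded.append(dest)  # no free tunnel ID left in the range
            continue
        tunnels[dest] = next_id
        next_id += 1
    return tunnels, range_exceeded


destinations = ["192.168.0.1", "192.168.0.2", "192.168.0.3", "192.168.0.4"]
tunnels, range_exceeded = allocate_mesh_tunnels("192.168.0.1", destinations, 1000, 2000)
print(tunnels)         # {'192.168.0.2': 1000, '192.168.0.3': 1001, '192.168.0.4': 1002}
print(range_exceeded)  # []
```

Because every LSR in the mesh-group runs the same logic against the same destination-list, adding a new LSR to the list is enough for the other LSRs to create tunnels toward it.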

Configuration Example

This example configures an auto-tunnel mesh group and specifies the attributes for the tunnels in the mesh-group.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# auto-tunnel mesh

RP/0/RP0/CPU0:router(config-mpls-te-auto-mesh)# tunnel-id min 1000 max 2000

RP/0/RP0/CPU0:router(config-mpls-te-auto-mesh)# group 10

RP/0/RP0/CPU0:router(config-mpls-te-auto-mesh-group)# attribute-set 10

RP/0/RP0/CPU0:router(config-mpls-te-auto-mesh-group)# destination-list dl-65

RP/0/RP0/CPU0:router(config-mpls-te)# attribute-set auto-mesh 10

RP/0/RP0/CPU0:router(config-mpls-te-attribute-set)# autoroute announce

RP/0/RP0/CPU0:router(config-mpls-te-attribute-set)# auto-bw collect-bw-only

RP/0/RP0/CPU0:router(config)# commit

Verification

Verify the auto-tunnel mesh configuration using the show mpls traffic-eng auto-tunnel mesh command.

RP/0/RP0/CPU0:router# show mpls traffic-eng auto-tunnel mesh

Auto-tunnel Mesh Global Configuration:

Unused removal timeout: 1h 0m 0s

Configured tunnel number range: 1000-2000

Auto-tunnel Mesh Groups Summary:

Mesh Groups count: 1

Mesh Groups Destinations count: 3

Mesh Groups Tunnels count:

3 created, 3 up, 0 down, 0 FRR enabled

Mesh Group: 10 (3 Destinations)

Status: Enabled

Attribute-set: 10

Destination-list: dl-65 (Not a prefix-list)

Recreate timer: Not running

Destination Tunnel ID State Unused timer

---------------- ----------- ------- ------------

192.168.0.2 1000 up Not running

192.168.0.3 1001 up Not running

192.168.0.4 1002 up Not running

Displayed 3 tunnels, 3 up, 0 down, 0 FRR enabled

Auto-mesh Cumulative Counters:

Last cleared: Wed Oct 3 12:56:37 2015 (02:39:07 ago)

Total

Created: 3

Connected: 0

Removed (unused): 0

Removed (in use): 0

Range exceeded: 0

Configuring MPLS Traffic Engineering Interarea Tunneling

The MPLS TE Interarea Tunneling feature allows you to establish MPLS TE tunnels that span multiple Interior Gateway Protocol (IGP) areas and levels. This feature removes the restriction that required the tunnel headend and tailend routers both to be in the same area. The IGP can be either Intermediate System-to-Intermediate System (IS-IS) or Open Shortest Path First (OSPF).

To configure an inter-area tunnel, you specify on the headend router a loosely routed explicit path for the tunnel label switched path (LSP) that identifies each area border router (ABR) the LSP should traverse using the next-address loose command. The headend router and the ABRs along the specified explicit path expand the loose hops, each computing the path segment to the next ABR or tunnel destination.

Configuration Example

This example configures an IPv4 explicit path with ABR configured as loose address on the headend router.

Router# configure

Router(config)# explicit-path name interarea1

Router(config-expl-path)# index 1 next-address loose ipv4 unicast 172.16.255.129

Router(config-expl-path)# index 2 next-address loose ipv4 unicast 172.16.255.131

Router(config)# interface tunnel-te1

Router(config-if)# ipv4 unnumbered Loopback0

Router(config-if)# destination 172.16.255.2

Router(config-if)# path-option 10 explicit name interarea1

Router(config)# commit

Configuring LDP over MPLS-TE

LDP and RSVP-TE are signaling protocols used for establishing LSPs in MPLS networks. While LDP is easy to configure and reliable, it lacks the traffic engineering capabilities of RSVP that help to avoid traffic congestion. The LDP over MPLS-TE feature combines the benefits of both LDP and RSVP. In LDP over MPLS-TE, an LDP-signalled label-switched path (LSP) runs through a TE tunnel established using RSVP-TE.

The following diagram explains a use case for LDP over MPLS-TE. In this diagram, LDP is used as the signalling protocol between provider edge (PE) router and provider (P) router. RSVP-TE is used as the signalling protocol between the P routers to establish an LSP. LDP is tunneled over the RSVP-TE LSP.

Restrictions and Guidelines for LDP over MPLS-TE

The following restrictions and guidelines apply for this feature in Cisco IOS-XR release 6.3.2:

- MPLS services over LDP over MPLS-TE are supported when BGP neighbours are on the head or tail node of the TE tunnel.
- MPLS services over LDP over MPLS-TE are supported when the TE headend router is acting as a transit point for that service.
- If MPLS services originate from the TE headend, but the TE tunnel ends before the BGP peer, the LDP over MPLS-TE feature is not supported.
- If LDP optimization is enabled using the hw-module fib mpls ldp lsr-optimized command, the following restrictions apply:
  - EVPN is not supported.
  - For any prefix or label, all outgoing paths must be LDP enabled.
- Do not use the hw-module fib mpls ldp lsr-optimized command on a Provider Edge (PE) router because already configured features such as EVPN, MPLS-VPN, and L2VPN might not work properly.

Configuration Example

This example shows how to configure an MPLS-TE tunnel from provider router P1 to P2 and then enable LDP over MPLS-TE. In this example, the destination of the tunnel from P1 is configured as the loopback of P2.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# interface tunnel-te 1

RP/0/RP0/CPU0:router(config-if)# ipv4 unnumbered Loopback0

RP/0/RP0/CPU0:router(config-if)# autoroute announce

RP/0/RP0/CPU0:router(config-if)# destination 4.4.4.4

RP/0/RP0/CPU0:router(config-if)# path-option 1 dynamic

RP/0/RP0/CPU0:router(config-if)# exit

RP/0/RP0/CPU0:Router(config)# mpls ldp

RP/0/RP0/CPU0:Router(config-ldp)# router-id 192.168.1.1

RP/0/RP0/CPU0:Router(config-ldp)# interface TenGigE 0/0/0/0

RP/0/RP0/CPU0:Router(config-ldp-if)# interface tunnel-te 1

RP/0/RP0/CPU0:Router(config-ldp-if)# exit

Configuring MPLS-TE Path Protection

Path protection provides an end-to-end failure recovery mechanism for MPLS-TE tunnels. A secondary Label Switched Path (LSP) is established in advance to provide failure protection for the protected LSP that carries a tunnel's TE traffic. When there is a failure on the protected LSP, the source router immediately enables the secondary LSP to temporarily carry the tunnel's traffic. Failover is triggered by an RSVP error message sent to the LSP headend. Once the headend receives this error message, it switches over to the secondary tunnel. If there is a failure on the secondary LSP, the tunnel no longer has path protection until the failure along the secondary path is cleared.

Path protection can be used within a single area (OSPF or IS-IS), external BGP [eBGP], and static routes. Both explicit and dynamic path-options are supported for the MPLS-TE path protection feature. Make sure that the same attributes or bandwidth requirements are configured on the protected option.

Before You Begin

The following prerequisites are required for enabling path protection.

- Ensure that your network supports MPLS-TE, Cisco Express Forwarding, and Intermediate System-to-Intermediate System (IS-IS) or Open Shortest Path First (OSPF).
- Configure MPLS-TE on the routers.

Configuration Example

This example shows how to configure path protection for an MPLS-TE tunnel. The primary path-option must be present to configure path protection. In this configuration, R1 is the headend router and R3 is the tailend router for the tunnel, while R2 and R4 are mid-point routers. In this example, six explicit paths and one dynamic path are created for path protection. You can have up to eight path protection options for a primary path.

RP/0/RP0/CPU0:router# configure

RP/0/RP0/CPU0:router(config)# interface tunnel-te 0

RP/0/RP0/CPU0:router(config-if)# destination 192.168.3.3

RP/0/RP0/CPU0:router(config-if)# ipv4 unnumbered Loopback0

RP/0/RP0/CPU0:router(config-if)# autoroute announce

RP/0/RP0/CPU0:router(config-if)# path-protection

RP/0/RP0/CPU0:router(config-if)# path-option 1 explicit name r1-r2-r3-00 protected-by 2

RP/0/RP0/CPU0:router(config-if)# path-option 2 explicit name r1-r2-r3-01 protected-by 3

RP/0/RP0/CPU0:router(config-if)# path-option 3 explicit name r1-r4-r3-01 protected-by 4

RP/0/RP0/CPU0:router(config-if)# path-option 4 explicit name r1-r3-00 protected-by 5

RP/0/RP0/CPU0:router(config-if)# path-option 5 explicit name r1-r2-r4-r3-00 protected-by 6

RP/0/RP0/CPU0:router(config-if)# path-option 6 explicit name r1-r4-r2-r3-00 protected-by 7

RP/0/RP0/CPU0:router(config-if)# path-option 7 dynamic

RP/0/RP0/CPU0:router(config-if)# exit

RP/0/RP0/CPU0:router(config)# commit

Verification

Use the show mpls traffic-eng tunnels command to verify the MPLS-TE path protection configuration.

RP/0/RP0/CPU0:router# show mpls traffic-eng tunnels 0

Fri Oct 13 16:24:39.379 UTC

Name: tunnel-te0 Destination: 192.168.92.125 Ifhandle:0x8007d34

Signalled-Name: router

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option 1, type explicit r1-r2-r3-00 (Basis for Setup, path weight 2)

Protected-by PO index: 2

path option 2, type explicit r1-r2-r3-01 (Basis for Standby, path weight 2)

Protected-by PO index: 3

path option 3, type explicit r1-r4-r3-01

Protected-by PO index: 4

path option 4, type explicit r1-r3-00

Protected-by PO index: 5

path option 5, type explicit r1-r2-r4-r3-00

Protected-by PO index: 6

path option 6, type explicit r1-r4-r2-r3-00

Protected-by PO index: 7

path option 7, type dynamic

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 0 kbps CT0

Creation Time: Fri Oct 13 15:05:28 2017 (01:19:11 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: TE (global)

Path Selection:

Tiebreaker: Min-fill (default)

Hop-limit: disabled

Cost-limit: disabled

Delay-limit: disabled

Path-invalidation timeout: 10000 msec (default), Action: Tear (default)

AutoRoute: enabled LockDown: disabled Policy class: not set

Forward class: 0 (not enabled)

Forwarding-Adjacency: disabled

Autoroute Destinations: 0

Loadshare: 0 equal loadshares

Auto-bw: disabled

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Enabled

BFD Fast Detection: Disabled

Reoptimization after affinity failure: Enabled

Soft Preemption: Disabled

History:

Tunnel has been up for: 01:14:13 (since Fri Oct 13 15:10:26 UTC 2017)

Current LSP:

Uptime: 01:14:13 (since Fri Oct 13 15:10:26 UTC 2017)

Reopt. LSP:

Last Failure:

LSP not signalled, identical to the [CURRENT] LSP

Date/Time: Fri Oct 13 15:08:41 UTC 2017 [01:15:58 ago]

Standby Reopt LSP:

Last Failure:

LSP not signalled, identical to the [STANDBY] LSP

Date/Time: Fri Oct 13 15:08:41 UTC 2017 [01:15:58 ago]

First Destination Failed: 192.3.3.3

Prior LSP:

ID: 8 Path Option: 1

Removal Trigger: path protection switchover

Standby LSP:

Uptime: 01:13:56 (since Fri Oct 13 15:10:43 UTC 2017)

Path info (OSPF 1 area 0):

Node hop count: 2

Hop0: 192.168.1.2

Hop1: 192.168.3.1

Hop2: 192.168.3.2

Hop3: 192.168.3.3

Standby LSP Path info (OSPF 1 area 0), Oper State: Up :

Node hop count: 2

Hop0: 192.168.2.2

Hop1: 192.168.3.1

Hop2: 192.168.3.2

Hop3: 192.168.3.3

Displayed 1 (of 4001) heads, 0 (of 0) midpoints, 0 (of 0) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

Configuring Auto-bandwidth Bundle TE++

| Feature Name | Release Information | Feature Description |
|---|---|---|
| Automatic Bandwidth Bundle TE++ for Numbered Tunnels | Release 7.10.1 | Introduced in this release on: NCS 5700 fixed port routers; NCS 5500 modular routers (NCS 5700 line cards [Mode: Compatibility; Native]). We have optimized network performance and enabled efficient utilization of resources for numbered tunnels based on real-time traffic by automatically adding or removing tunnels between two endpoints. This is made possible because this release introduces support for auto-bandwidth TE++ for numbered tunnels, expanding upon the previous support for only named tunnels, letting you define explicit paths and allocate the bandwidth to each tunnel. The feature introduces these changes: |

MPLS-TE tunnels are used to set up labeled connectivity and to provide dynamic bandwidth capacity between endpoints. The auto-bandwidth feature addresses dynamic bandwidth demands by resizing the MPLS-TE tunnels based on the measured traffic load. However, many customers require multiple auto-bandwidth tunnels between endpoints for load balancing and redundancy. When the aggregate bandwidth demand between two endpoints increases, you can either configure the auto-bandwidth feature to resize the tunnels, or create new tunnels and load balance the overall demand over all the tunnels between the two endpoints. Similarly, when the aggregate bandwidth demand decreases, you can either configure the auto-bandwidth feature to reduce the size of the tunnels, or delete the added tunnels and load balance the traffic over the remaining tunnels. The auto-bandwidth bundle TE++ feature extends the auto-bandwidth feature and lets you automatically increase or decrease the number of MPLS-TE tunnels to a destination based on real-time traffic needs.

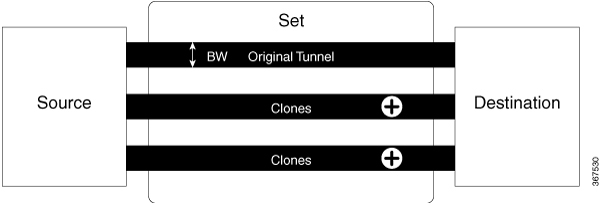

Tunnels that are automatically created in response to increasing bandwidth demand are called clones. Cloned tunnels inherit the properties of the main configured tunnel, with one exception: a user-configured load interval is not inherited. The original tunnel and its clones are collectively called a set. You can specify an upper limit and a lower limit on the number of clones that can be created for the original tunnel.

Splitting is the process of cloning a new tunnel when bandwidth demand increases. When the size of any tunnel in the set crosses the configured split bandwidth, splitting is initiated and clone tunnels are created.

The following figure explains creating clone tunnels when the split bandwidth is exceeded.

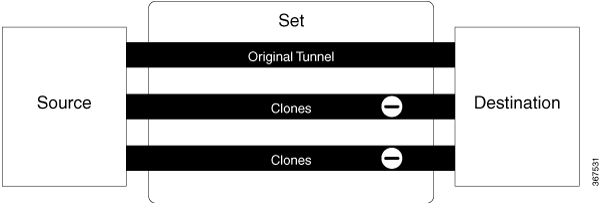

Merging is the process of removing a clone tunnel when the bandwidth demand decreases. If the bandwidth goes below the configured merge bandwidth in any one of the tunnels in the set, clone tunnels are removed.
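
The split and merge rules can be summarized in a short Python sketch. This is an illustration of the rules as described here, not the router's actual implementation. The function name next_action is hypothetical, and giving a split priority over a merge when both conditions hold is an assumption, because the text does not define that case.

```python
from typing import Sequence


def next_action(
    tunnel_bw_kbps: Sequence[float],
    split_bw: float,
    merge_bw: float,
    clones: int,
    min_clones: int,
    max_clones: int,
) -> str:
    """Return "split", "merge", or "hold" for a tunnel set.

    tunnel_bw_kbps holds the current bandwidth of every tunnel in the set
    (the original tunnel plus its clones).
    """
    # Split: any tunnel in the set exceeds the split bandwidth,
    # and the upper limit on clones has not been reached.
    if any(bw > split_bw for bw in tunnel_bw_kbps) and clones < max_clones:
        return "split"
    # Merge: any tunnel in the set is below the merge bandwidth,
    # and removing a clone stays at or above the lower limit.
    # Assumption: checked only after the split condition.
    if any(bw < merge_bw for bw in tunnel_bw_kbps) and clones > min_clones:
        return "merge"
    return "hold"


print(next_action([250, 120], 200, 100, clones=1, min_clones=0, max_clones=4))  # split
print(next_action([90, 120], 200, 100, clones=1, min_clones=0, max_clones=4))   # merge
print(next_action([150, 150], 200, 100, clones=1, min_clones=0, max_clones=4))  # hold
```

The clone limits act as guards: a set that is already at its upper limit does not split further, and a set at its lower limit does not merge.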

The following figure explains removing clone tunnels to merge with the original tunnel when the bandwidth falls below the merge bandwidth.

There are multiple ways to equally load-share the aggregate bandwidth demand among the tunnels in the set. This means that an algorithm is needed to choose the pair which satisfies the aggregate bandwidth requirements. You can configure a nominal bandwidth to guide the algorithm to determine the average bandwidths of the tunnels. If nominal bandwidth is not configured, TE uses the average of split and merge bandwidth as nominal bandwidth.
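
A small Python sketch of the nominal-bandwidth default follows. The first function reflects the stated rule (the average of the split and merge bandwidths when no nominal bandwidth is configured). The second function is only an assumption about how a nominal value could guide the choice: it picks the tunnel count whose equal share is closest to the nominal bandwidth. The algorithm TE actually uses is not described here, and both function names are hypothetical.

```python
from typing import Optional


def nominal_bandwidth(
    split_bw: float, merge_bw: float, configured: Optional[float] = None
) -> float:
    """Return the configured nominal bandwidth, or the split/merge average."""
    if configured is not None:
        return configured
    return (split_bw + merge_bw) / 2


def equal_share_tunnel_count(
    aggregate_bw: float, nominal_bw: float, min_tunnels: int, max_tunnels: int
) -> int:
    """Assumed heuristic: choose the tunnel count whose equal share of the
    aggregate demand is closest to the nominal bandwidth.
    Ties resolve to the smaller tunnel count."""
    candidates = range(min_tunnels, max_tunnels + 1)
    return min(candidates, key=lambda n: abs(aggregate_bw / n - nominal_bw))


nominal = nominal_bandwidth(split_bw=200, merge_bw=100)
print(nominal)                                        # 150.0
print(equal_share_tunnel_count(600, nominal, 1, 5))   # 4
```

With a split bandwidth of 200 kbps and a merge bandwidth of 100 kbps, the default nominal bandwidth is 150 kbps. Under the assumed heuristic, an aggregate demand of 600 kbps is shared by four tunnels at 150 kbps each.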

Restrictions and Usage Guidelines

The following usage guidelines apply for the auto-bandwidth bundle TE++ feature.

- This feature is only supported for the named tunnels and not supported on tunnel-te interfaces.

- The range for the lower limit on the number of clones is 0 to 63 and the default value for the lower limit on the number of clones is 0.

- The range for the upper limit on the number of clones is 1 to 63 and the default value for the upper limit on the number of clones is 63.
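
The clone limits above can be captured as a range check. The following Python sketch uses the documented ranges and defaults. The class name CloneLimits is hypothetical, and the requirement that the lower limit not exceed the upper limit is an assumption that the guidelines do not state explicitly.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class CloneLimits:
    min_clones: int = 0    # documented default for the lower limit
    max_clones: int = 63   # documented default for the upper limit

    def validate(self) -> None:
        """Raise ValueError if a limit is outside its documented range."""
        if not 0 <= self.min_clones <= 63:
            raise ValueError("lower limit on clones must be in the range 0 to 63")
        if not 1 <= self.max_clones <= 63:
            raise ValueError("upper limit on clones must be in the range 1 to 63")
        # Assumption: the lower limit may not exceed the upper limit.
        if self.min_clones > self.max_clones:
            raise ValueError("lower limit must not exceed upper limit")

    def set_size_range(self) -> Tuple[int, int]:
        """Size of the set: the original tunnel plus its clones."""
        return (1 + self.min_clones, 1 + self.max_clones)


limits = CloneLimits(min_clones=2, max_clones=4)
limits.validate()
print(limits.set_size_range())  # (3, 5)
```

Because a set is the original tunnel plus its clones, limits of two and four clones correspond to a set of three to five tunnels.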

Configuration Example

This example shows how to configure the autobandwidth bundle TE++ feature for a named MPLS-TE traffic tunnel. You should configure the following values for this feature to work:

- min-clones: Specifies the minimum number of clone tunnels that the original tunnel can create.

- max-clones: Specifies the maximum number of clone tunnels that the original tunnel can create.

- nominal-bandwidth: Specifies the average bandwidth for computing the number of tunnels to satisfy the overall demand.

- split-bandwidth: Specifies the bandwidth value for splitting the original tunnel. If the tunnel bandwidth exceeds the configured split bandwidth, clone tunnels are created.

- merge-bandwidth: Specifies the bandwidth for merging clones with the original tunnel. If the bandwidth goes below the configured merge bandwidth, clone tunnels are removed.

In this example, the lower limit on the number of clones is configured as two and the upper limit as four. The split bandwidth and merge bandwidth are configured as 200 kbps and 100 kbps, respectively.

RP/0/RP0/CPU0:router(config)# mpls traffic-eng

RP/0/RP0/CPU0:router(config-mpls-te)# named-tunnels

RP/0/RP0/CPU0:router(config-te-named-tunnels)# tunnel-te xyz

RP/0/RP0/CPU0:router(config-te-tun-name)# auto-bw

RP/0/RP0/CPU0:router(config-mpls-te-tun-autobw)# auto-capacity

RP/0/RP0/CPU0:router(config-te-tun-autocapacity)# min-clones 2

RP/0/RP0/CPU0:router(config-te-tun-autocapacity)# max-clones 4

RP/0/RP0/CPU0:router(config-te-tun-autocapacity)# nominal-bandwidth 150

RP/0/RP0/CPU0:router(config-te-tun-autocapacity)# split-bandwidth 200

RP/0/RP0/CPU0:router(config-te-tun-autocapacity)# merge-bandwidth 100

Configure Autoroute Tunnel as Designated Path

| Feature Name | Release Information | Feature Description |
|---|---|---|
| Configure Autoroute Tunnel as Designated Path | Release 7.6.2 | We now provide you the flexibility to simplify the path selection for a traffic class and split traffic among multiple TE tunnels. To split traffic, you can specify an autoroute tunnel to forward traffic to a particular tunnel destination address without considering the IS-IS metric for traffic path selection. IS-IS metric provides the shortest IGP path to a destination based only on link costs along the path. However, you may want to specify a tunnel interface to carry traffic regardless of IGP cost to meet your specific organizational requirements. Earlier, MPLS-TE considered either the Forwarding Adjacency (FA) or Autoroute (AA) tunnel for forwarding traffic based only on the IS-IS metric. The feature introduces the mpls traffic-eng tunnel restricted command. |

MPLS-TE builds a unidirectional tunnel from a source to a destination using a label switched path (LSP) to forward traffic.

To forward the traffic through MPLS tunneling, you can use autoroute, forwarding adjacency, or static routing:

- Autoroute (AA) functionality allows you to insert the MPLS-TE tunnel in the Shortest Path First (SPF) tree so that the tunnel transports all the traffic from the headend to all destinations behind the tailend. AA is known only to the tunnel headend router.

- Forwarding Adjacency (FA) allows the MPLS-TE tunnel to be advertised as a link in an IGP network with the cost of the link associated with it. Routers outside of the TE domain can see the TE tunnel and use it to compute the shortest path for routing traffic throughout the network.

- Static routing allows you to inject static IP traffic into a tunnel as the output interface for the routing decision.

Prior to this release, MPLS-TE by default considered FA or AA tunnels for forwarding traffic based on the IS-IS metric. The tunnel with the lower metric was always used to forward traffic, and there was no mechanism to forward traffic to a specific tunnel interface.

For certain prefixes, for reasons such as security or service-level agreements, you might need to forward traffic to a specific tunnel interface that has a matching destination address.

With this feature, you can exclusively use AA tunnels to forward traffic to their tunnel destination address irrespective of IS-IS metric. Traffic steering is performed based on the prefixes and not metrics. Traffic to other prefixes defaults to the forwarding-adjacency (FA) tunnels.

To enable this feature, use the mpls traffic-eng tunnel restricted command.
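
The difference between metric-based selection and restricted selection can be sketched in Python. This is a simplified model of the behavior described above, not the router's implementation. The Tunnel class and the select_tunnels function are hypothetical, prefix matching is reduced to exact equality with the tunnel destination address, native paths are left out, and all names and addresses are made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Tunnel:
    name: str
    kind: str          # "AA" (autoroute) or "FA" (forwarding adjacency)
    destination: str   # tunnel destination address
    metric: int        # IS-IS metric of the path through this tunnel


def select_tunnels(
    prefix_addr: str, tunnels: Sequence[Tunnel], restricted: bool
) -> List[Tunnel]:
    """Return the tunnels used to forward traffic to prefix_addr."""
    if restricted:
        # Restricted mode: AA tunnels whose destination matches the prefix
        # are used regardless of metric (several matches allow ECMP).
        matching = [
            t for t in tunnels if t.kind == "AA" and t.destination == prefix_addr
        ]
        if matching:
            return matching
        # Traffic to other prefixes defaults to the FA tunnels.
        candidates = [t for t in tunnels if t.kind == "FA"]
    else:
        # Earlier behavior: FA and AA tunnels compete on metric only.
        candidates = list(tunnels)
    if not candidates:
        return []
    best = min(t.metric for t in candidates)
    return [t for t in candidates if t.metric == best]


tunnels = [
    Tunnel("aa1", "AA", "10.0.0.2", metric=30),
    Tunnel("fa1", "FA", "10.0.0.1", metric=10),
]
print([t.name for t in select_tunnels("10.0.0.2", tunnels, restricted=True)])   # ['aa1']
print([t.name for t in select_tunnels("10.0.0.2", tunnels, restricted=False)])  # ['fa1']
print([t.name for t in select_tunnels("10.0.0.9", tunnels, restricted=True)])   # ['fa1']
```

In the restricted case the AA tunnel carries traffic to its own destination address even though the FA tunnel has the lower metric.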

Also, you may require more than one AA tunnel to a particular remote PE and use ECMP to forward traffic across the AA tunnels. You can configure a loopback interface with one primary address and multiple secondary addresses on the remote PE, using one IP address as the FA tunnel destination and the others as the AA tunnel destinations. Multiple IP addresses are advertised in the MPLS-TE domain using type-length-value (TLV) 132 in IS-IS. A TLV-encoded data stream contains a code for the record type, the length of the value, and the value itself. TLV 132 carries the IP addresses of the transmitting interface.
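
As a rough illustration of the TLV layout, the following Python sketch packs a list of IPv4 addresses into a TLV with type 132, a one-byte length, and four bytes per address. The addresses and the function names are hypothetical. The sketch covers only this one TLV, not a complete IS-IS PDU.

```python
import ipaddress
import struct
from typing import List, Sequence, Tuple

IP_INTERFACE_ADDRESS_TLV = 132  # IS-IS TLV type that carries IPv4 interface addresses


def encode_tlv_132(addresses: Sequence[str]) -> bytes:
    """Encode IPv4 addresses as type (1 byte), length (1 byte), value."""
    value = b"".join(ipaddress.IPv4Address(a).packed for a in addresses)
    if len(value) > 255:
        raise ValueError("value does not fit in a one-byte length field")
    return struct.pack("!BB", IP_INTERFACE_ADDRESS_TLV, len(value)) + value


def decode_tlv(data: bytes) -> Tuple[int, List[str]]:
    """Decode one TLV whose value is a sequence of 4-byte IPv4 addresses."""
    tlv_type, length = struct.unpack("!BB", data[:2])
    value = data[2 : 2 + length]
    addresses = [
        str(ipaddress.IPv4Address(value[i : i + 4])) for i in range(0, length, 4)
    ]
    return tlv_type, addresses


# Hypothetical loopback with one primary and two secondary addresses.
encoded = encode_tlv_132(["10.0.0.1", "10.0.0.2", "10.0.0.3"])
print(encoded.hex())        # 840c0a0000010a0000020a000003
print(decode_tlv(encoded))  # (132, ['10.0.0.1', '10.0.0.2', '10.0.0.3'])
```

The first byte (0x84) is the type 132, the second byte (0x0c) is the length of 12 bytes, and the remaining bytes are the three addresses.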

Feature Behavior

When the MPLS-TE tunnel restricted feature is configured, the behavior is as follows:

- A complete set of candidate paths is available for selection on a per-prefix basis during RIB update. The first-hop computation includes, in the first-hop set for a node, all the AA tunnels terminating on that node (up to a limit of 64), together with the lowest-cost forwarding-adjacency or native paths that terminate on the node or are inherited from its parent nodes.

-