Table 5. Feature History Table

| Feature Name | Release Information | Feature Description |
| --- | --- | --- |
| SR-TE Explicit Path with a BGP Prefix SID as First Segment | Release 24.4.1 | Introduced in this release on: Fixed Systems (8700 [ASIC: P100]) (select variants only*) This feature allows you to configure an SR-TE policy with an explicit path that uses a remote BGP prefix SID as its first segment. This path is achieved by leveraging the recursive resolution of the first SID, which is a BGP-Label Unicast (BGP-LU) SID. BGP-LU labels are used as the first SID in the SR policy to determine the egress paths for the traffic and program the SR-TE forwarding chain accordingly. This allows users to enable Segment Routing to leverage their existing BGP infrastructure and integrate it with the required Segment Routing functionalities. * This feature is supported on Cisco 8712-MOD-M routers. |

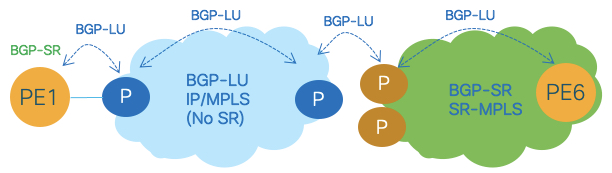

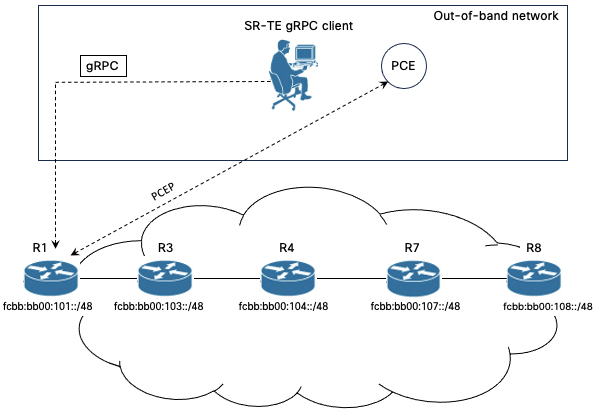

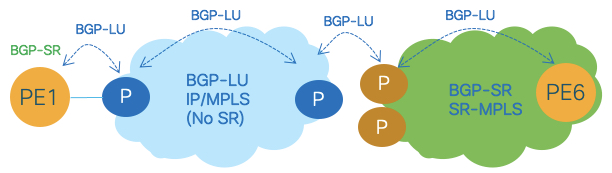

The figure below shows a network setup where a PE router (PE1) uses BGP-SR as the routing protocol and is connected to a classic BGP-LU domain. The classic BGP-LU domain is also connected to other domains running BGP-SR.

In BGP-SR, a BGP Prefix-SID is advertised along with a prefix in BGP Labeled Unicast (BGP-LU). This Prefix-SID attribute contains information about the label value and index for the route, which allows interworking between the classic BGP-LU and BGP-SR domains.

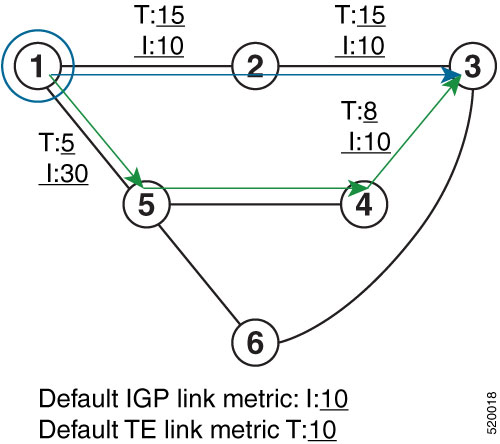

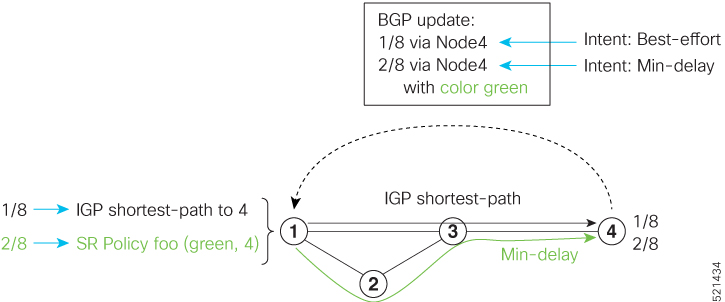

The figure below illustrates a best-effort BGP-LU Label Switched Path (LSP) from PE1 to PE6, passing through transit Label Switching Router (LSR) nodes 2, 3, and 5.

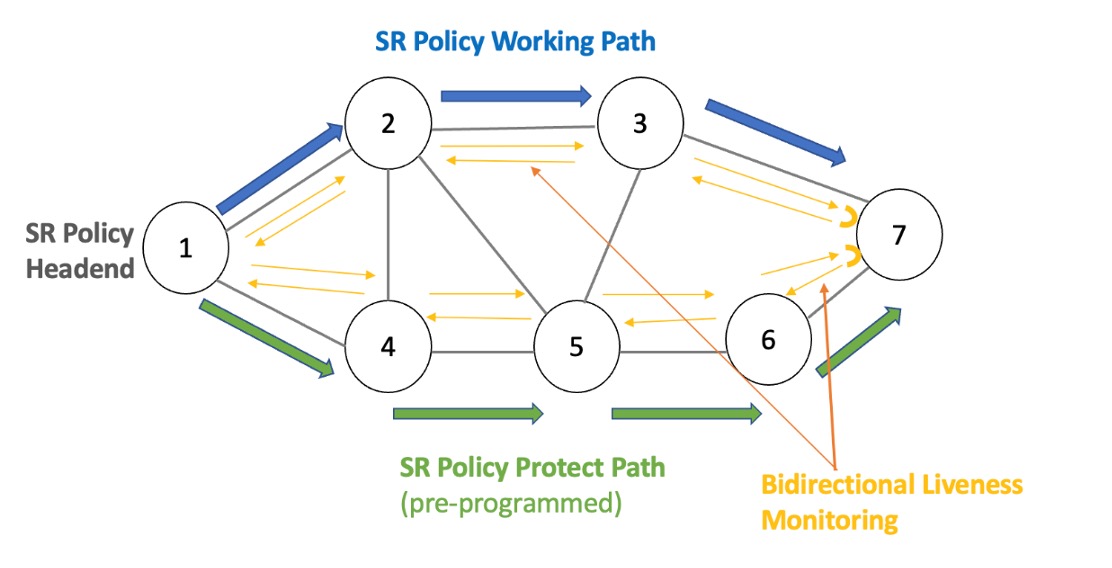

With this setup, the operator can create an alternate path using an SR-TE policy at the ingress BGP-SR PE (PE1). The explicit path for this policy traverses a different transit node and uses the BGP prefix SID of that transit node as its first segment.

For example, in the figure below, the SID-list shows that the explicit path uses the BGP prefix SID of transit LSR node 4 (16004) followed by the BGP prefix SID of PE6 (16006). The PE1 router resolves the first segment of this explicit path to the outgoing interface towards Node 2, using the outgoing label advertised by Node 2 for the BGP-LU prefix 1.1.1.4/32.

Example

The following output shows the BGP-LU routes learned at the head-end (PE1 in the illustration above). Consider the IP prefix 1.1.1.4/32. A BGP-LU local label is assigned from the SRGB with a prefix SID index of 4, resulting in the value 16004. Additionally, the classic BGP-LU upstream neighbor (P2) advertises an outgoing label of 78000 for this IP prefix.

RP/0/RP0/CPU0:R1# show bgp ipv4 unicast labels

BGP router identifier 1.1.1.1, local AS number 1

BGP generic scan interval 60 secs

Non-stop routing is enabled

BGP table state: Active

Table ID: 0xe0000000 RD version: 13

BGP main routing table version 13

BGP NSR Initial initsync version 6 (Reached)

BGP NSR/ISSU Sync-Group versions 0/0

BGP scan interval 60 secs

Status codes: s suppressed, d damped, h history, * valid, > best

i - internal, r RIB-failure, S stale, N Nexthop-discard

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Rcvd Label Local Label

*> 1.1.1.1/32 0.0.0.0 nolabel 3

*> 1.1.1.2/32 10.1.2.2 3 24007

*> 1.1.1.3/32 10.1.2.2 78005 24002

*> 1.1.1.4/32 10.1.2.2 78000 16004

*> 1.1.1.5/32 10.1.2.2 78002 16005

*> 1.1.1.6/32 10.1.2.2 78001 16006

...

RP/0/RP0/CPU0:R1# show bgp ipv4 labeled-unicast 1.1.1.4/32

BGP routing table entry for 1.1.1.4/32

Versions:

Process bRIB/RIB SendTblVer

Speaker 74 74

Local Label: 16004

Last Modified: Sep 29 19:52:18.155 for 00:07:22

Paths: (1 available, best #1)

Advertised to update-groups (with more than one peer):

0.2

Path #1: Received by speaker 0

Advertised to update-groups (with more than one peer):

0.2

3

10.1.2.2 from 10.1.2.2 (1.1.1.2)

Received Label 78000

Origin IGP, metric 0, localpref 100, valid, external, best, group-best

Received Path ID 0, Local Path ID 1, version 74

Origin-AS validity: not-found

Label Index: 4
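The relationship between the label index and the local label in the output above can be sketched in a few lines of Python. This is an illustration only: it assumes the SRGB starts at 16000 (implied by index 4 mapping to label 16004) and spans 8000 labels (the IOS XR default range of 16000 to 23999). The function name `prefix_sid_label` is hypothetical.

```python
# Assumption: SRGB base of 16000, implied by "index 4 -> label 16004" in the text.
SRGB_BASE = 16000
# Assumption: default SRGB size of 8000 labels (16000-23999).
SRGB_SIZE = 8000


def prefix_sid_label(index: int, base: int = SRGB_BASE, size: int = SRGB_SIZE) -> int:
    """Return the local label for a prefix SID index within the SRGB."""
    if not 0 <= index < size:
        raise ValueError(f"index {index} is outside the SRGB of size {size}")
    return base + index


print(prefix_sid_label(4))  # 16004, the local label of 1.1.1.4/32
print(prefix_sid_label(6))  # 16006, the local label of 1.1.1.6/32
```

Prefixes whose local label falls outside this range in the output (for example, 24007 for 1.1.1.2/32) were presumably received without a label index, so the router allocated a dynamic label instead.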

The following output shows the label forwarding entry for BGP prefix SID 16004, including its outgoing label and outgoing interface.

RP/0/RP0/CPU0:R1# show mpls forwarding labels 16004

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

16004 78000 SR Pfx (idx 4) Te0/0/0/0 10.1.2.2 44

The configuration below depicts an SR policy (color 100 and end-point 1.1.1.6) with an explicit path to PE6 using the BGP prefix SID of transit LSR node 4 (16004) as its first segment, followed by the BGP prefix SID of PE6 (16006).

RP/0/RP0/CPU0:R1# configure

RP/0/RP0/CPU0:R1(config)# segment-routing

RP/0/RP0/CPU0:R1(config-sr)# traffic-eng

RP/0/RP0/CPU0:R1(config-sr-te)# segment-list SL-R4-R6

RP/0/RP0/CPU0:R1(config-sr-te-sl)# index 10 mpls label 16004

RP/0/RP0/CPU0:R1(config-sr-te-sl)# index 20 mpls label 16006

RP/0/RP0/CPU0:R1(config-sr-te-sl)# exit

RP/0/RP0/CPU0:R1(config-sr-te)# policy POL-to-R6

RP/0/RP0/CPU0:R1(config-sr-te-policy)# color 100 end-point ipv4 1.1.1.6

RP/0/RP0/CPU0:R1(config-sr-te-policy)# candidate-paths

RP/0/RP0/CPU0:R1(config-sr-te-policy-path)# preference 100

RP/0/RP0/CPU0:R1(config-sr-te-policy-path-pref)# explicit segment-list SL-R4-R6

RP/0/RP0/CPU0:R1# show running-config

segment-routing

traffic-eng

segment-list SL-R4-R6

index 10 mpls label 16004

index 20 mpls label 16006

!

policy POL-to-R6

color 100 end-point ipv4 1.1.1.6

candidate-paths

preference 100

explicit segment-list SL-R4-R6

!

!

!

!

!

!
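If segment lists like the one above are generated by a script rather than typed by hand, the rendering step might look like the following sketch. It only reproduces the `segment-list` lines shown in the running configuration. The helper name `render_segment_list`, the index step of 10, and the one-space-per-level indentation are assumptions made for illustration.

```python
from typing import List


def render_segment_list(name: str, labels: List[int], start: int = 10, step: int = 10) -> str:
    """Render an SR-TE segment list in the style of the running configuration above."""
    if not labels:
        raise ValueError("a segment list needs at least one label")
    lines = [
        "segment-routing",
        " traffic-eng",
        f"  segment-list {name}",
    ]
    # One "index <n> mpls label <label>" line per segment, in path order.
    for position, label in enumerate(labels):
        lines.append(f"   index {start + position * step} mpls label {label}")
    return "\n".join(lines)


print(render_segment_list("SL-R4-R6", [16004, 16006]))
```

The first label passed in is the BGP prefix SID of the transit node, so its position in the list determines which segment the head-end resolves through BGP-LU.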

The following output depicts the forwarding information for the SR policy, including the outgoing interface and outgoing label stack.

Observe how the first configured segment (MPLS label 16004) is replaced in forwarding with the label advertised by the BGP-LU neighbor of the SR-TE head-end (MPLS label 78000).

RP/0/RP0/CPU0:R1# show segment-routing traffic-eng forwarding policy color 100

SR-TE Policy Forwarding database

--------------------------------

Color: 100, End-point: 1.1.1.6

Name: srte_c_100_ep_1.1.1.6

Binding SID: 24006

Active LSP:

Candidate path:

Preference: 100 (configuration)

Name: POL-to-R6

Local label: 24005

Segment lists:

SL[0]:

Name: SL-R4-R6

Switched Packets/Bytes: 100/10000

[MPLS -> MPLS]: 100/10000

Paths:

Path[0]:

Outgoing Label: 78000

Outgoing Interfaces: TenGigE0/0/0/0

Next Hop: 10.1.2.2

Switched Packets/Bytes: 100/10000

[MPLS -> MPLS]: 100/10000

FRR Pure Backup: No

ECMP/LFA Backup: No

Internal Recursive Label: Unlabelled (recursive)

Label Stack (Top -> Bottom): { 78000, 16006 }

Policy Packets/Bytes Switched: 100/9600
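The label swap seen in the output above can be modeled with a short Python sketch. It is a simplified illustration, not the router's actual implementation: the `LFIB` dictionary is a hypothetical in-memory copy of the label forwarding entry for 16004, and `resolve_first_segment` is an invented helper name.

```python
from typing import Dict, List, NamedTuple, Tuple


class LabelEntry(NamedTuple):
    out_label: int
    interface: str
    next_hop: str


# Hypothetical copy of the forwarding entry for BGP prefix SID 16004.
LFIB: Dict[int, LabelEntry] = {
    16004: LabelEntry(out_label=78000, interface="TenGigE0/0/0/0", next_hop="10.1.2.2"),
}


def resolve_first_segment(
    segment_list: List[int], lfib: Dict[int, LabelEntry]
) -> Tuple[List[int], LabelEntry]:
    """Replace the first segment with the outgoing label learned for it."""
    if not segment_list:
        raise ValueError("segment list is empty")
    first, rest = segment_list[0], segment_list[1:]
    entry = lfib.get(first)
    if entry is None:
        raise LookupError(f"no forwarding entry for first segment {first}")
    # Only the top label changes; the remaining segments are pushed as configured.
    return [entry.out_label, *rest], entry


stack, entry = resolve_first_segment([16004, 16006], LFIB)
print(stack)            # [78000, 16006]
print(entry.interface)  # TenGigE0/0/0/0
```

Only the first segment is resolved locally. The remaining labels are carried unchanged and interpreted by downstream nodes.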

With the SR-TE policy installed, the head-end can apply SR-TE automated steering principles when programming BGP service overlay routes.

In the following output, BGP prefix 10.0.0.0/8 with next-hop 1.1.1.6 and color 100 is automatically steered over the SR policy configured above (color 100 and end-point 1.1.1.6).

RP/0/RP0/CPU0:R1# show bgp

BGP router identifier 1.1.1.1, local AS number 1

BGP generic scan interval 60 secs

Non-stop routing is enabled

BGP table state: Active

Table ID: 0xe0000000 RD version: 13

BGP main routing table version 13

BGP NSR Initial initsync version 6 (Reached)

BGP NSR/ISSU Sync-Group versions 0/0

BGP scan interval 60 secs

Status codes: s suppressed, d damped, h history, * valid, > best

i - internal, r RIB-failure, S stale, N Nexthop-discard

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

. . .

*> 10.0.0.0/8 1.1.1.6 C:100 0 0 3 i
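The matching rule behind automated steering, as described above, is that a route is steered when its color and next-hop equal the color and end-point of an installed policy. A minimal Python sketch of that lookup follows. The `POLICIES` table and the `steer` function are hypothetical names, and the sketch ignores details such as multiple colors on one route or policy validity.

```python
from typing import Dict, Optional, Tuple

# Hypothetical table of installed SR policies keyed by (color, end-point).
POLICIES: Dict[Tuple[int, str], str] = {
    (100, "1.1.1.6"): "srte_c_100_ep_1.1.1.6",
}


def steer(next_hop: str, color: Optional[int], policies: Dict[Tuple[int, str], str]) -> Optional[str]:
    """Return the policy name a route is steered over, or None if no policy matches."""
    if color is None:
        # Routes without a color are not candidates for automated steering.
        return None
    return policies.get((color, next_hop))


print(steer("1.1.1.6", 100, POLICIES))  # srte_c_100_ep_1.1.1.6
print(steer("1.1.1.6", 200, POLICIES))  # None
```

In this model, the route 10.0.0.0/8 with next-hop 1.1.1.6 and color 100 maps to the policy shown earlier, while a route with a different color or next-hop matches no policy and is not steered.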
