Congestion Avoidance

| Feature Name | Release Information | Feature Description |
|---|---|---|
| Queueing for Congestion Avoidance | Release 24.4.1 | Introduced in this release on: Fixed Systems (8700) (select variants only*). You can shape traffic to control the traffic flow from queues and also configure queues to ensure certain traffic classes get a guaranteed amount of bandwidth. *This functionality is now supported on Cisco 8712-MOD-M routers. |
| Queueing for Congestion Avoidance | Release 24.3.1 | Introduced in this release on: Modular Systems (8800 [LC ASIC: P100]) (select variants only*), Fixed Systems (8200) (select variants only*), Fixed Systems (8700 (P100, K100)) (select variants only*). You can shape traffic to control the traffic flow from queues and also configure queues to ensure certain traffic classes get a guaranteed amount of bandwidth. *This feature is supported on: |
| Queueing for Congestion Avoidance | Release 24.2.1 | Introduced in this release on: Modular Systems (8800 [LC ASIC: P100]) (select variants only*). By placing packets in different queues based on priority, queueing helps prevent traffic congestion and ensures that high-priority traffic is transmitted with minimal delay. You can shape traffic to control the traffic flow from queues and also configure queues to ensure certain traffic classes get a guaranteed amount of bandwidth. Queueing provides buffers to temporarily store packets during bursts of traffic and also supports strategies that enable dropping lower-priority packets when congestion builds up. *This feature is supported on 88-LC1-36EH. |

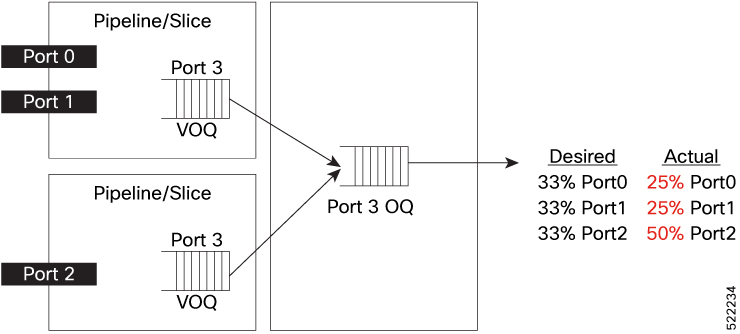

Queueing provides a way to temporarily store data when data arrives faster than it can be sent. Managing the queues and buffers is the primary goal of congestion avoidance. As a queue starts to fill with data, it is important to ensure that the available memory in the ASIC/NPU does not fill up completely. If it does, subsequent packets coming into the port are dropped, irrespective of their priority, which can degrade the performance of critical applications. For this reason, congestion avoidance techniques are used to reduce the risk of a queue filling up the memory completely and starving non-congested queues of memory. Queue thresholds trigger a drop when certain levels of occupancy are exceeded.
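The threshold behavior described above can be illustrated with a minimal Python sketch. It is a conceptual model only, not the router's implementation: the `ThresholdQueue` class, its byte-based `threshold_bytes` limit, and the packet sizes are all hypothetical names and values chosen for illustration.

```python
from collections import deque


class ThresholdQueue:
    """A FIFO queue that tail-drops once its occupancy would exceed a threshold."""

    def __init__(self, threshold_bytes):
        self.threshold_bytes = threshold_bytes  # hypothetical per-queue limit
        self.occupancy_bytes = 0
        self.packets = deque()
        self.dropped = 0

    def enqueue(self, size_bytes):
        """Accept the packet if it fits under the threshold, else drop it."""
        if self.occupancy_bytes + size_bytes > self.threshold_bytes:
            self.dropped += 1
            return False
        self.packets.append(size_bytes)
        self.occupancy_bytes += size_bytes
        return True

    def dequeue(self):
        """Remove the oldest packet and release its buffer space."""
        if not self.packets:
            return None
        size_bytes = self.packets.popleft()
        self.occupancy_bytes -= size_bytes
        return size_bytes


# A burst of five 1500-byte packets arrives at a queue limited to 4000 bytes.
queue = ThresholdQueue(threshold_bytes=4000)
results = [queue.enqueue(1500) for _ in range(5)]
print(results, queue.dropped)
```

In this sketch the first two packets are buffered and the remaining three are dropped, so the congested queue never claims more than its own share of memory.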

Scheduling is the QoS mechanism that is used to empty the queues of data and send the data onward to its destination.
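As a rough illustration of how a scheduler empties queues, the following Python sketch uses strict-priority ordering. The text above does not say which scheduling algorithm the platform uses, so strict priority is an assumption made for simplicity; the function name `strict_priority_schedule` and the packet labels are hypothetical.

```python
from collections import deque


def strict_priority_schedule(queues):
    """Drain queues in priority order; index 0 is the highest priority.

    Each pass sends one packet from the highest-priority non-empty queue,
    so lower-priority queues are served only when higher ones are empty.
    """
    sent = []
    while any(queues):
        for queue in queues:
            if queue:
                sent.append(queue.popleft())
                break
    return sent


# Three queues, listed from highest to lowest priority.
queues = [
    deque(["voice-1", "voice-2"]),
    deque(["video-1"]),
    deque(["bulk-1", "bulk-2"]),
]
order = strict_priority_schedule(queues)
print(order)
```

Here every packet in the highest-priority queue is sent before any lower-priority packet, which is what keeps delay low for high-priority traffic.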

Shaping is the act of buffering traffic within a port or queue until it can be scheduled. Shaping smooths traffic, making traffic flows much more predictable. It helps ensure that each transmit queue is limited to a maximum rate of traffic.
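The smoothing effect can be sketched in Python as a simplified shaper with no burst allowance. This is a conceptual model under stated assumptions, not the hardware algorithm: the `shape` function, its `rate_bps` parameter, and the example packet sizes are hypothetical.

```python
def shape(arrivals, rate_bps):
    """Return the departure time of each packet under a maximum rate.

    arrivals is a list of (arrival_time_seconds, size_bytes) tuples in
    arrival order. A packet waits in the buffer until the queue, limited
    to rate_bps, has finished sending the packets ahead of it.
    """
    departures = []
    link_free_at = 0.0
    for arrival_time, size_bytes in arrivals:
        # Send immediately if the queue is idle, otherwise wait in the buffer.
        start = max(arrival_time, link_free_at)
        link_free_at = start + (size_bytes * 8) / rate_bps
        departures.append(start)
    return departures


# Three 1250-byte packets (10,000 bits each) arrive together at t = 0
# and are shaped to 1 Mbps, so each occupies the queue's output for 10 ms.
burst = [(0.0, 1250), (0.0, 1250), (0.0, 1250)]
departures = shape(burst, rate_bps=1_000_000)
print(departures)
```

The burst that arrived all at once leaves at evenly spaced 10 ms intervals. Packets are delayed rather than dropped, which is the difference between shaping and simply discarding excess traffic.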
