cnBR Prerequisites

Feature History

| Feature Name | Release Information | Feature Description |
|---|---|---|
| 100G NIC Support | Cisco cnBR 21.3 | 100G NIC support allows you to use 100G or 400G ports on the SPR and a breakout cable to connect multiple 100G NICs to a single SPR port. It makes a significant difference in how you size, order, and architect your network for cnBR. |
| Deployment on Customer Managed VMware ESXi Infrastructure | Cisco cnBR 21.3 | This feature allows you to deploy Cisco Operations Hub on a customer-managed VMware ESXi infrastructure. It supports normal and small multi-node cluster deployments to fit different application environments. |

The following components are required to install, operate, and manage a Cisco cnBR:

- Cisco cnBR servers
- Cisco Operations Hub servers
- Cisco cnBR Network Components
- VMware vSphere Infrastructure including vCenter Server

Hardware Prerequisites for the Cisco cnBR

The Cisco cnBR runs exclusively on Cisco Unified Computing System (UCS) servers.

- Cisco UCS server requirement

Three Cisco UCS C220 M5 servers are required to run Cisco cnBR. The UCSC-C220-M5SX is supported in two configurations with minimum compute, storage, and networking requirements listed in the following tables:

Table 2. Minimum Requirements for the Cisco UCS Server

| Component | Specification |
|---|---|
| Chassis | UCSC-C220-M5SX |
| Processor | Intel 6248 2.5GHz/150W 20C/27.5MB DCP DDR4 2933 MHz |
| Memory | 384GB DDR4-2933-MHz RDIMM |
| Storage | 4 x 800GB SSD |
| NIC | 2 x Intel XL710-QDA2 (40G) |

Or

Minimum Requirements for the Cisco UCS Server

| Component | Specification |
|---|---|
| Chassis | UCSC-C220-M5SX |
| Processor | Intel 6248R 3.0GHz/205W 24C/35.75MB DCP DDR4 2933 MHz |
| Memory | 384GB DDR4-2933-MHz RDIMM |
| Storage | 4 x 800GB SSD |
| NIC | 2 x Intel XL710-QDA2 (40G) |

Or

Minimum Requirements for the Cisco UCS Server

| Component | Specification |
|---|---|
| Chassis | UCSC-C220-M5SX |
| Processor | Intel 6248R 3.0GHz/205W 24C/35.75MB DCP DDR4 2933 MHz |
| Memory | 384GB DDR4-2933-MHz RDIMM |
| Storage | 4 x 800GB SSD |
| NIC | 2 x Mellanox UCSC-P-M5D100GF (100G) |

Prerequisites for the Cisco Operations Hub

- ESXi hosts requirement

Three ESXi hosts are required to run a Cisco Operations Hub multi-node cluster.

You can deploy Cisco Operations Hub in a non-UCS environment, such as a customer-managed VMware infrastructure. The preferred deployment environment is Cisco Unified Computing System (UCSC-C220-M5SX). Cisco Operations Hub supports two deployment options: Small and Normal.

For Normal deployment, minimum compute, storage, and networking requirements for the ESXi hosts are listed in the following table:

Table 3. Minimum Requirements for Normal Deployment

| Component | Specification |
|---|---|
| Processor | 34 vCPUs |
| Memory | 304 GB |
| Storage | 2400 GB SSD, minimum 50000 IOPS (input/output operations per second), latency of < 5 ms |
| NIC | 2 x 10G vNIC |

For Small deployment, minimum compute, storage, and networking requirements for the ESXi hosts are listed in the following table:

Table 4. Minimum Requirements for Small Deployment

| Component | Specification |
|---|---|
| Processor | 20 vCPUs |
| Memory | 160 GB |
| Storage | 1600 GB SSD, minimum 50000 IOPS (input/output operations per second), latency of < 5 ms |
| NIC | 2 x 10G vNIC |
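The per-host minimums for the Normal and Small deployments can be checked mechanically during planning. The following is a minimal sketch, not a Cisco tool: the `HostSpec` structure, the `MINIMUMS` table, and the `shortfalls` function are hypothetical names introduced for illustration, and the numbers are copied from the two tables above. It assumes the host figures have been gathered by other means.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class HostSpec:
    """Resources of one ESXi host (hypothetical structure for this sketch)."""
    vcpus: int
    memory_gb: int
    ssd_gb: int
    iops: int
    latency_ms: float
    vnics_10g: int


# Minimum values copied from the Normal and Small deployment tables.
# latency_ms is an upper bound: the measured latency must be below it.
MINIMUMS = {
    "normal": HostSpec(34, 304, 2400, 50000, 5.0, 2),
    "small": HostSpec(20, 160, 1600, 50000, 5.0, 2),
}


def shortfalls(host: HostSpec, deployment: str) -> List[str]:
    """Return the names of the requirements that the host does not meet."""
    required = MINIMUMS[deployment]
    failed = []
    # These fields are lower bounds: the host needs at least the listed value.
    for field in ("vcpus", "memory_gb", "ssd_gb", "iops", "vnics_10g"):
        if getattr(host, field) < getattr(required, field):
            failed.append(field)
    # Latency must be strictly below the listed 5 ms.
    if host.latency_ms >= required.latency_ms:
        failed.append("latency_ms")
    return failed


candidate = HostSpec(vcpus=24, memory_gb=192, ssd_gb=2000,
                     iops=60000, latency_ms=2.0, vnics_10g=2)
print(shortfalls(candidate, "small"))   # []
print(shortfalls(candidate, "normal"))  # ['vcpus', 'memory_gb', 'ssd_gb']
```

An empty list means the host meets every listed minimum for that deployment size. The check covers only the values in the tables and says nothing about other sizing factors.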

- VMware requirements
  - Hypervisor: Choose either of the following:
    - VMware ESXi 6.5, minimum recommended patch release for security updates ESXi650-202006001
    - VMware ESXi 6.7, minimum recommended patch release for security updates ESXi670-202006001
  - Host Management: VMware vCenter Server 6.5 or VMware vCenter Server 6.7

If VMware ESXi 6.7 is installed on the host, ensure that the vCenter version is VMware vCenter Server 6.7.
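The hypervisor and vCenter pairing rule above can be expressed as a small check. This is an illustrative sketch only: `SUPPORTED_VERSIONS` and `is_supported_pair` are hypothetical names, the versions are passed in as plain strings, and patch release levels are not examined.

```python
# Major.minor versions listed in the VMware requirements above.
SUPPORTED_VERSIONS = {"6.5", "6.7"}


def is_supported_pair(esxi_version: str, vcenter_version: str) -> bool:
    """Return True if the ESXi and vCenter versions satisfy the listed rules."""
    if esxi_version not in SUPPORTED_VERSIONS:
        return False
    if vcenter_version not in SUPPORTED_VERSIONS:
        return False
    # ESXi 6.7 hosts must be managed by vCenter Server 6.7.
    if esxi_version == "6.7" and vcenter_version != "6.7":
        return False
    return True


print(is_supported_pair("6.5", "6.7"))  # True
print(is_supported_pair("6.7", "6.5"))  # False
```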

- Browser support

For the Cisco cnBR, the Cisco Operations Hub functionality is supported for the following browser versions:

- Mozilla Firefox 86 and later
- Google Chrome 88 and later
- Microsoft Edge 88 and later

Note: For Windows OS, the recommended resolution is 1920x1080 with a scale and layout setting of 125%.

-

Prerequisites components in the Cisco cnBR Topology

-

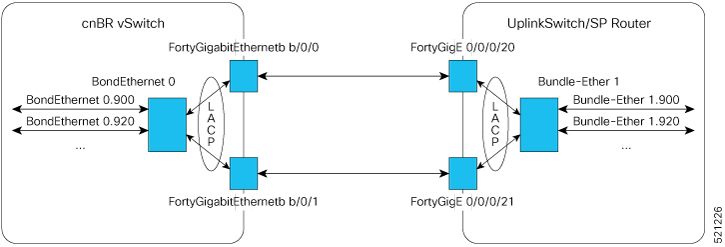

Cisco cnBR Data Switch

You must use a data center switch with the requisite 40G port density between the Cisco cnBR servers and the service provider router to aggregate the Cisco cnBR data path links.

- Management Switch

A dedicated data center switch can be used for Cisco cnBR and Cisco Operations Hub management traffic. Cisco UCS servers provide 1G, 10G, and 40G network interface connectivity options for the different management networks that are used in the system.

Cisco cnBR UCS servers require connectivity for Host Operating System Management and Cisco Integrated Management Controller (IMC) Lights-Out-Management.

Cisco Operations Hub UCS servers require connectivity for VMware ESXi host management, VM traffic for Guest Operating System Management, and Cisco Integrated Management Controller (IMC) Lights-Out-Management.

- Service Provider Router

The SP Router is responsible for forwarding L3 packets between the core network, RPHY CIN, and Cisco cnBR. The SP Router and Cisco cnBR establish connections through BGP, SG, and RPHY-core for RPD session setup and traffic forwarding.

We recommend the following Cisco Network Convergence System 5500 Series models:

- NCS-55A1-36H-S
- NCS-55A1-24H

The required software version is Cisco IOS XR 6.5.3 or later.

- DHCP Server

A standard Dynamic Host Configuration Protocol (DHCP) server is required and is typically included in an existing DOCSIS infrastructure. For example, Cisco Network Registrar (CNR).

- PTP Server Configuration

A Precision Time Protocol (PTP) server is required and is typically included in an existing DOCSIS infrastructure. For example, an OSA 5420.

- TFTP Server

A standard Trivial File Transfer Protocol (TFTP) server is required and is typically included in an existing DOCSIS infrastructure.

- RPHY CIN

A Remote PHY Converged Interconnect Network (CIN) is required. A Remote PHY Device and cable modems are also required. For example, Cisco Smart PHY 600 Shelf.

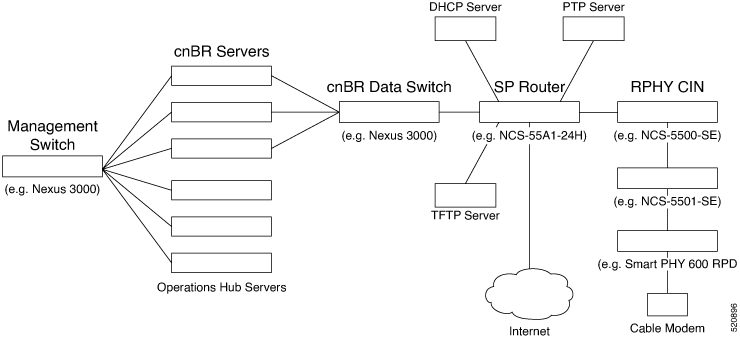

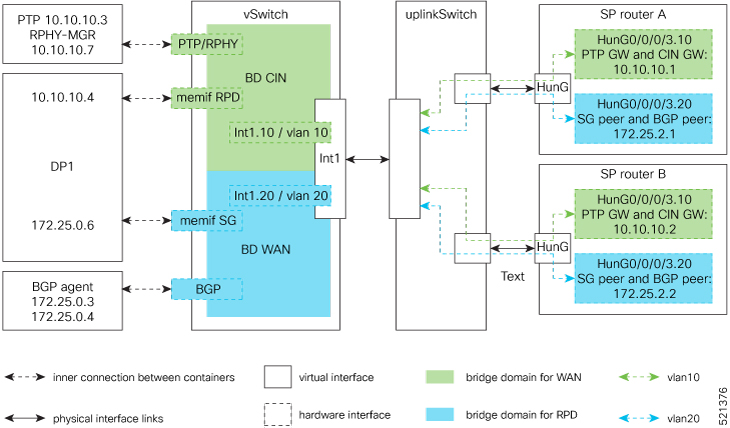

The following image is a simplified, high-level overview of an end-to-end system and shows how these Cisco cnBR components are connected in the topology with provisioning systems and a Remote PHY CIN:

Figure 1. Simplified cnBR Topology

Prerequisites Required for Deployment

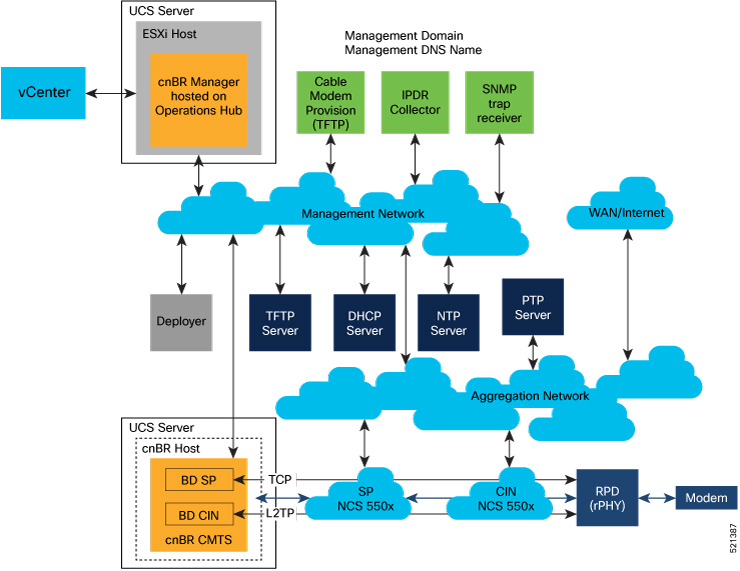

A VMware vSphere ESXi infrastructure with a vCenter server instance is required for end-to-end automated deployment. The SMI Deployer Cluster Manager and Cisco Operations Hub cluster nodes run as virtual machines.

A generalized Cisco cnBR deployment with the Cisco Operations Hub hosted in a VMware cluster is depicted in the following image:

- Networks

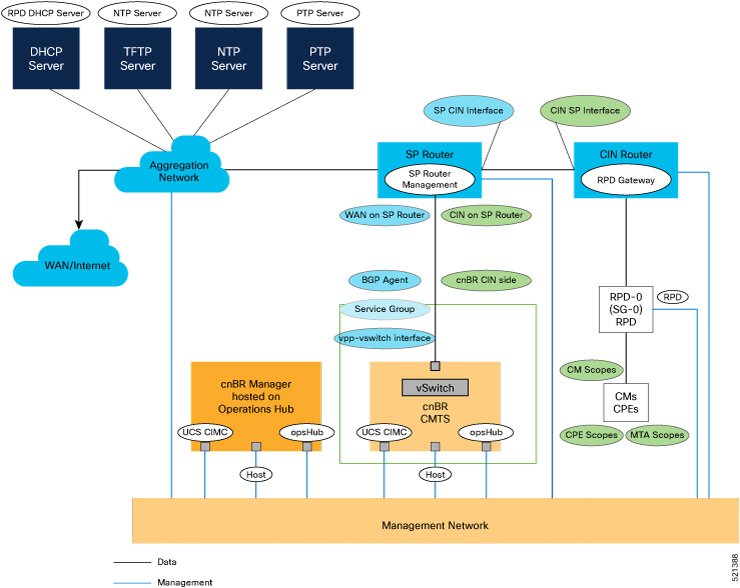

The following table provides guidance for the networks that are needed in the management, WAN, and CIN routing domains:

Table 5. Network Information for Routing Domains

| Name | Subnet Mask | Function |
|---|---|---|
| Management | 2 addresses for each cluster; Operations Hub/cnBR UCS; 1 for each cluster; 1 for each service device | Management |
| CIN | Network requirements for each customer | Connection RPD and CCAP core |
| WAN | Network requirements for each customer | Internet access for CPE |
| cnBR CIN side | Network requirements for each customer | - |
| BGP network to SP router | Network requirements for each customer | Management |
| Network for data | Network requirements for each customer | - |
| SG IP cnBR side | Network requirements for each customer | The peer IP for Service Group on cnBR |
| RPD address pool | Customer selected | DHCP scope for RPD sized to cover total number of RPDs. |
| DHCP scope for CM | Customer selected | - |
| DHCP scope for CPE | Customer selected | - |
| DHCP scope for MTA | Customer selected | - |

You must provide domain and DNS name for the management network.
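The network table asks for an RPD DHCP scope that is sized to cover the total number of RPDs. As a rough planning aid, the sketch below uses Python's standard `ipaddress` module to test whether an IPv4 scope is large enough. The function name `scope_covers`, the `reserved` parameter (addresses set aside for gateways or other static use), and the example prefix from the 192.0.2.0/24 documentation range are assumptions for illustration, not values from this guide.

```python
import ipaddress


def scope_covers(scope_cidr: str, device_count: int, reserved: int = 0) -> bool:
    """Return True if an IPv4 scope has enough usable addresses for the devices."""
    network = ipaddress.IPv4Network(scope_cidr, strict=True)
    # Exclude the network and broadcast addresses, then any reserved addresses.
    usable = network.num_addresses - 2 - reserved
    return usable >= device_count


print(scope_covers("192.0.2.0/24", 200))               # True
print(scope_covers("192.0.2.0/24", 300))               # False
print(scope_covers("192.0.2.0/24", 200, reserved=60))  # False
```

The same check can be applied to the CM, CPE, and MTA scopes by passing the expected device count for each.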

- Device Addresses

The following tables provide information on the IP addresses that are needed for device and router interfaces.

- Management IP Address: Each management interface that is listed in the following table requires 1 IP address:

Table 6. Management Interface and Associated IP Addresses

| Device Name | Number of Addresses |
|---|---|
| CIMC cnBR | 1 per cnBR UCS |
| Host OS cnBR | 1 per cnBR UCS |
| cnBR VIP | 1 per cnBR Cluster |
| CIMC Operations Hub | 1 per Operations Hub UCS |
| ESXi Operations Hub | 1 per Operations Hub UCS |
| Operations Hub | 1 per Operations Hub VM |
| Operations Hub VIP | 1 per Operations Hub Cluster |
| Deployer | 1 |
| vCenter | 1 |
| SP router | 1 |
| CIN router | 1 |
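The per-device counts in the management interface table add up to the number of management IP addresses to reserve. The sketch below tallies them for a given deployment size. The function name `management_ip_plan` and its parameters are hypothetical, and the example assumes one Operations Hub VM per Operations Hub server, which this guide does not state.

```python
from typing import Dict


def management_ip_plan(cnbr_ucs: int, cnbr_clusters: int, ophub_ucs: int,
                       ophub_vms: int, ophub_clusters: int) -> Dict[str, int]:
    """Return the management IP addresses needed per interface type."""
    return {
        "CIMC cnBR": cnbr_ucs,                 # 1 per cnBR UCS
        "Host OS cnBR": cnbr_ucs,              # 1 per cnBR UCS
        "cnBR VIP": cnbr_clusters,             # 1 per cnBR cluster
        "CIMC Operations Hub": ophub_ucs,      # 1 per Operations Hub UCS
        "ESXi Operations Hub": ophub_ucs,      # 1 per Operations Hub UCS
        "Operations Hub": ophub_vms,           # 1 per Operations Hub VM
        "Operations Hub VIP": ophub_clusters,  # 1 per Operations Hub cluster
        "Deployer": 1,
        "vCenter": 1,
        "SP router": 1,
        "CIN router": 1,
    }


# Example: one 3-server cnBR cluster and one 3-host Operations Hub cluster,
# assuming one Operations Hub VM per host.
plan = management_ip_plan(cnbr_ucs=3, cnbr_clusters=1,
                          ophub_ucs=3, ophub_vms=3, ophub_clusters=1)
print(sum(plan.values()))  # 21
```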

- DOCSIS Network Addresses: The following table lists the DOCSIS network-related information:

Table 7. DOCSIS Network-Related Information

| Device Name | Network Name | Description | Number of Addresses |
|---|---|---|---|
| SP router to CIN | CIN | SP connection to CIN router | 1 |
| CIN router to SP | CIN | CIN connection to SP router | 1 |
| SP router to WAN | WAN | SP connection to WAN/Internet | 1 |
| RPD Gateway | CIN | RPD gateway router Address | 1 |
| cnBR CIN side | CIN | cnBR connection to CIN | Customer specific |
| BGP Agent | WAN | WAN router BGP Agent IP | Customer specific |
| Service Group | WAN | Service Group WAN IP | Customer specific |
| WAN on SP Router | WAN | SP connection to WAN network | Customer specific |

- Customer Provisioned Services: The following table lists the various customer services:

Table 8. Customer Provisioned Services

| Service | Notes |
|---|---|
| DHCP | Needed for both RPD and subscriber devices |
| TFTP | RPD only uses it during software upgrade |
| TOD | Time of day clock |
| PTP | One connection is required for the cnBR and for each RPD |
| NTP | Network Time Protocol Server |
| DNS | Domain Name Server |
