vPC Migration from M1/F1 to F2 Modules

Introduction

This document describes the procedure to migrate from a Virtual Port Channel (vPC) domain that uses M1 or M1/F1 modules to a vPC domain that is based on F2 modules. Migration from M1 or M1/F1 to F2 module-based vPC switches is disruptive and must be planned for a scheduled outage window. The procedure described in this document minimizes the disruption.

Migration Scope

The procedure described in this document covers a Cisco Nexus 7000 Series (N7k) switch, or a non-default Virtual Device Context (VDC), that is part of a vPC domain and has any combination of M1 and F1 modules on the vPC peer-link and the non-peer-link interfaces. Here are some example combinations that can be used:

- All M1 modules

- Mixed M1 and F1 modules with peer-link on M1

- Mixed M1 and F1 modules with peer-link on F1

- All F1 modules

Constraints and Prerequisites

These constraints or restrictions make the migration procedure more difficult:

- F2 modules cannot coexist in the same VDC with M1 or F1 modules. F2 interfaces require their own F2-only VDC. If you do not follow this configuration, the F2 interfaces are automatically allocated to VDC #0, which is an unusable VDC.

Example:

N7k1# show vdc membership

vdc_id: 0 vdc_name: Unallocated interfaces:

Ethernet3/1 Ethernet3/2 Ethernet3/3

Ethernet3/4 Ethernet3/5 Ethernet3/6

<snip>

- The vPC peer-link interfaces must be on the same module type on both vPC peers as described in the vPC Peer Link and I/O Modules Support In Cisco NX-OS Release 6.2 section of the Cisco Nexus 7000 Series NX-OS Interfaces Configuration Guide, Release 6.x.

For instance, a vPC peer-link made of M1 interfaces on one side and of F2 interfaces on the other side is not supported. The peer-link should consist of either M1-only ports, F1-only ports, or F2-only ports on both vPC peer switches.

- If the current N7k chassis does not have enough empty slots to host all the required F2 modules, a new chassis is needed for the migration procedure for each fully-loaded switch that already exists.

- Free IP addresses in the L3 subnets used by the M1 vPC domain are preferred, because the F2 domain uplinks can then be addressed without reusing the M1 addresses.

Migration Procedure

The procedure for M1 to F2 migration where the chassis can host all the needed F2 modules is illustrated here. F1 to F2 migration is very similar.

Initial Setup

- Complete the preliminary steps. (Network impact: None)

- Back up the current running configuration.

- Upgrade the Nexus Operating System (NX-OS) software to Release 6.0(x) or a later release that supports F2 modules. Details about the upgrade paths are available in the release notes:

- Refer to the Upgrade/Downgrade Caveats section of the Cisco Nexus 7000 Series NX-OS Release Notes, Release 6.0 for more information about Release 6.0 code.

- Refer to the Supported Upgrade and Downgrade Paths section of the Cisco Nexus 7000 Series NX-OS Release Notes, Release 6.1 for more information about Release 6.1 code.

- Install the VDC license if it is not already installed.

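As a rough sketch of the preliminary steps above, the following NX-OS commands save a copy of the configuration and display the software, module, and license state. The file name and checkpoint name are hypothetical, and the displayed release should be checked against the release notes before any upgrade.

```
! Hypothetical file and checkpoint names; adjust to local conventions.
copy running-config bootflash:pre-f2-migration.cfg
checkpoint pre-f2-migration

! Confirm the running release, the installed modules, and the license state.
show version
show module
show license usage
```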

- Create a new F2 VDC. (Network impact: None)

- Create a new VDC for the F2 modules (limit module-type to F2 only), and allocate the F2 interfaces to it.

- Create a new vPC domain for the F2 VDC that has a unique vPC domain ID. The vPC domain ID should be unique within each contiguous L2 network.

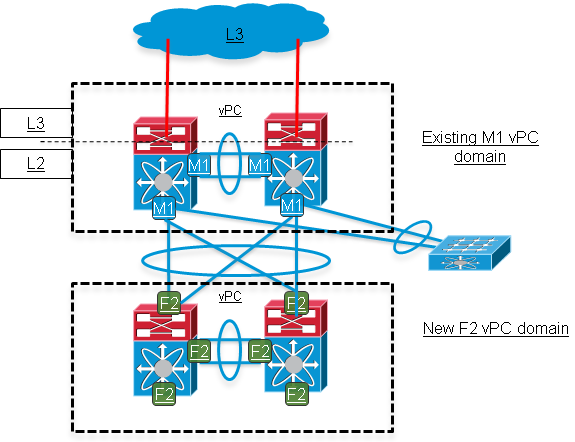

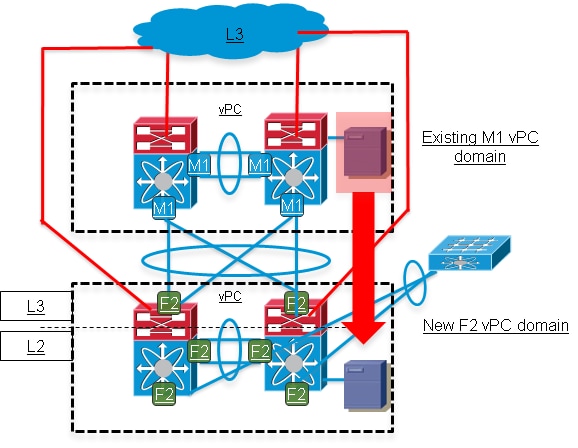

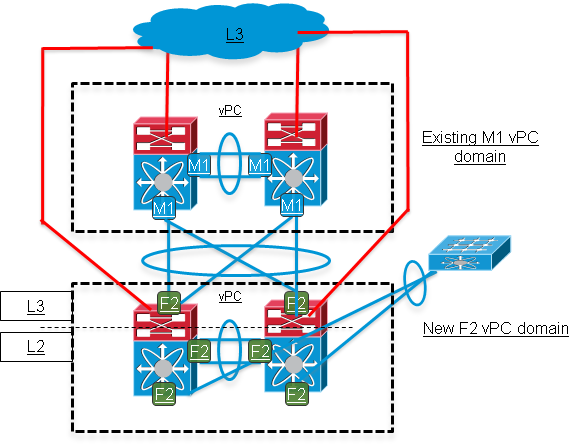

- The F2 vPC domain is then connected to the current M1 vPC domain via a double-sided vPC. The M1 vPC domain should remain the Spanning Tree Protocol (STP) root. Once the new F2 VDC is created, the network looks like this:


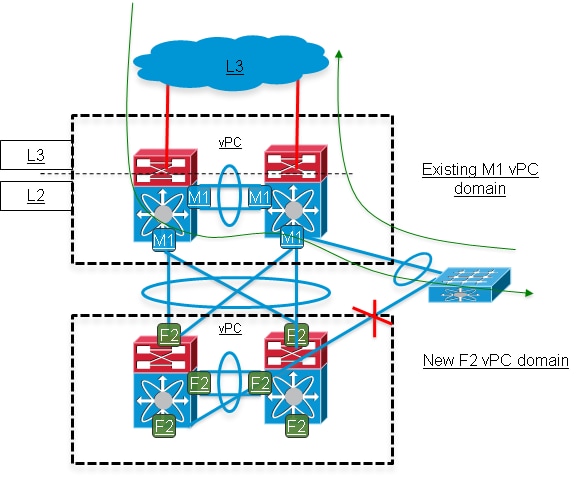

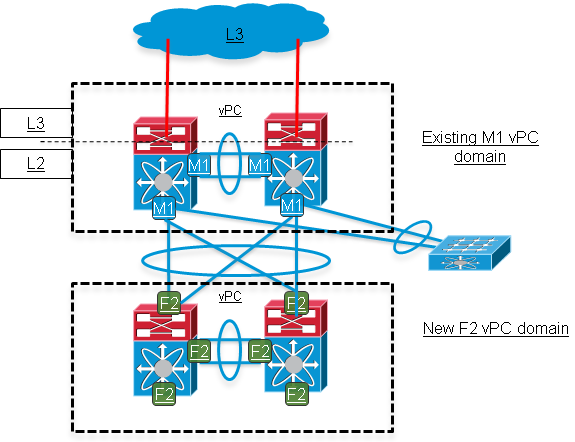
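A minimal configuration sketch for this step, under stated assumptions: the VDC name F2-VDC, the domain ID 20, the port-channel numbers, the interface ranges, and the keepalive addresses are all hypothetical placeholders, and the M1 domain is assumed to use a different vPC domain ID. F2 ports are generally allocated to a VDC in whole port groups, so check the interface range against the module documentation.

```
! Default VDC, configuration mode: create the F2-only VDC and allocate
! the F2 interfaces to it (name and range are hypothetical).
vdc F2-VDC
  limit-resource module-type f2
  allocate interface Ethernet3/1-48

! Inside the new VDC (switchto vdc F2-VDC), configuration mode:
! build the new vPC domain with an ID not used by the M1 domain.
feature lacp
feature vpc
vpc domain 20
  peer-keepalive destination 192.0.2.2 source 192.0.2.1 vrf management

! Peer-link between the two F2 VDCs (F2 ports on both peers).
interface Ethernet3/1-2
  switchport
  switchport mode trunk
  channel-group 1 mode active
interface port-channel1
  switchport
  switchport mode trunk
  vpc peer-link

! Double-sided vPC toward the M1 domain.
interface Ethernet3/3-4
  switchport
  switchport mode trunk
  channel-group 100 mode active
interface port-channel100
  switchport
  switchport mode trunk
  vpc 100
```

After the double-sided vPC comes up, `show spanning-tree root` in the F2 VDC can be used to confirm that the root bridge is still in the M1 domain.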

- Start moving vPC links from the M1 domain to F2 domain. (Network impact: Moderate)

On the downstream-access switches connected via vPC, shut down the members of the Multichassis EtherChannel (MEC) uplink port-channel that connect to one of the two Nexus switches in the M1 domain. Those links are then moved to the F2 domain.

When this step is completed, downstream switches have reduced network bandwidth. In addition, the vPC peer-link in the M1 domain carries more data-plane traffic: traffic that hashes to switch one but is destined to a downstream switch that was disconnected from switch one must now cross the peer-link.

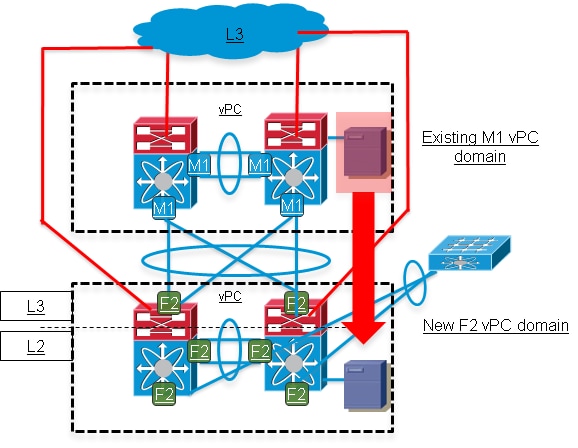

The network looks like this:

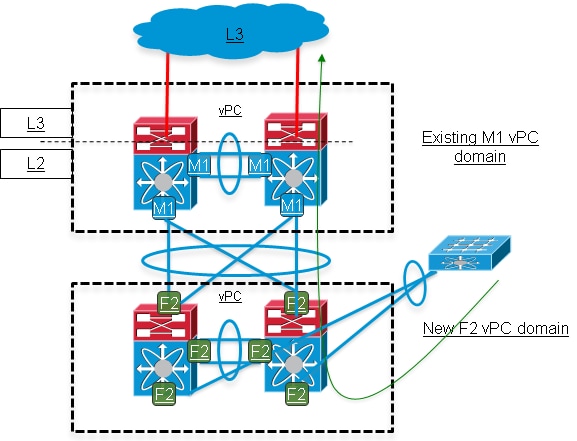

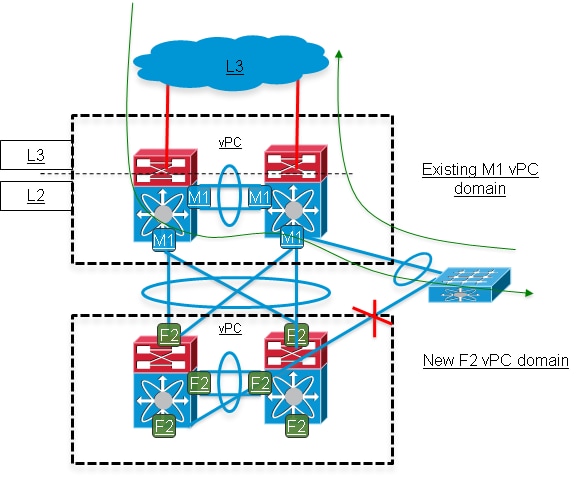
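The sequence in this step could look like the following sketch. The interface and port-channel numbers are hypothetical, and the access switch is assumed to run NX-OS; on other platforms the equivalent commands differ.

```
! Access switch: shut the MEC member that connects to M1 switch one.
interface Ethernet1/1
  shutdown

! Recable the link to the F2 domain. The member stays shut on the
! access switch until the next step.

! F2 VDC (both peers): prepare the vPC that receives the moved links.
interface Ethernet3/10
  switchport
  switchport mode trunk
  channel-group 10 mode active
interface port-channel10
  switchport
  switchport mode trunk
  vpc 10

! Access switch: confirm that the remaining member still carries traffic.
show port-channel summary
```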

- Move the remaining links from the M1 domain to the F2 domain. (Network impact: High)

- On the access switches, shut down the remaining MEC member links that are still up and enable (no shutdown) the links that were migrated to the F2 domain in step 3. This step is highly disruptive.

During this step, all L3 services still run on the M1 domain. The F2 domain provides L2 connectivity between the downstream switches and the M1 domain.

- Move the links that were shut down in step 4 to the F2 domain and enable them (no shutdown) on the access switches. The original bandwidth of the access switch uplinks is now restored.


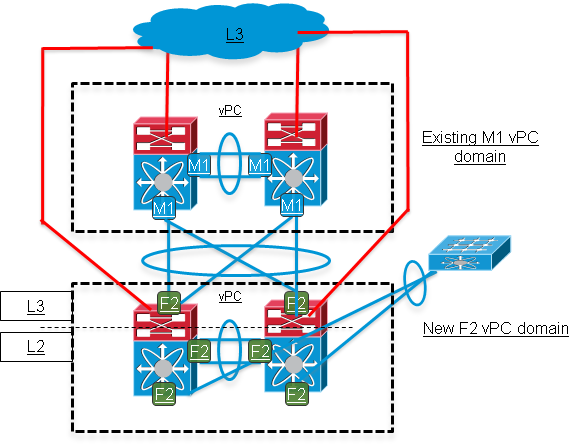

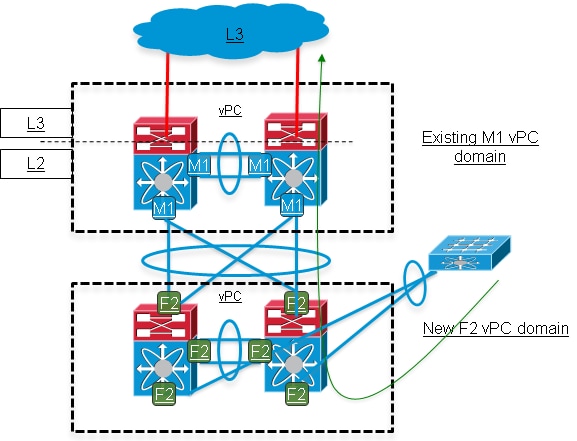
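A hedged sketch of the cutover on one access switch follows. It assumes that Ethernet1/1 is the member moved to the F2 domain in step 3 and that Ethernet1/2 is the member still connected to the M1 domain; both names are hypothetical. Traffic is interrupted between the shutdown and the point where the link toward the F2 domain is bundled and forwarding.

```
! Access switch: cut over from the M1 domain to the F2 domain.
interface Ethernet1/2
  shutdown
interface Ethernet1/1
  no shutdown

! F2 VDC: check that the vPC toward the access switch is up.
show vpc brief
show port-channel summary

! Access switch, after Ethernet1/2 is recabled to the F2 domain:
interface Ethernet1/2
  no shutdown
```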

- Add L3 uplinks on the F2 domain. (Network impact: Moderate*)

* If free IP addresses are available in the L3 subnets used for the M1 domain uplinks, then this step is less disruptive. Otherwise, the original IP addresses of the M1 domain are reused on the F2 domain uplinks, which results in more disruption.

- The L2/L3 demarcation point is moved from the M1 domain to the F2 domain by migrating the configuration of the Switch Virtual Interface (SVI) to the F2 domain, which includes the First Hop Redundancy Protocol (FHRP) configuration [Hot Standby Router Protocol (HSRP)/Virtual Router Redundancy Protocol (VRRP)/Gateway Load Balancing Protocol (GLBP)].

- The same FHRP group can be used on both the M1 and F2 domains. The priority field is tuned in order to influence which domain is the active gateway. In the example of HSRP, the group then has four members: one active, one standby, and two in the listen state.

- The routing configuration is applied on the F2 domain (Open Shortest Path First (OSPF)/Enhanced Interior Gateway Routing Protocol (EIGRP)/static routes), depending on the current routing setup. A good option is to configure the routing and SVI interfaces on the F2 domain and keep the preferred upstream and downstream L3 path via the M1 domain.

- Once all L3 interfaces are up and the FHRP and Interior Gateway Protocol (IGP) adjacencies are established, make the path via the F2 domain the preferred downstream L3 path.

- In order to migrate the L3 gateway for vPC VLANs to the F2 domain, change the FHRP priority.

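To illustrate the FHRP priority tuning described above, here is a hedged HSRP sketch for one vPC VLAN. VLAN 10, group 10, the IP addresses, and the priority values are hypothetical. It assumes that the two M1 switches already run the same group and virtual IP with priorities 110 and 100.

```
! F2 VDC, before cutover: join the existing HSRP group with a low
! priority so that the M1 domain stays the active gateway.
feature interface-vlan
feature hsrp
interface Vlan10
  no shutdown
  ip address 10.1.10.4/24
  hsrp 10
    preempt
    priority 90
    ip 10.1.10.1

! Check the group state on each switch.
show hsrp brief

! F2 VDC, at cutover: raise the priority above the M1 values.
interface Vlan10
  hsrp 10
    priority 120
```

The `preempt` keyword matters in this sketch: without it, a router with a higher priority does not take over from a gateway that is already active.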

- Move the remaining used features to the F2 domain. (Network impact: Moderate)

Move the remaining L3 features that are used, such as multicast routing with Protocol Independent Multicast (PIM), DHCP relay, and Policy-Based Routing (PBR), as well as any Quality of Service (QoS) or security configuration, to the F2 domain.

- Migrate the orphan ports to the F2 domain. (Network impact: High*)

* For the hosts connected to the orphan ports only.

Orphan ports are ports that carry vPC VLANs but are not part of a vPC. Those ports connect single-homed devices to either switch of the vPC domain.

In order to migrate orphan ports, move the configuration and then the physical link(s) to the new vPC domain.
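As a sketch of the orphan port move, the commands below list the orphan ports on the M1 domain and recreate one port on the F2 domain before the cable is moved. The interface, description, and VLAN are hypothetical, and the port is assumed to be an access port.

```
! M1 domain: list the ports that carry vPC VLANs outside of any vPC.
show vpc orphan-ports

! F2 VDC: recreate the port configuration, then move the physical link.
interface Ethernet3/20
  description Single-homed host moved from the M1 domain
  switchport
  switchport mode access
  switchport access vlan 10
  no shutdown
```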

- Remove the M1 domain and run verification checks. (Network impact: None)

Verify the vPC/L2/L3 state on the F2 domain, and verify that connectivity tests are successful.
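The verification could start from a command set such as the one below, run in the F2 VDC on both peers. This is a suggested starting point rather than a complete checklist, and which commands apply depends on the features in use (HSRP is assumed here as the FHRP).

```
! vPC state and consistency
show vpc
show vpc consistency-parameters global
show port-channel summary

! L2 state: shows which bridge is the root now that the M1 domain is removed
show spanning-tree root

! L3 state
show hsrp brief
show ip route summary
```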
