Introduction

This document describes the steps to troubleshoot an issue where a blade fails to discover due to the server power state-MC Error.

Prerequisites

Requirements

Cisco recommends that you have a working knowledge of these topics:

- Cisco Unified Computing System (UCS)

- Cisco Fabric Interconnect (FI)

Components Used

The information in this document is based on these software and hardware versions:

The information in this document was created from the devices in a specific lab environment. All of the devices used in this document started with a cleared (default) configuration. If your network is live, ensure that you understand the potential impact of any command.

Background Information

These events are possible triggers for the issue:

- A blade firmware upgrade, where the server went down after the uptime policy reboot.

- A power event in the data center.

Problem

This error message occurs upon a reboot or during discovery.

"Unable to change the blade power state"

UCSM reports this alert for a blade that fails to power on.

A blade that is rebooted as part of a firmware upgrade or any other maintenance fails to discover or turn up, with this message in the FSM:

“Unable to change server power state-MC Error(-20): Management controller cannot or failed in processing request(sam:dme:ComputePhysicalTurnup:Execute)”
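
When many faults need to be triaged, the error code and the FSM stage can be pulled out of this message with a short script. The following is a minimal sketch, not a Cisco tool: the function name `parse_fsm_message` is hypothetical, and the regular expressions assume the message layout shown above.

```python
import re

# Hypothetical helper: both patterns assume the FSM message layout quoted in this document.
MC_ERROR_RE = re.compile(r"MC Error\((-?\d+)\)")
FSM_STAGE_RE = re.compile(r"\((sam:dme:[^)]+)\)")


def parse_fsm_message(message):
    """Return the MC error code and FSM stage found in an FSM failure message."""
    code = MC_ERROR_RE.search(message)
    stage = FSM_STAGE_RE.search(message)
    return {
        "mc_error": int(code.group(1)) if code else None,
        "fsm_stage": stage.group(1) if stage else None,
    }
```

A result with `mc_error` equal to -20 and a `ComputePhysicalTurnup` stage matches the symptom described in this section.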

The SEL logs show error entries such as these:

CIMC | Platform alert POWER_ON_FAIL #0xde | Predictive Failure deasserted | Deasserted

CIMC | Platform alert POWER_ON_FAIL #0xde | Predictive Failure asserted | Asserted
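
The SEL entries above can be scanned to find the most recent state of the POWER_ON_FAIL alert. This is a minimal sketch under two assumptions: the entries use the pipe-separated layout shown above, and they are listed in chronological order. The function name `power_on_fail_state` is hypothetical.

```python
def power_on_fail_state(sel_lines, sensor="POWER_ON_FAIL"):
    """Return the last reported state (for example 'Asserted') for the sensor, or None."""
    state = None
    for line in sel_lines:
        fields = [field.strip() for field in line.split("|")]
        # Match the sensor name in any field, since real logs may carry extra leading columns.
        if len(fields) >= 2 and any(sensor in field for field in fields):
            state = fields[-1]  # The final column holds Asserted or Deasserted.
    return state
```

If the function returns "Asserted", the alert is still active at the end of the log, which is consistent with the failure described here.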

Troubleshoot

From the UCSM CLI shell, connect to the CIMC of the blade and verify the blade power status with the power command:

- ssh FI-IP-ADDR

- connect cimc X

- power

Failure Scenario #1

OP:[ status ]

Power-State: [ on ]

VDD-Power-Good: [ inactive ]

Power-On-Fail: [ active ]

Power-Ctrl-Lock: [ unlocked ]

Power-System-Status: [ Good ]

Front-Panel Power Button: [ Enabled ]

Front-Panel Reset Button: [ Enabled ]

OP-CCODE:[ Success ]

Failure Scenario #2

OP:[ status ]

Power-State: [ off ]

VDD-Power-Good: [ inactive ]

Power-On-Fail: [ inactive ]

Power-Ctrl-Lock: [ permanent lock ] <<<----------------

Power-System-Status: [ Bad ] <<<---------------

Front-Panel Power Button: [ Disabled ]

Front-Panel Reset Button: [ Disabled ]

OP-CCODE:[ Success ]

Working Scenario

[ help ]# power

OP:[ status ]

Power-State: [ on ]

VDD-Power-Good: [ active ]

Power-On-Fail: [ inactive ]

Power-Ctrl-Lock: [ unlocked ]

Power-System-Status: [ Good ]

Front-Panel Power Button: [ Enabled ]

Front-Panel Reset Button: [ Enabled ]

OP-CCODE:[ Success ]

[ power ]#
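
The three outputs above differ in only a few fields, so the comparison can be scripted. The sketch below parses the `Key: [ value ]` lines from saved output of the power command and maps them to the scenarios in this document. The function names and the classification rules are assumptions derived only from the three sample outputs shown here, so treat the result as a hint and not a diagnosis.

```python
import re

# Matches lines such as "Power-State: [ on ]"; prompt lines like "[ power ]#" are skipped.
FIELD_RE = re.compile(r"\s*([^:\[\]]+?)\s*:\s*\[\s*(.*?)\s*\]")


def parse_power_status(text):
    """Collect the 'Key: [ value ]' pairs from the power command output into a dict."""
    fields = {}
    for line in text.splitlines():
        match = FIELD_RE.match(line)
        if match:
            fields[match.group(1)] = match.group(2)
    return fields


def classify_power_status(fields):
    """Map parsed fields to a scenario label (rules inferred from the samples above)."""
    state = fields.get("Power-State", "").lower()
    vdd = fields.get("VDD-Power-Good", "").lower()
    power_on_fail = fields.get("Power-On-Fail", "").lower()
    lock = fields.get("Power-Ctrl-Lock", "").lower()
    system = fields.get("Power-System-Status", "").lower()

    if lock == "permanent lock" or system == "bad":
        return "failure-scenario-2"
    if power_on_fail == "active" or (state == "on" and vdd == "inactive"):
        return "failure-scenario-1"
    if state == "on" and vdd == "active" and power_on_fail == "inactive":
        return "working"
    return "unknown"
```

For example, `classify_power_status(parse_power_status(saved_output))` can be run against output captured from several blades to spot the ones that need attention, where `saved_output` is the text copied from the CIMC session.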

Verify the POWER_ON_FAIL sensor value.

Non-working:

POWER_ON_FAIL | disc -> | discrete | 0x0200 | na | na | na | na | na | na |

Working:

POWER_ON_FAIL | disc -> | discrete | 0x0100 | na | na | na | na | na | na |
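
The check on the POWER_ON_FAIL sensor row can be expressed as a small function. This sketch assumes the pipe-separated layout shown above, with the hexadecimal value in the fourth column, and it relies only on the two values this document lists (0x0200 for non-working, 0x0100 for working). The function name `classify_power_on_fail` is hypothetical.

```python
def classify_power_on_fail(row):
    """Classify a POWER_ON_FAIL sensor row using the two values listed in this document."""
    cells = [cell.strip() for cell in row.split("|")]
    if len(cells) < 4 or cells[0] != "POWER_ON_FAIL":
        return "not-a-power-on-fail-row"
    try:
        value = int(cells[3], 16)  # The fourth column holds a value such as 0x0200.
    except ValueError:
        return "unknown"  # For example when the column reads "na".
    if value == 0x0100:
        return "working"
    if value == 0x0200:
        return "non-working"
    return "unknown"
```

Any other value is reported as "unknown", because this document does not describe what other values mean.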

Run the sensors command and check the values of the power and voltage sensors. Compare the output with that of a blade of the same model that is in the powered-on state.

If the Reading or Status columns are NA for certain sensors, this does not always indicate a hardware failure.

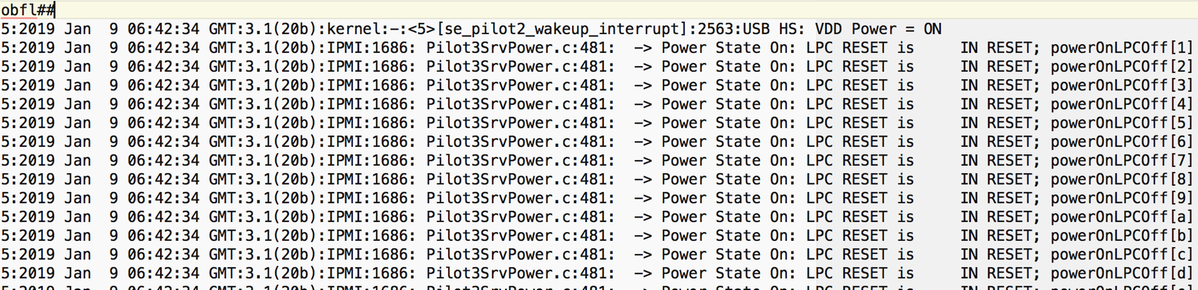

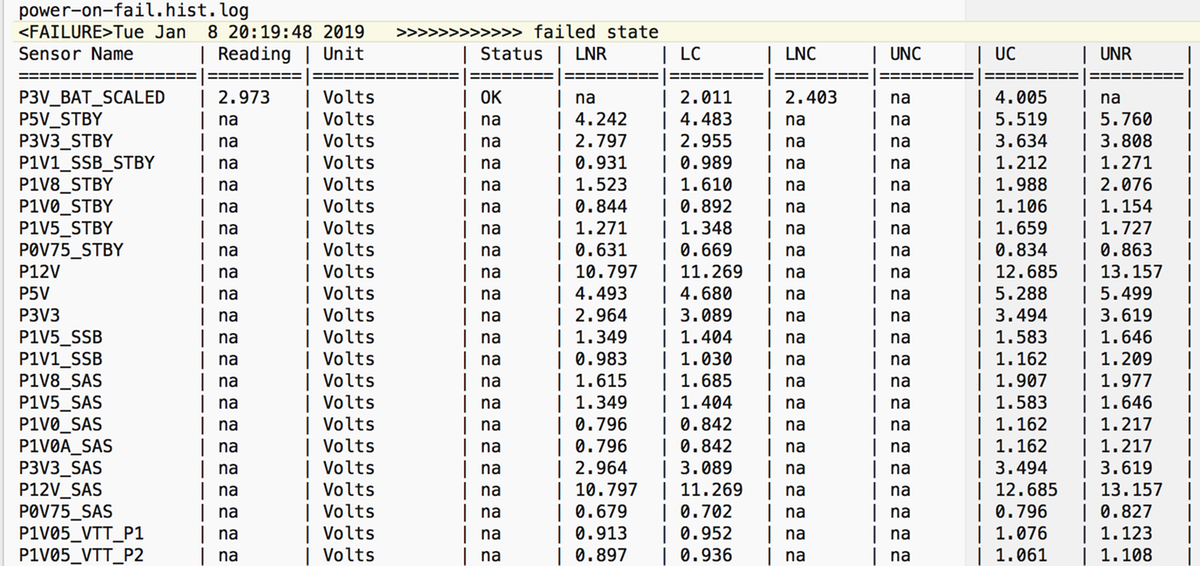

Log snippets:

sel.log:

CIMC | Platform alert POWER_ON_FAIL #0xde | Predictive Failure asserted | Asserted

The power-on-fail.hist file is located inside tmp/techsupport_pidXXXX/CIMCX_TechSupport-nvram.tar.gz.
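
Once the nvram archive is extracted from the techsupport bundle, the history file can be located with the Python standard library. This is a minimal sketch: the function name `find_in_bundle` is hypothetical, and because the internal directory layout of the archive is not described here, the code searches every member by file name.

```python
import tarfile


def find_in_bundle(bundle_path, filename="power-on-fail.hist"):
    """Return the archive member paths whose base name equals the requested file name."""
    # Mode "r:*" lets tarfile detect the compression (gzip for a .tar.gz archive).
    with tarfile.open(bundle_path, "r:*") as tar:
        return [name for name in tar.getnames() if name.rsplit("/", 1)[-1] == filename]
```

The returned paths can then be passed to `tarfile.TarFile.extractfile` to read the file without unpacking the whole archive.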

If the previous checks do not help, collect the UCSM and chassis techsupport log bundles as the next step.

They help to further investigate the issue.

With the previously mentioned symptoms, try these steps to recover from the issue.

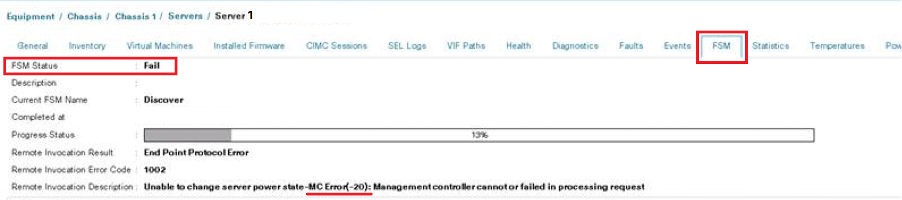

Step 1. Verify that the blade FSM status is “Failed” with the description “state-MC Error(-20)”.

Navigate to Equipment > Chassis X > Server Y > FSM

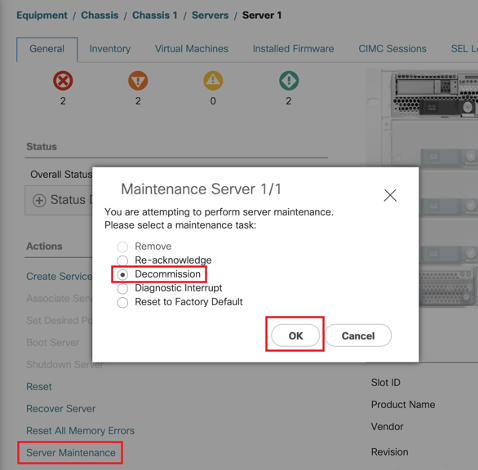

Step 2. Note the serial number of the impacted blade and decommission the blade.

IMPORTANT: Note the serial number of the problem blade from the General tab before you decommission it. It is required later in Step 4.

Navigate to Equipment > Chassis X > Server Y > General > Server Maintenance > Decommission > Ok.

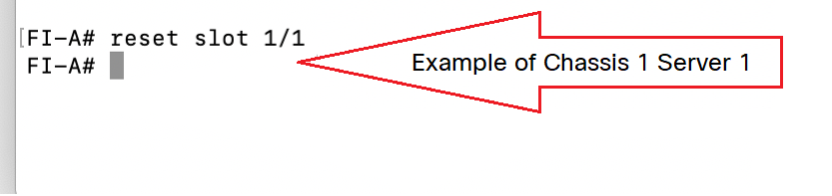

Step 3. Reset the slot of the impacted blade from the FI CLI.

FI-A/B# reset slot x/y

For example, if Chassis 2, Server 1 is impacted:

FI-A# reset slot 2/1

Wait 30 to 40 seconds after you run the command.

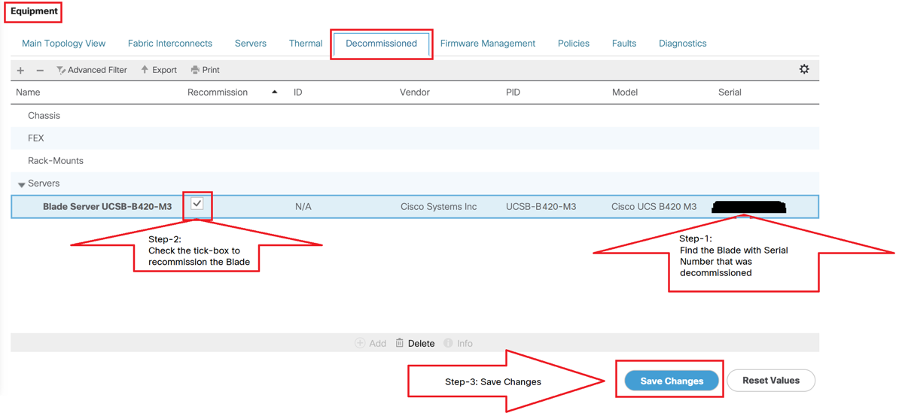

Step 4. Recommission the blade that was decommissioned.

Navigate to Equipment > Decommissioned > Servers, find the decommissioned server (use the serial number noted in Step 2), check the Recommission check box for the correct blade (validate with the serial number), and click Save Changes.

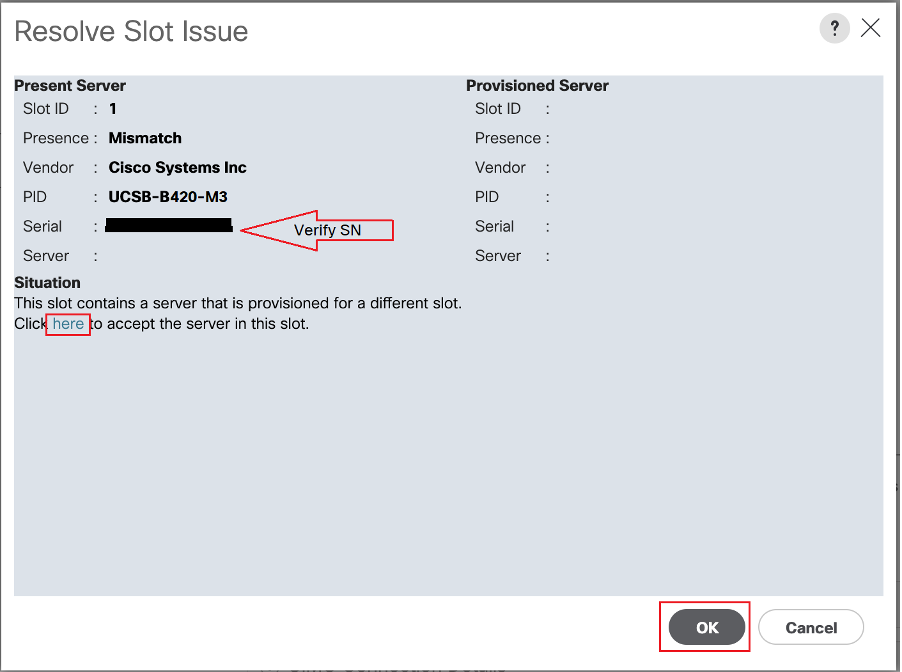

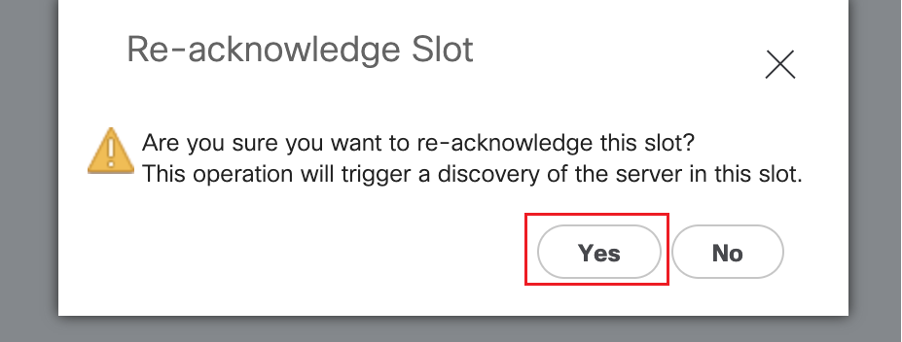

Step 5. Resolve the slot issue, if observed.

Navigate to Equipment > Chassis X > Server Y.

If a “Resolve Slot Issue” pop-up appears for the blade that you recommissioned, verify its serial number and click “here” to accept the server in the slot.

Blade discovery should start now.

Wait until server discovery completes. Monitor the progress in the server FSM tab.

Step 6. If Steps 1 through 5 do not help and the FSM fails again, decommission the blade and physically reseat it.

If the server still fails to discover, contact Cisco TAC to determine whether this is a hardware issue.

NOTE: If you have a B200 M4 blade and observe Failure Scenario #2, refer to this bug and contact TAC:

CSCuv90289

B200 M4 fails to power on due to POWER_SYS_FLT

Related Information

Procedure to discover chassis

UCSM server management guide