Introduction

This document describes how to fine-tune vNIC adapter settings in Intersight Managed Mode (IMM) through server profiles.

Prerequisites

Obtain the Ethernet adapter settings recommended by your OS vendor before you start.

Operational Compute, Storage, and Management Policies must be configured beforehand.

Requirements

Cisco recommends that you have knowledge of these topics:

- Intersight Managed Mode

- Physical Network Connectivity

- OS-recommended Ethernet adapter settings

- vNIC fine-tuning elements

Components Used

The information in this document is based on these software and hardware versions:

- UCS-B200-M5 firmware 4.2(1a)

- Cisco UCS 6454 Fabric Interconnect, firmware 4.2(1e)

- Intersight software as a service (SaaS)

The information in this document was created from the devices in a specific lab environment. All of the devices used in this document started with a cleared (default) configuration. If your network is live, ensure that you understand the potential impact of any command.

Configure

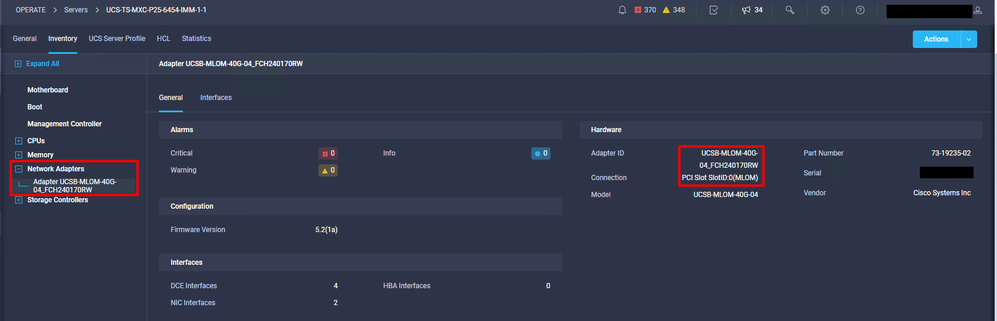

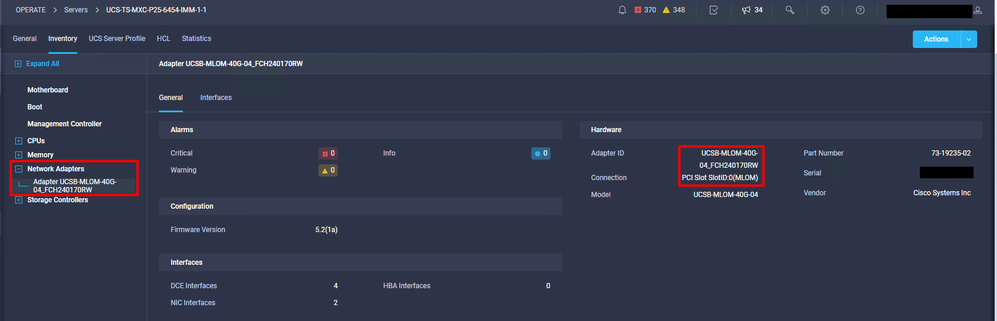

Step 1. Identify VIC Adapter and Slot ID on the server

Navigate to the Servers tab, select the server, and then select Inventory > Network Adapters.

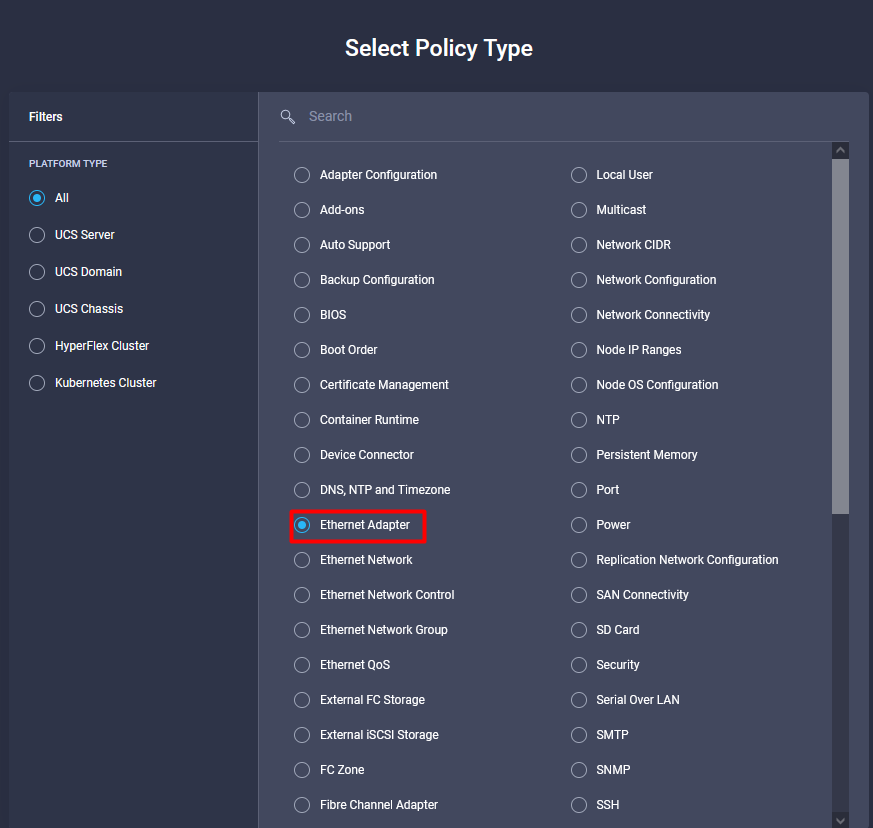

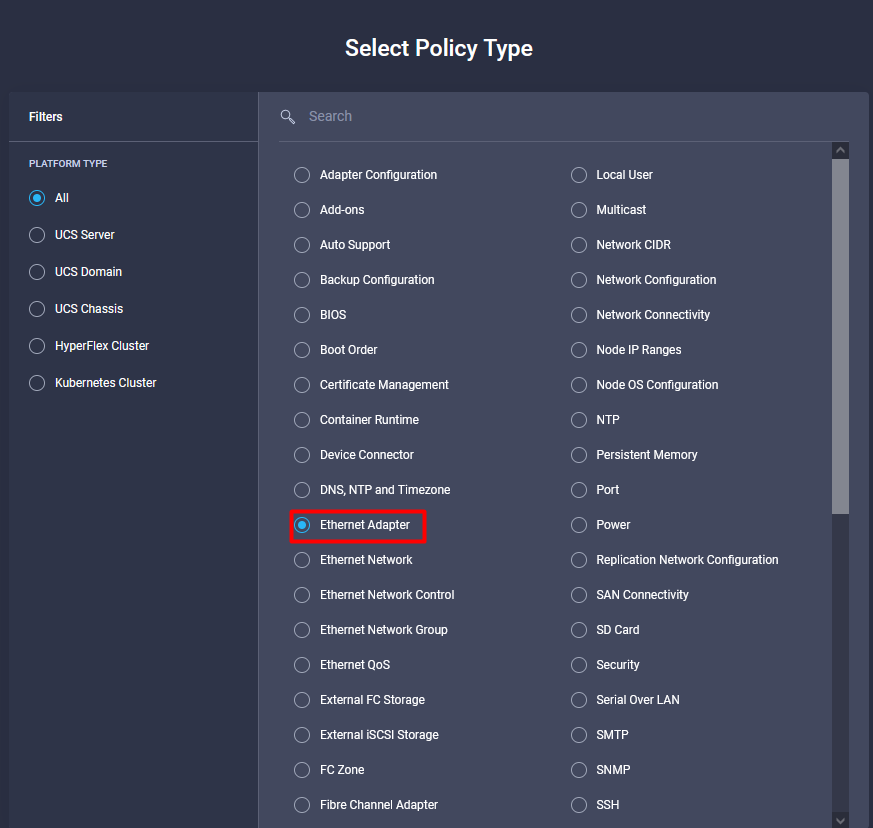

Step 2. Create Ethernet Adapter policy

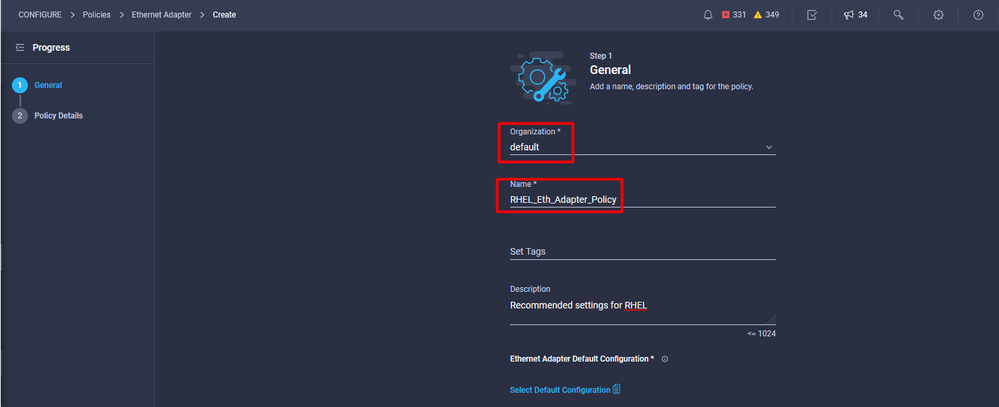

Create the Ethernet Adapter policy with the values suggested by the OS vendor.

Navigate to the Policies tab > Create Policy, and select Ethernet Adapter.

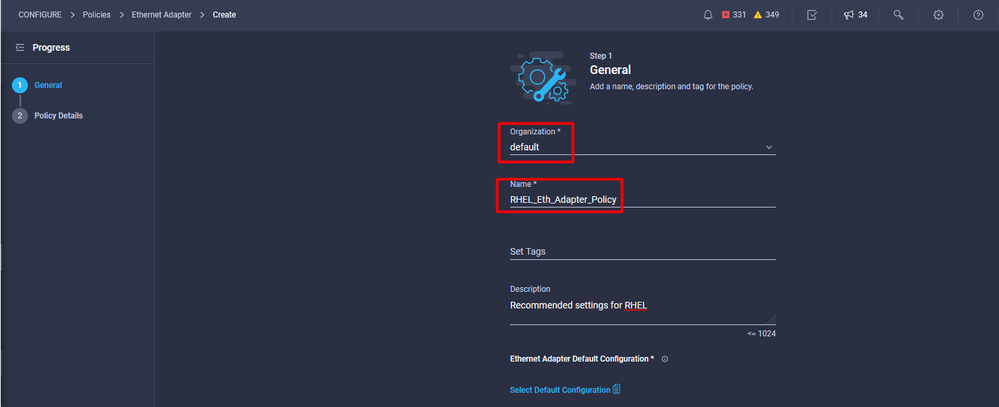

In the Create Policy menu, select the Organization and enter the Policy Name.

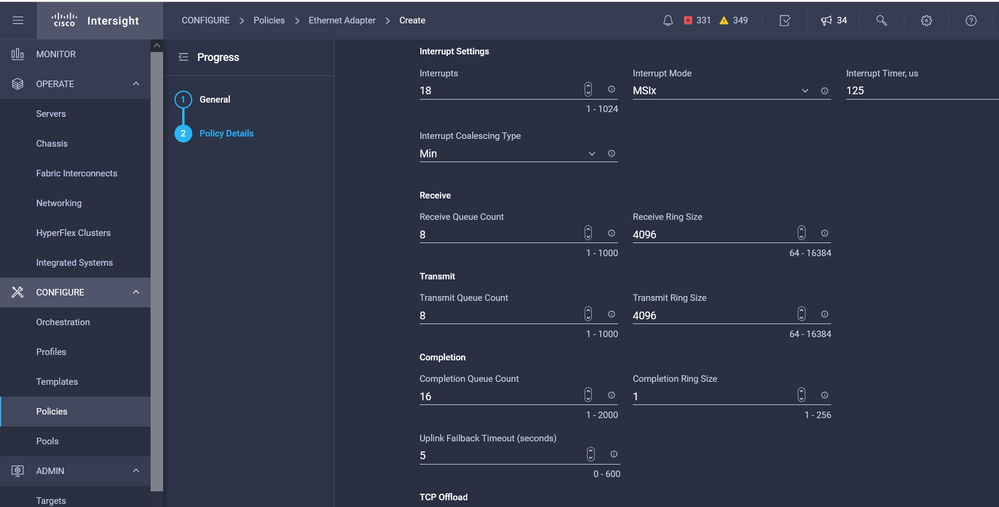

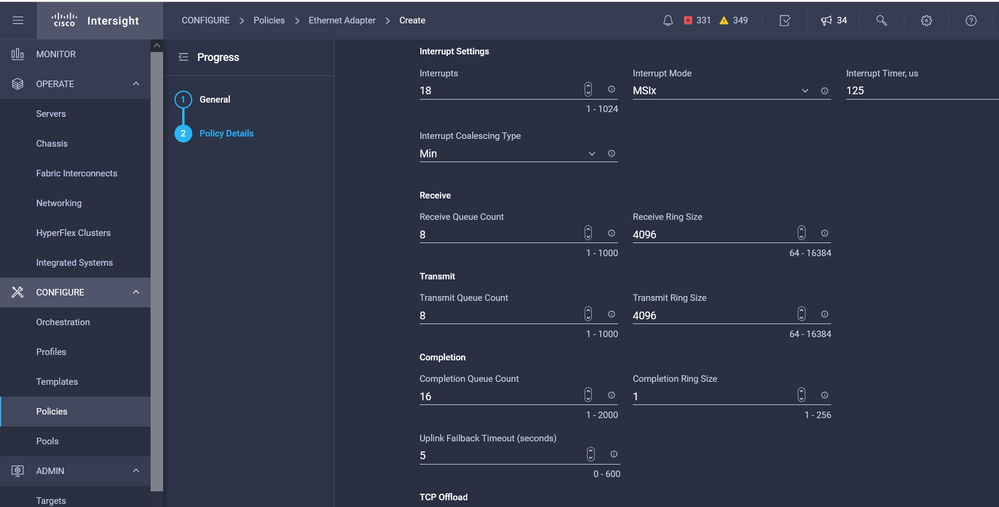

Step 3. Configure the settings suggested by the OS vendor. These features are typically configured in the Ethernet Adapter policy:

- Receive Queues

- Transmit Queues

- Ring Size

- Completion Queues

- Interrupts

- Enable Receive Side Scaling (RSS) or Accelerated Receive Flow Steering (ARFS)

Note: RSS and ARFS are mutually exclusive. Enable only one of them, never both.
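The queue and interrupt values in the Ethernet Adapter policy depend on each other. A minimal Python sketch can sanity-check a set of values before you save the policy, assuming the rule commonly documented for Linux hosts with the enic driver: one completion queue per transmit and receive queue, and two more interrupts than completion queues. This rule matches the eth0 and eth1 counters reported by vnicl in the Verify section (8 transmit, 8 receive, 16 completion queues, 18 interrupts), but other operating systems can follow different rules, so treat your OS vendor's guidance as authoritative. EthAdapterSettings, suggested_counts, and check_policy are hypothetical names, not part of any Intersight API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EthAdapterSettings:
    """Hypothetical subset of Ethernet Adapter policy fields (not an Intersight API object)."""
    rx_queues: int
    tx_queues: int
    completion_queues: int
    interrupts: int
    rss: bool = False
    arfs: bool = False


def suggested_counts(rx_queues: int, tx_queues: int) -> tuple[int, int]:
    """Return (completion_queues, interrupts) under the ASSUMED Linux enic rule:
    one completion queue per RX and TX queue, and two more interrupts than completion queues."""
    completion_queues = rx_queues + tx_queues
    return completion_queues, completion_queues + 2


def check_policy(s: EthAdapterSettings) -> list[str]:
    """Return the problems found; an empty list means no problems were detected."""
    problems = []
    if s.rss and s.arfs:
        problems.append("RSS and ARFS are mutually exclusive; enable only one")
    cq, intr = suggested_counts(s.rx_queues, s.tx_queues)
    if s.completion_queues != cq:
        problems.append(f"completion queues {s.completion_queues} != RX + TX queues ({cq})")
    if s.interrupts != intr:
        problems.append(f"interrupts {s.interrupts} != completion queues + 2 ({intr})")
    return problems


# Values matching the eth0 counters reported by vnicl in the Verify section.
eth0 = EthAdapterSettings(rx_queues=8, tx_queues=8, completion_queues=16, interrupts=18, rss=True)
print(check_policy(eth0))  # []
```

Returning a list of messages instead of raising on the first problem lets you see every inconsistency in one pass.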

After you create the Ethernet Adapter policy, assign it to a LAN Connectivity policy.

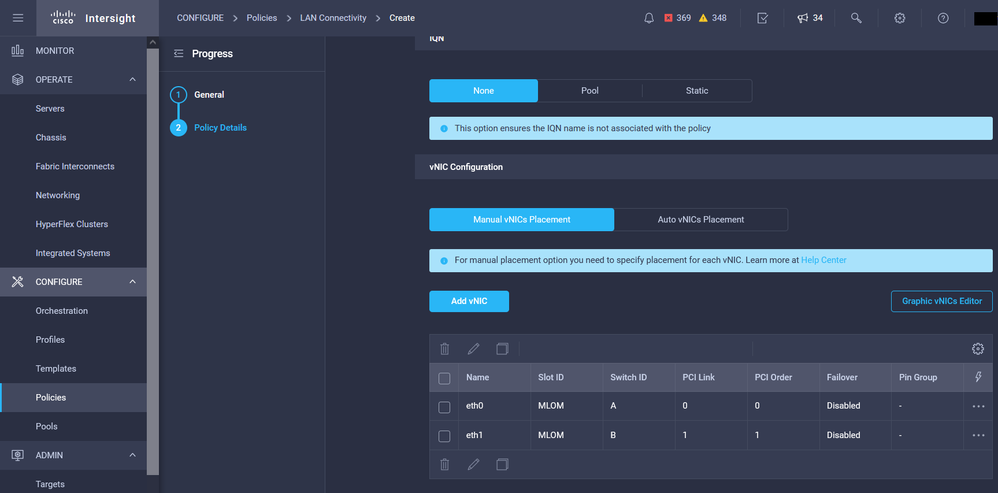

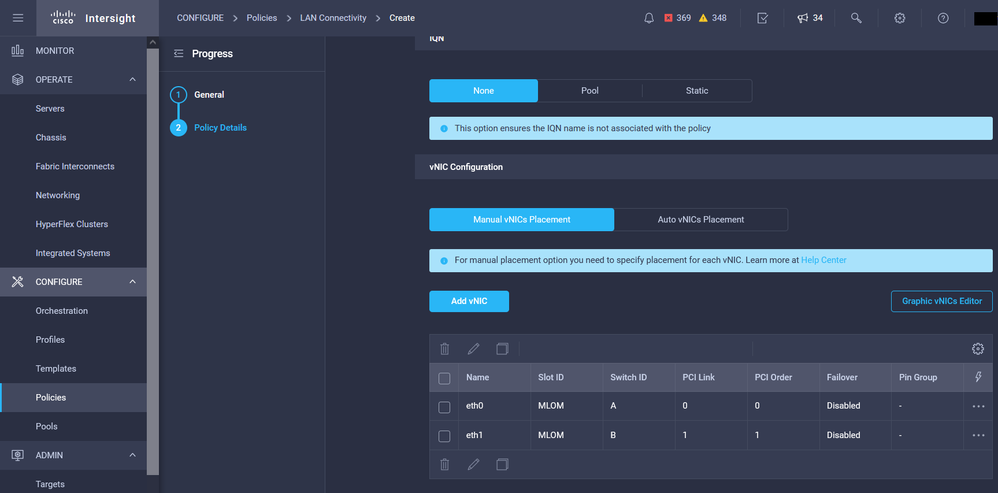

Step 4. Create LAN Connectivity Policy

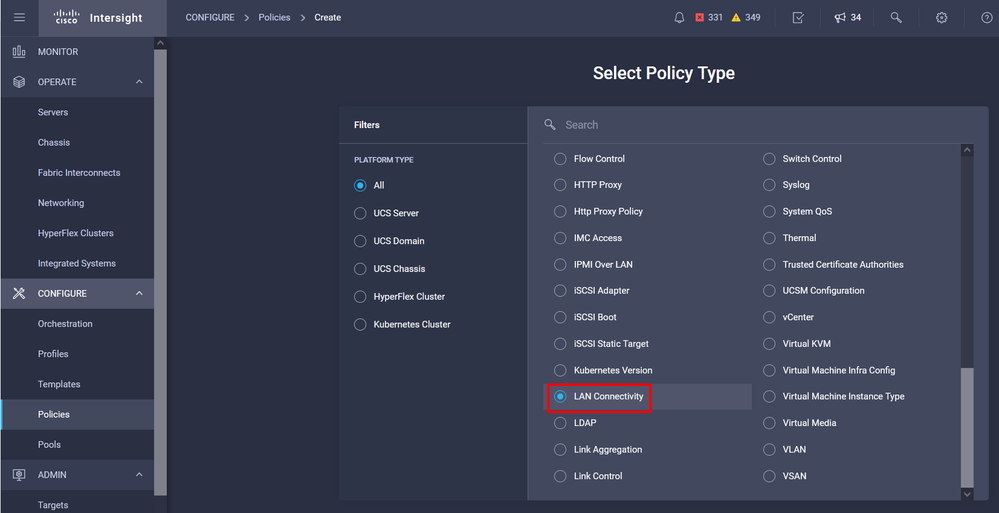

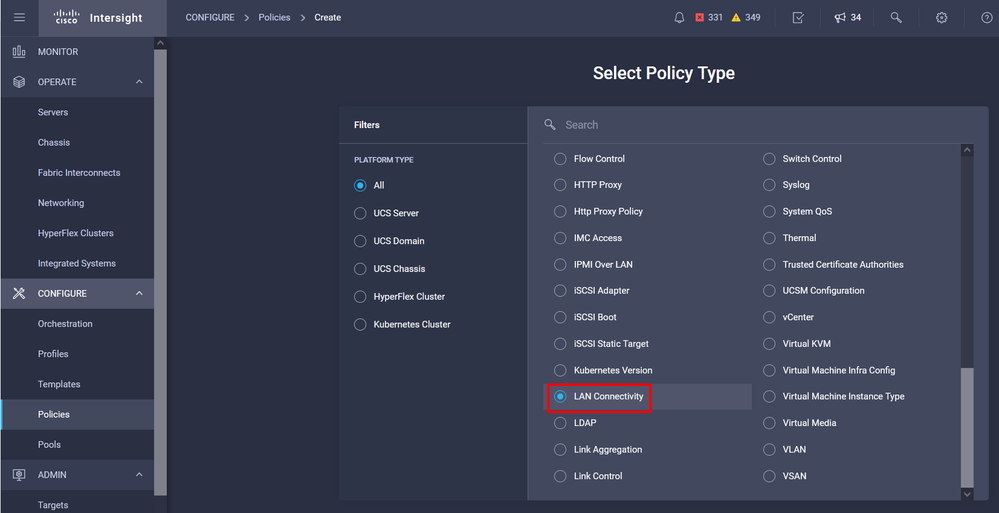

Navigate to the Policies tab > Create Policy, and select LAN Connectivity.

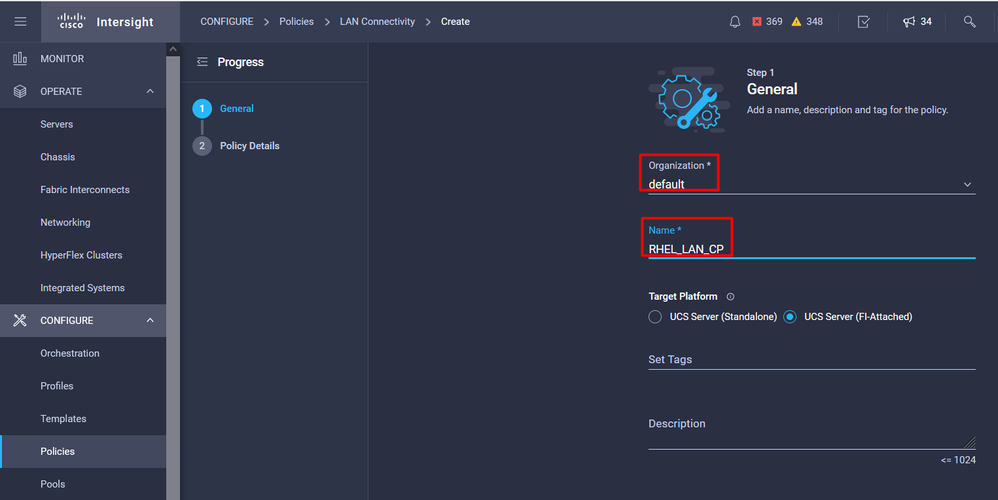

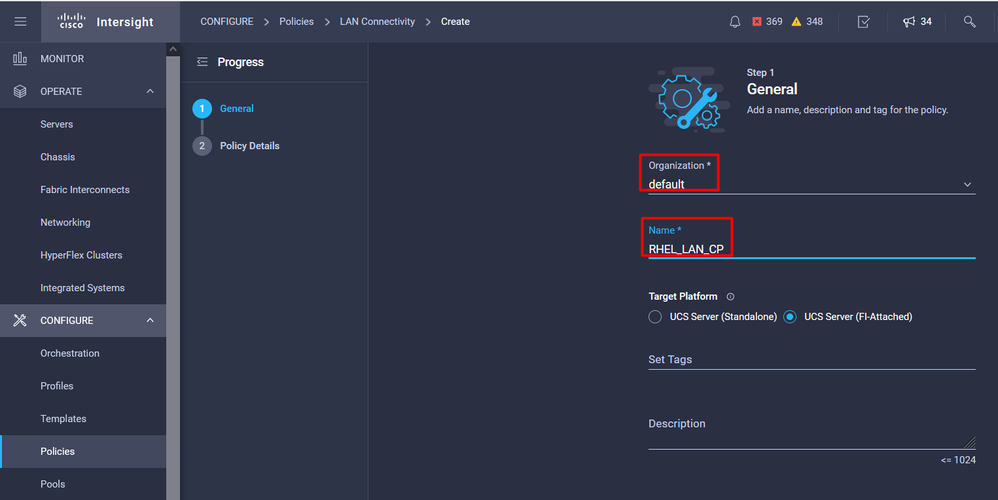

Select the Organization and provide the Policy Name.

Under Target Platform, select UCS Server (FI-Attached).

Within the LAN Connectivity policy, navigate to the vNIC Configuration section and configure at least two network interfaces. In this example, eth0 and eth1 interfaces are created.

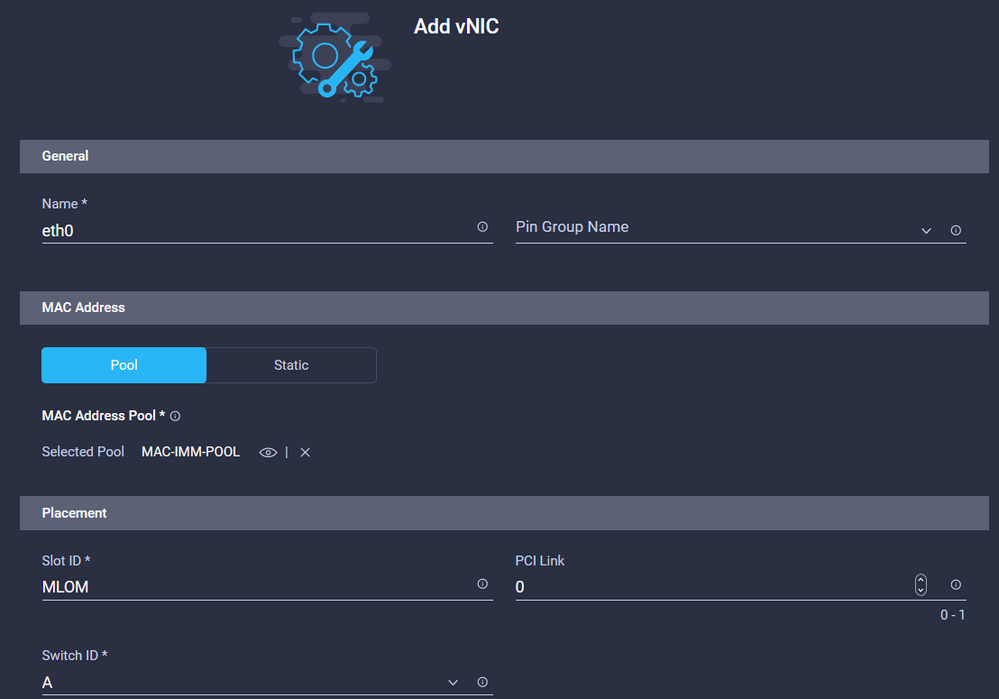

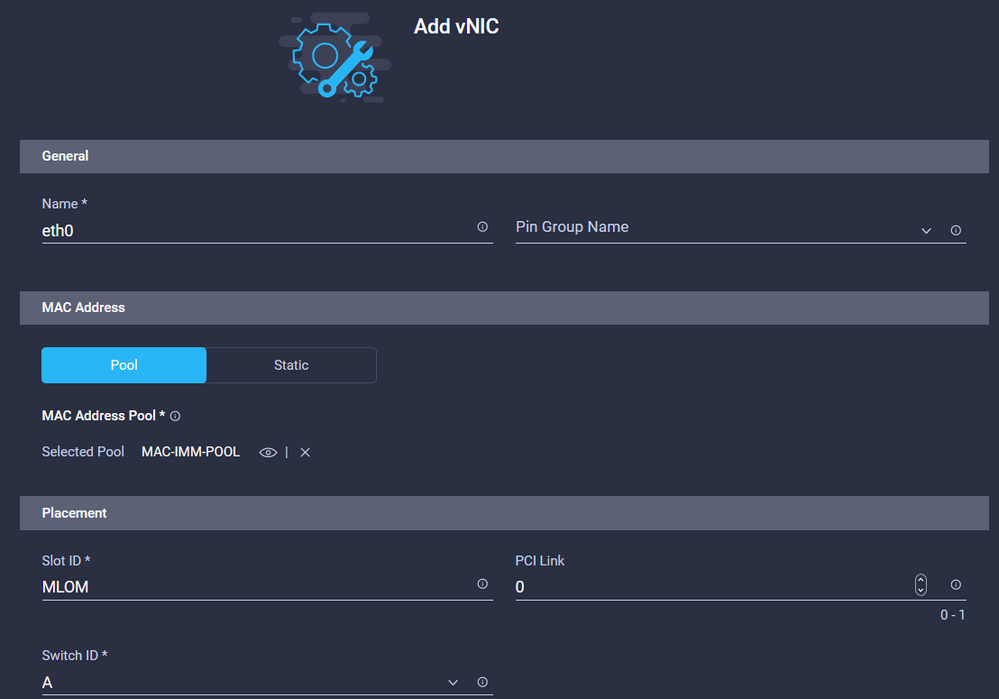

On the Add vNIC configuration tab, under General, provide the name eth0.

Under the MAC Address section, select the appropriate MAC Address Pool.

Under the Placement section, configure the Slot ID as MLOM.

Leave PCI Link and PCI Order set to 0, and set Switch ID to A.

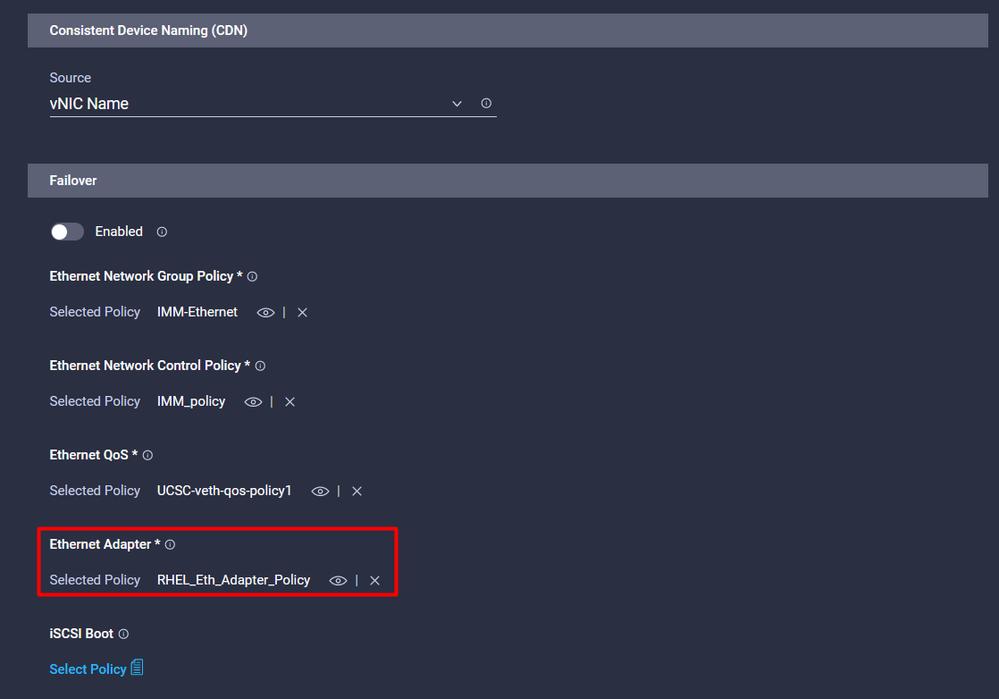

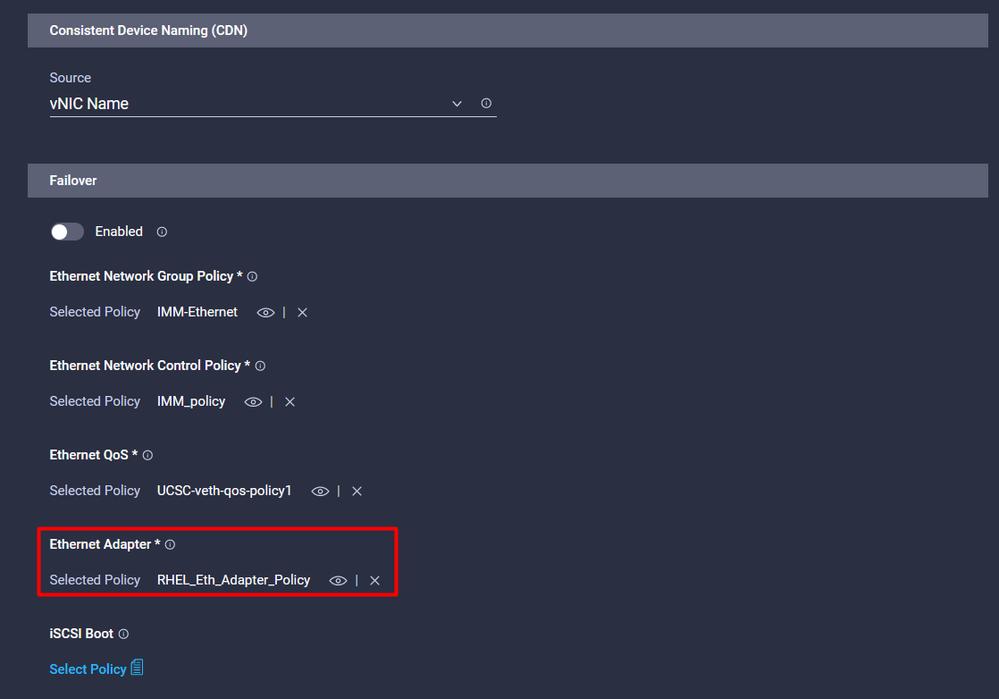

Navigate to the Consistent Device Naming (CDN) menu, and select vNIC Name.

Add the Ethernet Network Group Policy, Ethernet Network Control Policy, Ethernet QoS, and Ethernet Adapter policies.

Repeat the same steps to create the eth1 interface, and set its PCI Link, PCI Order, and Switch ID values accordingly (for example, PCI Order 1 and Switch ID B, so that eth1 uses the other Fabric Interconnect).
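The per-vNIC placement values can be written down as plain data and checked before the policy is created. This hypothetical sketch (VnicPlacement and check_placement are illustrative names, not Intersight API objects) assumes that each vNIC needs a distinct PCI Order and, as a design choice for redundancy, that eth0 and eth1 are pinned to different fabrics. The eth1 values (PCI Order 1, Switch ID B) are only an example; adjust them to your design.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VnicPlacement:
    """Hypothetical model of the per-vNIC Placement fields (not an Intersight API object)."""
    name: str
    slot_id: str
    pci_link: int
    pci_order: int
    switch_id: str  # "A" or "B"


def check_placement(vnics: list[VnicPlacement]) -> list[str]:
    """Return the problems found; an empty list means the placement looks consistent."""
    problems = []
    orders = [v.pci_order for v in vnics]
    # Assumption: every vNIC needs its own PCI Order value.
    if len(orders) != len(set(orders)):
        problems.append("PCI Order values must be unique across vNICs")
    # Design choice for redundancy: spread the vNICs across both fabrics.
    if {v.switch_id for v in vnics} != {"A", "B"}:
        problems.append("vNICs do not use both Switch ID A and Switch ID B")
    return problems


vnics = [
    VnicPlacement("eth0", "MLOM", pci_link=0, pci_order=0, switch_id="A"),
    VnicPlacement("eth1", "MLOM", pci_link=0, pci_order=1, switch_id="B"),  # example values
]
print(check_placement(vnics))  # []
```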

Finally, create the LAN Connectivity policy, and then assign it to a UCS Server Profile.

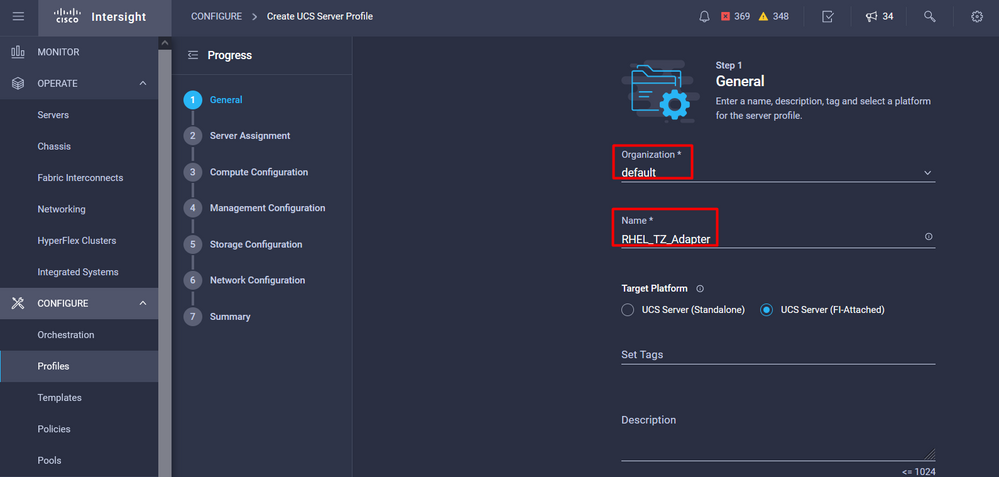

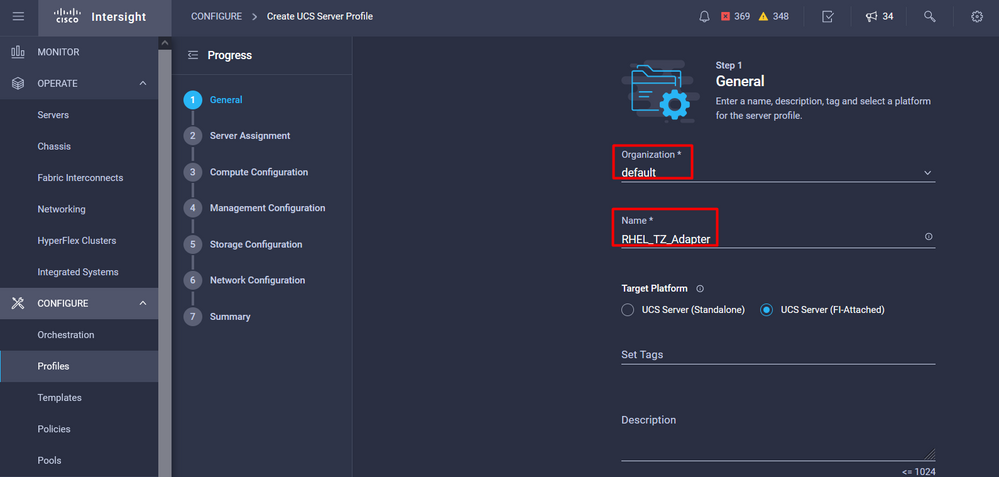

Step 5. Create a Server profile.

Navigate to the Profiles tab, and then select Create UCS Server Profile.

Provide the Organization and Name details.

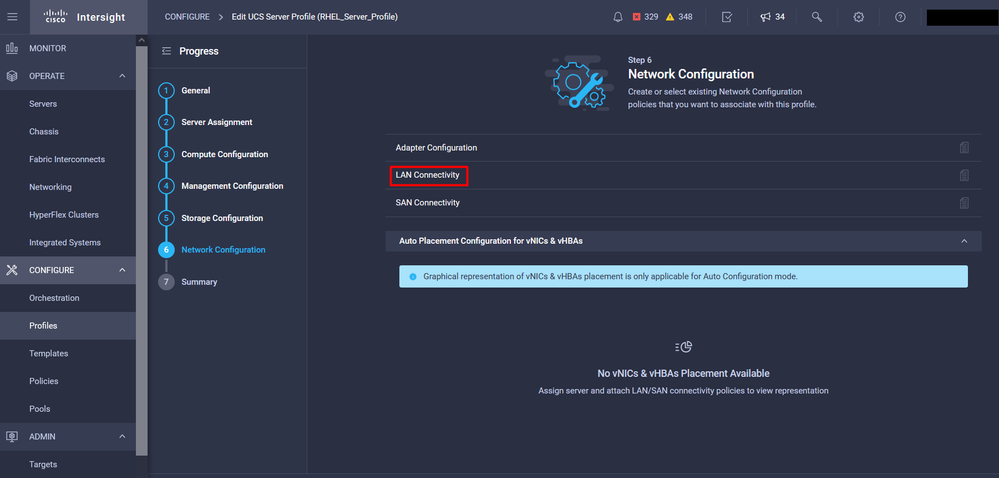

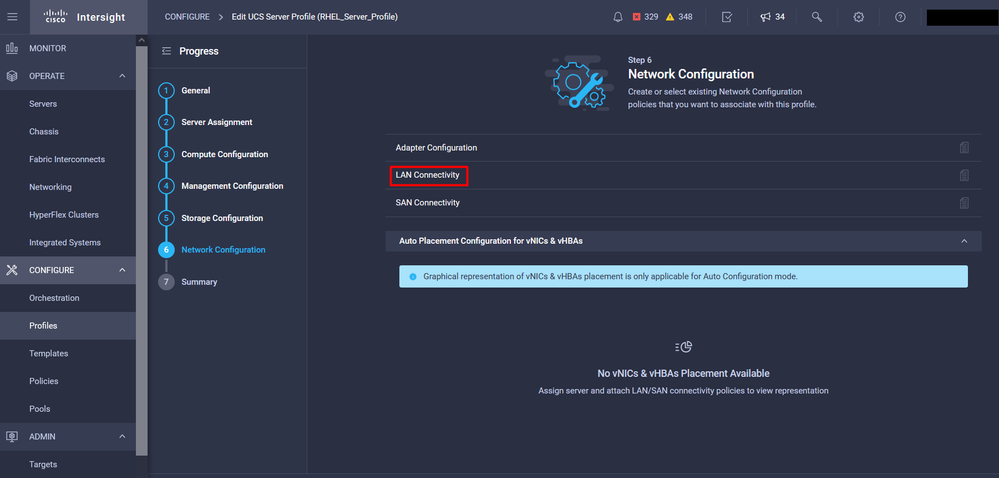

Select all the related configurations such as Compute, Management, and Storage settings.

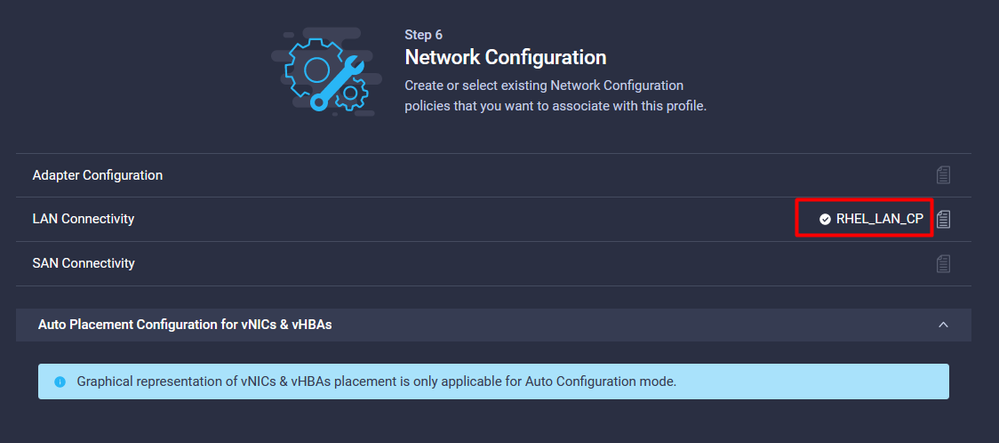

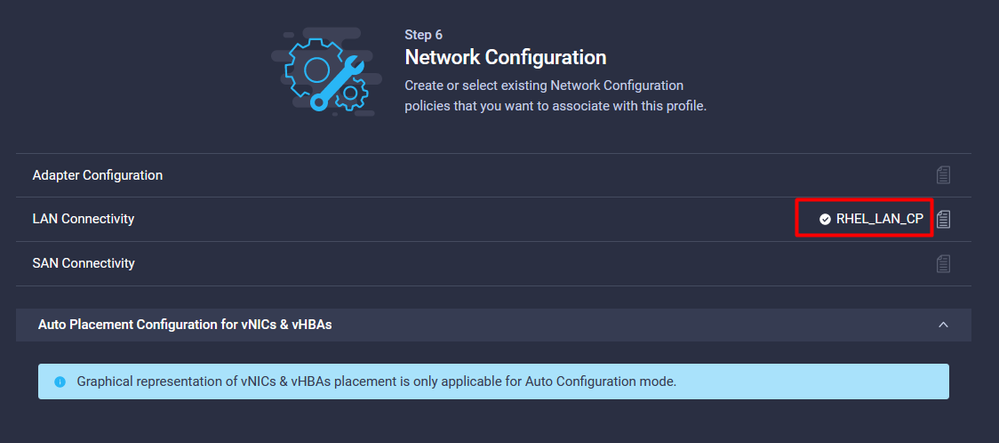

Under Network configuration, select the appropriate LAN Connectivity policy.

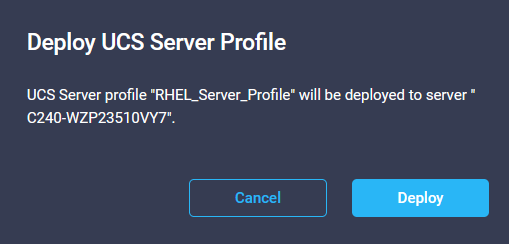

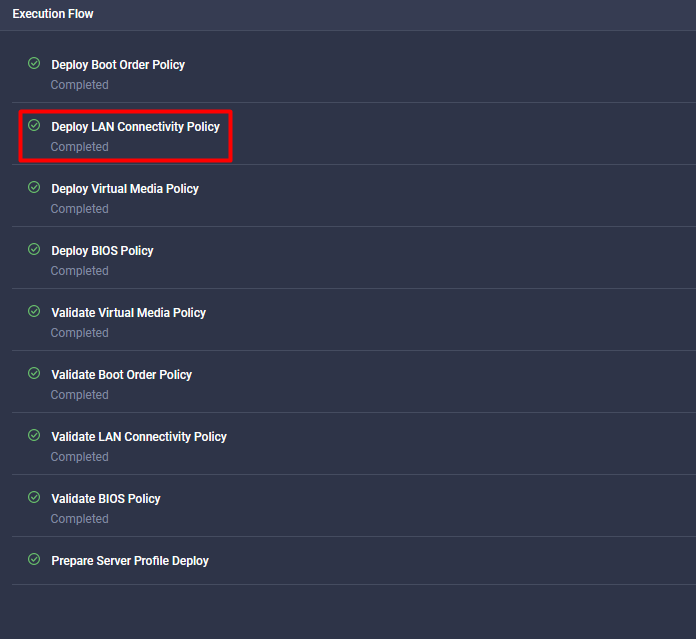

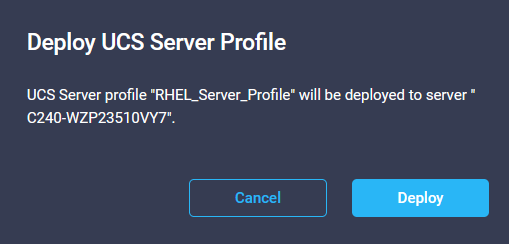

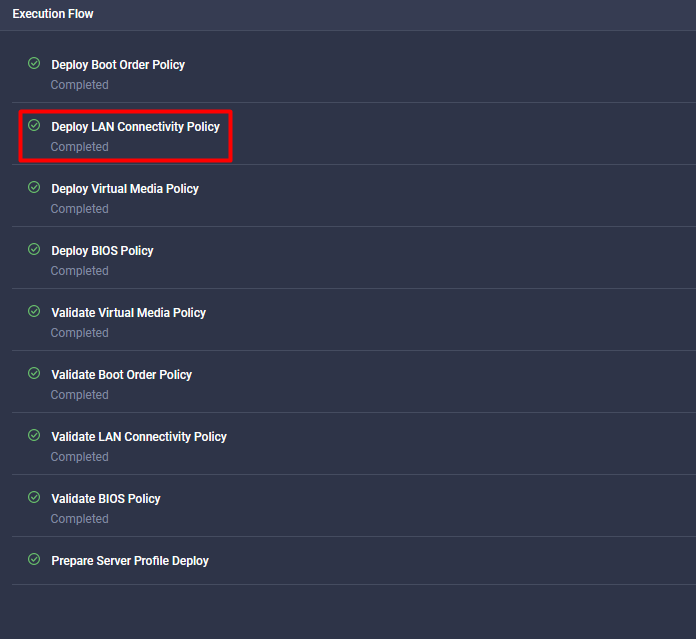

Select Deploy to apply the Server Profile, and verify that all deployment steps complete successfully.

Verify

Use this section to confirm that your configuration works properly.

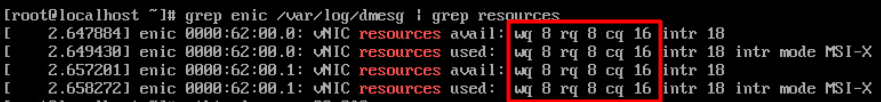

Validate the adapter settings on RHEL.

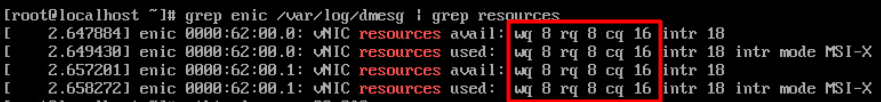

To check the resources that the VIC adapter currently provides, validate the transmit and receive queues in the dmesg log:

$ grep enic /var/log/dmesg | grep resources
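The same check can be scripted. This Python sketch assumes the enic driver logs lines of the form `vNIC resources avail: wq 8 rq 8 cq 16 intr 18`, plus a similar `vNIC resources used:` line; the exact wording can vary between driver versions, so adjust the regular expression if your log differs. The sample log lines are illustrative, not captured from the lab, and parse_enic_resources is a hypothetical helper.

```python
from __future__ import annotations

import re

# ASSUMED format of the enic driver messages (wording may differ between driver versions):
#   enic 0000:03:00.0: vNIC resources avail: wq 8 rq 8 cq 16 intr 18
RESOURCES_RE = re.compile(
    r"enic (?P<bdf>\S+): vNIC resources (?P<kind>avail|used):\s+"
    r"wq (?P<wq>\d+) rq (?P<rq>\d+) cq (?P<cq>\d+) intr (?P<intr>\d+)"
)


def parse_enic_resources(log_text: str) -> list[dict]:
    """Return one dict per matching line with the PCI address, message kind, and counts."""
    entries = []
    for m in RESOURCES_RE.finditer(log_text):
        entry = {"bdf": m.group("bdf"), "kind": m.group("kind")}
        entry.update({k: int(m.group(k)) for k in ("wq", "rq", "cq", "intr")})
        entries.append(entry)
    return entries


# Illustrative log lines (not captured from the lab). On a host, pass the file contents instead:
#   with open("/var/log/dmesg") as f: print(parse_enic_resources(f.read()))
sample = (
    "[    3.101] enic 0000:03:00.0: vNIC resources avail: wq 8 rq 8 cq 16 intr 18\n"
    "[    3.105] enic 0000:03:00.0: vNIC resources used:  wq 8 rq 8 cq 16 intr 18 intr mode MSI-X\n"
)
for entry in parse_enic_resources(sample):
    print(entry)
```

Compare the wq and rq counts with the Transmit Queues and Receive Queues values configured in the Ethernet Adapter policy.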

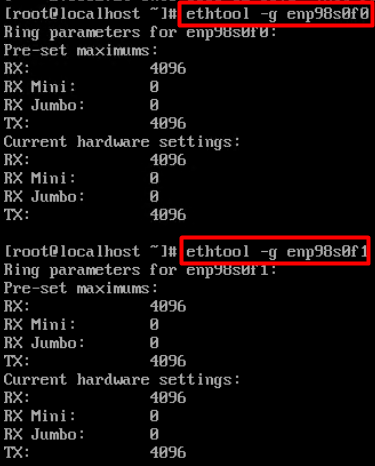

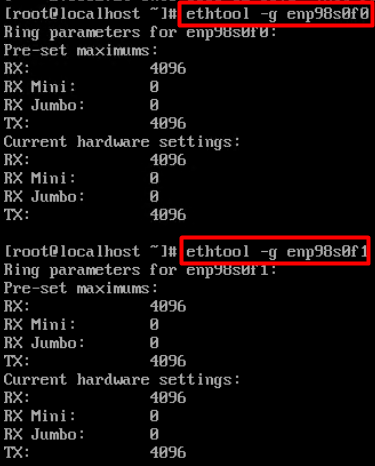

Validate the configured ring size, where interface_name is the name of the interface (for example, eth0):

ethtool -g interface_name
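To compare the ring size with the value set in the Ethernet Adapter policy, the `ethtool -g` output can be parsed. This is a minimal sketch assuming the usual layout of `Pre-set maximums:` and `Current hardware settings:` sections with one `Name: value` pair per line; non-numeric values such as `n/a`, which newer ethtool releases print for some fields, are skipped. The sample values are illustrative, and parse_ring_params and ring_params are hypothetical helpers.

```python
from __future__ import annotations

import subprocess


def parse_ring_params(text: str) -> dict[str, dict[str, int]]:
    """Parse `ethtool -g` output into {'maximums': {...}, 'current': {...}}.
    Non-integer values (for example 'n/a' or 'off') are skipped."""
    headers = {"Pre-set maximums:": "maximums", "Current hardware settings:": "current"}
    result: dict[str, dict[str, int]] = {"maximums": {}, "current": {}}
    section = None
    for raw in text.splitlines():
        line = raw.strip()
        if line in headers:
            section = headers[line]
            continue
        if section is None or ":" not in line:
            continue  # skips the "Ring parameters for ..." title and blank lines
        key, _, value = line.partition(":")
        value = value.strip()
        if value.isdigit():
            result[section][key.strip()] = int(value)
    return result


def ring_params(interface: str) -> dict[str, dict[str, int]]:
    """Run `ethtool -g <interface>` on the host (requires ethtool) and parse the output."""
    out = subprocess.run(["ethtool", "-g", interface], capture_output=True, text=True, check=True)
    return parse_ring_params(out.stdout)


# Illustrative output, not captured from the lab.
sample = """Ring parameters for eth0:
Pre-set maximums:
RX:             4096
RX Mini:        0
RX Jumbo:       0
TX:             4096
Current hardware settings:
RX:             512
RX Mini:        0
RX Jumbo:       0
TX:             256
"""
print(parse_ring_params(sample)["current"])
```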

Validate the adapter settings on VMware ESXi.

To check the resources that the VIC adapter currently provides, validate the transmit and receive queues with these commands, where X is the vmnic number:

vsish -e get /net/pNics/vmnicX/txqueues/info

vsish -e get /net/pNics/vmnicX/rxqueues/info

Run this command to validate the ring size:

esxcli network nic ring current get -n vmnicX
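A small parser can compare the esxcli ring-size output with the ring size configured in the Ethernet Adapter policy. This sketch assumes that esxcli prints one `Name: value` pair per line (for example `RX: 4096`); parse_esxcli_ring and ring_matches are hypothetical helpers, and the sample values are illustrative rather than taken from the lab.

```python
from __future__ import annotations


def parse_esxcli_ring(text: str) -> dict[str, int]:
    """Parse 'Name: value' lines (ASSUMED esxcli layout) into a dict of integers.
    Lines without a colon or with non-numeric values are ignored."""
    values: dict[str, int] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        value = value.strip()
        if sep and value.isdigit():
            values[key.strip()] = int(value)
    return values


def ring_matches(text: str, rx: int, tx: int) -> bool:
    """True if the reported RX and TX ring sizes equal the values expected from the policy."""
    ring = parse_esxcli_ring(text)
    return ring.get("RX") == rx and ring.get("TX") == tx


# Illustrative output of: esxcli network nic ring current get -n vmnic0
sample = """   RX: 4096
   RX Mini: 0
   RX Jumbo: 0
   TX: 4096
"""
print(ring_matches(sample, rx=4096, tx=4096))  # True
```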

Validate the adapter settings directly on UCS.

To validate the settings, connect to either Fabric Interconnect over SSH.

Connect to the server adapter with the command connect adapter x/y/z, where x is the chassis number, y is the slot number, and z is the adapter number.

At the additional login prompt, enter dbgsh.

Run the command attach-mcp.

Next, run the command vnicl to list the available vNICs.

Look for the vNICs named eth0 and eth1, and validate their settings.

UCS-IMM-A# connect adapter 1/1/1

Entering character mode

Escape character is '^]'.

(none) login: dbgsh

adapter (top):1#

adapter (top):4# attach-mcp

adapter (mcp):1# vnicl

adapter (mcp):19# vnicl

================================

vnicid : 18

name : eth0

type : enet

state : UP

adminst : UP

flags : OPEN, INIT, LINKUP, NOTIFY_INIT, ENABLE, USING_DEVCMD2

ucsm name : eth0

spec_flags : MULTIFUNC, TRUNK

mq_spec_flags :

slot : 0

h:bdf : 0:03:00.0

vs.mac : 00:25:b5:01:00:46

mac : 00:25:b5:01:00:46

vifid : 801

vifcookie : 801

uif : 0

portchannel_bypass : 0x0

cos : 0

vlan : 0

rate_limit : unlimited

cur_rate : unlimited

stby_vifid : 0

stby_vifcookie : 0

stby_recovery_delay : 0

channel : 0

stdby_channel : 0

profile :

stdby_profile :

init_errno : 0

cdn : eth0

devspec_flags : TSO, LRO, RXCSUM, TXCSUM, RSS, RSSHASH_IPV4, RSSHASH_TCPIPV4, RSSHASH_IPV6, RSSHASH_TCPIPV6

lif : 18

vmode : STATIC

encap mode : NONE

host wq : [11-18] (n=8)

host rq : [2010-2017] (n=8) (h=0x080107da)

host cq : [2002-2017] (n=16)

host intr : [3008-3025] (n=18)

notify : pa=0x10384de000/40 intr=17

devcmd2 wq : [19] (n=1)

================================

vnicid : 19

name : eth1

type : enet

state : UP

adminst : UP

flags : OPEN, INIT, LINKUP, NOTIFY_INIT, ENABLE, USING_DEVCMD2

ucsm name : eth1

spec_flags : MULTIFUNC, TRUNK

mq_spec_flags :

slot : 0

h:bdf : 0:03:00.1

vs.mac : 00:25:b5:01:00:45

mac : 00:25:b5:01:00:45

vifid : 800

vifcookie : 800

uif : 1

portchannel_bypass : 0x0

cos : 0

vlan : 0

rate_limit : unlimited

cur_rate : unlimited

stby_vifid : 0

stby_vifcookie : 0

stby_recovery_delay : 0

channel : 0

stdby_channel : 0

profile :

stdby_profile :

init_errno : 0

cdn : eth1

devspec_flags : TSO, LRO, RXCSUM, TXCSUM, RSS, RSSHASH_IPV4, RSSHASH_TCPIPV4, RSSHASH_IPV6, RSSHASH_TCPIPV6

lif : 19

vmode : STATIC

encap mode : NONE

host wq : [20-27] (n=8)

host rq : [2002-2009] (n=8) (h=0x080107d2)

host cq : [1986-2001] (n=16)

host intr : [2976-2993] (n=18)

notify : pa=0x1038e27000/40 intr=17

devcmd2 wq : [28] (n=1)

================================
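If you save the vnicl output (for example, from an SSH session log), a short Python sketch can extract the host queue and interrupt counts for each vNIC and compare them with the Ethernet Adapter policy. The parser assumes the `key : value` layout shown above and reads each count from the `(n=...)` field; the consistency check assumes the Linux rule of completion queues = transmit + receive queues and interrupts = completion queues + 2, which the eth0 and eth1 output above satisfies. parse_vnicl and check_counts are hypothetical helper names.

```python
from __future__ import annotations

import re

COUNT_RE = re.compile(r"\(n=(\d+)\)")
QUEUE_KEYS = ("host wq", "host rq", "host cq", "host intr")


def parse_vnicl(text: str) -> dict[str, dict[str, int]]:
    """Map each vNIC name to its host wq/rq/cq/intr counts taken from `vnicl` output."""
    vnics: dict[str, dict[str, int]] = {}
    current = None
    for raw in text.splitlines():
        key, sep, value = raw.partition(":")
        if not sep:
            continue
        key, value = key.strip(), value.strip()
        if key == "name":  # "ucsm name" does not match, so each vNIC starts once
            current = vnics.setdefault(value, {})
        elif current is not None and key in QUEUE_KEYS:
            m = COUNT_RE.search(value)
            if m:
                current[key.split()[1]] = int(m.group(1))
    return vnics


def check_counts(counts: dict[str, int]) -> bool:
    """ASSUMED Linux rule: cq = wq + rq and intr = cq + 2."""
    return counts["cq"] == counts["wq"] + counts["rq"] and counts["intr"] == counts["cq"] + 2


# Excerpt of the vnicl output shown above.
sample = """
name : eth0
host wq : [11-18] (n=8)
host rq : [2010-2017] (n=8) (h=0x080107da)
host cq : [2002-2017] (n=16)
host intr : [3008-3025] (n=18)
name : eth1
host wq : [20-27] (n=8)
host rq : [2002-2009] (n=8) (h=0x080107d2)
host cq : [1986-2001] (n=16)
host intr : [2976-2993] (n=18)
"""
for name, counts in parse_vnicl(sample).items():
    print(name, counts, check_counts(counts))
```

For this excerpt, the loop prints `eth0 {'wq': 8, 'rq': 8, 'cq': 16, 'intr': 18} True` and the same counts for eth1.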

Related Information

- Technical Support & Documentation - Cisco Systems

- Server Profiles in Intersight

- Tuning Guidelines for Cisco UCS Virtual Interface Cards (White Paper)

- Red Hat Enterprise Linux Network Performance Tuning Guide
